Responsible AI: From principles to practice

Accelerate trusted AI deployment with expert guidance and practical tools

Building AI responsibly

The rapid growth of AI and intelligent agents brings promising innovation and new challenges. At AWS, we make responsible AI practical and scalable, freeing you to accelerate trusted AI innovation. We take a people-centric approach that prioritizes education, science, and our customers to integrate responsible AI across the end-to-end AI lifecycle. Our science-based best practices, built-in safeguards, and tools help you build and operate AI responsibly across your use cases. Build with responsible AI practices from day one to move faster with confidence and earn customer trust.

What is responsible AI?

AWS defines responsible AI using a core set of dimensions that we assess and update over time as AI technology evolves.

Fairness

Considering impacts on different groups of stakeholders

Explainability

Understanding and evaluating system outputs

Privacy and security

Appropriately obtaining, using, and protecting data and models

Safety

Preventing harmful system output and misuse

Controllability

Having mechanisms to monitor and steer AI system behavior

Veracity and robustness

Achieving correct system outputs, even with unexpected or adversarial inputs

Governance

Incorporating best practices into the AI supply chain, including providers and deployers

Transparency

Enabling stakeholders to make informed choices about their engagement with an AI system

Responsible AI best practices

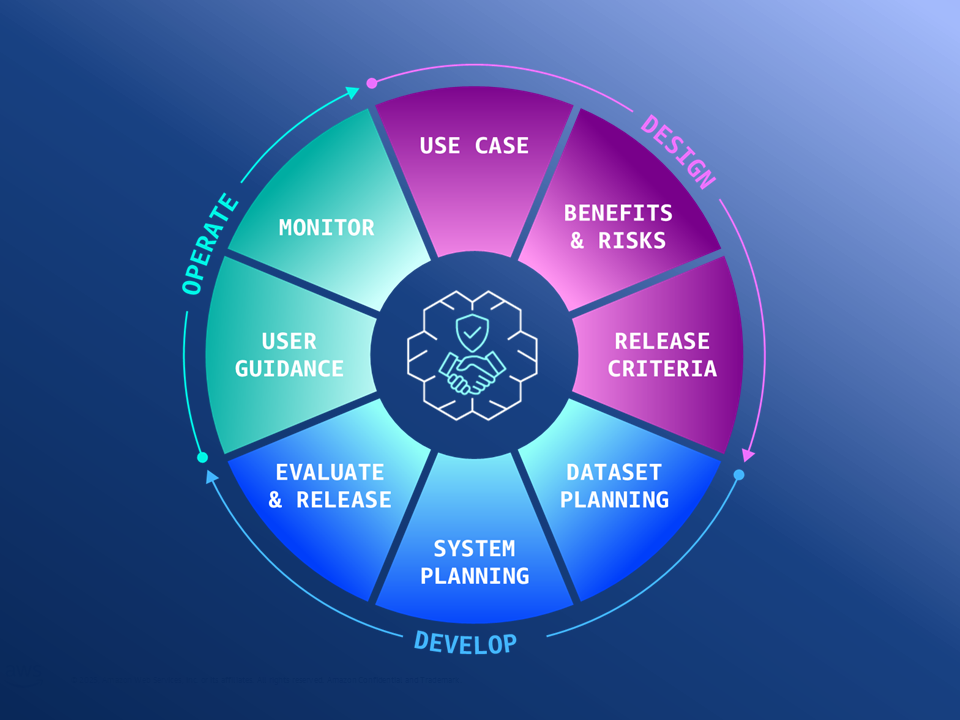

These core dimensions of responsible AI represent technical properties inherent in every AI system. Your task is to make deliberate design choices for your specific use case. The new AWS Well-Architected Responsible AI Lens provides best practices and practical guidance to help you address these considerations across design, development, and operation. Apply this guidance to make informed decisions that balance your business and technical requirements and help speed up the deployment of trusted AI systems.

Services and tools

AWS offers services and tools to help you design, build, and operate AI systems responsibly.

Build with configurable safeguards

Amazon Bedrock Guardrails helps you implement safeguards customized to your application requirements and responsible AI policies. With industry-leading safety protections that block up to 88% of harmful content and deliver auditable, mathematically verifiable explanations for validation decisions with 99% accuracy, Bedrock Guardrails provides configurable safeguards to help detect and filter harmful text and image content, redact sensitive information, and detect model hallucinations. Use Bedrock Guardrails with any foundation model—in Amazon Bedrock or self-hosted—for consistent safety and privacy controls across all your applications.
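As a rough illustration of applying a guardrail independently of model invocation, the sketch below assumes the `ApplyGuardrail` operation of the Bedrock runtime API as exposed by boto3 (`apply_guardrail`). The helper name `check_text`, the guardrail ID and version, and the `StubClient` class are hypothetical placeholders introduced for this example; the stub only mimics the assumed response shape so the code runs without AWS credentials.

```python
def check_text(client, guardrail_id, guardrail_version, text, source="INPUT"):
    """Send text to a guardrail and report whether it intervened.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    Returns (intervened, outputs), where outputs are any replacement texts.
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,  # "INPUT" for user prompts, "OUTPUT" for model responses
        content=[{"text": {"text": text}}],
    )
    intervened = response.get("action") == "GUARDRAIL_INTERVENED"
    outputs = [item.get("text", "") for item in response.get("outputs", [])]
    return intervened, outputs


class StubClient:
    """Offline stand-in for illustration only; not part of any AWS SDK."""

    def apply_guardrail(self, **kwargs):
        text = kwargs["content"][0]["text"]["text"]
        if "secret" in text.lower():
            return {
                "action": "GUARDRAIL_INTERVENED",
                "outputs": [{"text": "Sorry, I cannot help with that."}],
            }
        return {"action": "NONE", "outputs": []}


# Hypothetical guardrail ID and version, used here with the offline stub.
blocked, replacement = check_text(StubClient(), "gr-example", "1", "Tell me the secret")
print(blocked, replacement)

# With real credentials, the stub would be swapped for something like:
#   import boto3
#   client = boto3.client("bedrock-runtime")
```

Passing the client in as a parameter keeps the check usable with a self-hosted model: the same helper could screen prompts before they reach the model and screen responses afterwards by setting `source="OUTPUT"`.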

Foundation model (FM) evaluations

Model Evaluation on Amazon Bedrock helps you evaluate, compare, and select the best FMs for your specific use case based on custom metrics, such as accuracy, robustness, and toxicity. You can also use Amazon SageMaker Clarify and fmeval for model evaluation.

Detecting bias and explaining predictions

Biases are imbalances in data or disparities in the performance of a model across different groups. Amazon SageMaker Clarify helps you mitigate bias by examining specific attributes to detect potential bias during data preparation, after model training, and in your deployed model.
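To make "imbalances in data" concrete, here is a small pure-Python sketch of two pre-training measurements in the style of those SageMaker Clarify reports: class imbalance (CI) and difference in proportions of labels (DPL). It does not call Clarify itself; the function names, the group labels `"a"` and `"d"`, and the toy data are assumptions made for illustration.

```python
def class_imbalance(groups, favored):
    """CI = (n_a - n_d) / (n_a + n_d), where a is the favored group."""
    n_a = sum(1 for g in groups if g == favored)
    n_d = len(groups) - n_a
    if n_a + n_d == 0:
        raise ValueError("no samples provided")
    return (n_a - n_d) / (n_a + n_d)


def difference_in_label_proportions(groups, labels, favored, positive=1):
    """DPL = q_a - q_d, the gap in positive-label rates between the groups."""
    a_labels = [y for g, y in zip(groups, labels) if g == favored]
    d_labels = [y for g, y in zip(groups, labels) if g != favored]
    if not a_labels or not d_labels:
        raise ValueError("both groups need at least one sample")
    q_a = sum(1 for y in a_labels if y == positive) / len(a_labels)
    q_d = sum(1 for y in d_labels if y == positive) / len(d_labels)
    return q_a - q_d


# Toy dataset: six samples from group "a", four from group "d".
groups = ["a", "a", "a", "a", "a", "a", "d", "d", "d", "d"]
labels = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]

ci = class_imbalance(groups, favored="a")
dpl = difference_in_label_proportions(groups, labels, favored="a")
print(f"CI={ci:.3f} DPL={dpl:.3f}")
```

Values near zero suggest the groups are similarly represented and labeled; values far from zero flag an imbalance worth investigating before training.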

Understanding a model’s behavior is important to develop more accurate models and make better decisions. Amazon SageMaker Clarify provides greater visibility into model behavior, so you can provide transparency to stakeholders, inform humans making decisions, and track whether a model is performing as intended.

Monitoring and human review

Monitoring is important to maintain high-quality machine learning (ML) models and help ensure accurate predictions. Amazon SageMaker Model Monitor automatically detects and alerts you to inaccurate predictions from deployed models. With Amazon SageMaker Ground Truth, you can apply human feedback across the ML lifecycle to improve the accuracy and relevancy of models.

Improving governance

ML Governance from Amazon SageMaker provides purpose-built tools for improving governance of your ML projects by giving you tighter control and visibility over your ML models. You can easily capture and share model information and stay informed on model behavior, like bias, all in one place.

AWS AI Service Cards

AI Service Cards are a resource to enhance transparency by providing you with a single place to find information on the intended use cases and limitations, responsible AI design choices, and performance optimization best practices for our AI services and models.

Community contribution and collaboration

Through deep engagement with multi-stakeholder organizations such as the OECD AI working groups, the Partnership on AI, and the Responsible AI Institute, as well as strategic partnerships with universities around the world, we are committed to working alongside others to develop AI and ML technology responsibly and build trust.

We take a people-centric approach to educating the next generation of AI leaders with programs like the AI & ML Scholarship program and We Power Tech, which increase access to hands-on learning, scholarships, and mentorship for people who are underserved or underrepresented in tech.

Our investment in safe, transparent, and responsible generative AI includes collaboration with the global community and policymakers, including the G7 AI Hiroshima Process Code of Conduct, the AI Safety Summit in the UK, and support for ISO 42001, a new foundational standard to advance responsible AI. We support the development of effective risk-based regulatory frameworks for AI that protect civil rights while allowing for continued innovation.

Responsible AI is an active area of research and development at Amazon. We have strategic partnerships with academia, such as the California Institute of Technology, and with Amazon Scholars, leading experts who apply their academic research to help shape responsible AI workstreams at Amazon.

We innovate alongside our customers, staying at the forefront of new trends and research to deliver value, with ongoing research grants via the Amazon Research Awards and scientific publications with Amazon Science. Learn more about the science of building generative AI responsibly in this Amazon Science blog that unpacks the top emerging challenges and solutions.