AWS News Blog

Amazon SQS – Long Polling and Request Batching / Client-Side Buffering

We announced the Simple Queue Service (SQS) eight years ago, give or take a day. Although this was our first infrastructure web service, we launched it with little fanfare and gave no hint that this was just the first of many such services on the drawing board. I’m sure that some people looked at it and said “Huh, that’s odd. Why is my online retailer trying to sell me a message queuing service?” Given that we are, as Jeff Bezos has said, “willing to be misunderstood for long periods of time,” we didn’t see the need to say any more.

Today, I look back on that humble blog post and marvel at how far we have come in such a short time. We have a broad array of infrastructure services (with more on the drawing board), tons of amazing customers doing amazing things, and we just sold out our first-ever AWS conference (but you can still register for the free live stream). SQS is an essential component of any scalable, fault-tolerant architecture (see the AWS Architecture Center for more information on this topic).

As we always do, we launched SQS with a minimal feature set and an open ear to make sure that we met the needs of our customers as we evolved it. Over the years we have added batch operations, delay queues, timers, AWS Management Console support, CloudWatch Metrics, and more.

We’re adding two important features to SQS today: long polling and request batching/client-side buffering. Let’s take a look at each one.

Long Polling

If you have ever written code that calls the SQS ReceiveMessage function, you'll really appreciate this new feature. As you have undoubtedly figured out for yourself, you need to make a tradeoff when you design your application's polling model. You want to poll as often as possible to keep end-to-end throughput as high as possible, but polling in a tight loop burns CPU cycles and gets expensive.

Our new long polling model will obviate the need for you to make this difficult tradeoff. You can now make a single ReceiveMessage call that will wait for between 1 and 20 seconds for a message to become available. Messages are still delivered to you as soon as possible; there’s no delay when messages are available.
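To make the difference between the two models concrete, here is a minimal in-memory sketch. `ToyQueue` and its methods are hypothetical and are not the SQS API; a `LinkedBlockingQueue` stands in for the queue. The short poll checks once and returns immediately, possibly with nothing, while the long poll waits up to a caller-supplied number of seconds (limited to 1 to 20, mirroring the range described above) yet returns the moment a message arrives.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical in-memory stand-in for a queue; illustrates the polling models only.
class ToyQueue {
    private final BlockingQueue<String> messages = new LinkedBlockingQueue<>();

    void send(String body) {
        messages.add(body);
    }

    // Short poll: look once and return right away; null means "nothing right now".
    String shortPoll() {
        return messages.poll();
    }

    // Long poll: block for up to waitTimeSeconds, but return as soon as a message arrives.
    String longPoll(int waitTimeSeconds) {
        if (waitTimeSeconds < 1 || waitTimeSeconds > 20) {
            throw new IllegalArgumentException("wait time must be between 1 and 20 seconds");
        }
        try {
            return messages.poll(waitTimeSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the caller's interrupt status
            return null;
        }
    }
}
```

A consumer built on `longPoll(20)` makes at most one call every 20 seconds against an idle queue instead of spinning on `shortPoll()`, yet still sees a newly sent message almost immediately.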

As an important side effect, long polling checks all of the SQS hosts for messages (regular polling checks a subset). If a long poll returns an empty set of messages, you can be confident that no unprocessed messages are present in the queue.
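The reason a short poll can come back empty while messages exist can be shown with a toy model. In this sketch the queue's messages are spread across several hypothetical hosts; a short poll consults only a sampled subset (passed in explicitly here so the behavior is deterministic), while a long poll consults every host. The host layout and `ShardedToyQueue` class are illustrative assumptions, not a description of how SQS is actually built, and the methods only peek rather than remove messages.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical sharded queue; shows why sampling a subset of hosts can miss messages.
class ShardedToyQueue {
    private final List<List<String>> hosts = new ArrayList<>();

    ShardedToyQueue(int hostCount) {
        for (int i = 0; i < hostCount; i++) {
            hosts.add(new ArrayList<>());
        }
    }

    void store(int host, String body) {
        hosts.get(host).add(body);
    }

    // Short poll: only the sampled hosts are checked, so an empty result is inconclusive.
    List<String> shortPoll(Set<Integer> sampledHosts) {
        List<String> result = new ArrayList<>();
        for (int h : sampledHosts) {
            result.addAll(hosts.get(h));
        }
        return result;
    }

    // Long poll: every host is checked, so an empty result means the queue is empty.
    List<String> longPoll() {
        List<String> result = new ArrayList<>();
        for (List<String> host : hosts) {
            result.addAll(host);
        }
        return result;
    }
}
```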

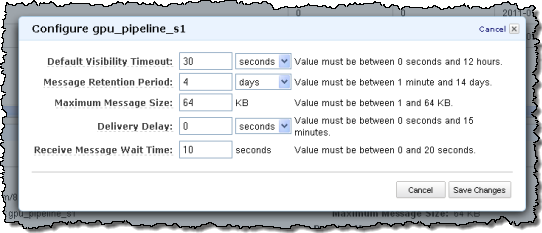

You can make use of long polling on a call-by-call basis by setting the WaitTimeSeconds parameter to a non-zero value when you call ReceiveMessage. You can also set the corresponding queue attribute to a non-zero value using the SetQueueAttributes function and it will become the default for all subsequent ReceiveMessage calls on the queue. You can also set it from the AWS Management Console:

Calls to ReceiveMessage for long polls cost the same as short polls. Similarly, the batch APIs cost the same as the non-batch versions. You get better performance and lower costs by using long polls and the batch APIs, because fewer of your calls come back empty and each call can carry more messages.
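A back-of-the-envelope count shows where the savings on an idle queue come from. This sketch assumes, purely for illustration, a consumer that short-polls once per second versus one that long-polls with a 20-second wait that always times out because no messages arrive.

```java
// Rough count of ReceiveMessage calls made against an idle queue in one hour.
// The one-call-per-second short-poll rate is an illustrative assumption.
class IdleQueueRequests {
    static final long SECONDS_PER_HOUR = 3600L;

    // Each call occupies secondsPerCall seconds before the next one is issued.
    static long requestsPerHour(int secondsPerCall) {
        return SECONDS_PER_HOUR / secondsPerCall;
    }
}
```

Under those assumptions, `requestsPerHour(1)` gives 3600 short polls while `requestsPerHour(20)` gives 180 long polls, a twentyfold reduction in billed requests for the same idle hour.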

Request Batching / Client-Side Buffering

This pair of related features is actually implemented in the AWS SDK for Java. The SDK now includes a buffered, asynchronous SQS client.

You can now enable client-side buffering and request batching for any of your SQS queues. Once you have done so, the SDK will automatically and transparently buffer up to 10 requests and send them to SQS in one batch. Request batching has the potential to reduce your SQS charges since the entire batch counts as a single request for billing purposes.
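The billing effect of batching is simple arithmetic: with up to 10 messages per batch and each batch billed as one request, sending `n` messages costs the ceiling of `n / 10` requests instead of `n`. The sketch below assumes every batch is filled as far as possible; `BatchBilling` is a hypothetical helper, not part of the SDK.

```java
// Billed request counts for sending n messages, assuming one request per call
// and at most 10 messages per batch request.
class BatchBilling {
    static final int MAX_BATCH_SIZE = 10;

    static int unbatchedRequests(int messages) {
        return messages; // one SendMessage call per message
    }

    static int batchedRequests(int messages) {
        // Ceiling division: a partially filled final batch still counts as one request.
        return (messages + MAX_BATCH_SIZE - 1) / MAX_BATCH_SIZE;
    }
}
```

For example, 25 messages cost 25 requests unbatched but 3 requests when batched (10 + 10 + 5).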

If you are already using the SDK, you simply instantiate the AmazonSQSAsyncClient instead of the AmazonSQSClient, and then use it to create an AmazonSQSBufferedAsyncClient object:

```java
// Create the asynchronous client
AmazonSQSAsync sqsAsync = new AmazonSQSAsyncClient(credentials);

// Create the buffered client
AmazonSQSAsync bufferedSqs = new AmazonSQSBufferedAsyncClient(sqsAsync);
```

Then you use it to make requests in the usual way:

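```java
// `res` is assumed to be the CreateQueueResult from an earlier createQueue call
SendMessageRequest request = new SendMessageRequest();
String body = "test message_" + System.currentTimeMillis();
request.setMessageBody(body);
request.setQueueUrl(res.getQueueUrl());

// Send through the buffered client; batching happens behind the scenes
SendMessageResult sendResult = bufferedSqs.sendMessage(request);
```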

The SDK will take care of all of the details for you!

You can fine-tune the batching mechanism by setting the maxBatchOpenMs and maxBatchSize parameters as described in the SQS Developer Guide:

- The maxBatchOpenMs parameter specifies the maximum amount of time, in milliseconds, that an outgoing call waits for other calls of the same type to batch with.

- The maxBatchSize parameter specifies the maximum number of messages that will be batched together in a single batch request.
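The interaction between the two parameters can be sketched with a toy batcher: a batch is sent as soon as it holds `maxBatchSize` messages, or once it has been open for `maxBatchOpenMs`, whichever comes first. `ToyBatcher` is a hypothetical illustration, not the SDK's implementation; it takes the current time as an argument so its behavior is deterministic, whereas a real client would use timers and background threads.

```java
import java.util.ArrayList;
import java.util.List;

// Toy outbound batcher; parameter names mirror maxBatchSize and maxBatchOpenMs.
class ToyBatcher {
    private final int maxBatchSize;
    private final long maxBatchOpenMs;
    private final List<List<String>> flushed = new ArrayList<>();
    private List<String> open = new ArrayList<>();
    private long openedAtMs;

    ToyBatcher(int maxBatchSize, long maxBatchOpenMs) {
        this.maxBatchSize = maxBatchSize;
        this.maxBatchOpenMs = maxBatchOpenMs;
    }

    // Queue a message at time nowMs; a full batch is flushed immediately.
    void add(String body, long nowMs) {
        tick(nowMs); // an expired batch goes out before the new message joins
        if (open.isEmpty()) {
            openedAtMs = nowMs; // the batch "opens" with its first message
        }
        open.add(body);
        if (open.size() == maxBatchSize) {
            flush();
        }
    }

    // Flush the open batch once it has waited at least maxBatchOpenMs.
    void tick(long nowMs) {
        if (!open.isEmpty() && nowMs - openedAtMs >= maxBatchOpenMs) {
            flush();
        }
    }

    List<List<String>> flushedBatches() {
        return flushed;
    }

    private void flush() {
        flushed.add(open); // each flushed batch would become one batch request
        open = new ArrayList<>();
    }
}
```

A larger `maxBatchOpenMs` tends to produce fuller (cheaper) batches at the cost of extra send latency; a smaller one does the reverse.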

The SDK can also pre-fetch and then buffer up multiple messages from a queue. This reduces receive latency and, like request batching, has the potential to reduce your SQS charges. When your application calls ReceiveMessage, you'll get a pre-fetched, buffered message if possible. This should work especially well in high-volume message processing applications, but is definitely applicable and valuable at any scale. Fine-tuning can be done using the maxDoneReceiveBatches and maxInflightReceiveBatches parameters.
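The idea behind pre-fetching can be sketched as a receiver that keeps a few completed batches in a local buffer and goes back to the queue only when that buffer runs dry. In this sketch `fetchBatch` is a hypothetical stand-in for a batched ReceiveMessage call and `maxBufferedBatches` only loosely mirrors maxDoneReceiveBatches; the real client fetches in the background, while this one fetches synchronously to stay simple.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;
import java.util.function.Supplier;

// Toy prefetching receiver: serves messages locally, refilling in whole batches.
class ToyPrefetcher {
    private final Supplier<List<String>> fetchBatch;
    private final int maxBufferedBatches;
    private final Deque<Deque<String>> buffered = new ArrayDeque<>();
    private int remoteCalls = 0;

    ToyPrefetcher(Supplier<List<String>> fetchBatch, int maxBufferedBatches) {
        this.fetchBatch = fetchBatch;
        this.maxBufferedBatches = maxBufferedBatches;
    }

    // Fetch batches until the buffer is full or the queue returns an empty batch.
    private void prefetch() {
        while (buffered.size() < maxBufferedBatches) {
            List<String> batch = fetchBatch.get();
            remoteCalls++; // even an empty receive is a request
            if (batch.isEmpty()) {
                return;
            }
            buffered.add(new ArrayDeque<>(batch));
        }
    }

    // Return a buffered message if possible; otherwise refill first. Null means empty.
    String receive() {
        if (buffered.isEmpty()) {
            prefetch();
        }
        Deque<String> head = buffered.peek();
        if (head == null) {
            return null;
        }
        String message = head.poll();
        if (head.isEmpty()) {
            buffered.poll(); // discard the exhausted batch
        }
        return message;
    }

    int remoteCalls() {
        return remoteCalls;
    }
}
```

With batches of 10 and room for two buffered batches, the first `receive()` makes two remote calls and the next 19 are served entirely from memory.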

If you are using this new feature (and you really should), you’ll want to examine and perhaps increase your queue’s visibility timeout accordingly. Messages will be buffered locally until they are received or their visibility timeout is reached; be sure to take this new timing component into account to avoid surprises.
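A quick way to reason about the timing: a pre-fetched message's visibility timeout starts when it is fetched into the local buffer, not when your code picks it up, so the time it spends buffered plus your processing time must fit inside the timeout. The helper below is a hypothetical sketch of that budget check, with all durations in whole seconds.

```java
// Visibility-timeout budget for buffered messages (all values in seconds).
class VisibilityBudget {
    // True if processing completes before the message becomes visible again.
    static boolean safe(int visibilityTimeout, int secondsBuffered, int processingSeconds) {
        return secondsBuffered + processingSeconds < visibilityTimeout;
    }

    // Smallest whole-second timeout for which safe(...) returns true.
    static int minimumTimeout(int secondsBuffered, int processingSeconds) {
        return secondsBuffered + processingSeconds + 1;
    }
}
```

For instance, a message that sits in the buffer for 10 seconds and then takes 25 seconds to process would reappear in the queue under the default 30-second visibility timeout; `minimumTimeout(10, 25)` suggests at least 36 seconds.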

Help Wanted

The Amazon Web Services Messaging team is growing and we are looking to add new members who are passionate about building large-scale distributed systems. If you are a software development engineer, quality assurance engineer, or engineering manager/leader, we would like to hear from you. We are moving fast, so send your resume to aws-messaging-jobs@amazon.com and we will get back to you immediately.

— Jeff;