Guidance for Digital Twin Framework on AWS

How it works

Overview

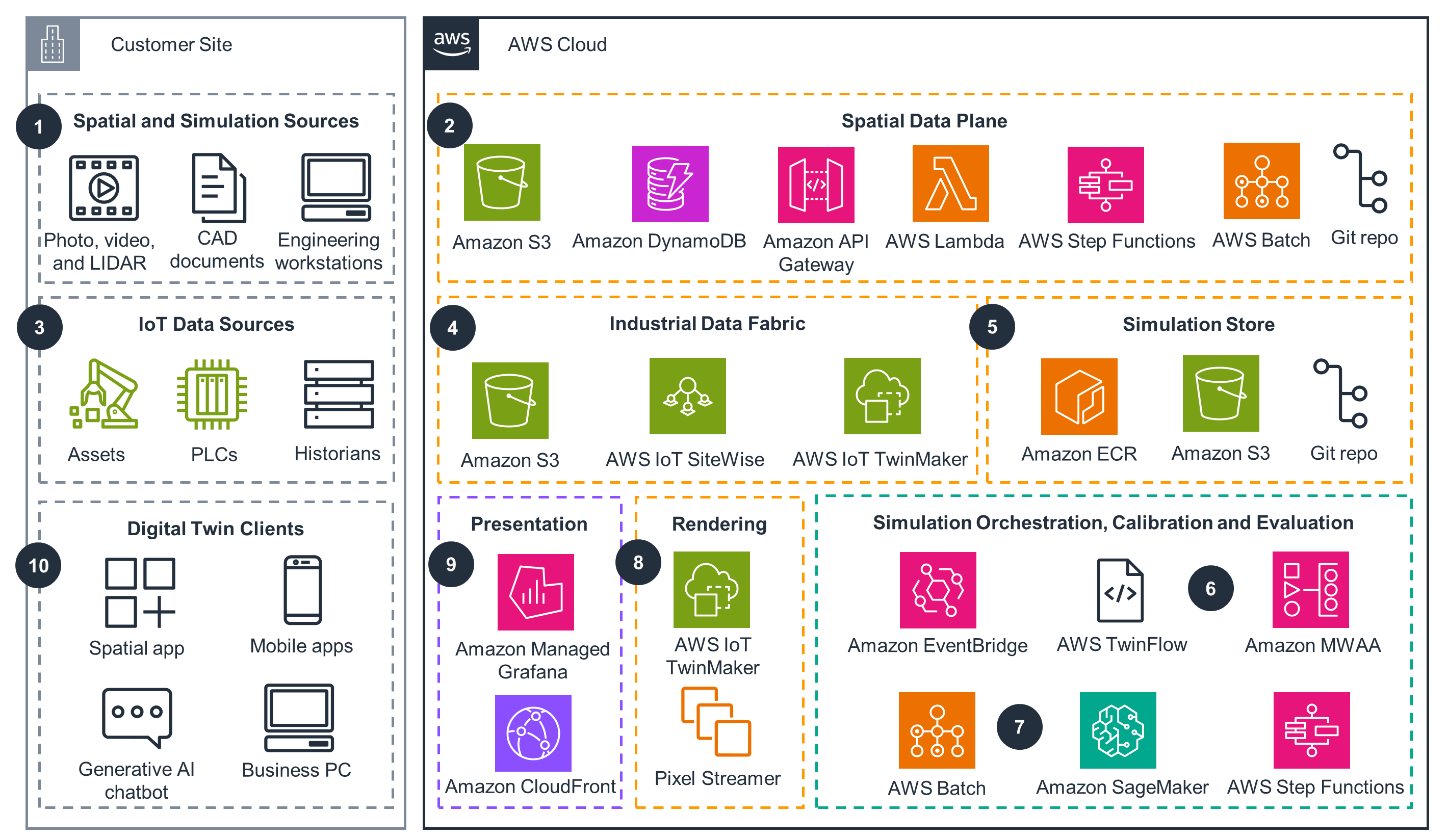

This architecture diagram shows the three integrated modules that make up the Digital Twin framework: IoT, spatial compute, and simulation. Together, these modules address key stages of workforce safety and compliance.

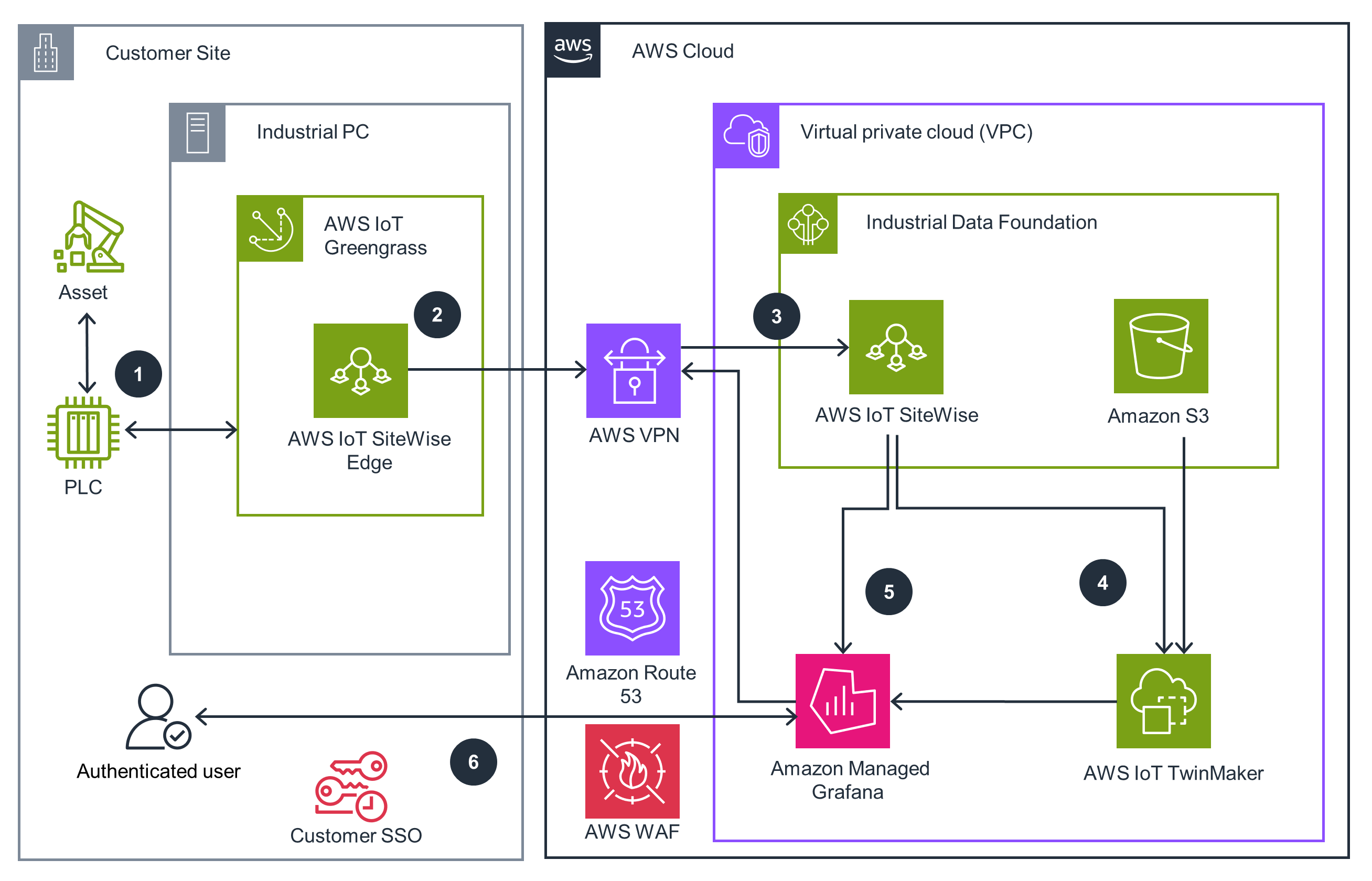

IoT Data

This architecture diagram shows how to connect IoT data to the digital twin.
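Here is a minimal sketch of the ingestion step. It assumes the IoT data lands in AWS IoT SiteWise, one of the services this Guidance uses, and builds request entries for the SiteWise `BatchPutAssetPropertyValue` API through boto3. The property aliases, the region, and the `build_entries`, `chunked`, and `send` helpers are hypothetical. The batch size of 10 entries reflects the API limit at the time of writing, so check it against current quotas.

```python
import time
import uuid

# Per-request entry limit for BatchPutAssetPropertyValue at the time of writing;
# verify against current AWS IoT SiteWise quotas.
MAX_ENTRIES_PER_REQUEST = 10


def build_entries(readings, now=None):
    """Turn {property_alias: number} into BatchPutAssetPropertyValue entries."""
    ts = time.time() if now is None else now
    seconds = int(ts)
    nanos = int((ts - seconds) * 1_000_000_000)  # truncation keeps this below 1e9
    entries = []
    for alias, value in readings.items():
        entries.append({
            "entryId": uuid.uuid4().hex,  # unique ID used to match errors to entries
            "propertyAlias": alias,       # hypothetical alias mapped to an asset property
            "propertyValues": [{
                "value": {"doubleValue": float(value)},
                "timestamp": {"timeInSeconds": seconds, "offsetInNanos": nanos},
                "quality": "GOOD",
            }],
        })
    return entries


def chunked(entries, size=MAX_ENTRIES_PER_REQUEST):
    """Split entries into request-sized batches."""
    return [entries[i:i + size] for i in range(0, len(entries), size)]


def send(readings, region="us-east-1"):
    """Send readings to AWS IoT SiteWise and return any per-entry errors."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("iotsitewise", region_name=region)
    errors = []
    for batch in chunked(build_entries(readings)):
        response = client.batch_put_asset_property_value(entries=batch)
        errors.extend(response.get("errorEntries", []))
    return errors
```

A call such as `send({"/factory/line1/temperature": 72.4})` would push one reading. The alias shown is a placeholder for whatever property aliases your SiteWise asset models define.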

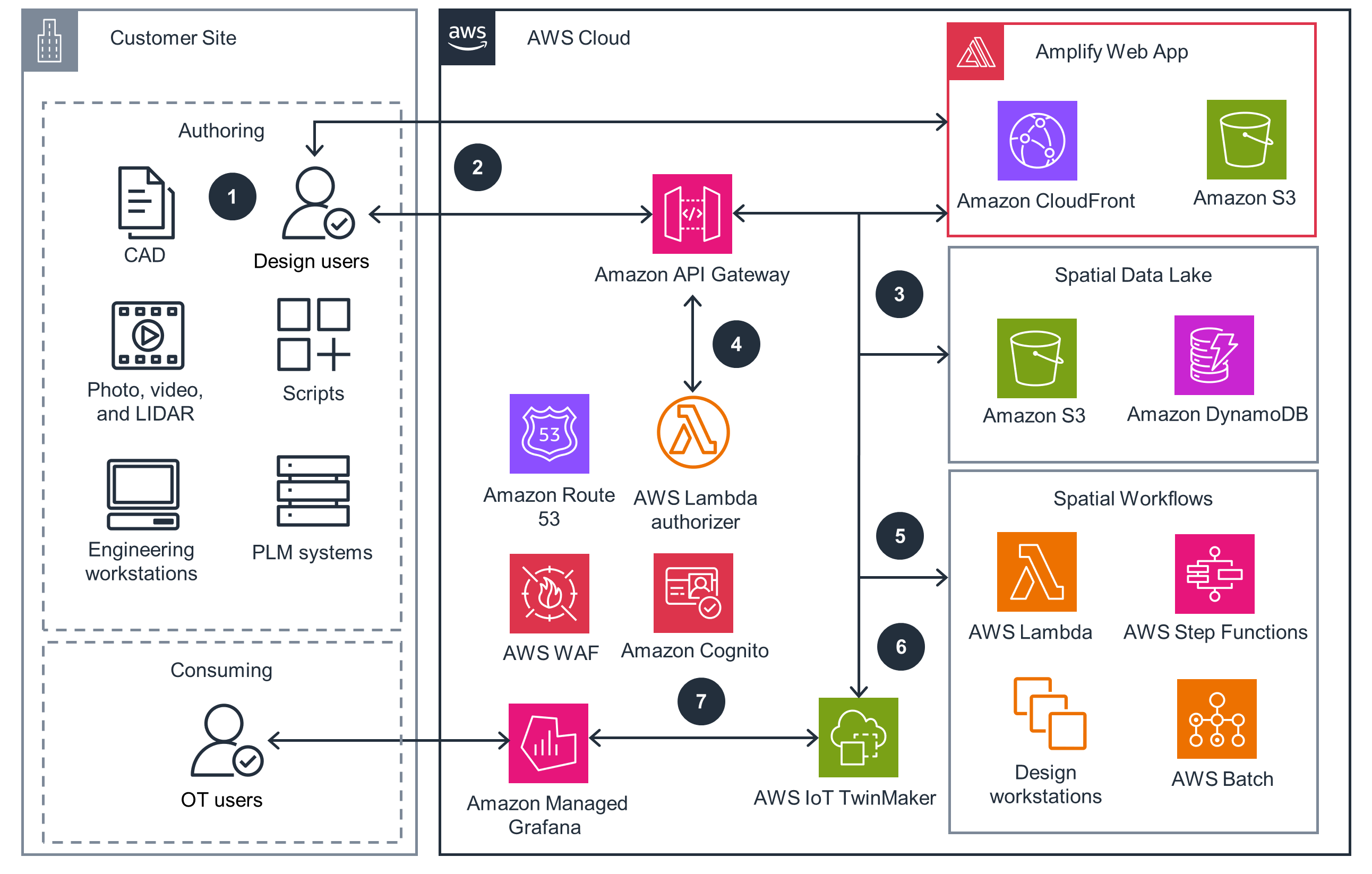

Spatial Data Plane

This architecture diagram shows how to create the spatial component of a digital twin, including the ingestion and processing of data into real-time 3D assets.

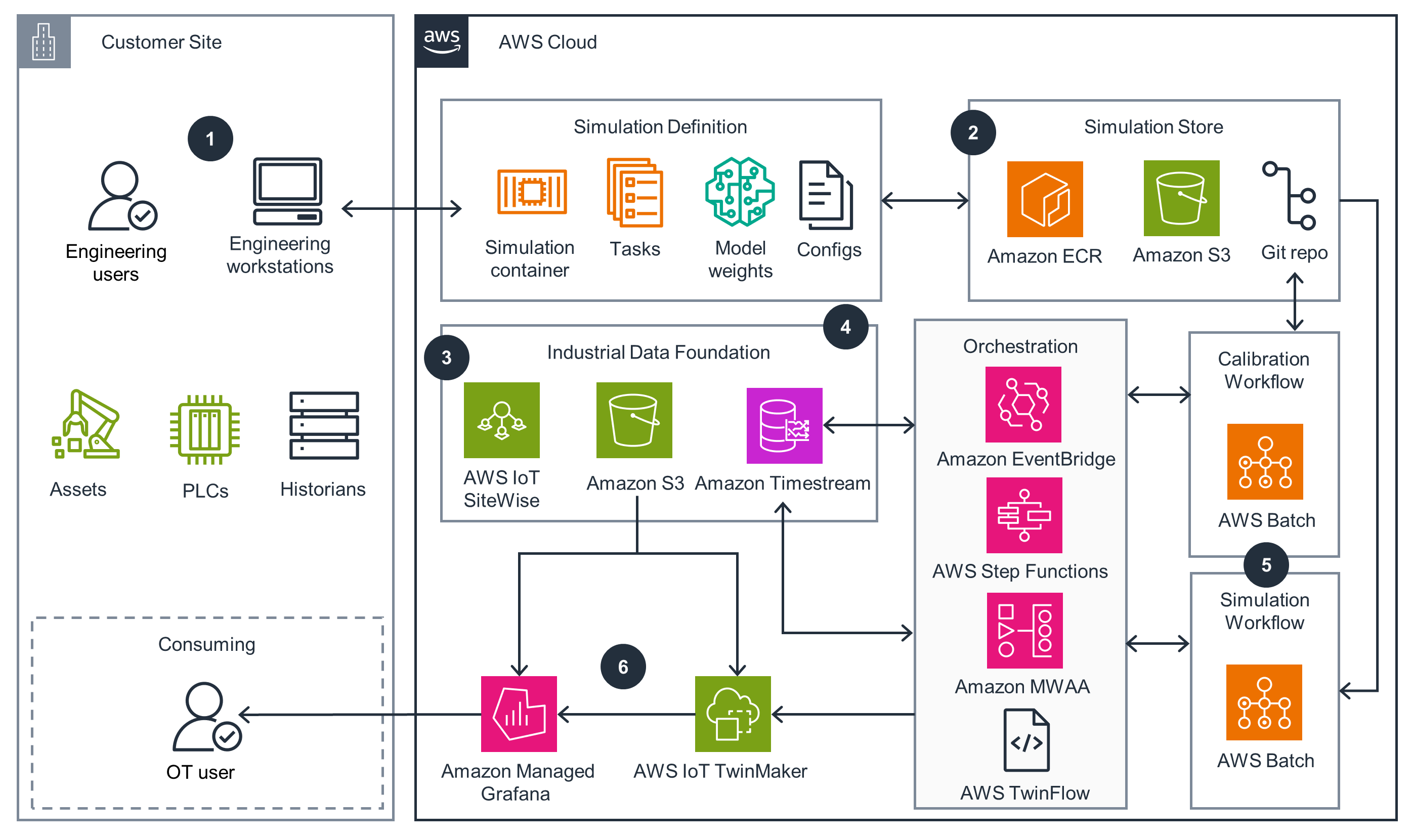

Building and Orchestrating Simulation Twins

This architecture diagram shows how to build and orchestrate simulations of a digital twin.

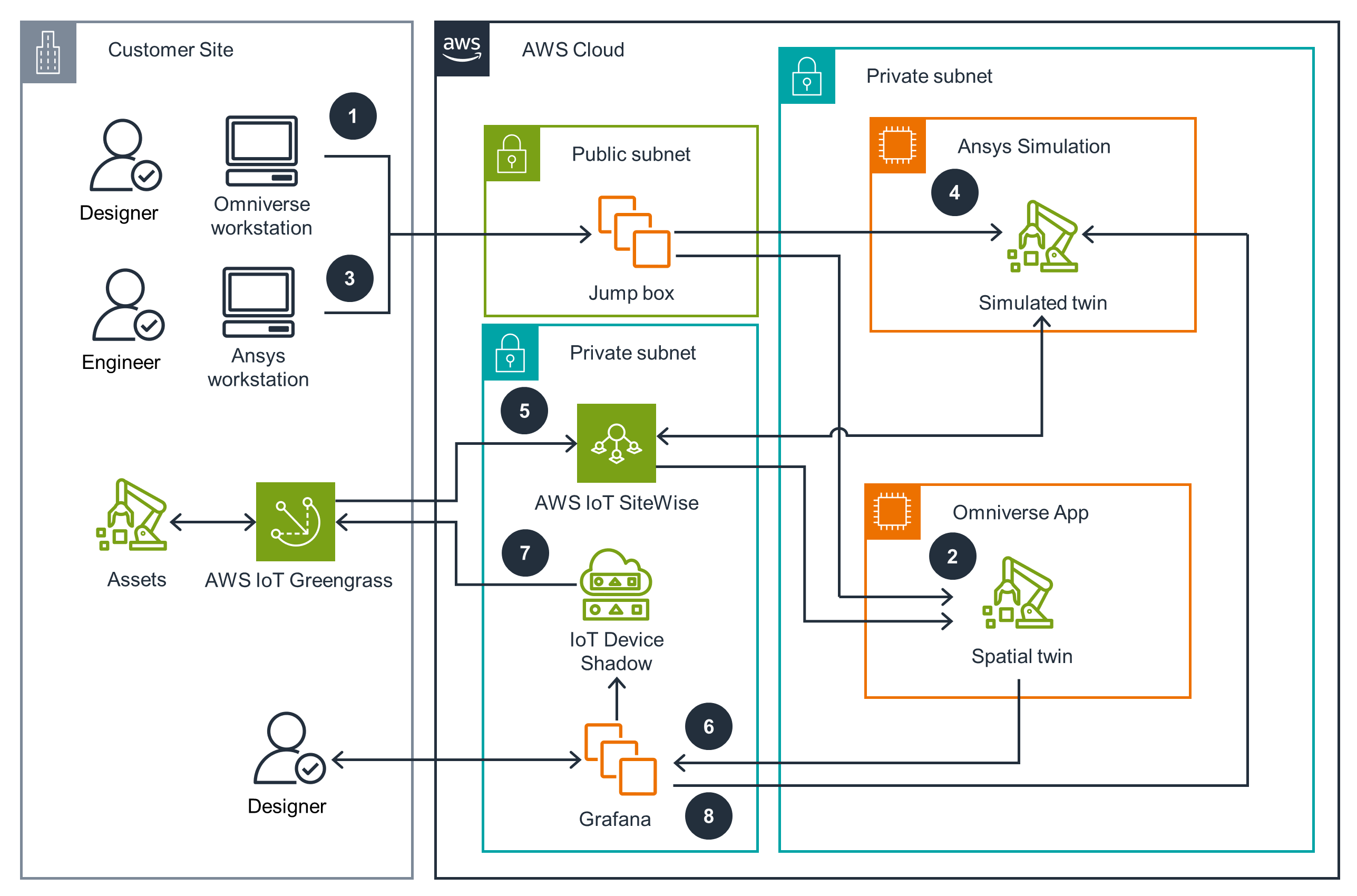

Product Design Example

This architecture diagram shows a specific implementation of the Digital Twin framework used for product design.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Operational Excellence

The majority of AWS services used in this Guidance are serverless, lowering the operational overhead of maintaining the Guidance. The Visual Asset Management System (VAMS) and Industrial Data Fabric solutions use the AWS Cloud Development Kit (AWS CDK) to provide infrastructure as code. With AWS CDK and AWS CloudFormation, you can apply the same engineering discipline that you use for application code to your entire environment.

Integration with Amazon CloudWatch enables monitoring of incoming data and alerting on potential issues. By understanding service metrics, you can optimize event workflows and ensure scalability. Visualizing and analyzing data and compute components using CloudWatch helps you identify performance bottlenecks and troubleshoot requests.
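As one illustration of alerting on incoming data, the sketch below builds parameters for the CloudWatch `put_metric_alarm` call so that an alarm fires when ingestion stalls. The namespace `DigitalTwin/Ingestion`, the metric `PointsIngested`, the `Site` dimension, and the SNS topic ARN are hypothetical custom values that an ingestion component would publish with `put_metric_data`. `would_alarm` is a simplified local model of the "M out of N datapoints" evaluation, not CloudWatch's exact algorithm.

```python
def ingestion_alarm_params(sns_topic_arn, site="site-1", min_points_per_minute=1):
    """Keyword arguments for CloudWatch put_metric_alarm (hypothetical metric names)."""
    return {
        "AlarmName": f"digital-twin-ingestion-stalled-{site}",
        "Namespace": "DigitalTwin/Ingestion",  # hypothetical custom namespace
        "MetricName": "PointsIngested",        # hypothetical custom metric
        "Dimensions": [{"Name": "Site", "Value": site}],
        "Statistic": "Sum",
        "Period": 60,                # one-minute periods
        "EvaluationPeriods": 5,      # look at the last 5 periods (N)
        "DatapointsToAlarm": 3,      # alarm if 3 of them breach (M)
        "Threshold": float(min_points_per_minute),
        "ComparisonOperator": "LessThanThreshold",
        "TreatMissingData": "breaching",  # receiving no data at all counts as a stall
        "AlarmActions": [sns_topic_arn],
    }


def would_alarm(datapoints, threshold, datapoints_to_alarm):
    """Simplified M-out-of-N check for a LessThanThreshold alarm.

    `datapoints` holds the last N period values, with None for a missing period.
    Missing periods count as breaching, mirroring TreatMissingData="breaching".
    """
    breaching = sum(1 for v in datapoints if v is None or v < threshold)
    return breaching >= datapoints_to_alarm


def create_alarm(sns_topic_arn, region="us-east-1"):
    """Create or update the alarm in CloudWatch."""
    import boto3  # lazy import keeps the helpers above usable without boto3

    boto3.client("cloudwatch", region_name=region).put_metric_alarm(
        **ingestion_alarm_params(sns_topic_arn)
    )
```

Requiring several breaching datapoints out of a window (rather than one) is a common way to avoid paging on a single late or dropped minute of data.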

Security

By using AWS Identity and Access Management (IAM), Amazon Cognito, Amazon API Gateway, and AWS Lambda authorizers, this Guidance prioritizes data protection, system security, and asset integrity, aligning with best practices and improving your overall security posture. This Guidance uses Amazon Route 53, AWS WAF, and AWS VPN to provide secure connections for both public and private networking between on-premises facilities and the AWS Cloud.
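To show what a Lambda authorizer does in front of API Gateway, here is a minimal TOKEN-type authorizer sketch. It returns the IAM policy document shape that API Gateway expects. The static `HYPOTHETICAL_TOKENS` lookup stands in for real validation, which in this architecture would more likely verify an Amazon Cognito-issued JWT (signature, issuer, audience, expiry). The token values and principal IDs are made up.

```python
# Hypothetical static lookup for illustration only. A production authorizer would
# verify a Cognito-issued JWT instead of comparing against fixed strings.
HYPOTHETICAL_TOKENS = {"demo-token-123": "operator-1"}


def build_policy(principal_id, effect, method_arn):
    """Return the response shape API Gateway expects from a Lambda authorizer."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [{
                "Action": "execute-api:Invoke",
                "Effect": effect,        # "Allow" or "Deny"
                "Resource": method_arn,  # the API method being invoked
            }],
        },
    }


def lambda_handler(event, context):
    """TOKEN authorizer: API Gateway passes authorizationToken and methodArn."""
    token = event.get("authorizationToken", "")
    if token.startswith("Bearer "):
        token = token[len("Bearer "):]
    principal = HYPOTHETICAL_TOKENS.get(token)
    if principal is None:
        # A Deny policy makes API Gateway reject the request with 403 Forbidden.
        return build_policy("anonymous", "Deny", event["methodArn"])
    return build_policy(principal, "Allow", event["methodArn"])
```

Returning an explicit Deny keeps the handler easy to test. Raising an exception with the message `Unauthorized` is the alternative when you want API Gateway to answer 401 instead of 403.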

We recommend enabling encryption at rest for all data destinations in the cloud, a feature supported by both Amazon S3 and AWS IoT SiteWise, to further safeguard sensitive information.
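Amazon S3 already encrypts new objects with SSE-S3 by default. The sketch below goes one step further and assumes you want a single customer managed AWS KMS key for both the S3 bucket and AWS IoT SiteWise. It uses the boto3 `put_bucket_encryption` and SiteWise `put_default_encryption_configuration` calls. The bucket name and key ARN are placeholders.

```python
def s3_kms_encryption_config(kms_key_arn):
    """ServerSideEncryptionConfiguration for S3 put_bucket_encryption (SSE-KMS)."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            # S3 Bucket Keys reduce the number of requests S3 makes to AWS KMS.
            "BucketKeyEnabled": True,
        }]
    }


def enable_encryption_at_rest(bucket, kms_key_arn, region="us-east-1"):
    """Apply one customer managed KMS key to an S3 bucket and to AWS IoT SiteWise."""
    import boto3  # lazy import keeps the config builder usable without boto3

    boto3.client("s3", region_name=region).put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=s3_kms_encryption_config(kms_key_arn),
    )
    boto3.client("iotsitewise", region_name=region).put_default_encryption_configuration(
        encryptionType="KMS_BASED_ENCRYPTION",
        kmsKeyId=kms_key_arn,
    )
```

The key policy must also allow S3 and AWS IoT SiteWise to use the key. That policy is not shown here.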

Reliability

Through multi-Availability Zone (multi-AZ) deployments, throttling limits, and managed services such as Amazon Managed Grafana, this Guidance helps ensure continuous operation and minimal downtime for critical workloads. Specifically, AWS IoT SiteWise and AWS IoT TwinMaker apply throttling limits to data ingress and egress so that operations continue even during periods of high traffic or load.

Furthermore, Amazon Managed Grafana provides a reliable workspace for visualizing and analyzing metrics, logs, and traces without the need to manage hardware or infrastructure. It provisions, configures, and manages the workspace for you, handles version upgrades, and scales automatically to meet dynamic usage demands. This automatic scaling is important for handling peak usage during site operations or shift changes in industrial environments.

Performance Efficiency

By relying on AWS IoT SiteWise to manage throttling, and on the automatic scaling of both AWS IoT SiteWise and Amazon S3, this Guidance can ingest, process, and store data efficiently, even during periods of high data influx. Automatic scaling removes the need for manual capacity planning and resource provisioning, maintaining performance while minimizing operational overhead.
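When a service throttles, the client side still has to back off and retry. Here is a minimal sketch of capped exponential backoff with full jitter, assuming errors arrive as botocore `ClientError`-style exceptions that carry a `response["Error"]["Code"]` such as `ThrottlingException`. The helper names and default delays are hypothetical choices, not values from this Guidance.

```python
import random
import time

THROTTLING_CODES = {"ThrottlingException", "TooManyRequestsException"}


def is_throttling_error(exc):
    """True for ClientError-style exceptions whose error code signals throttling."""
    response = getattr(exc, "response", None) or {}
    return response.get("Error", {}).get("Code") in THROTTLING_CODES


def call_with_backoff(fn, max_attempts=5, base=0.1, cap=5.0,
                      sleep=time.sleep, rng=None):
    """Call fn(), retrying throttled calls with capped exponential backoff and full jitter.

    Non-throttling errors are re-raised immediately; the last throttling error is
    re-raised once max_attempts is exhausted.
    """
    if rng is None:
        rng = random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as exc:
            if not is_throttling_error(exc) or attempt == max_attempts - 1:
                raise
            # Full jitter: wait a random time in [0, min(cap, base * 2**attempt)].
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))
```

Randomizing the whole delay spreads retries from many devices or workers apart, so they do not all hit the throttled endpoint again at the same moment. For plain boto3 clients, botocore's built-in retry modes (`botocore.config.Config(retries={"mode": "adaptive"})`) are often sufficient on their own.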

Cost Optimization

Most of the AWS services used by this Guidance are serverless and cost-optimized, providing digital twin capabilities at a low price point. These services use a pay-as-you-go pricing model, so you are charged only for the data you ingest, store, and query.
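To make the pay-as-you-go point concrete, the worked example below models a monthly bill as a sum of usage terms with no fixed fee. All unit prices are hypothetical round numbers for illustration only. Actual AWS IoT SiteWise and Amazon S3 pricing uses different units, tiers, and rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitPrices:
    """Hypothetical prices in USD; not actual AWS rates."""
    per_million_points_ingested: float
    per_gb_month_stored: float
    per_million_points_queried: float


def monthly_cost(prices, ingested_millions, stored_gb, queried_millions):
    """Usage-based bill: every term scales with usage and there is no fixed fee."""
    return (ingested_millions * prices.per_million_points_ingested
            + stored_gb * prices.per_gb_month_stored
            + queried_millions * prices.per_million_points_queried)


prices = UnitPrices(1.00, 0.50, 2.00)
print(monthly_cost(prices, ingested_millions=30, stored_gb=10, queried_millions=5))  # 45.0
print(monthly_cost(prices, 0, 0, 0))  # 0.0
```

Because there is no fixed term, an idle month costs nothing in this model, and a month with double the usage costs exactly double.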

AWS IoT SiteWise also offers optimized storage settings, enabling data to be moved from a hot tier to a cold tier in Amazon S3, further reducing storage costs.
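The tiering setting can be sketched as a request for the SiteWise `put_storage_configuration` call in boto3. With multi-layer storage, SiteWise writes data to a customer managed Amazon S3 location (the cold tier), and `retentionPeriod` limits how long data stays in the hot tier. The bucket ARN, role ARN, retention value, and helper names below are placeholders, and the exact parameter set should be checked against the current API reference.

```python
def multi_layer_storage_request(s3_resource_arn, role_arn, hot_tier_days):
    """Keyword arguments for AWS IoT SiteWise put_storage_configuration."""
    if hot_tier_days <= 0:
        raise ValueError("hot_tier_days must be positive")
    return {
        "storageType": "MULTI_LAYER_STORAGE",
        "multiLayerStorage": {
            "customerManagedS3Storage": {
                "s3ResourceArn": s3_resource_arn,  # cold-tier S3 bucket or prefix
                "roleArn": role_arn,               # role SiteWise uses to write there
            }
        },
        # How long data remains in the hot tier before only the S3 copy remains.
        "retentionPeriod": {"numberOfDays": hot_tier_days, "unlimited": False},
    }


def configure_tiering(s3_resource_arn, role_arn, hot_tier_days=30, region="us-east-1"):
    """Apply the storage configuration to AWS IoT SiteWise in one Region."""
    import boto3  # lazy import keeps the request builder usable without boto3

    client = boto3.client("iotsitewise", region_name=region)
    return client.put_storage_configuration(
        **multi_layer_storage_request(s3_resource_arn, role_arn, hot_tier_days)
    )
```

A shorter hot-tier retention lowers hot storage costs. The trade-off is that older data must then be queried from S3 rather than through the low-latency SiteWise APIs.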

Sustainability

The services in this Guidance run on the elastic, scalable infrastructure of AWS, which scales compute resources up and down based on usage demands. This prevents overprovisioning and minimizes excess compute capacity, reducing unnecessary carbon emissions. You can monitor your CO2 emissions using the AWS Customer Carbon Footprint Tool.

Additionally, the agility provided by technologies like digital twins (built with AWS IoT TwinMaker), event-based automation, and AI/ML-based insights empowers engineering teams to optimize on-site operations, increasing efficiency and minimizing emissions from industrial processes.
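The benefit of elastic scaling for overprovisioning can be shown with a small, idealized comparison. One strategy holds peak capacity for every hour; the other provisions only what each hour needs. The hourly load figures and per-unit capacity are hypothetical. The model also assumes scaling happens instantly with no headroom, and it uses unit-hours only as a rough proxy for energy use.

```python
import math


def units_needed(load, capacity_per_unit):
    """Smallest number of capacity units that covers `load` (at least one)."""
    return max(1, math.ceil(load / capacity_per_unit))


def fixed_vs_elastic_unit_hours(hourly_load, capacity_per_unit):
    """Compare provisioning for peak all the time with scaling every hour."""
    per_hour = [units_needed(load, capacity_per_unit) for load in hourly_load]
    fixed = max(per_hour) * len(per_hour)  # peak capacity held for every hour
    elastic = sum(per_hour)                # capacity follows demand hour by hour
    return fixed, elastic


fixed, elastic = fixed_vs_elastic_unit_hours([150, 150, 800, 300], capacity_per_unit=100)
print(fixed, elastic)  # 32 15
```

In this example, a short peak (such as a shift change) forces the fixed strategy to keep eight units running all day, while elastic scaling uses fewer than half the unit-hours.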
