AWS News Blog

100% on Amazon Web Services: Soocial.com

I’m Simone Brunozzi, technology evangelist for Amazon Web Services in Europe.

This time of year, I decided to dedicate some time to better understanding how our customers use AWS. I spent some time online with Stefan Fountain and the nice guys at Soocial.com, a “one address book solution to contact management”, and I’d like to share with you some details of their IT infrastructure, which now runs 100% on Amazon Web Services!

Over the last few months, they’ve been working hard to cope with tens of thousands of users and to get ready to scale easily to millions. To make this possible, they decided to move ALL of their architecture to Amazon Web Services. Although they were quite happy with their previous hosting provider, AWS proved to be the way to go.

Overview of the new architecture

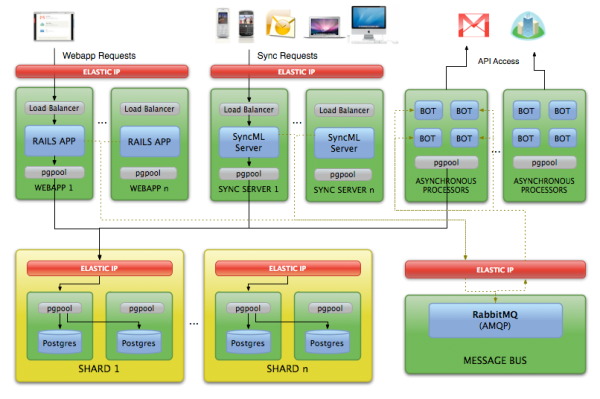

This is how their new architecture looks:

1. Soocial Web application: Nginx, running behind an Elastic IP, proxies requests to an HAProxy on every “webapp” instance, and each instance has its own memcache server (they don’t need to explicitly expire the cache). They use Nginx to provide SSL support; if HAProxy could handle SSL, they probably wouldn’t be using Nginx.
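To make the request path concrete, here is a minimal Python sketch of how a load balancer such as HAProxy can spread requests across webapp instances with round-robin, which is what HAProxy’s `roundrobin` balance algorithm does. The post doesn’t say which algorithm Soocial configured, so that is an assumption, as are the backend names; SSL termination in Nginx is only noted in a comment, not modelled.

```python
import itertools


class RoundRobinBalancer:
    """Toy round-robin balancer over hypothetical webapp backends."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self._cycle = itertools.cycle(list(backends))

    def pick(self):
        # Hand out backends in strict rotation, like HAProxy's "balance roundrobin"
        # with equal server weights.
        return next(self._cycle)


def handle_request(balancer, path):
    # By this point Nginx has already terminated SSL (not modelled here);
    # we only record which backend would serve the request.
    backend = balancer.pick()
    return f"{backend} served {path}"


if __name__ == "__main__":
    lb = RoundRobinBalancer(["webapp-1", "webapp-2", "webapp-3"])
    for path in ["/", "/contacts", "/login", "/"]:
        print(handle_request(lb, path))
```

Adding capacity then amounts to adding another name to the backend list (in HAProxy’s case, another `server` line in its configuration).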

2. Sync server: this server communicates with phones, OS X and Outlook using the SyncML protocol. Its setup is similar to that of the web application.

3. Sync bots: jobs that run periodically to fetch changes from, and push changes to, the online apps Soocial syncs with, currently Gmail and Highrise. These jobs run asynchronously. Previously they used a database as a queue for asynchronous processing, but this caused several issues:

– it wasn’t flexible enough,

– it locked the database unnecessarily,

– the bots were constantly polling the database – not exactly the kind of thing an RDBMS is built for.

The obvious (and simplest) solution was a message queue, so they switched to AMQP, specifically RabbitMQ. Not only did this let them feed the bots, it also turned out to be useful for logging and other purposes. The bots were already running on EC2 before the rest of the infrastructure moved over, so the only change there was that they no longer needed a tunnel to connect to the database.
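The difference between polling a database and consuming from a message queue can be sketched with Python’s standard library. Here `queue.Queue` stands in for a RabbitMQ queue (a real deployment would use an AMQP client library instead), and the job strings are hypothetical. Each bot blocks on `get()` until work arrives, so no bot repeatedly queries a table looking for new jobs.

```python
import queue
import threading


def sync_bot(name, jobs, results, lock):
    """Consume jobs until a None sentinel arrives (hypothetical job format)."""
    while True:
        job = jobs.get()  # blocks until a message is available: no polling loop
        if job is None:
            jobs.task_done()
            return
        with lock:
            # A real bot would talk to Gmail or Highrise here.
            results.append((name, job))
        jobs.task_done()


def run_bots(job_list, n_bots=2):
    jobs = queue.Queue()  # stands in for a RabbitMQ queue
    results, lock = [], threading.Lock()
    bots = [
        threading.Thread(target=sync_bot, args=(f"bot-{i}", jobs, results, lock))
        for i in range(n_bots)
    ]
    for bot in bots:
        bot.start()
    for job in job_list:
        jobs.put(job)
    for _ in bots:
        jobs.put(None)  # one shutdown sentinel per bot, queued after all jobs
    for bot in bots:
        bot.join()
    return results
```

Because the sentinels are queued after every job, each bot only shuts down once all real work has been handed out.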

4. Database: After evaluating the various replication solutions for PostgreSQL, they chose pgpool-II, a PostgreSQL middleware that supports many features, including synchronous multi-master replication, sharding, master-slave mode (in which pgpool-II does not perform the replication itself, so you need something like Slony-I for that), online recovery, connection pooling, and query caching.

The nice thing is that it didn’t require any changes to their application layer. pgpool-II behaves like a real PostgreSQL server, so they can keep using exactly the same ActiveRecord configuration as before. Both the webapp and the sync server use ActiveRecord, with the same models, as their data abstraction layer.

They use it for different purposes:

– Data shards: they configure rules for where data is written to and read from, and pgpool-II takes care of the routing.

– Replication: the shards aren’t plain PostgreSQL servers; they are in fact an extra layer of pgpool-II instances doing synchronous multi-master replication. This way, if one of their servers goes down, they’re not affected. All they have to do is reassign the Elastic IP of the affected shard, which gives them hot failover because all database connections automatically switch to the new server (not exactly Heartbeat, but good enough).
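The replication and failover scheme can be illustrated with a simplified in-memory model: writes are applied synchronously to every live replica, and clients reach the shard through a single address standing in for its Elastic IP. The `Replica` and `ReplicatedShard` classes are hypothetical; on AWS the `fail_over` step would correspond to reassociating the Elastic IP through the EC2 AssociateAddress API.

```python
class Replica:
    """One database server in a shard (hypothetical, in-memory)."""

    def __init__(self, name):
        self.name = name
        self.rows = {}
        self.alive = True


class ReplicatedShard:
    """Toy shard: synchronous multi-master writes plus one 'Elastic IP'
    that decides which replica client connections land on."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.elastic_ip_target = self.replicas[0]

    def write(self, key, value):
        # Synchronous replication: apply the write to every live replica
        # before acknowledging it.
        live = [r for r in self.replicas if r.alive]
        if not live:
            raise RuntimeError("no live replicas")
        for r in live:
            r.rows[key] = value

    def read(self, key):
        target = self.elastic_ip_target
        if not target.alive:
            raise ConnectionError(f"{target.name} is down")
        return target.rows[key]

    def fail_over(self):
        # Stand-in for reassociating the shard's Elastic IP with a healthy server.
        for r in self.replicas:
            if r.alive:
                self.elastic_ip_target = r
                return r.name
        raise RuntimeError("no live replicas")
```

Because every replica already holds every acknowledged write, repointing the address is enough for reads to succeed again; no data has to be copied at failover time.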

Scaling

As the diagram above shows, to scale the web application and the sync server they simply add EC2 instances and update the configuration file. Adding DB nodes requires an online recovery, pgpool-II’s mechanism for bringing a new node in sync and attaching it without stopping pgpool-II. As the user base grows, they can add shards to the database and spread different sets of users across them. This lets them move heavy users to a more powerful instance, and perhaps even tune that database for their specific usage.
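One way to put “different sets of users on different shards” into practice is a directory that records each user’s shard, so adding a shard never reshuffles existing users. This is a sketch under assumptions: the post only says pgpool-II applies configured rules, and the `ShardDirectory` class, its least-loaded placement policy and the shard names are all hypothetical.

```python
class ShardDirectory:
    """Hypothetical user-to-shard directory with explicit placement."""

    def __init__(self, shards):
        self.open_shards = list(shards)                # shards accepting new users
        self.load = {s: 0 for s in self.open_shards}   # users per shard
        self.assignment = {}                           # user_id -> shard

    def add_shard(self, shard, accept_new_users=True):
        # A dedicated shard for heavy users can be kept closed to new signups.
        self.load[shard] = 0
        if accept_new_users:
            self.open_shards.append(shard)

    def shard_for(self, user_id):
        if user_id not in self.assignment:
            # New users go to the least-loaded open shard; existing users stay put.
            target = min(self.open_shards, key=lambda s: self.load[s])
            self.assignment[user_id] = target
            self.load[target] += 1
        return self.assignment[user_id]

    def move_user(self, user_id, shard):
        # Only the routing changes here; copying the user's rows is out of scope.
        if shard not in self.load:
            raise KeyError(f"unknown shard {shard!r}")
        old = self.shard_for(user_id)
        self.load[old] -= 1
        self.load[shard] += 1
        self.assignment[user_id] = shard
```

A lookup directory is used here instead of `hash(user) % n_shards` because, with modulo placement, adding a shard would remap most existing users to a different shard.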

Performance

EBS performs really well, and being able to attach more than one EBS volume to a single instance is useful: they use separate volumes for data and for write-ahead logging, which gives them another nice performance boost. EBS snapshots have also proven valuable for backups:

“The snapshot capability has proven to be quite handy, as we’re able to use it for backups instead of running a SQL dump directly on the server. Now, whenever we want to do a SQL backup, we start a new EC2 instance, create a volume from a snapshot, start a PostgreSQL instance from that volume, dump from that server and then upload the dump to S3. This way we can do a full backup without thrashing a production database server.”
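The backup workflow described in the quote can be expressed as a short orchestration function. Every cloud operation sits behind a hypothetical `FakeCloud` object that only records the steps; in a real script these would map to EC2 actions such as RunInstances, CreateVolume, AttachVolume and TerminateInstances plus an S3 upload, with the PostgreSQL steps running on the temporary instance. The `try`/`finally` makes sure the temporary instance is terminated even if a step fails.

```python
class FakeCloud:
    """Hypothetical stand-in for the AWS and PostgreSQL steps; it only records
    what would happen, so the workflow can be exercised offline."""

    def __init__(self, fail_on=None):
        self.log = []
        self.fail_on = fail_on  # name of a step to fail, for testing cleanup

    def _step(self, name, result=None):
        if name == self.fail_on:
            raise RuntimeError(f"{name} failed")
        self.log.append(name)
        return result

    def start_instance(self):
        return self._step("start_instance", "i-backup")

    def volume_from_snapshot(self, snapshot_id):
        return self._step("create_volume", f"vol-from-{snapshot_id}")

    def attach(self, volume_id, instance_id):
        return self._step("attach_volume")

    def start_postgres(self, instance_id):
        return self._step("start_postgres")

    def dump(self, instance_id):
        return self._step("pg_dump", "/tmp/backup.sql")

    def upload(self, path, bucket, key):
        return self._step("upload_to_s3", f"s3://{bucket}/{key}")

    def terminate(self, instance_id):
        return self._step("terminate_instance")


def backup_from_snapshot(cloud, snapshot_id, bucket, key):
    """Dump a database restored from an EBS snapshot, never touching production."""
    instance = cloud.start_instance()
    try:
        volume = cloud.volume_from_snapshot(snapshot_id)
        cloud.attach(volume, instance)
        cloud.start_postgres(instance)
        path = cloud.dump(instance)
        return cloud.upload(path, bucket, key)
    finally:
        # Always release the temporary backup instance, even if a step fails.
        cloud.terminate(instance)
```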

Did you find this interesting? Do you have similar stories to share with our blog readers? Then send me a message on Twitter (@simon) or email me: simoneb @ amazon d0t lu

Cheers,

– Simone