AWS News Blog

Focusing on Spot Instances – Let’s Talk About Best Practices

I often point to EC2 Spot Instances as a feature that can only be implemented at world-scale with any degree of utility.

Unless you have a massive amount of compute power and a multitude of customers spread across every time zone in the world, with a wide variety of workloads, you simply won’t have the ever-changing shifts in supply and demand (and the resulting price changes) that are needed to create a genuine market. As a quick reminder, Spot Instances allow you to save up to 90% (when compared to On-Demand pricing) by placing bids for EC2 capacity. Instances will run whenever your bid exceeds the current Spot Price and can be terminated (with a two-minute warning) when there are higher bids for the same capacity, where "same" means the same region, availability zone, and instance type.

Because Spot Instances come and go, you need to pay attention to your bidding strategy and to your persistence model in order to maximize the value that you derive from them. Looked at another way, by structuring your application in the right way you can be in a position to save up to 90% (or, if you have a flat budget, you can get 10x as much computing done). This is a really interesting spot for you, as the cloud architect for your organization. You can exercise your technical skills to drive the cost of compute power toward zero, while making applications that are price aware and more fault-tolerant. Master the ins and outs of Spot Instances and you (and your organization) will win!
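To make the persistence side of this concrete, here is a minimal sketch of a worker loop that watches for the two-minute warning and saves its state when one appears. It assumes the warning is read from the instance metadata path `spot/termination-time`, which returns a 404 until a termination is scheduled, and that the metadata service accepts plain requests without a session token. The names `run_until_notice`, `work_step`, and `checkpoint` are hypothetical, made up for this illustration.

```python
import urllib.error
import urllib.request

# Instance metadata path that carries the Spot termination notice.
# Assumption: token-less metadata access; a token-based setup needs an extra header.
TERMINATION_URL = "http://169.254.169.254/latest/meta-data/spot/termination-time"


def fetch_termination_time(url=TERMINATION_URL, timeout=1.0):
    """Return the scheduled termination timestamp, or None if no notice is posted."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8").strip() or None
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no termination is scheduled right now
            return None
        raise


def run_until_notice(work_step, checkpoint, check=fetch_termination_time):
    """Run work_step() repeatedly; save state and stop when a notice appears.

    work_step (hypothetical) does one short unit of work and returns False when
    nothing is left. Keep each step to a few seconds so that the check runs
    many times inside the two-minute window.
    """
    while True:
        notice = check()
        if notice is not None:
            checkpoint()  # persist progress before the instance goes away
            return notice
        if not work_step():
            return None
```

Passing the `check` function in as a parameter keeps the loop easy to exercise away from EC2: you can hand it a stub that returns `None` a few times and then a timestamp.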

The Trend is Clear

As I look back at the history of EC2 — from launching individual instances on demand, then on to Spot Instances, Containers, and Spot Fleets — the trend is pretty clear. Where you once had to pay attention to individual, long-running instances and to list prices, you can now think about collections of instances with an indeterminate lifetime, running at the best possible price, as determined by supply and demand within individual capacity pools (groups of instances that share the same attributes). This new way of thinking can liberate you from some older thought patterns and can open the door to some new and intriguing ways to obtain massive amounts of compute capacity quickly and cheaply, so you can build really cool applications at a price you can afford.

I should point out that there’s a win-win situation when it comes to Spot. You (and your customers) win by getting compute power at the most economical price possible at a given point in time. Amazon wins because our fleet of servers (see the AWS Global Infrastructure page for a list of locations) is kept busy doing productive work. High utilization improves our cost structure, and also has an environmental benefit.

Spot Best Practices

Over the next few months, with a lot of help from the EC2 Spot Team, I am planning to share some best practices for the use of Spot Instances. Many of these practices will be backed up with real-world examples that our customers have shared with us; these are not theoretical or academic exercises. Today I would like to kick off the series by briefly outlining some best practices.

Let’s define the concept of a capacity pool in a bit more detail. As I alluded to above, a capacity pool is a set of available EC2 instances that share the same region, availability zone, operating system (Linux/Unix or Windows), and instance type. Each EC2 capacity pool has its own availability (the number of instances that can be launched at any particular moment in time) and its own price, as determined by supply and demand. As you will see, applications that can run across more than one capacity pool are in the best position to consistently access the most economical compute power. Note that capacity in a pool is shared between On-Demand and Spot instances, so Spot prices can rise from either more demand for Spot instances or an increase in requests for On-Demand instances.

Here are some best practices to get you started.

Build Price-Aware Applications – I’ve said it before: cloud computing is a combination of a business model and a technology. You can write code and design systems that are price-aware, and that have the potential to make your organization’s cloud budget go a lot further. This is a new area for a lot of technologists; my advice to you is to stretch your job description (and your internal model of who you are and what your job entails) to include designing for cost savings.

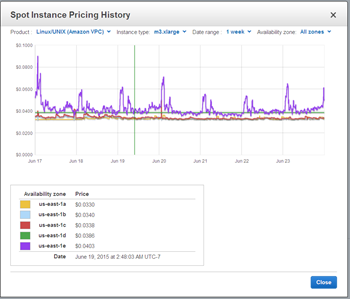

You can start by spending some time investigating the full range of capacity pools that are available to you within the region(s) that you use to run your app, either by hand or by building some tools using the EC2 API or the AWS Command Line Interface (AWS CLI). High prices and a high degree of price variance over time indicate that many of your competitors are bidding for capacity in the same pool. Seek out pools that have lower prices and more stable prices (both current and historic) to find bargains and lower interruption rates.
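A small tool for this kind of investigation might look like the sketch below. It assumes the boto3 library with credentials already configured, and it uses the EC2 `describe_spot_price_history` call to list the most recent price in each pool, cheapest first. The function names and the default `"Linux/UNIX"` product description are choices made for this illustration only.

```python
from datetime import datetime, timedelta, timezone


def fetch_spot_price_history(region, instance_types, days=1, product="Linux/UNIX"):
    """Fetch recent Spot price records for some instance types in one region."""
    import boto3  # imported lazily so the helper below also works without AWS access

    ec2 = boto3.client("ec2", region_name=region)
    paginator = ec2.get_paginator("describe_spot_price_history")
    start = datetime.now(timezone.utc) - timedelta(days=days)
    records = []
    for page in paginator.paginate(InstanceTypes=instance_types,
                                   ProductDescriptions=[product],
                                   StartTime=start):
        records.extend(page["SpotPriceHistory"])
    return records


def latest_price_per_pool(records):
    """Return [(pool, latest price)] sorted cheapest first.

    A pool is keyed here by (availability zone, instance type, product).
    """
    latest = {}
    for rec in records:
        pool = (rec["AvailabilityZone"], rec["InstanceType"], rec["ProductDescription"])
        if pool not in latest or rec["Timestamp"] > latest[pool]["Timestamp"]:
            latest[pool] = rec
    prices = {pool: float(rec["SpotPrice"]) for pool, rec in latest.items()}
    return sorted(prices.items(), key=lambda item: item[1])
```

For example, `latest_price_per_pool(fetch_spot_price_history("us-east-1", ["m3.large", "c3.large"]))` would give you a quick, price-ordered view of the pools for those two instance types in one region.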

Check the Price History – You can access historical prices on a per-pool basis going back 90 days (3 months). Instances that are currently very popular with our customers (the R3s as I write this) tend to have Spot prices that are somewhat more volatile. Older generations (including c1.8xlarge, m1.small, cr1.8xlarge, and cc2.8xlarge) tend to be much more stable. In general, picking older generations of instances will result in lower net prices and fewer interruptions.
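One rough way to compare pools on stability is to compute the average price and the coefficient of variation (standard deviation divided by mean) for each pool. The sketch below assumes price records shaped like the entries returned by the EC2 `DescribeSpotPriceHistory` API, with `AvailabilityZone`, `InstanceType`, and `SpotPrice` keys. Choosing the coefficient of variation as the volatility measure is an assumption made for this example, and so is the unweighted average, which ignores how long each price was in effect.

```python
from collections import defaultdict
from statistics import mean, pstdev


def pool_stability(records):
    """Return [(pool, mean price, coefficient of variation)], most stable first."""
    by_pool = defaultdict(list)
    for rec in records:
        pool = (rec["AvailabilityZone"], rec["InstanceType"])
        by_pool[pool].append(float(rec["SpotPrice"]))

    stats = []
    for pool, prices in by_pool.items():
        avg = mean(prices)
        # Relative spread lets cheap and expensive pools be compared fairly.
        cv = pstdev(prices) / avg if avg else 0.0
        stats.append((pool, avg, cv))
    # Sort by volatility first, then by average price to break ties.
    return sorted(stats, key=lambda s: (s[2], s[1]))
```

Pools near the top of the result have held a steady price over the period you looked at; pools near the bottom have swung the most relative to their average.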

Use Multiple Capacity Pools – Many types of applications can run (or can be easily adapted to run) across multiple capacity pools. By having the ability to run across multiple pools, you reduce your application’s sensitivity to price spikes that affect a pool or two (in general, there is very little correlation between prices in different capacity pools). For example, if you spread your capacity evenly across five different pools, a price spike or interruption in any one pool touches only one fifth of your fleet, so the impact of a single-pool event is cut by 80%.
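The arithmetic behind the five-pool figure can be sketched in a few lines. It assumes that capacity is split evenly across the pools and that a disruption hits whole pools independently; both are simplifying assumptions, and `share_affected` is a hypothetical helper written for this example.

```python
def share_affected(num_pools, pools_disrupted=1):
    """Fraction of capacity hit when some equal-sized pools are disrupted."""
    if num_pools < 1 or not 0 <= pools_disrupted <= num_pools:
        raise ValueError("need 0 <= pools_disrupted <= num_pools and num_pools >= 1")
    return pools_disrupted / num_pools


for n in (1, 2, 5, 10):
    hit = share_affected(n)
    print(f"{n} pool(s): {hit:.0%} of capacity exposed, {1 - hit:.0%} untouched")
```

With a single pool, one spike touches everything; with five pools, one spike touches a fifth of your capacity and leaves the rest running.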

A high-quality approach to this best practice can result in multiple dimensions of flexibility, and access to many capacity pools. You can run across multiple availability zones (fairly easy in conjunction with Auto Scaling and the Spot Fleet API) or you can run across different sizes of instances within the same family (Amazon EMR takes this approach). For example, your app might figure out how many vCPUs it is running on, and then launch enough worker threads to keep all of them occupied.
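The vCPU idea from the previous paragraph can be sketched as follows. The code asks the operating system how many CPUs it can see and sizes a thread pool to match, so the same program adapts to whichever instance size it lands on. `run_on_all_vcpus` is a hypothetical name, and for CPU-bound pure-Python work a process pool may be the better fit, since threads in CPython share one interpreter lock.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def run_on_all_vcpus(task, items):
    """Apply task to every item using one worker thread per visible vCPU."""
    workers = os.cpu_count() or 1  # cpu_count() can return None on some platforms
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order in its results.
        return list(pool.map(task, items))


if __name__ == "__main__":
    print(run_on_all_vcpus(lambda n: n * n, range(8)))
```

Because nothing here is tied to a specific instance type, the same code can run unchanged on any size within a family, which is what gives you access to more capacity pools.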

Adherence to this best practice also implies that you should strive to use roughly equal amounts of capacity in each pool; this will tend to minimize the impact of changes to Spot capacity and Spot prices.
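As a minimal sketch of the "roughly equal amounts" idea, the helper below splits a target instance count across a list of pools so that no two pools differ by more than one instance. The function name and the pool labels in the usage note are hypothetical.

```python
def spread_evenly(target_capacity, pools):
    """Split target_capacity across pools so that counts differ by at most one."""
    if not pools:
        raise ValueError("at least one pool is required")
    if target_capacity < 0:
        raise ValueError("target_capacity must be non-negative")
    base, extra = divmod(target_capacity, len(pools))
    # The first `extra` pools each take one additional instance.
    return {pool: base + (1 if i < extra else 0) for i, pool in enumerate(pools)}
```

Calling `spread_evenly(12, ["pool-a", "pool-b", "pool-c", "pool-d", "pool-e"])` assigns three instances to each of the first two pools and two to each of the remaining three.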

To learn more, read about Spot Instances in the EC2 Documentation.

Stay Tuned

As I mentioned, this is an introductory post and we have a lot more ideas and code in store for you! If you have feedback, or if you would like to contribute your own Spot tips to this series, please send me (awseditor@amazon.com) a note.

— Jeff;