AWS News Blog

AWS Import/Export Snowball – Transfer 1 Petabyte Per Week Using Amazon-Owned Storage Appliances

Even though high speed Internet connections (T3 or better) are available in many parts of the world, transferring terabytes or petabytes of data from an existing data center to the cloud remains challenging. Many of our customers find that the data migration aspect of an all-in move to the cloud presents some surprising issues. In many cases, these customers are planning to decommission their existing data centers after they move their apps and their data; in such a situation, upgrading their last-generation networking gear and boosting connection speeds makes little or no sense.

We launched the first-generation AWS Import/Export service way back in 2009. As I wrote at the time, “Hard drives are getting bigger more rapidly than internet connections are getting faster.” I believe that remains the case today. In fact, the rapid rise in Big Data applications, the emergence of global sensor networks, and the “keep it all just in case we can extract more value later” mindset have made the situation even more dire.

The original AWS Import/Export model was built around devices that you had to specify, purchase, maintain, format, package, ship, and track. While many AWS customers have used (and continue to use) this model, some challenges remain. For example, it does not make sense for you to buy multiple expensive devices as part of a one-time migration to AWS. On top of data encryption requirements and device durability issues, creating the requisite manifest files for each device and each shipment adds overhead and leaves room for human error.

New Data Transfer Model with Amazon-Owned Appliances

After gaining significant experience with the original model, we are ready to unveil a new one, formally known as AWS Snowball. Built around appliances that we own and maintain, the new model is faster, cleaner, simpler, more efficient, and more secure. You don’t have to buy storage devices or upgrade your network.

Snowball is designed for customers who need to move lots of data (generally 10 terabytes or more) to AWS on a one-time or recurring basis. You request one or more appliances from the AWS Management Console and wait a few days for delivery to your site. If you want to import a lot of data, you can order several Snowball appliances and run them in parallel.

The new Snowball appliance is purpose-built for efficient data storage and transfer. It is rugged enough to withstand a 6 G jolt, and (at 50 lbs) light enough for one person to carry. It is entirely self-contained, with 110 Volt power and a 10 Gb network connection on the back and an E Ink display/control panel on the front. It is weather-resistant and serves as its own shipping container; it can go from your mail room to your data center and back again with no packing or unpacking hassle to slow things down. In addition to being physically rugged and tamper-resistant, AWS Snowball detects tampering attempts. Here’s what it looks like:

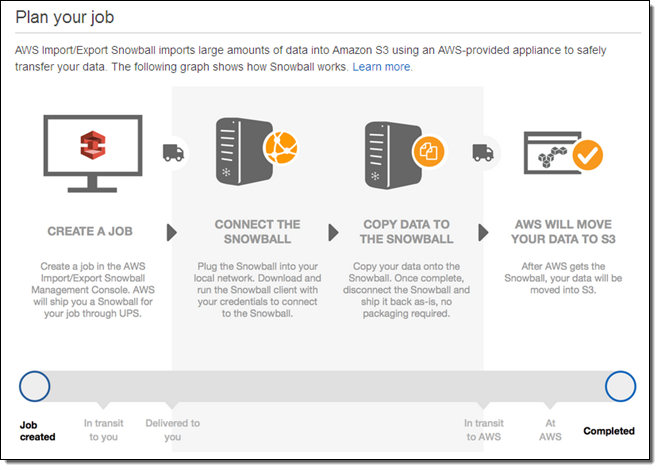

Once you receive a Snowball, you plug it in, connect it to your network, configure the IP address (you can use your own or the device can fetch one from your network using DHCP), and install the AWS Snowball client. Then you return to the Console to download the job manifest and a 25-character unlock code. With all of that info in hand, you start the appliance with one command:

$ snowball start -i DEVICE_IP -m PATH_TO_MANIFEST -u UNLOCK_CODE

At this point you are ready to copy data to the Snowball. The data will be 256-bit encrypted on the host and stored on the appliance in encrypted form. The appliance can be hosted on a private subnet with limited network access.
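If you script the setup step, it can help to assemble the start command from the three values in one place. The following is a minimal sketch in Python, assuming only the `-i`, `-m`, and `-u` flags shown above; the function name `build_start_command` and the sample values are hypothetical, and the sketch only builds and prints the command rather than running the client.

```python
import ipaddress
import shlex


def build_start_command(device_ip, manifest_path, unlock_code):
    """Return the argument list for `snowball start` with the flags shown above."""
    # Raises ValueError on a malformed address, before anything reaches the client.
    ipaddress.ip_address(device_ip)
    return [
        "snowball", "start",
        "-i", device_ip,
        "-m", manifest_path,
        "-u", unlock_code,
    ]


# Placeholder values (hypothetical); substitute the ones from your own job.
argv = build_start_command("192.0.2.10", "/tmp/job-manifest.bin", "UNLOCK_CODE")
print(shlex.join(argv))
```

Keeping the command as a list of arguments (rather than one string) avoids quoting problems if the manifest path contains spaces.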

From there you copy up to 50 terabytes of data to the Snowball, disconnect it (a shipping label will automatically appear on the E Ink display), and ship it back to us for ingestion. We’ll decrypt the data and copy it to the S3 bucket(s) that you specified when you made your request. Then we’ll sanitize the appliance in accordance with National Institute of Standards and Technology Special Publication 800-88 (Guidelines for Media Sanitization).
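To put the 50 terabyte capacity in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes decimal terabytes, a nominal T3 line rate of 44.736 Mbps, and a link that is fully utilized with no protocol overhead, so real network transfers would take longer. The function names are hypothetical.

```python
import math

APPLIANCE_CAPACITY_TB = 50      # per-appliance figure from the post
T3_BITS_PER_SECOND = 44.736e6   # nominal T3 line rate (assumed fully utilized)


def appliances_needed(dataset_tb):
    """Number of appliances required to hold the dataset."""
    return math.ceil(dataset_tb / APPLIANCE_CAPACITY_TB)


def days_over_link(dataset_tb, bits_per_second=T3_BITS_PER_SECOND):
    """Idealized days needed to push the dataset over a network link."""
    bits = dataset_tb * 1e12 * 8   # decimal terabytes to bits
    return bits / bits_per_second / 86_400


print(appliances_needed(120))        # 120 TB needs 3 appliances
print(round(days_over_link(50), 1))  # one appliance's worth of data over a T3
```

Under these assumptions, a single appliance's worth of data would occupy a T3 line for more than three months.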

At each step along the way, notifications are sent to an Amazon Simple Notification Service (Amazon SNS) topic and email address that you specify. You can use the SNS notifications to integrate the data import process into your own data migration workflow system.
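As an illustration of that integration, here is a minimal Python sketch that pulls the useful fields out of a notification delivered by SNS to an HTTP/S endpoint. The envelope fields (`Type`, `TopicArn`, `Subject`, `Message`, `Timestamp`) are the standard SNS ones; the function name and the sample message text are hypothetical, since the exact wording of Snowball status messages is not described here.

```python
import json


def summarize_notification(raw_body):
    """Extract the fields a workflow system would typically act on."""
    envelope = json.loads(raw_body)
    if envelope.get("Type") != "Notification":
        return None  # for example, a SubscriptionConfirmation request
    return {
        "topic": envelope.get("TopicArn"),
        "subject": envelope.get("Subject"),
        "message": envelope.get("Message"),
        "timestamp": envelope.get("Timestamp"),
    }


# Sample body with invented values, for illustration only.
sample = json.dumps({
    "Type": "Notification",
    "TopicArn": "arn:aws:sns:us-west-2:123456789012:import-photos",
    "Subject": "Job status changed",
    "Message": "Status text as sent by the service",
    "Timestamp": "2015-10-07T12:00:00.000Z",
})
print(summarize_notification(sample)["subject"])
```

A production endpoint should also verify the message signature and handle subscription confirmation before acting on a notification.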

Creating an Import Job

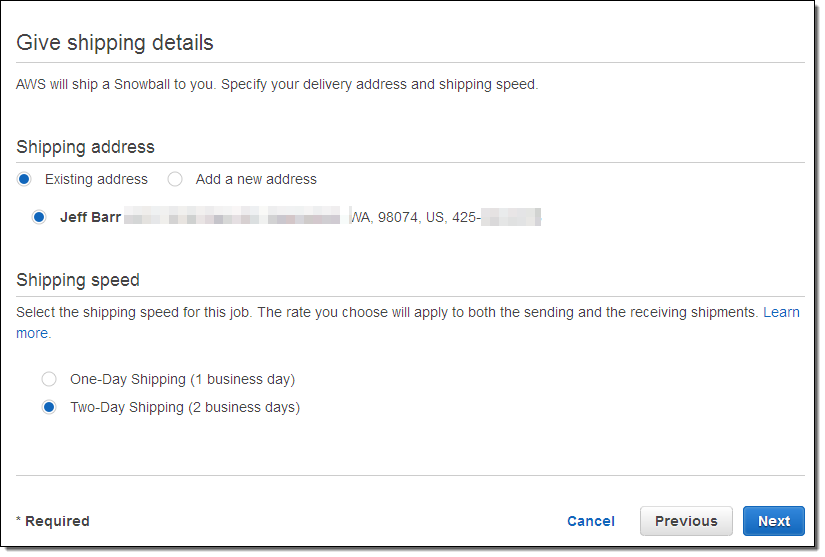

Let’s step through the process of creating an AWS Snowball import job from the AWS Management Console. I create a job by entering my name and address (or choosing an existing one if I have done this before):

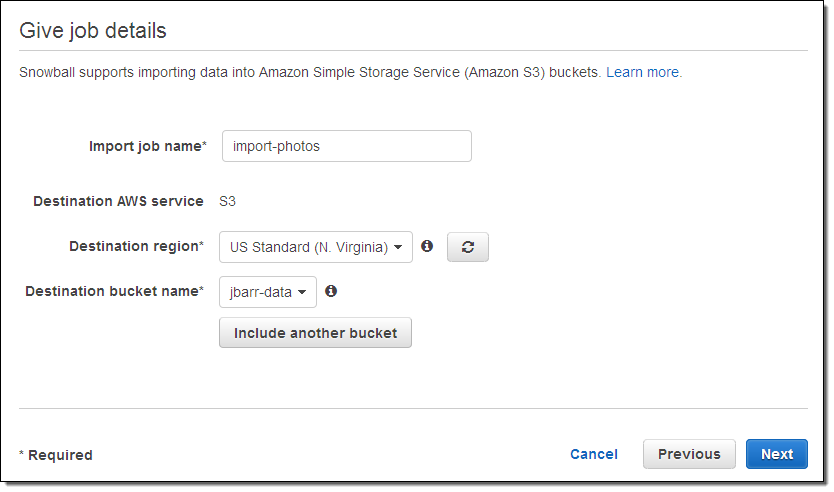

Then I give the job a name (mine is import-photos), and select a destination (an AWS region and one or more S3 buckets):

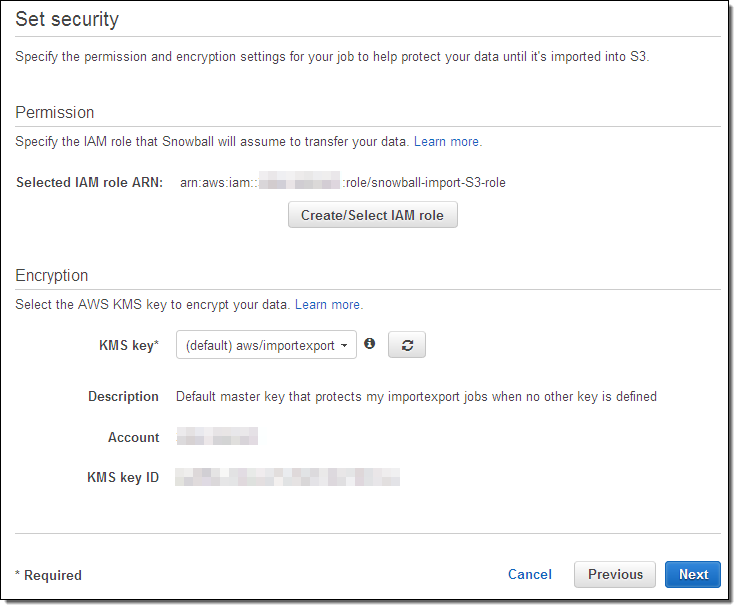

Next, I set up my security (an IAM role and a KMS key to encrypt the data):

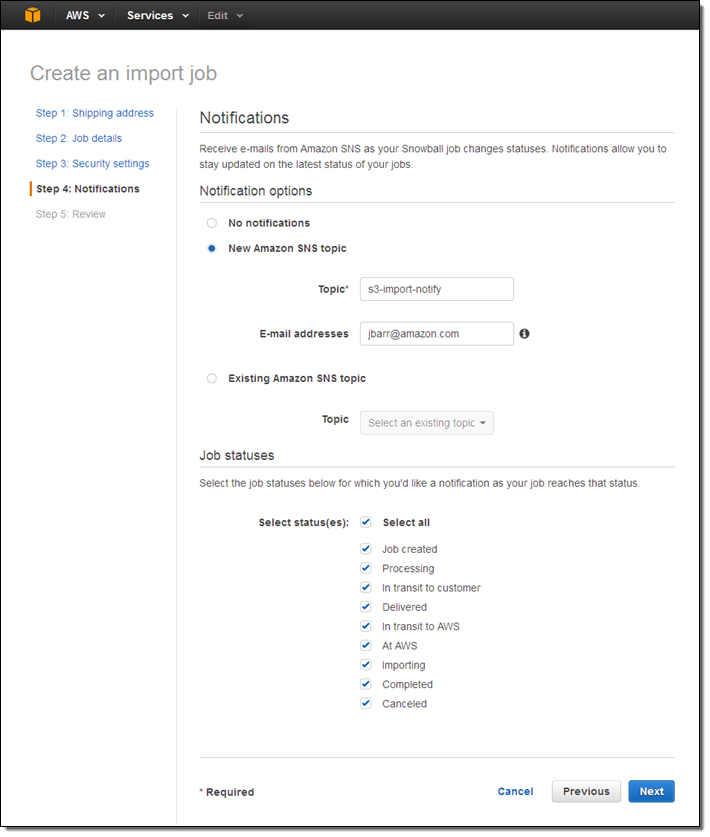

I’m almost ready! Now I choose the notification options. I can create a new SNS topic and create an email subscription to it, or I can use an existing topic. I can also choose the status changes that are of interest to me:

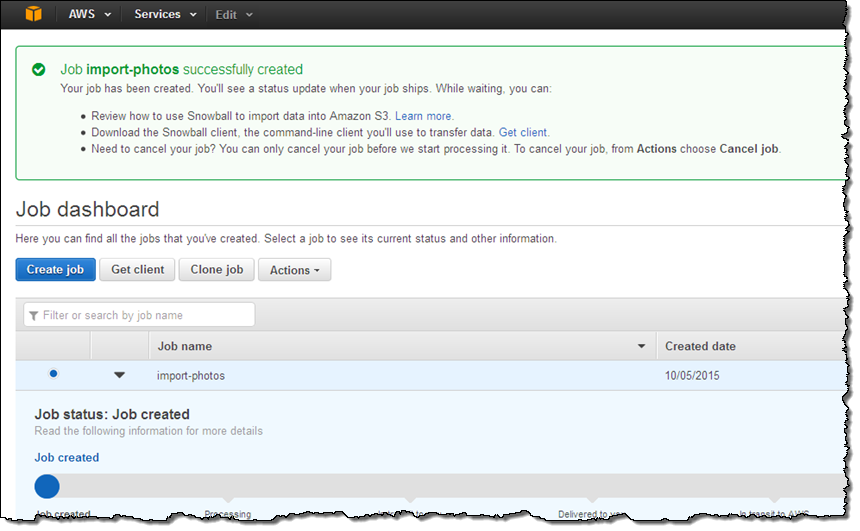

After I review and confirm my choices, the job becomes active:

The next step (which I didn’t have time for in the rush to re:Invent) would be to receive the appliance, install it and copy my data over, and ship it back.

In the Works

We are launching AWS Snowball with import functionality so that you can move data to the cloud. We are also aware of many interesting use cases that involve moving data the other way, including large-scale data distribution, and plan to address them in the future.

We are also working on other enhancements including continuous, GPS-powered chain-of-custody tracking.

Pricing and Availability

There is a usage charge of $200 per job, plus shipping charges that are based on your destination and the selected shipment method. As part of this charge, you have up to 10 days (starting the day after delivery) to copy your data to the appliance and ship it out. Extra days are $15 each.
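The usage charge is easy to estimate ahead of time. This short Python sketch applies the figures above; it leaves out shipping, which depends on your destination and shipment method, and the function name is hypothetical.

```python
JOB_CHARGE_USD = 200   # usage charge per job
INCLUDED_DAYS = 10     # on-site days covered by that charge
EXTRA_DAY_USD = 15     # charge for each additional day


def usage_charge(days_on_site):
    """Usage charge in USD for one job, excluding shipping."""
    extra_days = max(0, days_on_site - INCLUDED_DAYS)
    return JOB_CHARGE_USD + extra_days * EXTRA_DAY_USD


print(usage_charge(14))  # 4 extra days: 200 + 4 * 15 = 260
```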

You can import data to the US Standard and US West (Oregon) regions, with more on the way.

— Jeff;