AWS News Blog

NOvA uses AWS to Shed Light on Neutrino Mysteries

My colleague Sanjay Padhi wrote the guest post below in order to tell the story of how AWS powered an important scientific discovery.

— Jeff;

Ghostlike particles called neutrinos are everywhere: cosmic rays bombard us with them, and the sun bathes us in them. Though incredibly difficult to detect, they may hold the key to why there is more matter than antimatter around. Prominent physicist and Nobel laureate Enrico Fermi named this mysterious particle the “neutrino” (or “little neutral one”). It took time to realize that neutrinos have very unstable egos. They are able to switch or change their identities (“flavors”) as they travel through space, a phenomenon called neutrino oscillation. The 2015 Nobel Prize in Physics was awarded to Takaaki Kajita (Super-Kamiokande, Japan) and Arthur B. McDonald (SNO, Canada) for the discovery of neutrino oscillations.

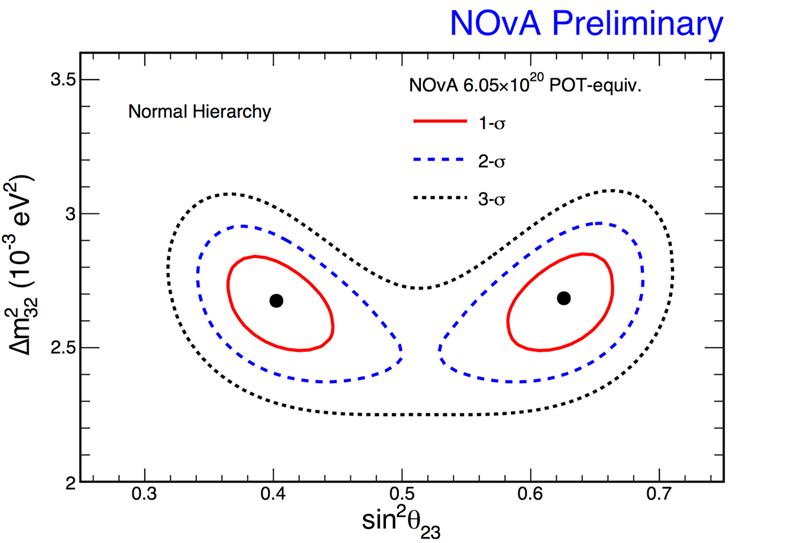

It is extremely exciting to announce that NOvA, the US flagship experiment at the intensity frontier, has unveiled new results, obtained with the help of AWS infrastructure, that shed light on our understanding of the quantum universe. The NOvA scientists see an intriguing preference for “non-maximal” mixing between neutrino identities. These results were presented at Imperial College in London, England, in the presence of researchers from around the world who gathered for the XXVII International Conference on Neutrino Physics and Astrophysics.

From Experiment to Understanding the Data

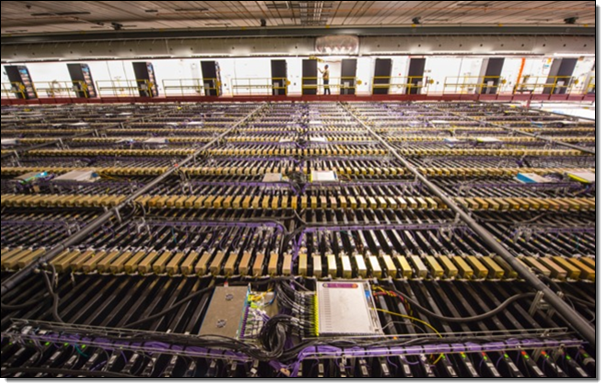

Deep in the woods at the Ash River Laboratory in northern Minnesota, close to the Canadian border, the NOvA experiment studies neutrino identities using the most intense particle beams sent from Fermilab, near Chicago, Illinois, passing through the Earth’s crust and traveling over 500 miles. As the neutrinos travel the distance between the laboratories, they undergo a fundamental change in their identities. These changes are carefully measured by the massive NOvA detector.

The detector stands 53 feet tall and 180 feet long and weighs 14,000 metric tons (over 30 million pounds). It acts as a gigantic digital camera to observe and capture the faint traces of light and energy that are left by particle interactions within the detector. The experiment captures two million “pictures” per second of these interactions. The pictures are analyzed by sophisticated software. The extreme sensitivity of the detector, electronics, and software allows individual neutrino interactions to be identified, classified, and measured.

The NOvA experiment is being conducted by more than 200 scientists from 41 universities and research institutions in seven countries. It is hosted by Fermilab, the leading U.S. laboratory for high-energy and particle physics.

The new result, as shown below, is not only consistent with the dramatic change in neutrino identity seen in previous measurements, but also carries an intriguing hint that the effect is not quite as large as the theoretically expected maximum. This result is an important part of NOvA’s overall physics goals, and takes a step towards solving the decades-long mystery of why there is more matter than antimatter in the universe we see.

AWS and NOvA

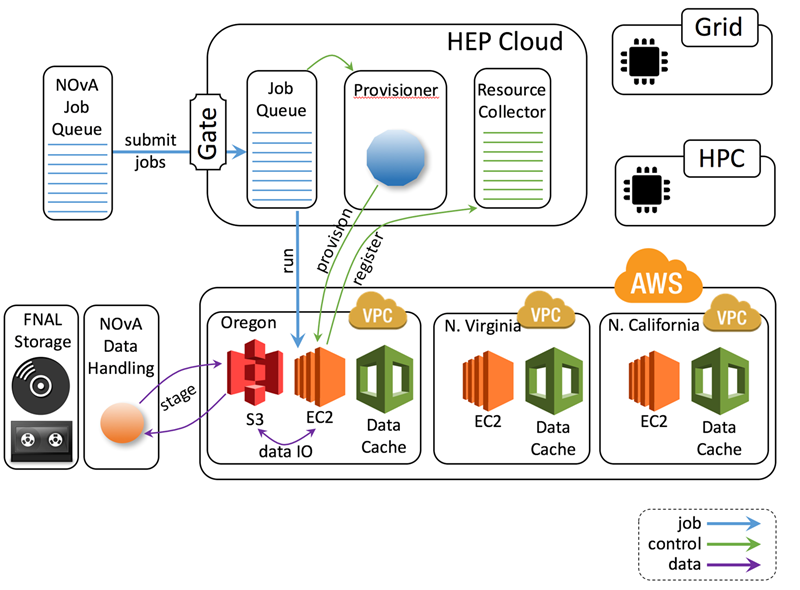

NOvA and other neutrino experiments hosted at Fermilab need to analyze over 10 PB of data each year as part of their ongoing physics analysis efforts. Historically, these analyses have been performed using a combination of dedicated on-premises computing centers located at Fermilab, collaborating universities, and grid federations. With the increase in data size, the complexity of the algorithms, and the demand for large-scale data processing, NOvA used AWS storage and compute infrastructure, via Fermilab’s HEP Cloud, to meet the peak demand for data- and IO-intensive processing for the analysis. The AWS Cloud Credits for Research Program helped immensely with the adoption of, and integration with, the AWS Cloud.

Amazon S3 for Data Buffering

NOvA ran three major physics analysis campaigns on AWS as part of the critical path to the physics results that are being presented today at the Neutrino 2016 conference. Each of these analysis campaigns featured a different degree of data intensiveness.

The analysis applications consumed up to 1 GB of input per core hour of analysis and produced 1 GB of physics output. They ran at the scale of 7,500 concurrent cores on the EC2 Spot Market, for a total of over 400,000 core hours, more than doubling their processing capacity during critical weeks of a multi-month processing campaign.
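To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It is not NOvA's tooling. The function names are hypothetical, and it assumes decimal units (1 GB = 10^9 bytes), every core reading at the quoted maximum of 1 GB per core hour, and all 7,500 cores running concurrently the whole time, so the results are bounds rather than measurements.

```python
# Figures quoted in the post; everything derived from them is an estimate.
CONCURRENT_CORES = 7_500
TOTAL_CORE_HOURS = 400_000
INPUT_GB_PER_CORE_HOUR = 1.0  # "up to" 1 GB, so treat this as an upper bound

SECONDS_PER_HOUR = 3600.0


def peak_input_rate_gb_per_s(cores: int, gb_per_core_hour: float) -> float:
    """Aggregate input rate (GB/s) if every core reads at the maximum quoted rate."""
    return cores * gb_per_core_hour / SECONDS_PER_HOUR


def wall_clock_hours(total_core_hours: float, cores: int) -> float:
    """Wall-clock hours if all cores ran concurrently for the entire campaign."""
    return total_core_hours / cores


if __name__ == "__main__":
    rate = peak_input_rate_gb_per_s(CONCURRENT_CORES, INPUT_GB_PER_CORE_HOUR)
    hours = wall_clock_hours(TOTAL_CORE_HOURS, CONCURRENT_CORES)
    print(f"Upper bound on aggregate input rate: {rate:.2f} GB/s")
    print(f"Wall-clock time at full scale: {hours:.1f} hours")
```

Under these assumptions the aggregate input demand tops out at roughly 2 GB/s, and 400,000 core hours corresponds to a little over two days of running at full scale.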

By buffering the input and output data on Amazon S3, NOvA was able to feed data to the analyses at peak bandwidths above 1 GB/s, thus minimizing IO wait and cost. The image above depicts NOvA’s submission system for sending their data-intensive workflows to the AWS infrastructure using HEP Cloud. Because the data management middleware is integrated with Amazon S3, the experiments’ scientific applications can continue to use their familiar interfaces for handling massive amounts of data.

Handling this large data volume was also made possible by the recently upgraded peering point between Amazon in Oregon and the Energy Sciences Network (ESnet). This peering point provides a 100-Gbps path for data transport between AWS and the national laboratories, and was used to transfer more than 100 TB of input and 150 TB of output between Fermilab and AWS at bandwidths ranging from 5 to 12 Gbps. With the strengthening of the Data Egress Waiver program for publicly funded ISPs, AWS is becoming an outstanding resource for data-intensive science.
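As a rough illustration of what those bandwidths mean in practice, the Python sketch below estimates how long each transfer would take at the low and high ends of the quoted range. The helper name is hypothetical, and the calculation assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bits per second) and a constant sustained rate with no protocol overhead, so treat the results as ballpark figures only.

```python
BITS_PER_BYTE = 8
BYTES_PER_TB = 1e12      # decimal terabyte (assumption)
BITS_PER_GBIT = 1e9      # decimal gigabit (assumption)
SECONDS_PER_HOUR = 3600.0


def transfer_hours(terabytes: float, gbps: float) -> float:
    """Hours needed to move `terabytes` of data at a sustained `gbps` rate."""
    if gbps <= 0:
        raise ValueError("bandwidth must be positive")
    total_bits = terabytes * BYTES_PER_TB * BITS_PER_BYTE
    seconds = total_bits / (gbps * BITS_PER_GBIT)
    return seconds / SECONDS_PER_HOUR


if __name__ == "__main__":
    # Volumes and bandwidth range quoted in the post.
    for label, volume_tb in (("input", 100), ("output", 150)):
        slow = transfer_hours(volume_tb, 5)
        fast = transfer_hours(volume_tb, 12)
        print(f"{volume_tb} TB of {label}: about {fast:.1f} to {slow:.1f} hours")
```

Even at the lower end of the range, each direction works out to a few days of sustained transfer at most, which is small compared with a multi-month processing campaign.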

Peter Shanahan (Co-spokesperson of the NOvA experiment) told me:

Our experience with Amazon Web Services shows its potential as a reliable way to meet our peak data processing needs at times of high demand.

I hope that you enjoyed this brief insight into the ways in which AWS is helping to explore the nature of our universe!

— Sanjay Padhi, Ph.D. – AWS Scientific Computing