AWS News Blog

New Mechanical Turk Resource Center


We’ve put together a Mechanical Turk Resource Center jam-packed with interesting and useful information.

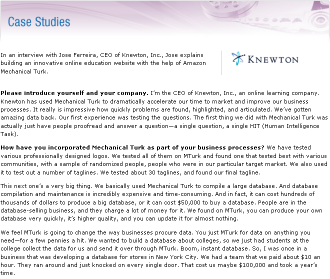

You can start by reading about How Mechanical Turk Can Help Your Business. Then you can read about how requesters are using Mechanical Turk on our customer interviews page. We’ve got some great stories from companies and schools including CastingWords, SnapMyLife, Channel Intelligence, Knewton, and the Stanford AI Lab.

We also added a guide to choosing the right tools, a getting-started checklist, and a best practices guide.

I just spent some time going through the case studies. There’s a lot of really good information and advice inside for anyone using or contemplating the use of Mechanical Turk.

In the first interview you will learn how and why CastingWords was founded, and how they use the Mechanical Turk workforce to transcribe audio and to grade the resulting work.

From there you can read about how SnapMyLife uses the same workforce to moderate uploaded pictures within 3 minutes, 24 hours a day, 7 days a week, at an estimated cost savings of 50% over other outsourcing methods.

Next, continue on to the Channel Intelligence interview and read about how they have integrated Mechanical Turk into a product classification system at a cost savings of 85%. I really like this quote from the interview:

Moving right along, the CEO of Knewton talks about how they used Mechanical Turk to accelerate their business processes and reduce their time to market. They needed a database of colleges:

Knewton also used Mechanical Turk to help them choose their company logo and the accompanying tagline. They used it to test their questions, and even to have their web site proofread and checked for link errors. They also paid people to spend over three hours taking one of their sample tests. The CEO notes that they had earmarked $70,000 for site QA, but ended up spending less than $10,000 (via Mechanical Turk) on QA, market research, product enhancements, and so forth.

In the last interview, Stanford University Ph.D. candidate Rion Snow talks about how he used Mechanical Turk to compare natural language annotations made by experts against similar work by the Turk workforce. As he says:

Rion says that Mechanical Turk allows his team to collect about 10,000 annotations per day. In previous projects he spent a lot of time simply finding and organizing a workforce, so this is a big step forward for him. He sees the Turk workforce as an “always-on, always-available army of workers.”

New and existing Mechanical Turk Requesters will find a lot of helpful information in the new Amazon Mechanical Turk Best Practices Guide. This 12-page document provides guidelines for planning, designing, and testing HITs. For example, it advises the use of a comment box on each HIT. This box gives Workers the ability to provide their thoughts on the tasks that they complete. The guide also recommends that Requester organizations designate a specific person as the Mechanical Turk administrator. This person can design the HITs, interact with the community, receive and respond to feedback, and process the results. There’s information about designing HITs and the associated instructions, setting an equitable reward for successful completion of a HIT, and lots more.

On a slightly lighter note, Aaron Koblin recently emailed me to make sure that I was aware of his newest Turk-powered project, the Bicycle Built for 2,000. Aaron and his colleague Daniel Massey collected 2,088 voice recordings (at a cost of 6 cents per recording) which were then assembled to form the finished product. Here’s a video of the process in action:

If you are doing or have done something unique, cool, and valuable using Mechanical Turk, we’d love to hear from you. Leave a comment or send us some email.

— Jeff;