AWS News Blog

CloudTrail Update – Capture and Process Amazon S3 Object-Level API Activity

I would like to show you how several different AWS services can be used together to address a challenge faced by many of our customers. Along the way I will introduce you to a new AWS CloudTrail feature that launches today and show you how you can use it in conjunction with CloudWatch Events.

The Challenge

Our customers store many different types of mission-critical data in Amazon Simple Storage Service (Amazon S3) and want to be able to track object-level activity on their data. While some of this activity is captured and stored in the S3 access logs, the level of detail is limited and log delivery can take several hours. Customers, particularly in financial services and other regulated industries, are asking for additional detail, delivered on a more timely basis. For example, they would like to be able to know when a particular IAM user accesses sensitive information stored in a specific part of an S3 bucket.

In order to meet the needs of these customers, we are now giving CloudTrail the power to capture object-level API activity on S3 objects, which we call Data events (the original CloudTrail events are now called Management events). Data events include “read” operations such as GET, HEAD, and Get Object ACL as well as “write” operations such as PUT and POST. The level of detail captured for these operations is intended to provide support for many types of security, auditing, governance, and compliance use cases. For example, it can be used to scan newly uploaded data for Personally Identifiable Information (PII), audit attempts to access data in a protected bucket, or to verify that the desired access policies are in effect.

Processing Object-Level API Activity

Putting this all together, we can easily set up a Lambda function that will take a custom action whenever an S3 operation takes place on any object within a selected bucket or a selected folder within a bucket.

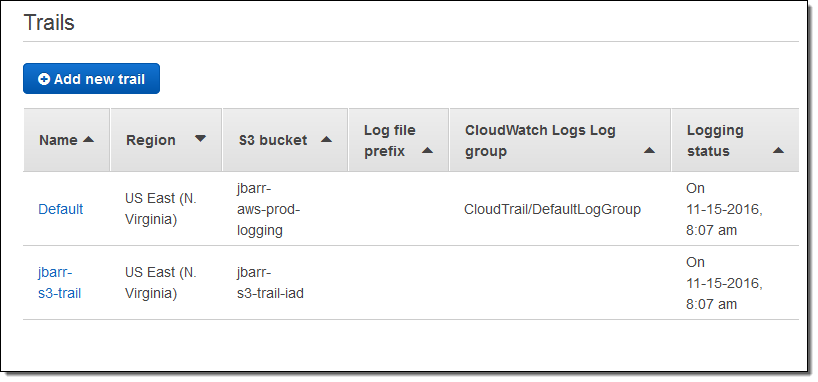

Before starting on this post, I created a new CloudTrail trail called jbarr-s3-trail:

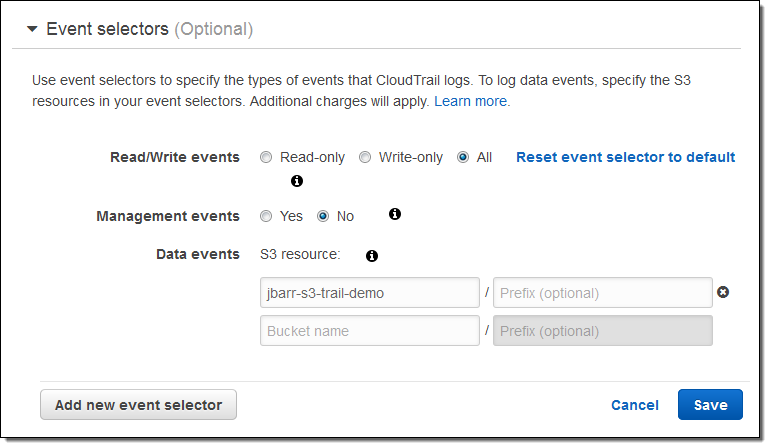

I want to use this trail to log object-level activity on one of my S3 buckets (jbarr-s3-trail-demo). In order to do this I need to add an event selector to the trail. The selector is specific to S3, and allows me to focus on logging the events that are of interest to me. Event selectors are a new CloudTrail feature and are being introduced as part of today’s launch, in case you were wondering.

I indicate that I want to log both read and write events, and specify the bucket of interest. I can limit the events to part of the bucket by specifying a prefix, and I can also specify multiple buckets. I can also control the logging of Management events.

CloudTrail supports up to 5 event selectors per trail. Each event selector can specify up to 50 S3 buckets and optional bucket prefixes.
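If you would rather configure the selector through the API than the console, here is a minimal Python sketch of what that could look like. It assumes the boto3 SDK and its CloudTrail `put_event_selectors` call. The helper names (`s3_object_arn`, `build_event_selector`, `apply_selectors`) are mine, not part of any AWS library, and the two limits are simply the values quoted above.

```python
# Limits quoted in this post; treat them as launch-time values.
MAX_SELECTORS_PER_TRAIL = 5
MAX_BUCKETS_PER_SELECTOR = 50


def s3_object_arn(bucket, prefix=""):
    """Build the ARN prefix that scopes data events to a bucket or folder."""
    # With an empty prefix the ARN ends in "/", which covers the whole bucket.
    return f"arn:aws:s3:::{bucket}/{prefix}"


def build_event_selector(targets, read_write_type="All", include_management=True):
    """Build one event selector; targets is a list of (bucket, prefix) pairs."""
    if read_write_type not in ("ReadOnly", "WriteOnly", "All"):
        raise ValueError(f"unsupported ReadWriteType: {read_write_type}")
    if len(targets) > MAX_BUCKETS_PER_SELECTOR:
        raise ValueError("too many buckets for one event selector")
    return {
        "ReadWriteType": read_write_type,
        "IncludeManagementEvents": include_management,
        "DataResources": [
            {
                "Type": "AWS::S3::Object",
                "Values": [s3_object_arn(b, p) for b, p in targets],
            }
        ],
    }


def apply_selectors(trail_name, selectors):
    """Send the selectors to CloudTrail (needs boto3 and AWS credentials)."""
    if len(selectors) > MAX_SELECTORS_PER_TRAIL:
        raise ValueError("too many event selectors for one trail")
    import boto3  # imported lazily so the builder works without boto3 installed

    client = boto3.client("cloudtrail")
    return client.put_event_selectors(TrailName=trail_name, EventSelectors=selectors)


# Log read and write events for every object in the demo bucket.
selector = build_event_selector([("jbarr-s3-trail-demo", "")])
```

Calling `apply_selectors("jbarr-s3-trail", [selector])` would then attach the selector to the trail. Passing a prefix such as `"reports/"` (a made-up folder name) in place of the empty string narrows logging to that part of the bucket.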

I set this up, opened my bucket in the S3 Console, uploaded a file, and took a look at one of the entries in the trail. Here’s what it looked like:

    {
      "eventVersion": "1.05",
      "userIdentity": {
        "type": "Root",
        "principalId": "99999999999",
        "arn": "arn:aws:iam::99999999999:root",
        "accountId": "99999999999",
        "username": "jbarr",
        "sessionContext": {
          "attributes": {
            "creationDate": "2016-11-15T17:55:17Z",
            "mfaAuthenticated": "false"
          }
        }
      },
      "eventTime": "2016-11-15T23:02:12Z",
      "eventSource": "s3.amazonaws.com",
      "eventName": "PutObject",
      "awsRegion": "us-east-1",
      "sourceIPAddress": "72.21.196.67",
      "userAgent": "[S3Console/0.4]",
      "requestParameters": {
        "X-Amz-Date": "20161115T230211Z",
        "bucketName": "jbarr-s3-trail-demo",
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "storageClass": "STANDARD",
        "cannedAcl": "private",
        "X-Amz-SignedHeaders": "Content-Type;Host;x-amz-acl;x-amz-storage-class",
        "X-Amz-Expires": "300",
        "key": "ie_sb_device_4.png"
      }
    }
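To show how an entry like this answers the "who touched what" question, here is a small Python sketch that pulls out the fields most audits care about. The function name `summarize_data_event` and the shape of the summary are illustrative choices of mine. The field names it reads are the ones visible in the entry above.

```python
def summarize_data_event(record):
    """Reduce one CloudTrail S3 data event to the fields an auditor needs."""
    identity = record.get("userIdentity") or {}
    params = record.get("requestParameters") or {}
    return {
        "who": identity.get("arn"),
        "action": record.get("eventName"),
        "bucket": params.get("bucketName"),
        "key": params.get("key"),
        "when": record.get("eventTime"),
        "source_ip": record.get("sourceIPAddress"),
    }


# A trimmed copy of the entry shown above.
sample_record = {
    "userIdentity": {"type": "Root", "arn": "arn:aws:iam::99999999999:root"},
    "eventTime": "2016-11-15T23:02:12Z",
    "eventSource": "s3.amazonaws.com",
    "eventName": "PutObject",
    "sourceIPAddress": "72.21.196.67",
    "requestParameters": {
        "bucketName": "jbarr-s3-trail-demo",
        "key": "ie_sb_device_4.png",
    },
}

summary = summarize_data_event(sample_record)
```

The `.get` calls with fallbacks are deliberate: not every event carries every field, so missing values come back as `None` instead of raising an error.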

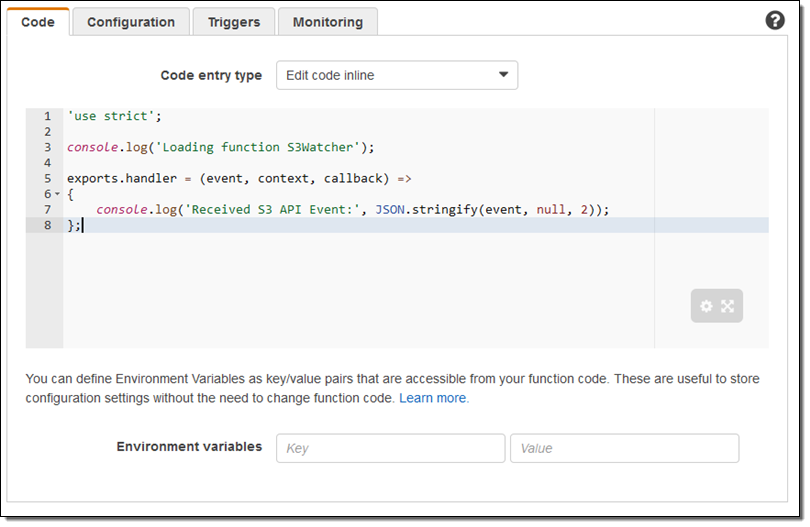

Then I create a simple Lambda function:

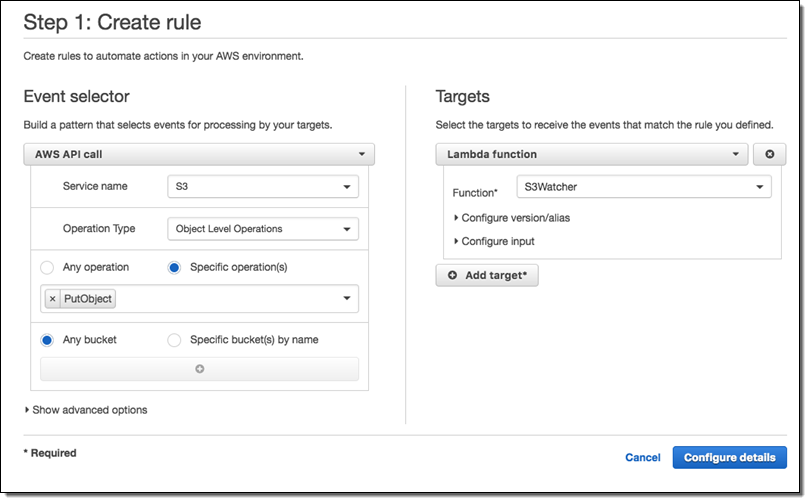
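The screenshot shows my own function. As a rough stand-in, a minimal Python handler might look like the sketch below. It relies on the fact that CloudWatch Events wraps the CloudTrail record in the `detail` field of the event it delivers. The handler name and the fields it logs are assumptions for illustration, not the exact code from the screenshot.

```python
import json


def lambda_handler(event, context):
    """Log the bucket, key, and caller for an S3 API call seen by CloudTrail."""
    # CloudWatch Events places the CloudTrail record under "detail".
    detail = event.get("detail") or {}
    params = detail.get("requestParameters") or {}
    identity = detail.get("userIdentity") or {}

    message = {
        "eventName": detail.get("eventName"),
        "bucket": params.get("bucketName"),
        "key": params.get("key"),
        "user": identity.get("arn"),
    }
    # Anything printed here ends up in the function's CloudWatch Logs stream.
    print(json.dumps(message))
    return message
```

A custom action such as scanning the new object or raising an alert would go where the `print` call is.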

Next, I create a CloudWatch Events rule that matches the API operation of interest (PutObject) and invokes my Lambda function (S3Watcher):

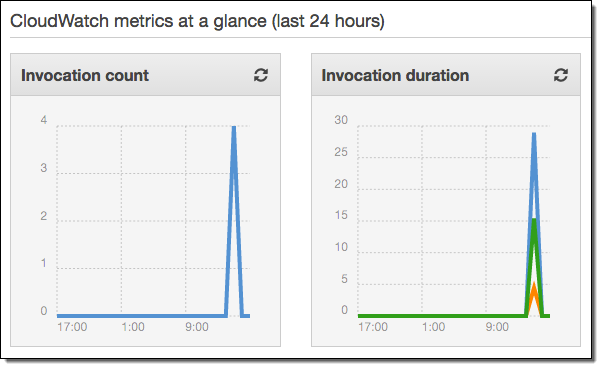
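For reference, here is a Python sketch of the kind of event pattern such a rule uses, plus how the rule could be created with boto3 (`put_rule` and `put_targets` on the `events` client). The helper names are mine, and `matches` is only a simplified local check that handles exact-value lists and nested objects. It is not the full CloudWatch Events matching logic.

```python
import json


def build_put_object_pattern(bucket):
    """Event pattern for PutObject calls on one bucket, delivered via CloudTrail."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["AWS API Call via CloudTrail"],
        "detail": {
            "eventSource": ["s3.amazonaws.com"],
            "eventName": ["PutObject"],
            "requestParameters": {"bucketName": [bucket]},
        },
    }


def matches(pattern, event):
    """Simplified local matcher: exact-value lists and nested objects only."""
    for field, expected in pattern.items():
        if field not in event:
            return False
        actual = event[field]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def create_rule(rule_name, bucket, function_arn):
    """Create the rule and point it at a Lambda function (needs boto3 and credentials)."""
    import boto3  # imported lazily so the pattern helpers work without boto3

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(build_put_object_pattern(bucket)),
    )
    events.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": function_arn}])


pattern = build_put_object_pattern("jbarr-s3-trail-demo")
```

When a rule is created through the API instead of the console, the Lambda function also needs a resource policy that allows CloudWatch Events to invoke it. That step is left out of this sketch.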

Now I upload some files to my bucket and check to see that my Lambda function has been invoked as expected:

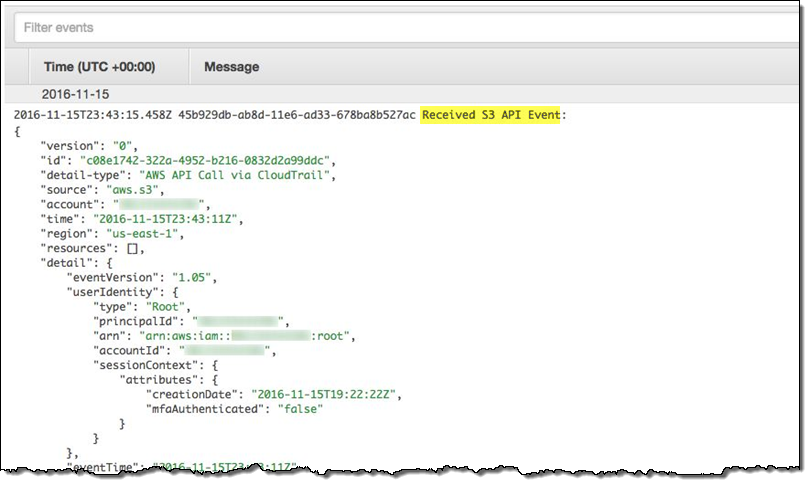

I can also find the CloudWatch Logs entry that contains the output from my Lambda function.

Pricing and Availability

Data events are recorded only for the S3 buckets that you specify, and are charged at the rate of $0.10 per 100,000 events. This feature is available in all commercial AWS Regions.
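To get a feel for what that rate means in practice, here is a tiny Python sketch of the arithmetic. The function name is made up, and the only input taken from this post is the $0.10 per 100,000 events rate. It covers the data event charge only and ignores any other costs such as S3 storage for the log files.

```python
from decimal import Decimal

# Rate quoted in this post: $0.10 per 100,000 data events.
PRICE_PER_100K_EVENTS = Decimal("0.10")


def estimate_data_event_cost(event_count):
    """Return the data event charge in dollars for a given number of events."""
    return PRICE_PER_100K_EVENTS * Decimal(event_count) / Decimal(100_000)


# Five million object-level events in a month works out to five dollars.
monthly_cost = estimate_data_event_cost(5_000_000)
```

`Decimal` is used instead of `float` so that the cents do not pick up binary rounding noise.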

— Jeff;