AWS News Blog

AWS at Trading Technologies

Imagine having the opportunity to rebuild a complex, legacy-filled platform from the ground up, moving it from the desktop to the cloud in the process. Imagine being able to replace a complex upgrade model that mandated the use of separate source code branches for each customer with a single, cloud-based application. Sound like a challenge?

The Situation

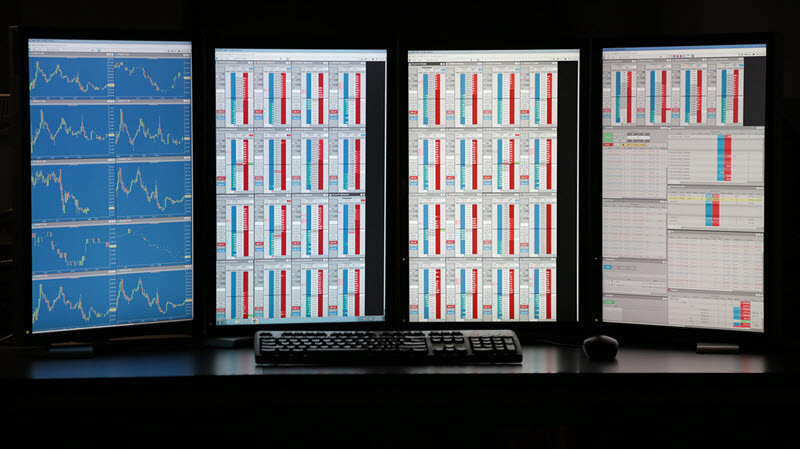

I recently spoke with Rick Lane of Trading Technologies to learn more about his experience doing just that. Rick and his team (initially 12 developers) decided to rebuild a monolithic desktop application that had been accumulating legacy functionality for close to 20 years. Built before the era of hosted software, this application allows derivative traders to enter orders and transmit them to a global network of data centers (also run by Trading Technologies) for high-speed execution. This is a business where microseconds count and delays in order execution can take the profit out of a trade.

Due to the sensitivity of the trades, each customer performed their own acceptance tests and security reviews. This resulted in long delays between release and deployment. Further, since each customer proceeded at their own pace, source code control became a nightmare.

Each of the data centers is situated in close proximity to a futures exchange in a particular city (New York, Chicago, Tokyo, and so forth). Low-latency, high-speed access to the exchange is a must for trading. In fact, Rick told me that some of his customers burn their trading logic and algorithms into custom chips, allowing them to make a trading decision in single-digit microseconds or less.

To the Cloud

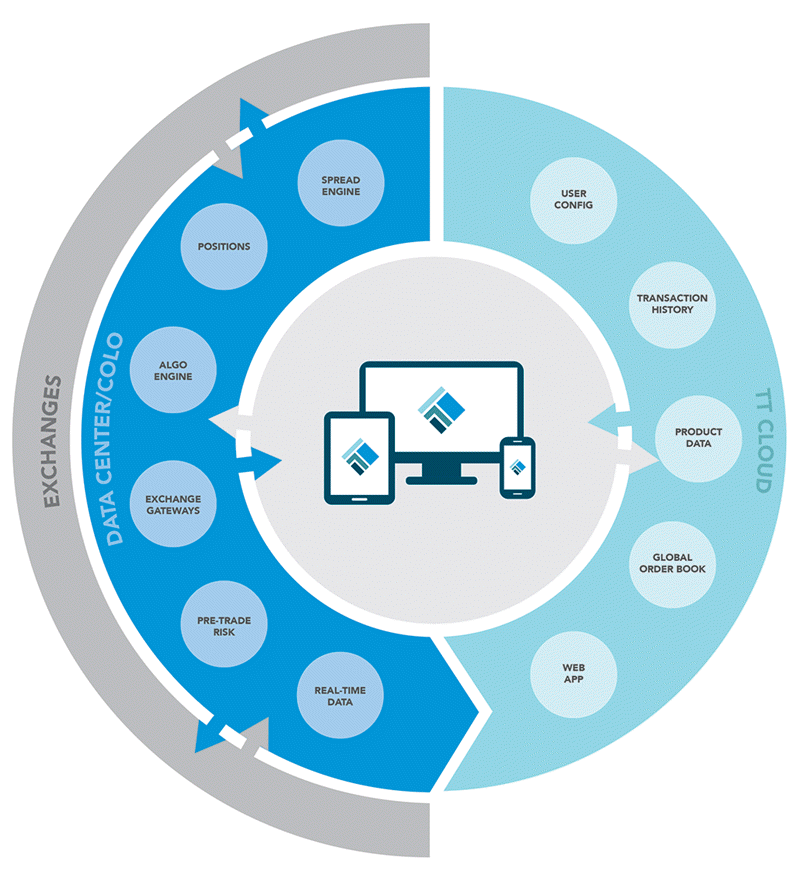

Faced with the need to provide customers with immediate access to new functionality and a more modern platform, Rick and company decided to start afresh and to design a modern, cloud-based system from scratch. They retained their existing colocation relationships with global exchanges for trade execution and used AWS to host the client side of the application.

Hosting on the cloud allows them to deliver upgrades to customers on a continuous basis. It also allows them to build a system that lets them remove and replace any operational component without any down time.

Sophisticated traders have absolutely no tolerance for availability issues of any sort. To this end, the application spans multiple Availability Zones across multiple AWS regions. It can even sustain the loss of an entire AWS region (an exceptionally unlikely occurrence, to be sure). Trading Technologies created an individual Amazon Virtual Private Cloud (Amazon VPC) for each of their data centers.

The finished app is hosted by AWS Elastic Beanstalk. It can run in the browser or on the desktop via the OpenFin HTML5 runtime.

Data Storage Challenges

Financial regulations require companies like Trading Technologies’ customers to retain an audit trail for trading activity that goes back up to seven years. Given that a single trader can create millions of auditable data points in a single day, this is an onerous requirement, but one that must be taken very seriously.

To meet this need, Trading Technologies stores all encrypted data in a global Cassandra cluster. They host several nodes in each Availability Zone and replicate data globally using software-based Vyatta routers. Enough redundancy is in place to maintain a quorum even if an entire region becomes inaccessible. Rick plans to look at Amazon DynamoDB as a possible store for some sorts of trading data. They are also planning to push the trading data into Amazon Redshift so that it can be accessed by way of SQL queries.
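To make the quorum claim concrete, here is a minimal sketch of the arithmetic behind it. Cassandra's QUORUM consistency level needs acknowledgements from a majority of all replicas, that is, floor(total replicas / 2) + 1. The region names and replication factors below are my own assumptions for illustration; Rick did not share the actual cluster layout.

```python
def quorum(replication_factors):
    """Replicas that must respond for a QUORUM operation: floor(total / 2) + 1."""
    return sum(replication_factors.values()) // 2 + 1


def survives_region_loss(replication_factors):
    """For each region, report whether a quorum remains if that region disappears.

    The quorum size is still computed against the full replica set, because
    the replicas in the lost region are unreachable, not removed.
    """
    needed = quorum(replication_factors)
    total = sum(replication_factors.values())
    return {
        region: (total - rf) >= needed
        for region, rf in replication_factors.items()
    }


# Hypothetical layouts: replicas per region (assumed, not from Trading Technologies).
three_regions = {"region-a": 3, "region-b": 3, "region-c": 3}
two_regions = {"region-a": 3, "region-b": 3}

print(quorum(three_regions))                # 5 of 9 replicas
print(survives_region_loss(three_regions))  # every region can be lost
print(survives_region_loss(two_regions))    # no region can be lost
```

With three equally sized regions, losing any one still leaves 6 of 9 replicas, which clears the quorum of 5. With only two regions, losing either leaves 3 of 6, one short of the quorum of 4, which is why spanning more than two regions matters for this kind of guarantee.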

Because this mountain of data is now available online in real time, traders can easily pull up their historical trading data from home or from their mobile devices. They can evaluate their automated trading strategies, which, Rick says, is “eye-opening” for them.

Customer Benefits

Trading Technologies’ trading customers see many benefits from this new system. They get access to new functionality more quickly, and in a continuous delivery model instead of the old cyclic model. Trading Technologies is able to provide customers with access to UAT (User Acceptance Test) instances of the application so that they can easily evaluate new functionality before rolling it out within their organizations.

Due to the more efficient development process and deployment model, the new system beats the old one on price! The multi-tenant, shared-resource model made possible by AWS has reduced the need for expensive, dedicated hardware in the Trading Technologies data centers.

As I mentioned earlier, the original platform contained a lot of legacy functionality and code. Unfortunately, there was no way for Trading Technologies to know which parts of their application were the most heavily used, and which ones were gathering dust. The new platform is heavily instrumented and provides information that can be used to guide the investment in new features and the deprecation of old ones.

Inside the App

The app makes use of a number of AWS services. Multiple services are hosted on Elastic Beanstalk; the front page of the application makes calls to 5 or 6 separate services. It also uses Amazon CloudWatch (for monitoring of cloud and hosted resources) and AWS CloudFormation (to set up stacks of AWS resources in rubber-stamp fashion).
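As a rough illustration of what "rubber-stamp fashion" can look like, the sketch below generates one CloudFormation template per data center from a single function, each declaring a VPC. The city names come from the data center locations mentioned earlier; the CIDR blocks, the `DataCenterVpc` logical ID, and the tag are hypothetical, and the real stacks surely contain far more than one resource.

```python
import json


def vpc_template(data_center, cidr_block):
    """Build a minimal CloudFormation template that declares a single VPC."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"VPC for the {data_center} data center (illustrative)",
        "Resources": {
            "DataCenterVpc": {  # hypothetical logical ID
                "Type": "AWS::EC2::VPC",
                "Properties": {
                    "CidrBlock": cidr_block,
                    "Tags": [{"Key": "DataCenter", "Value": data_center}],
                },
            }
        },
    }


# Assumed, non-overlapping address ranges; not the real network plan.
DATA_CENTERS = {
    "chicago": "10.0.0.0/16",
    "new-york": "10.1.0.0/16",
    "tokyo": "10.2.0.0/16",
}

# One template per data center, all stamped out from the same function.
templates = {
    name: json.dumps(vpc_template(name, cidr), indent=2)
    for name, cidr in DATA_CENTERS.items()
}

print(templates["tokyo"])
```

Because every stack comes from the same definition, adding another data center is a one-line change to the input rather than a hand-built environment.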

A new search function (powered by Amazon CloudSearch and backed by Amazon Relational Database Service (RDS) for MySQL) allows traders to quickly and easily locate the proper contract out of the millions that are available.

Inside, the app is written in a combination of C++, Java, and Scala. The front end uses HTML5 and JavaScript. Chef is used for deployment and Apache Zookeeper helps out with some clustering and failover tasks.

Here’s a big-picture look at the overall architecture:

Thanks, Rick

I would like to thank Rick for taking the time out of his busy schedule to share so much information with me. Congrats on the launch of your new trading platform!

— Jeff;