Category: CloudFormation

CloudWatch Logs Subscription Consumer + Elasticsearch + Kibana Dashboards

Many of the things that I blog about lately seem to involve interesting combinations of two or more AWS services and today’s post is no exception. Before I dig in, I’d like to briefly introduce all of the services that I plan to name-drop later in this post. Some of this will be review material, but I do like to make sure that every one of my posts makes sense to someone who knows little or nothing about AWS.

- Amazon Kinesis is a fully-managed service for processing real-time data streams. Read my introductory post, Amazon Kinesis – Real-Time Processing of Streaming Big Data to learn more.

- The Kinesis Connector Library helps you to connect Kinesis to other AWS and non-AWS services.

- AWS CloudFormation helps you to define, create, and manage a set of related AWS resources using a template.

- CloudWatch Logs allow you to store and monitor operating system, application, and custom log files. My post, Store and Monitor OS & Application Log Files with Amazon CloudWatch, will tell you a lot more about this feature.

- AWS Lambda runs your code (currently Node.js or Java) in response to events. It manages the compute resources for you so that you can focus on the code. Read AWS Lambda – Run Code in the Cloud to learn more. Many developers are using Lambda (often in conjunction with the new Amazon API Gateway) to build highly scalable microservices that are invoked many times per second.

- VPC Flow Logs give you access to network traffic related to a VPC, VPC subnet, or Elastic Network Interface. Once again, I have a post for this: VPC Flow Logs – Log and View Network Traffic Flows.

- Finally, AWS CloudTrail records AWS API calls for your account and delivers the log files to you. Of course, I have a post for that: AWS CloudTrail – Capture AWS API Activity.

The last three items above have an important attribute in common: each can create voluminous streams of event data that must be efficiently stored, indexed, and visualized in order to be of value.

Visualize Event Data

Today I would like to show you how you can use Kinesis and a new CloudWatch Logs Subscription Consumer to do just that. The subscription consumer is a specialized Kinesis stream reader. It comes with built-in connectors for Elasticsearch and S3, and can be extended to support other destinations.
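Before any connector can index an event, the consumer has to unpack the records that CloudWatch Logs writes to the Kinesis stream. According to the CloudWatch Logs subscription documentation, each record's data is gzip-compressed JSON with `messageType`, `logGroup`, `logStream`, and a `logEvents` array. Here is a minimal Node.js sketch of that decoding step. It assumes the record arrives base64-encoded (as `record.kinesis.data` does in a Lambda Kinesis event), the function name `decodeSubscriptionRecord` is hypothetical, and it is not the subscription consumer's own code.

```javascript
const zlib = require("zlib");

// Hypothetical helper: decode one Kinesis record produced by a CloudWatch Logs
// subscription. `base64Data` is base64 text wrapping gzip-compressed JSON.
function decodeSubscriptionRecord(base64Data) {
  const compressed = Buffer.from(base64Data, "base64");
  const json = zlib.gunzipSync(compressed).toString("utf8");
  const payload = JSON.parse(json);

  // CloudWatch Logs can also send CONTROL_MESSAGE records (used to check that
  // the destination is reachable); they carry no application log events.
  if (payload.messageType !== "DATA_MESSAGE") {
    return [];
  }

  // Flatten each log event and tag it with the group and stream it came from.
  return payload.logEvents.map((e) => ({
    logGroup: payload.logGroup,
    logStream: payload.logStream,
    id: e.id,
    timestamp: e.timestamp,
    message: e.message,
  }));
}
```

Each returned object is then a natural unit to hand to a destination connector such as Elasticsearch or S3.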

We have created a CloudFormation template that will launch an Elasticsearch cluster on EC2 (inside of a VPC created by the template), set up a log subscription consumer to route the event data into Elasticsearch, and provide a nice set of dashboards powered by the Kibana exploration and visualization tool. We have set up default dashboards for VPC Flow Logs, Lambda, and CloudTrail; you can customize them as needed or create new ones for your own CloudWatch Logs log groups.

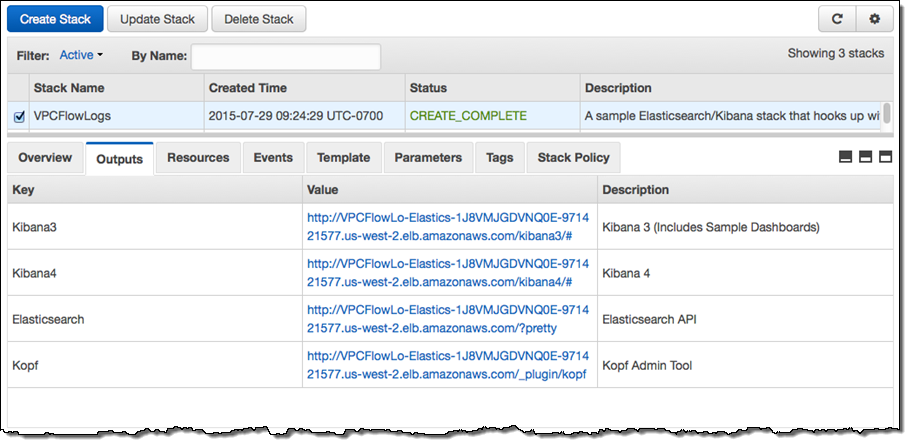

The stack takes about 10 minutes to create all of the needed resources. When it is ready, the Outputs tab in the CloudFormation Console will show you the URLs for the dashboards and administrative tools:

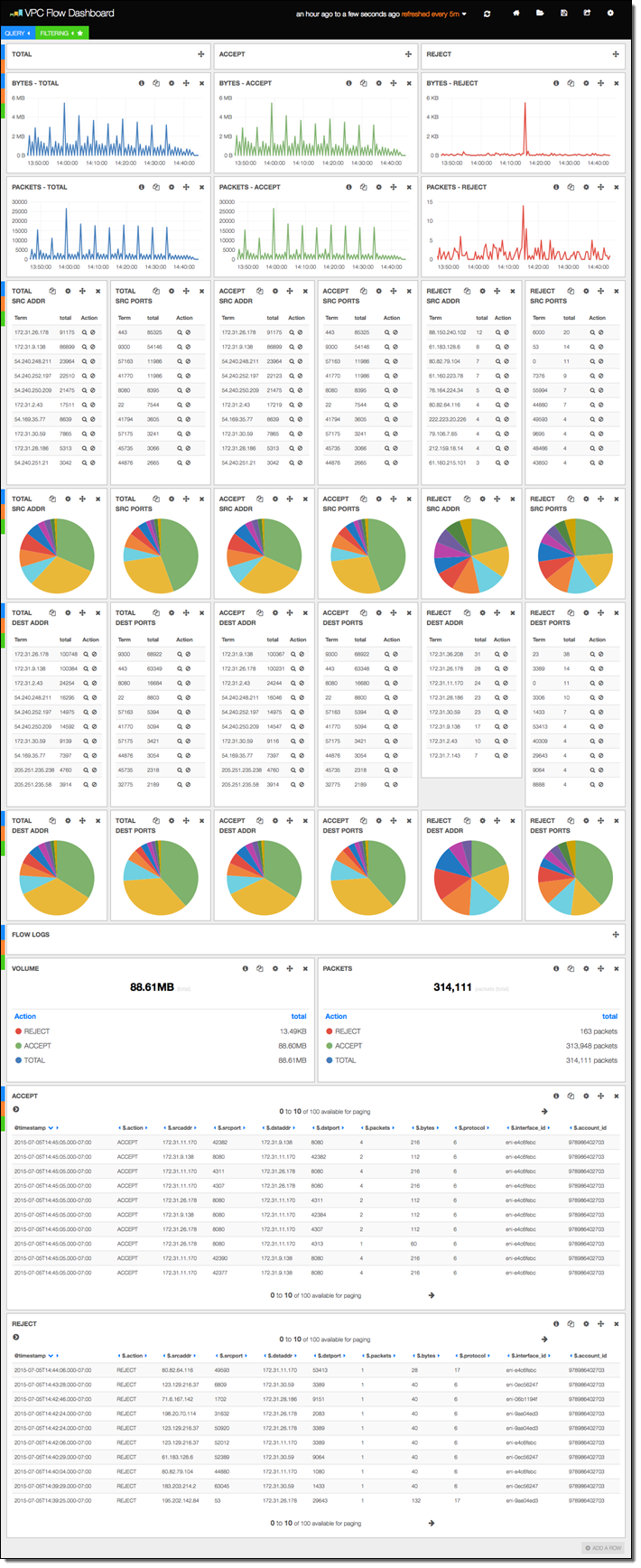
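If you would rather fetch those URLs from a script than from the console, the same values come back in a CloudFormation `DescribeStacks` response as `Stacks[0].Outputs`, a list of `OutputKey`/`OutputValue` pairs. The sketch below is a hypothetical helper, `stackOutputs`, that turns that list into a lookup table; any output key names you use with it are assumptions, so check the Outputs tab for the template's real ones.

```javascript
// Hypothetical helper: turn the Outputs list from a CloudFormation
// DescribeStacks response into a plain { OutputKey: OutputValue } map.
function stackOutputs(describeStacksResponse) {
  const stack = (describeStacksResponse.Stacks || [])[0];
  if (!stack) {
    throw new Error("DescribeStacks response contains no stacks");
  }
  const outputs = {};
  // A stack that declares no outputs simply yields an empty map.
  for (const output of stack.Outputs || []) {
    outputs[output.OutputKey] = output.OutputValue;
  }
  return outputs;
}
```

With the AWS SDK for JavaScript (v2), you would pass the `data` argument from `new AWS.CloudFormation().describeStacks({ StackName: ... }, callback)` to this helper, using whatever stack name you chose at launch.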

The stack includes versions 3 and 4 of Kibana, along with sample dashboards for the older version (if you want to use Kibana 4, you’ll need to do a little bit of manual configuration). The first sample dashboard shows the VPC Flow Logs. As you can see, it includes a considerable amount of information:

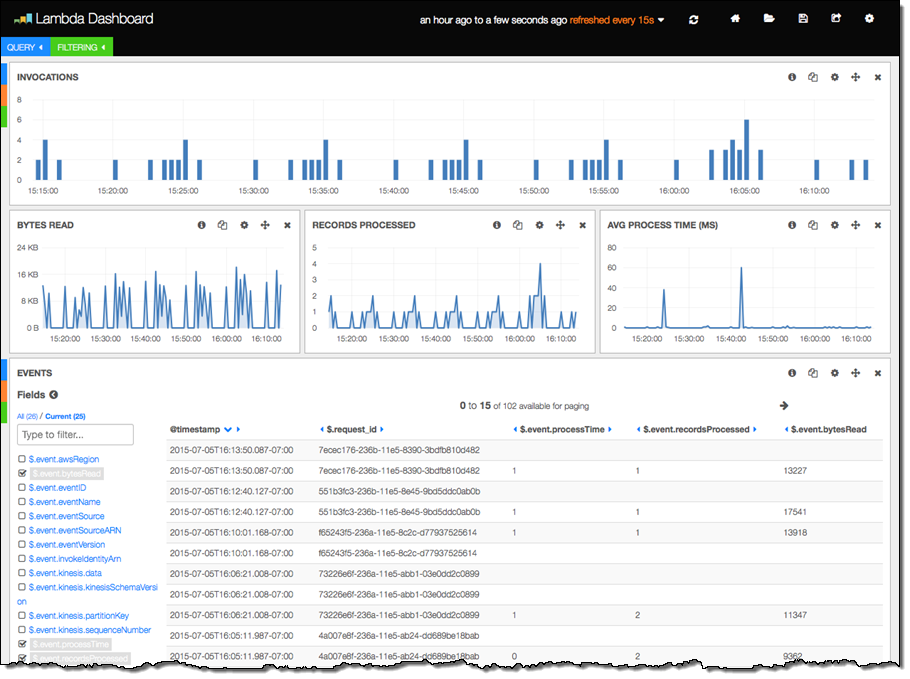

The next sample displays information about Lambda function invocations, augmented by data generated by the function itself:

The final three columns were produced by the following code in the Lambda function. The function processes a Kinesis stream and logs some information about each invocation:

```javascript
exports.handler = function(event, context) {
    var start = new Date().getTime();
    var bytesRead = 0;

    event.Records.forEach(function(record) {
        // Kinesis data is base64 encoded so decode here
        // (Buffer.from replaces the deprecated new Buffer constructor)
        var payload = Buffer.from(record.kinesis.data, 'base64').toString('ascii');
        bytesRead += payload.length;

        // log each record
        console.log(JSON.stringify(record, null, 2));
    });

    // collect statistics on the function's activity and performance
    console.log(JSON.stringify({
        "recordsProcessed": event.Records.length,
        "processTime": new Date().getTime() - start,
        "bytesRead": bytesRead
    }, null, 2));

    context.succeed("Successfully processed " + event.Records.length + " records.");
};
```

There’s a little bit of magic happening behind the scenes here! The subscription consumer noticed that the log entry was a valid JSON object and instructed Elasticsearch to index each of the values. This is cool, simple, and powerful; I’d advise you to take some time to study this design pattern and see if there are ways to use it in your own systems.
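The subscription consumer's actual detection logic isn't shown here, but the pattern is easy to approximate. The following is a hypothetical Node.js heuristic (`toIndexDocument` is an illustrative name): look for a JSON object inside each log message and, if one parses, promote its fields to top-level document fields that Elasticsearch can index individually; otherwise index the raw message. It searches for the first `{` because Lambda may prefix `console.log` output with a timestamp and request ID.

```javascript
// Hypothetical heuristic, not the consumer's real implementation.
function toIndexDocument(logEvent) {
  const doc = { timestamp: logEvent.timestamp, message: logEvent.message };
  const start = logEvent.message.indexOf("{");
  const end = logEvent.message.lastIndexOf("}");
  if (start === -1 || end <= start) {
    return doc; // no candidate JSON object: index the raw message only
  }
  try {
    const parsed = JSON.parse(logEvent.message.slice(start, end + 1));
    if (parsed !== null && typeof parsed === "object" && !Array.isArray(parsed)) {
      // Promote the JSON fields; the event's own timestamp and raw message
      // take precedence over any same-named JSON fields.
      return Object.assign({}, parsed, doc);
    }
  } catch (err) {
    // Looked like JSON but wasn't; fall through to the raw message.
  }
  return doc;
}
```

Applied to the statistics line logged by the function above, this yields a document with `recordsProcessed`, `processTime`, and `bytesRead` fields alongside the original message, which is the kind of document that lets Kibana chart those values.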

For more information on configuring and using this neat template, visit the CloudWatch Logs Subscription Consumer home page.

Consume the Consumer

You can use the CloudWatch Logs Subscription Consumer in your own applications. You can extend it to add support for other destinations by adding another connector (use the Elasticsearch and S3 connectors as examples and starting points).
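The Kinesis Connector Library introduced at the top of this post structures a connector as a pipeline: transform each record, filter out unwanted ones, buffer the survivors, and emit a batch to the destination. As a hypothetical illustration of the shape a new connector takes (the class and option names are invented for this sketch, and the real library's interfaces are Java), here is that pipeline in JavaScript:

```javascript
// Hypothetical connector skeleton: transform -> filter -> buffer -> emit.
class BufferedConnector {
  constructor({ transform, filter, emit, maxBatch }) {
    this.transform = transform; // raw record -> destination document
    this.filter = filter;       // document -> boolean (keep or drop)
    this.emit = emit;           // array of documents -> send to destination
    this.maxBatch = maxBatch;   // flush once this many documents are buffered
    this.buffer = [];
  }

  // Process one record; flush automatically when the batch is full.
  add(record) {
    const doc = this.transform(record);
    if (!this.filter(doc)) return;
    this.buffer.push(doc);
    if (this.buffer.length >= this.maxBatch) this.flush();
  }

  // Send whatever is buffered, then start a new batch.
  flush() {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.emit(batch);
  }
}
```

The `emit` callback is where a new destination would plug in; buffering keeps it from being called once per record.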

— Jeff;

New AWS Quick Start – SAP Business One, version for SAP HANA

We have added another AWS Quick Start Reference Deployment. The new SAP Business One, Version for SAP HANA document will show you how to get on the fast track to plan, deploy, and configure this enterprise resource planning (ERP) solution. It is powered by SAP HANA, SAP’s in-memory database.

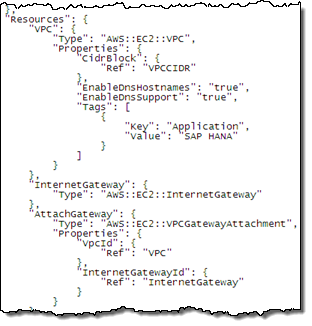

This deployment builds on our existing SAP HANA on AWS Quick Start. It makes use of Amazon Elastic Compute Cloud (EC2) and Amazon Virtual Private Cloud, and is launched via an AWS CloudFormation template.

The CloudFormation template creates the following resources, all within a new or existing VPC:

- A NAT instance in the public subnet to support inbound SSH access and outbound Internet access.

- A Microsoft Windows Server instance in the public subnet for downloading SAP HANA media and providing a remote desktop connection to the SAP Business One client instance.

- Security groups and IAM roles.

- An SAP HANA system installed with Amazon Elastic Block Store (EBS) volumes configured to meet HANA’s performance requirements.

- SAP Business One, version for SAP HANA, client and server components.

The document will help you to choose the appropriate EC2 instance types for both production and non-production scenarios. It also includes a comprehensive, step-by-step walk-through of the entire setup process. During the process, you will need to log in to the Windows instance using an RDP client in order to download and stage the SAP media.

After you make your choices, you simply launch the template, fill in the blanks, and sit back while the resources are created and configured. Exclusive of the media download (a manual step), this process will take about 90 minutes.

The quick start reference guide is available now and you can read it today!

— Jeff;

New Quick Start – Windows PowerShell Desired State Configuration (DSC) on AWS

PowerShell Desired State Configuration (DSC) is a powerful tool for system administrators. Introduced as part of Windows Management Framework 4.0, it helps to automate system setup and maintenance for Windows Server 2008 R2, Windows Server 2012 R2, Windows 7, Windows 8.1, and Linux environments. It can install or remove server roles and features and manage registry settings, environment variables, files, directories, services, and processes. It can also manage local users and groups, install and manage MSI and EXE packages, and run PowerShell scripts. DSC can discover the system configuration on a given instance, and it can also fix a configuration that has drifted away from the desired state.

We have just published a new Quick Start Reference Deployment to make it easier for you to take advantage of PowerShell Desired State Configuration in your AWS environment.

This new document will show you how to:

- Use AWS CloudFormation and PowerShell DSC to bootstrap your servers and applications from scratch.

- Deploy a highly available PowerShell DSC pull server environment on AWS.

- Detect and remedy configuration drift after your application stack has been deployed.
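DSC itself is PowerShell: each DSC resource exposes Get, Test, and Set functions, and the Local Configuration Manager uses Test to detect drift and Set to correct it. The JavaScript below is only a conceptual model of that test-and-set loop (the function `testAndSet` and the setting names are hypothetical), not how DSC is implemented:

```javascript
// Conceptual model of DSC's Test/Set cycle (hypothetical, not DSC's code).
// Compares a desired state with the current state, reports drift, and
// optionally corrects it in place.
function testAndSet(desired, current, { apply }) {
  const drifted = [];
  for (const [key, want] of Object.entries(desired)) {
    if (current[key] !== want) {
      drifted.push({ key, expected: want, actual: current[key] });
      if (apply) {
        current[key] = want; // "Set": bring the setting back in line
      }
    }
  }
  return drifted; // an empty array means "Test" passed: no drift
}
```

The `apply` flag plays roughly the role of the Local Configuration Manager's `ConfigurationMode` setting: `ApplyAndMonitor` only reports drift, while `ApplyAndAutoCorrect` also fixes it.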

This detailed (24-page) document contains all of the information that you will need to get started. The deployed pull server is robust and fault-tolerant; it includes a pair of web servers and Active Directory domain controllers. It can be accessed from on-premises devices and from instances running in the AWS Cloud.

— Jeff;

Rapidly Deploy SharePoint on AWS With New Deployment Guide and Templates

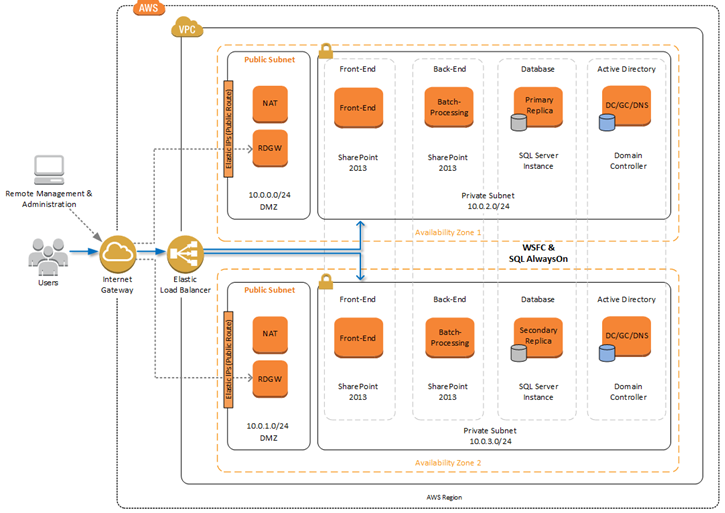

Building on top of our earlier work to bring Microsoft SharePoint to AWS, I am happy to announce that we have published a comprehensive Quick Start Reference and a set of AWS CloudFormation templates.

As part of today’s launch, you get a reference deployment, architectural guidance, and a fully automated way to deploy a production-ready installation of SharePoint with a couple of clicks in under an hour, all in true self-service form.

Before you run this template, you need to run our SQL Quick Start (also known as “Microsoft Windows Server Failover Clustering and SQL Server AlwaysOn Availability Groups”). It will set up Microsoft SQL Server 2012 or 2014 instances configured as a Windows Server Failover Cluster.

The template we are announcing today runs on top of this cluster. It deploys and configures all of the “moving parts,” including the Microsoft Active Directory Domain Services infrastructure and a SharePoint farm made up of multiple Amazon Elastic Compute Cloud (EC2) instances spread across several Availability Zones within an Amazon Virtual Private Cloud.

Reference Deployment Architecture

The Reference Deployment document will walk you through all of the steps necessary to end up with a highly available SharePoint Server 2013 environment! If you use the default parameters, you will end up with the following environment, all running in the AWS Cloud.

The reference deployment incorporates the best practices for SharePoint deployment and AWS security. It contains the following AWS components:

- An Amazon Virtual Private Cloud spanning multiple Availability Zones, containing a pair of private subnets and a DMZ on a pair of public subnets.

- An Internet Gateway to allow external connections to the public subnets.

- EC2 instances in the DMZ with support for RDP to allow for remote administration.

- An Elastic Load Balancer to route traffic to the EC2 instances running the SharePoint front-end.

- Additional EC2 instances to run the SharePoint back-end.

- Additional EC2 instances to run the Active Directory Domain Controller.

- Preconfigured VPC security groups and Network ACLs.

The document walks you through each component of the architecture and explains what it does and how it works. It also details an optional “Streamlined” deployment topology that can be appropriate for certain use cases, along with an “Office Web Apps” model that supports browser-based editing of Office documents that are stored in SharePoint libraries. There’s even an option to create an Intranet deployment that does not include an Internet-facing element.

The entire setup process is automated and needs almost no manual intervention. You will need to download SharePoint from a source that depends on your current licensing agreement with Microsoft. By default, the installation uses a trial key for deployment. In order to deploy a licensed version of SharePoint Server, you can use License Mobility Through Software Assurance.

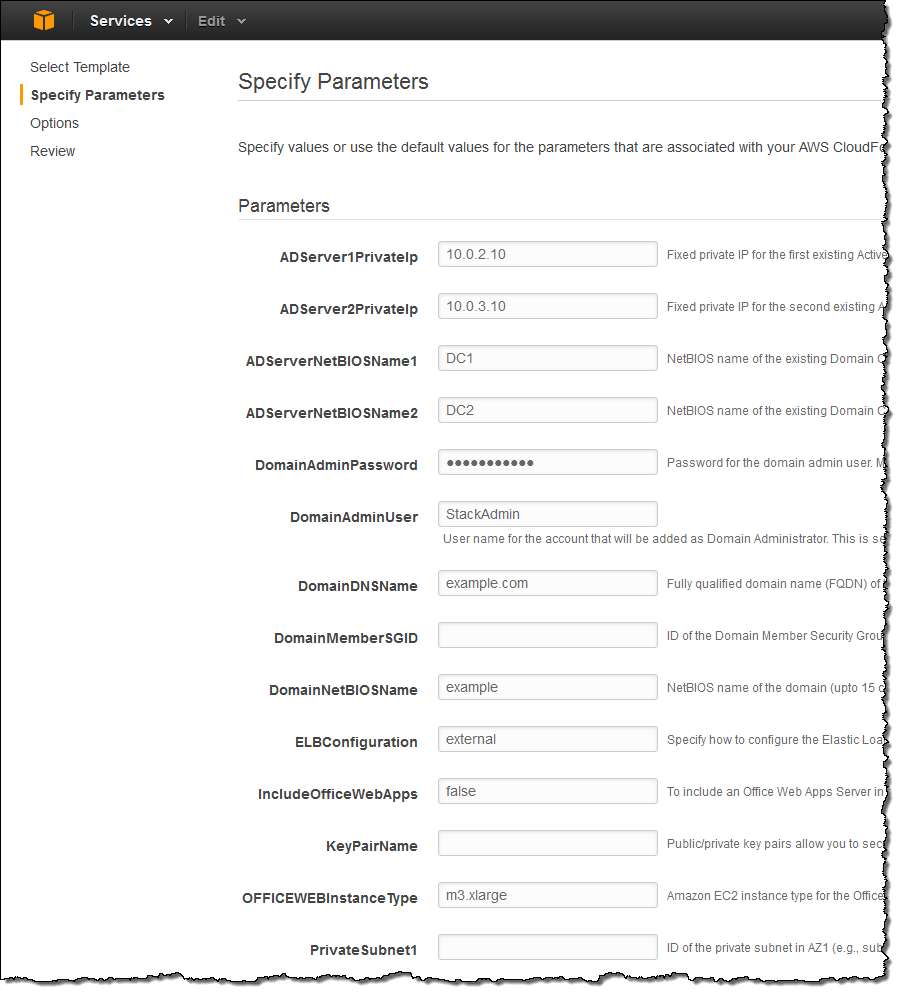

CloudFormation Template

The CloudFormation template will prompt you for all of the information needed to start the setup process:

The template is fairly complex (over 4600 lines of JSON) and is a good place to start when you are looking for examples of best practices for the use of CloudFormation to automate the instantiation of complex systems.

— Jeff;

Rapidly Deploy SAP HANA on AWS With New Deployment Guide and Templates

I have written about the SAP HANA database several times in the past year or two. Earlier this year we announced that it was certified for production deployment on AWS and that you can bring your existing SAP HANA licenses into the AWS cloud.

Today we are simplifying and automating the process of getting HANA up and running on AWS. We have published a comprehensive Quick Start Reference Deployment and a set of CloudFormation templates that provide you with a reference implementation, architectural guidance, and a fully automated way to deploy a production-ready instance of HANA in a single-node or multi-node configuration with a couple of clicks in under an hour, in true self-service form.

Reference Architecture

The Reference Deployment document will walk you through all of the steps. You can choose to create a single-node environment for non-production use or a multi-node environment that is ready for production workloads.

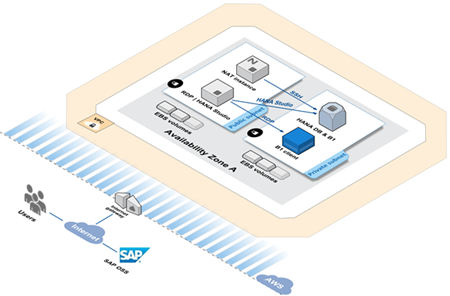

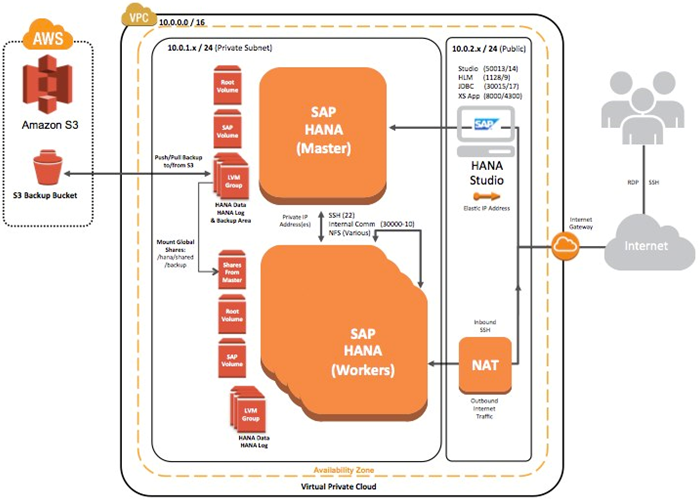

Here’s the entire multi-node architecture:

The reference implementation was designed to provide you with high performance, and it incorporates best practices for HANA deployment and AWS security. It contains the following AWS components:

- An Amazon Virtual Private Cloud (VPC) with public and private subnets.

- A NAT instance in the public subnet for outbound Internet connectivity and inbound SSH access.

- A Windows Server instance in the public subnet, preloaded with SAP HANA Studio and accessible via Remote Desktop.

- A single-node or multi-node SAP HANA virtual appliance configured according to SAP best practices on a supported OS (SUSE Linux).

- An AWS Identity and Access Management (IAM) role with fine-grained permissions.

- An S3 bucket for backups.

- Preconfigured VPC security groups.

The single-node and multi-node implementations use EC2’s r3.8xlarge instance type. Each of these instances is packed with 244 GiB of RAM and 32 virtual CPUs, all on the latest Intel Xeon Ivy Bridge processors.

Both implementations include 2.4 TB of EBS storage, configured as a dozen 200 GB volumes connected in RAID 0 fashion. Non-production environments use standard EBS volumes; production environments use Provisioned IOPS volumes, each capable of delivering 2000 IOPS.
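The storage figures above come from simple striping arithmetic. Assuming RAID 0 simply adds up the capacity and IOPS of its members (a theoretical ceiling: instance-level network or EBS bandwidth can cap real throughput lower, and RAID 0 offers no redundancy, so losing one volume loses the array), a quick check looks like this; `raid0Summary` is a hypothetical helper:

```javascript
// Back-of-the-envelope figures for a RAID 0 stripe of identical EBS volumes.
function raid0Summary(volumeCount, volumeSizeGB, iopsPerVolume) {
  return {
    capacityGB: volumeCount * volumeSizeGB, // striping adds capacity
    maxIops: volumeCount * iopsPerVolume,   // theoretical upper bound only
  };
}

// Production layout described above: a dozen 200 GB volumes at 2000 IOPS each.
const prod = raid0Summary(12, 200, 2000);
console.log(prod); // { capacityGB: 2400, maxIops: 24000 }
```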

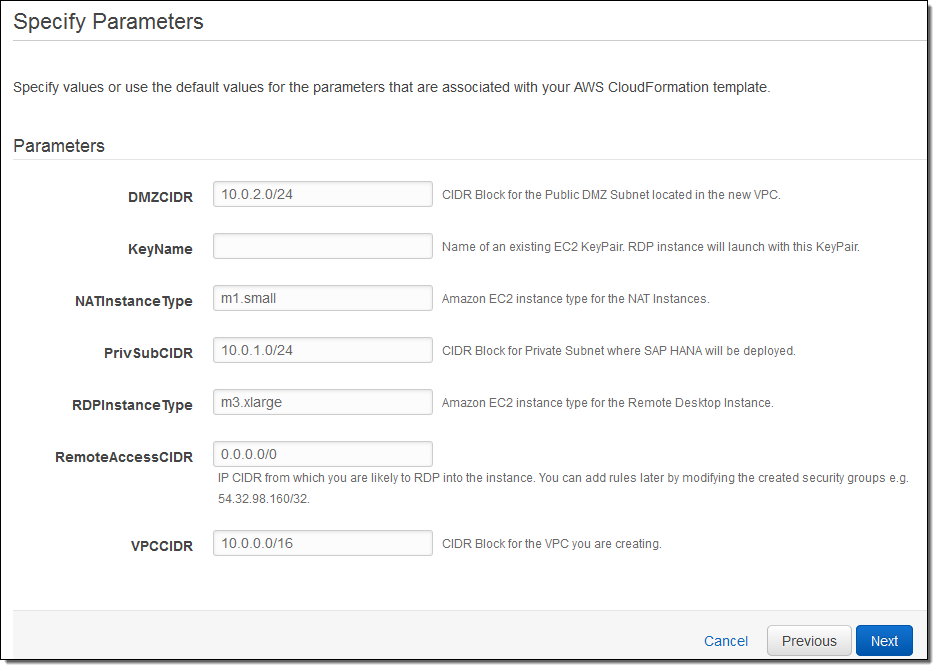

When you follow the instructions in the document and get to the point where you are ready to use the CloudFormation template, you will have the opportunity to supply all of the parameters that are needed to create the AWS resources and to set up SAP HANA:

For More Information

To learn more about this and other ways to get started on AWS with popular enterprise applications, visit our AWS Quick Start Reference Deployments page.

— Jeff;

AWS CloudTrail Update – Seven New Services & Support From CloudCheckr

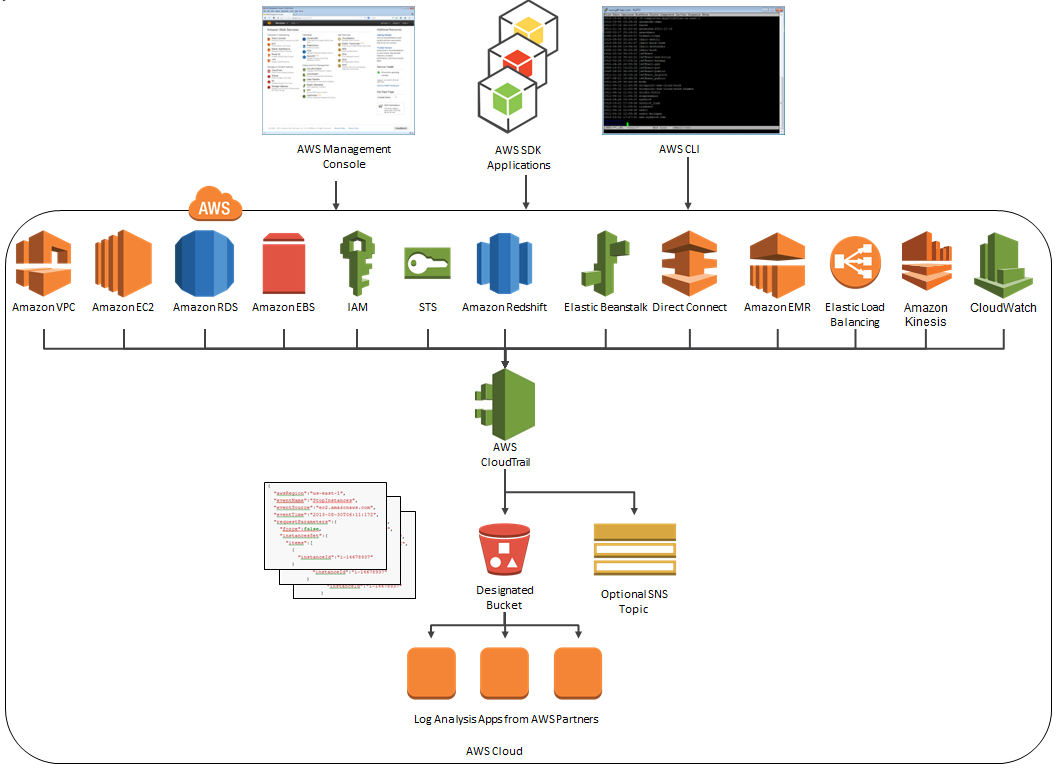

AWS CloudTrail records the API calls made in your AWS account and publishes the resulting log files to an Amazon S3 bucket in JSON format, with optional notification to an Amazon SNS topic each time a file is published.
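To make the format concrete: each log file CloudTrail delivers to S3 is a gzip-compressed JSON document whose top-level `Records` array holds one object per API call, with fields such as `eventSource`, `eventName`, `eventTime`, and `sourceIPAddress`. Here is a minimal Node.js sketch (the function name `countByService` is hypothetical) that counts calls per service in one downloaded log file:

```javascript
const zlib = require("zlib");

// Hypothetical helper: count API calls per service in one CloudTrail log file.
// `gzippedLogFile` is a Buffer holding the compressed file's bytes.
function countByService(gzippedLogFile) {
  const log = JSON.parse(zlib.gunzipSync(gzippedLogFile).toString("utf8"));
  const counts = {};
  for (const record of log.Records) {
    // eventSource names the service, for example "ec2.amazonaws.com".
    counts[record.eventSource] = (counts[record.eventSource] || 0) + 1;
  }
  return counts;
}
```

To try it on a file you have downloaded from your CloudTrail bucket, pass it the bytes returned by `fs.readFileSync`.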

Our customers use the log files generated by CloudTrail in many different ways. Popular use cases include operational troubleshooting, analysis of security incidents, and archival for compliance purposes. If you need to meet the requirements posed by ISO 27001, PCI DSS, or FedRAMP, be sure to read our new white paper, Security at Scale: Logging in AWS, to learn more.

Over the course of the last month or so, we have expanded CloudTrail with support for additional AWS services. I would also like to tell you about the work that AWS partner CloudCheckr has done to support CloudTrail.

New Services

At launch time, CloudTrail supported eight AWS services. We have added support for seven additional services over the past month or so. Here’s the full list:

- Amazon EC2

- Elastic Block Store (EBS)

- Virtual Private Cloud (VPC)

- Relational Database Service (RDS)

- Identity and Access Management (IAM)

- Security Token Service (STS)

- Redshift

- CloudTrail

- Elastic Beanstalk – New!

- Direct Connect – New!

- CloudFormation – New!

- Elastic MapReduce – New!

- Elastic Load Balancing – New!

- Kinesis – New!

- CloudWatch – New!

Here’s an updated version of the diagram that I published when we launched CloudTrail:

News From CloudCheckr

CloudCheckr (an AWS Partner) integrates with CloudTrail to provide visibility and actionable information for your AWS resources. You can use CloudCheckr to analyze, search, and understand changes to AWS resources and the API activity recorded by CloudTrail.

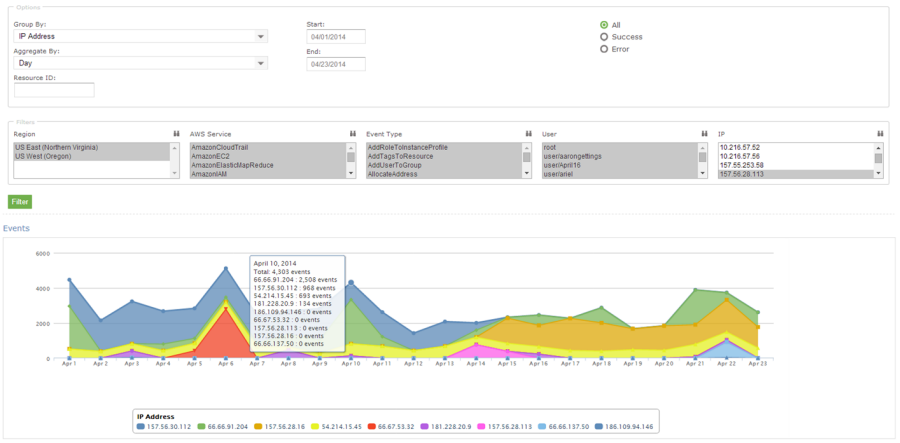

Let’s say that an AWS administrator needs to verify that a particular AWS account is not being accessed from outside a set of dedicated IP addresses. They can open the CloudTrail Events report, select the month of April, and group the results by IP address. This will display the following report:

As you can see, the administrator can use the report to identify all the IP addresses that are being used to access the AWS account. If any of the IP addresses were not on the list, the administrator could dig in further to determine the IAM user name being used, the calls being made, and so forth.
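This is not how CloudCheckr implements its report, but the same question can be asked of raw CloudTrail records. The following hypothetical sketch (`callsByIp`, the allowlist, and the month filter are all illustrative) groups one month's records by `sourceIPAddress`, collects the IAM user names and API calls seen from each address, and flags any address that is not on the allowlist:

```javascript
// Hypothetical sketch: group CloudTrail records by source IP for one month
// and flag addresses outside an allowlist. `month` is a "YYYY-MM" prefix.
function callsByIp(records, { month, allowedIps }) {
  const byIp = new Map();
  for (const r of records) {
    // eventTime is an ISO 8601 UTC string such as "2014-04-03T17:01:22Z".
    if (!r.eventTime.startsWith(month)) continue;
    if (!byIp.has(r.sourceIPAddress)) {
      byIp.set(r.sourceIPAddress, { count: 0, users: new Set(), calls: new Set() });
    }
    const entry = byIp.get(r.sourceIPAddress);
    entry.count += 1;
    // userName is present for IAM users; other identity types may lack it.
    if (r.userIdentity && r.userIdentity.userName) {
      entry.users.add(r.userIdentity.userName);
    }
    entry.calls.add(r.eventName);
  }
  const unexpected = [...byIp.keys()].filter((ip) => !allowedIps.includes(ip));
  return { byIp, unexpected };
}
```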

CloudCheckr is available in Freemium and Pro versions. You can try CloudCheckr Pro for 14 days at no charge. At the end of the evaluation period you can upgrade to the Pro version or stay with CloudCheckr Freemium.

— Jeff;