Category: Amazon RDS

Summer Startups: Sportaneous

Over the summer months, we’d like to share a few stories from startups around the world: what they are working on and how they are using the cloud to get things done. Today, we’re profiling Sportaneous from New York City!

The Story

I first learned about Sportaneous after reading about NYC BigApps, an application contest launched by the City of New York and organized by Mayor Bloomberg. Its goal is to reward apps that improve NYC by using public data sets released by the local government.

Sportaneous jumped at the opportunity to enter the contest because its applications already offered users a database of public sports facilities to choose from, many of them drawn from Parks & Recreation data. Sportaneous makes it easy for busy people to play sports or engage in group fitness activities. Through the Sportaneous mobile app and website, a person can quickly view all sports games and fitness activities that have been proposed in her surrounding neighborhoods. The user can choose to join whichever game best fits her schedule, location, and skill level. Alternatively, a Sportaneous user may spontaneously propose a game herself (for example, a beginner soccer game in Central Park three hours from now), which allows all Sportaneous users in the Manhattan area to join the game until the maximum number of players has been reached.

Of the more than 50 applications that entered the NYC BigApps competition, Sportaneous won two of the main awards: the Popular Vote Grand Prize, based on more than 9,500 people voting for their favorite app in the contest, and the Second Overall Grand Prize, voted on by a panel of very distinguished judges including Jack Dorsey (Co-founder, Twitter), Naveen Selvadurai (Co-founder, Foursquare) and prominent tech investors in NYC.

From the CEO

I spoke to Omar Haroun, CEO and Co-Founder at Sportaneous about how they got started and ended up using AWS. He shared a bit about their humble beginnings and how their growth plans continued to include AWS:

We initially bootstrapped the service using a single EC2 instance. We used an off-the-shelf AMI with a backing EBS volume, so we could fine-tune the machine’s configuration as our traffic grew. We wanted a low-cost, reliable hosting option which we knew had the ability to scale gracefully (and very quickly) when needed. EC2 allowed us to get up and running in a matter of hours, without forcing any design compromises which we’d later regret.

As traffic has grown and we’ve begun preparing for a public launch, we’re planning to move our MySQL databases to RDS and to take advantage of some additional ELB features (including SSL termination). RDS was also a no-brainer. We realize that any data-loss event would be devastating to our momentum, but we don’t have the resources for a full time DBA (or even a database expert). RDS and its cross-AZ replication takes a huge amount of pressure off of our shoulders.

Behind the Scenes

I asked Omar to tell me a bit about the technology behind Sportaneous. Here’s what he told me:

Our web app is written in Scala, using the very awesome Lift Framework. Our iPhone App is written in Objective-C. Both web app and iPhone app are thin clients on top of a backend implemented in Java, using the Hibernate persistence framework. Our EC2 boxes (which serve both our web app and our backend) run Jetty behind nginx.

He wrapped up on a very positive note:

Using EC2 with off the shelf AMIs, we went from zero to scalable, performant web app in under two hours.

The AWS Startup Challenge

We’re getting ready to launch this year’s edition of our own annual contest, the AWS Startup Challenge. You can sign up to get notified when we launch it, or you can follow @AWSStartups on Twitter.

— Jeff;

Now Available: Amazon RDS for Oracle Database

A few months ago I told you that we were planning to support Oracle Database 11g (Release 2) via the Relational Database Service (RDS). That support is now ready to go, and you can start launching Database Instances today.

We’ve set this up so that you have lots of choices and plenty of flexibility to ensure a good operational and licensing fit. Here are the choices that you get to make:

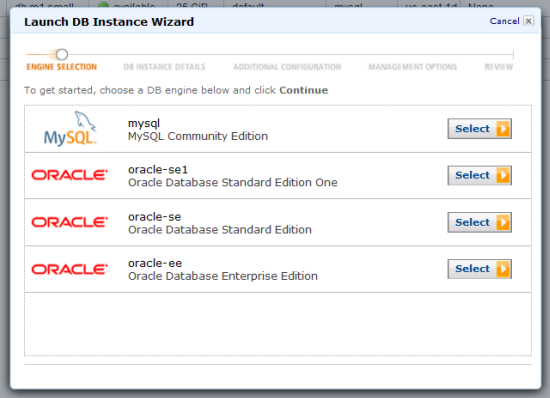

Database Edition

- Standard Edition One

- Standard Edition

- Enterprise Edition

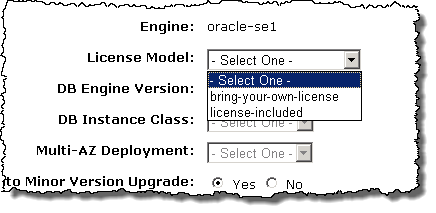

License Model

- Bring Your Own License (all editions)

- License Included (Standard Edition One)

Instance Class

- Small

- Large

- High-Memory Extra Large

- High-Memory Double Extra Large

- High-Memory Quadruple Extra Large

Pricing Options

- On-Demand DB Instances

- Reserved DB Instances

If you already have the appropriate Oracle Database licenses, you can simply bring them into the cloud. You can also launch Standard Edition One DB Instances with a license included at a slightly higher price.

The AWS Management Console‘s Launch DB Instance Wizard will lead you through all of the choices, starting from the database engine and edition:

If you select an Oracle database engine, you can choose your license model:

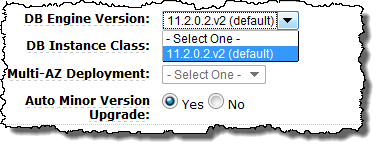

And your engine version:

All of the instances are preconfigured with a sensible set of default parameters that should be more than sufficient to get you started. You can use the RDS DB Parameter Groups to exercise additional control over a large number of database parameters.

As is generally the case with AWS, we’ll be adding even more functionality to this service in the months to come. Already on the drawing board is support for enhanced fault tolerance.

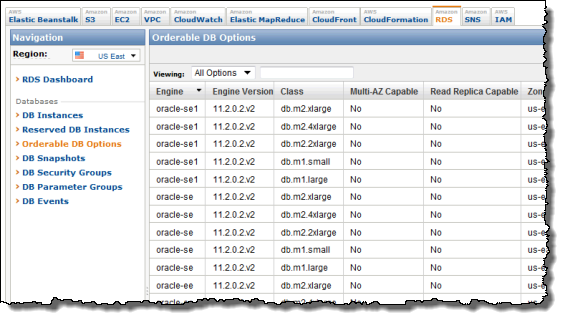

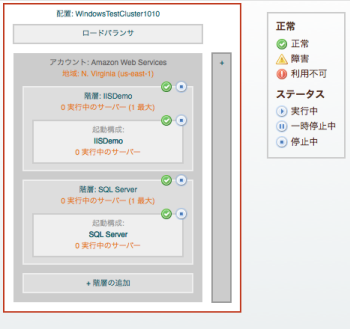

We have also added a new feature to the console that will make it easier for you to see the range of options available to you when you use RDS. Just click on Orderable DB Options to see which database engines, versions, instance classes, and options are available to you in each Region and Availability Zone:

You can get this information programmatically using the DescribeOrderableDBInstanceOptions function or the rds-describe-orderable-db-instance-options command.
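The following small Ruby sketch makes the shape of that information concrete by filtering orderable-option records by engine and Availability Zone. The records are hypothetical sample data. The field names are our own assumption, loosely modeled on what DescribeOrderableDBInstanceOptions reports. In practice you would fill them from that call or from rds-describe-orderable-db-instance-options.

```ruby
# Hypothetical sample records standing in for DescribeOrderableDBInstanceOptions
# results; the field names are assumptions, not the API's exact schema.
OrderableOption = Struct.new(:engine, :db_instance_class, :license_model, :availability_zones)

SAMPLE_OPTIONS = [
  OrderableOption.new("oracle-se1", "db.m1.small", "license-included", %w[us-east-1a us-east-1b]),
  OrderableOption.new("oracle-ee", "db.m1.large", "bring-your-own-license", %w[us-east-1a]),
  OrderableOption.new("mysql", "db.m1.small", "general-public-license", %w[us-east-1b])
].freeze

# Return the instance classes orderable for an engine in a given Availability Zone.
def orderable_classes(options, engine:, zone:)
  options.select { |o| o.engine == engine && o.availability_zones.include?(zone) }
         .map(&:db_instance_class)
end

p orderable_classes(SAMPLE_OPTIONS, engine: "oracle-se1", zone: "us-east-1b")  # ["db.m1.small"]
p orderable_classes(SAMPLE_OPTIONS, engine: "oracle-ee", zone: "us-east-1b")   # []
```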

When I demonstrate Amazon RDS to developers I get the sense that it really changes their conception of what a database is and how they can use it. They enter the room thinking of the database as a static entity, one that they create once in a great while, and they leave thinking that they can now create databases on a dynamic, as-needed basis for development, experimentation, testing, and the like.

Read more about Amazon RDS for Oracle Database!

— Jeff;

IAM: AWS Identity and Access Management – Now Generally Available

Our customers use AWS in many creative and innovative ways, continuously introducing new use cases and driving us to solve unexpected and complex problems. We are constantly improving our capabilities to make sure that we support a very wide variety of use cases and access patterns.

In particular, we want to make sure that developers at any level of experience and sophistication (from a student in a dorm room to an employee of a multinational corporation) have complete control over access to their AWS resources.

AWS Identity and Access Management (IAM) lets you manage users, groups of users, and access permissions for AWS services and resources. You can also use IAM to centrally manage security credentials such as access keys, passwords, and MFA devices. Effective immediately, IAM is now a Generally Available (GA) service!

Using IAM you can create users (representing a person, an organization, or an application, as desired) within an existing AWS Account. You can also group users to apply the same set of permissions. The groups can represent functional boundaries (development vs. test), organizational boundaries (main office vs. branch office), or job function (manager, tester, developer, or system administrator). Each user can be a member of multiple groups (branch office, manager). For maximum security, newly created users have no permissions. All permission control is accomplished using policy documents containing policy statements which grant or deny access to AWS service actions or resources.
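One way to picture how groups combine: a user's effective permissions are the union of the statements attached directly to the user and those attached to every group the user belongs to. A brand-new user with neither therefore has no permissions at all. The Ruby sketch below is a hypothetical in-memory model for illustration only. The struct names, users, and statements are ours, and it does not reflect how IAM is actually implemented.

```ruby
# Hypothetical in-memory model of IAM users and groups (illustration only).
Group = Struct.new(:name, :statements)
User  = Struct.new(:name, :statements, :groups)

# A user's effective statements: their own plus those of every group
# they belong to, with duplicates collapsed.
def effective_statements(user)
  (user.statements + user.groups.flat_map(&:statements)).uniq
end

managers = Group.new("manager", [{ "Effect" => "Allow", "Action" => "iam:ListUsers", "Resource" => "*" }])
branch   = Group.new("branch-office", [{ "Effect" => "Allow", "Action" => "s3:GetObject", "Resource" => "*" }])

alice = User.new("alice", [], [managers, branch])  # member of two groups
bob   = User.new("bob", [], [])                    # newly created: no permissions

p effective_statements(alice).map { |s| s["Action"] }  # ["iam:ListUsers", "s3:GetObject"]
p effective_statements(bob)                             # []
```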

IAM can be accessed through APIs, a command line interface, and through the AWS Management Console (I’ve written a separate post about the console support).

Here are some examples of the IAM command line interface in action. Let’s create a user that can create and manage other users and then use this user to create a couple of additional users. Then we’ll give one user the ability to access Amazon S3.

The iam-userlistbypath command lists all or some of the users in the account:

C:\>

There are no default users. Let’s create a user “jeff” using the iam-usercreate command (“/family” is a path that further qualifies the names):

AKIAIYPZGF3ABUC2LQELQ

bbYJpBtRQr635j8QVsCpstrLMS7Mf+ihsLabqEQL

The -k argument causes iam-usercreate to create an AWS access key (both the access key ID and the secret access key) for each user. These keys are the credentials needed to access data controlled by the account. They can be inserted into any application or tool that currently accepts an access key ID and a secret access key. Note: It is important to capture and save the secret access key at this point; there’s no way to retrieve it if you lose it (you can create a new set of credentials if necessary).

We can use iam-userlistbypath to verify that we now have one user:

arn:aws:iam::889279108296:user/family/jeff

However, user “jeff” has no access because we have not granted him any permissions. The iam-useraddpolicy command is used to add permissions to a user. The iam-groupaddpolicy command can be used to do the same for a group. Let’s add a policy that gives me (user “jeff”) permission to use the IAM APIs on users under the “/app” path. I might not be the only user in my account that should have this permission so I’ll start by creating a group and granting the permissions to the group and then add “jeff” to the group.
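A policy along these lines could express that permission. This minimal Ruby sketch rests on several assumptions. It builds the JSON with the 2008-10-17 policy version used elsewhere in this post and reuses the account number from the ARNs above. It also assumes that a Resource of arn:aws:iam::ACCOUNT:user/app/* limits the IAM actions to users under the /app path.

```ruby
require "json"

ACCOUNT_ID = "889279108296"  # account number as shown in the ARNs in this post

# Build a policy document that allows all IAM actions, but only on users
# whose path begins with /app/ (assumed resource ARN pattern).
def app_user_admin_policy(account_id)
  {
    "Version" => "2008-10-17",
    "Statement" => [
      {
        "Effect"   => "Allow",
        "Action"   => ["iam:*"],
        "Resource" => ["arn:aws:iam::#{account_id}:user/app/*"]
      }
    ]
  }
end

policy_json = JSON.generate(app_user_admin_policy(ACCOUNT_ID))
puts policy_json
```

Granting this to a group rather than directly to “jeff” means any other administrator added to the group later picks up the same permission.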

I (identifying myself as user “jeff” using the credentials that I just created) can now create and manage users under the “/app” path. Let’s create users for two of my applications (“syndic8” and “backup”) using “/app” as the path. I can use the same command that I used to create user “jeff”:

kgRiohPeBGyY6iDx7qzqSzCyrang6YUo67etcGat

iXdFDaA15VUImTo2MrmErSvTloTeK4ERNIESw78R

I can list only the application users I created by providing a path argument to iam-userlistbypath:

arn:aws:iam::889279108296:user/app/syndic8

Neither “backup” nor “syndic8” has any permissions yet. I can use the access keys for user “jeff” to grant the “backup” user permission to use all of the S3 APIs on any of my S3 resources:

This policy allows the user named “backup” to use all of the S3 APIs on any of my S3 resources, but not to access any other AWS service that my AWS Account has subscribed to.

The iam-userlistpolicies command displays the policies associated with a user; the -v option displays the contents of each policy:

{"Version":"2008-10-17","Statement":[{"Effect":"Allow","Action":["s3:*"],"Resource":["arn:aws:s3:::*"]}]}
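To see why the S3 policy listed above admits S3 calls but nothing else, here is a deliberately simplified Ruby evaluator. A request is denied by default, an explicit Deny beats any Allow, and * in an action or resource acts as a wildcard. The sketch ignores conditions, case-insensitive action matching, and the rest of IAM's real evaluation logic. The bucket and queue ARNs in the usage lines are hypothetical, and the policy is written with straight quotes and the arn:aws:s3:::* resource form.

```ruby
require "json"

# A copy of the S3 policy listed above, with straight quotes.
POLICY = JSON.parse('{"Version":"2008-10-17","Statement":[{"Effect":"Allow","Action":["s3:*"],"Resource":["arn:aws:s3:::*"]}]}')

# Treat "*" in an IAM-style pattern as "any sequence of characters".
def pattern_match?(pattern, value)
  body = pattern.split("*", -1).map { |part| Regexp.escape(part) }.join(".*")
  Regexp.new("\\A#{body}\\z").match?(value)
end

# Simplified decision: an explicit Deny wins, then any Allow, else implicit deny.
def allowed?(policy, action, resource)
  matching = policy["Statement"].select do |stmt|
    Array(stmt["Action"]).any? { |a| pattern_match?(a, action) } &&
      Array(stmt["Resource"]).any? { |r| pattern_match?(r, resource) }
  end
  return false if matching.any? { |stmt| stmt["Effect"] == "Deny" }
  matching.any? { |stmt| stmt["Effect"] == "Allow" }
end

puts allowed?(POLICY, "s3:PutObject", "arn:aws:s3:::my-backups/db.tar.gz")         # true
puts allowed?(POLICY, "sqs:SendMessage", "arn:aws:sqs:us-east-1:889279108296:jobs")  # false
```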

So, by giving my user (“jeff”) the appropriate privileges, I can minimize the use of my AWS Account credentials for access to AWS services.

You can think of the AWS Account as you would think about the Unix root (superuser) account. To get full value from IAM you should start using it when you are the only developer and you only have one application, adding users, groups, and policies as your environment becomes more complex. You can protect the AWS Account using an MFA device, and you should always sign your AWS calls using the access keys from a particular user. Once you have fully adopted IAM there should be no reason to use the AWS Account’s credentials to make a call to AWS.

There are a number of other commands (fully documented in the IAM CLI Reference). Like all of the other AWS command-line tools, the IAM tools make use of the IAM APIs, all of which are documented in the IAM API Reference.

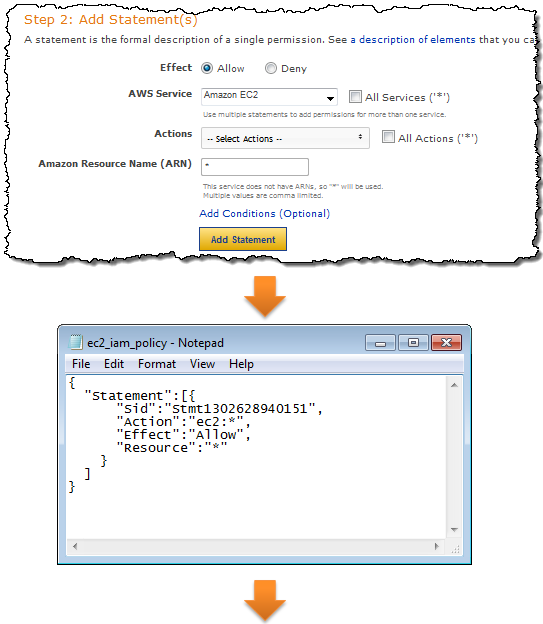

The AWS Policy Generator can be used to create policies for use with the IAM command line tools. After the policy is created it must be uploaded — use iam-useruploadpolicy instead of iam-useraddpolicy:

IAM controls access to each service in an appropriate way. You can control access to the actions (API functions) of any supported service. You can also control access to IAM, SimpleDB, SQS, S3, SNS, and Route 53 resources. The integration is done in a seamless fashion; all of the existing APIs continue to work as expected (subject, of course, to the permissions established by the use of IAM) and there is no need to change any of the application code. You may decide to create a unique set of credentials for each application using IAM. If you do this, you’ll need to embed the new credentials in each such application.

IAM currently integrates with Amazon EC2, Amazon RDS, Amazon S3, Amazon SimpleDB, Amazon SNS, Amazon SQS, Amazon VPC, Auto Scaling, Amazon Route 53, Amazon CloudFront, Amazon Elastic MapReduce, Elastic Load Balancing, AWS CloudFormation, Amazon CloudWatch, and Elastic Block Store. IAM also integrates with itself: as you saw in my example, you can use it to give certain users or groups the ability to perform IAM actions such as creating new users.

The AWS Account retains control of all of the data. Also, all accounting still takes place at the AWS Account level, so all usage within the account will be rolled up into a single bill.

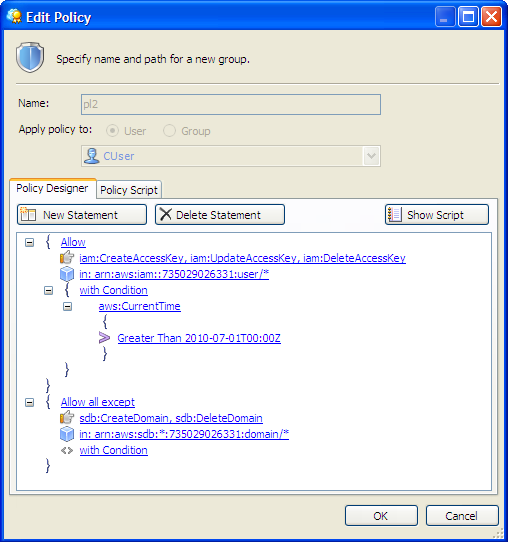

We have seen a wide variety of third-party tools and toolkits add support for IAM already. For example, the newest version of CloudBerry Explorer already supports IAM. Here’s a screen shot of their Policy Editor:

Here are some other applications and toolkits that also support IAM:

- Boto (Python toolkit) – Complete details described in this blog post.

- Ylastic – AWS Management GUI for mobile devices.

- S3 Browser – New Bucket Sharing Wizard.

- SDB Explorer – Complete details in this blog post.

- CloudWorks – AWS Management Tool with Japanese-language UI.

- Bucket Explorer Team Edition – More info here.

Eric Hammond’s article, Improving Security on EC2 With AWS Identity and Access Management (IAM), shows you how to use IAM to create a user that can create EBS snapshots and nothing more. As Eric says:

The release of AWS Identity and Access Management alleviates one of the biggest concerns security-conscious folks used to have when they started using AWS with a single key that gave complete access and control over all resources. Now the control is entirely in your hands.

The features that I have described above represent our first steps toward our long-term goals for IAM. However, we have a long (and very scenic) journey ahead of us and we are looking for additional software engineers, data engineers, development managers, technical program managers, and product managers to help us get there. If you are interested in a full-time position on the Seattle-based IAM team, please send your resume to aws-platform-jobs@amazon.com.

I think you’ll agree that IAM makes AWS an even better choice for any type of deployment. As always, please feel free to leave me a comment or to send us some email.

–Jeff;

Amazon RDS Backup and Maintenance Windows Shortened

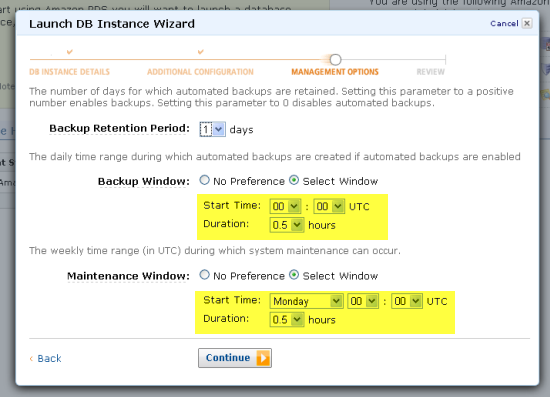

When you create an Amazon Relational Database Service (RDS) DB Instance you need to specify the desired times for the daily backup window and the weekly maintenance window:

If you have enabled backups for a particular DB Instance (by setting the Backup Retention Period to a non-zero value), Amazon RDS will create a snapshot backup at some point within the Backup Window. Effective immediately, we are reducing the duration of the Backup Window from two hours to thirty minutes.
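This small Ruby sketch makes the shorter window concrete by computing the length of a backup window. It assumes the window is written as HH:MM-HH:MM in UTC, which we believe is the form RDS uses for the preferred backup window; check the RDS API reference before relying on it. The calculation wraps around midnight.

```ruby
# Parse "HH:MM-HH:MM" (UTC) and return the window length in minutes,
# wrapping past midnight when the end is earlier than the start.
def backup_window_minutes(window)
  m = /\A(\d{2}):(\d{2})-(\d{2}):(\d{2})\z/.match(window)
  raise ArgumentError, "bad window: #{window}" unless m
  start_min = m[1].to_i * 60 + m[2].to_i
  end_min   = m[3].to_i * 60 + m[4].to_i
  (end_min - start_min) % (24 * 60)
end

puts backup_window_minutes("03:00-03:30")  # 30
puts backup_window_minutes("23:45-00:15")  # 30 (wraps past midnight)
puts backup_window_minutes("03:00-05:00")  # 120 (the old two-hour window)
```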

We use the Maintenance Window to install patches and to take care of other maintenance issues on an as-needed basis. This doesn’t actually happen very often; we performed just two maintenance operations during the last year. Again, effective immediately, we are reducing the duration of the Maintenance Window from four hours to thirty minutes.
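The length of a weekly Maintenance Window can be computed with a little arithmetic on minutes of the week. This Ruby sketch assumes a ddd:HH:MM-ddd:HH:MM format in UTC (for example sun:05:00-sun:05:30), which is our understanding of how RDS expresses the preferred maintenance window. The day abbreviations and the Sunday-based week are assumptions of the sketch.

```ruby
DAYS = %w[sun mon tue wed thu fri sat].freeze
WEEK_MINUTES = 7 * 24 * 60

# Convert "ddd:HH:MM" into minutes since Sunday 00:00 UTC.
def minute_of_week(spec)
  day, hh, mm = spec.split(":")
  index = DAYS.index(day.downcase)
  raise ArgumentError, "bad day: #{day}" unless index
  index * 24 * 60 + hh.to_i * 60 + mm.to_i
end

# Length of a "ddd:HH:MM-ddd:HH:MM" window in minutes, wrapping across the week boundary.
def maintenance_window_minutes(window)
  start_spec, end_spec = window.split("-")
  (minute_of_week(end_spec) - minute_of_week(start_spec)) % WEEK_MINUTES
end

puts maintenance_window_minutes("sun:05:00-sun:05:30")  # 30
puts maintenance_window_minutes("sat:23:45-sun:00:15")  # 30 (wraps into the next week)
puts maintenance_window_minutes("sun:05:00-sun:09:00")  # 240 (the old four-hour window)
```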

If you are running a Multi-AZ deployment of Amazon RDS, backups and maintenance occur on your standby instance, minimizing any impact on the primary instance.

If you did not define a custom backup or maintenance window for an existing DB Instance and instead used the default value provided by Amazon RDS, note that the default window dates and times will change on March 14, 2011. Please refer to this forum post for more information.

— Jeff;

Now Open: AWS Region in Tokyo

I have made many visits to Japan over the last several years to speak at conferences and to meet with developers. I really enjoy the people, the strong sense of community, and the cuisine.

Over the years I have learned that there’s really no substitute for sitting down, face to face, with customers and potential customers. You can learn things in a single meeting that might not be obvious after a dozen emails. You can also get a sense for the environment in which they (and their users or customers) have to operate. For example, developers in Japan have told me that latency and in-country data storage are of great importance to them.

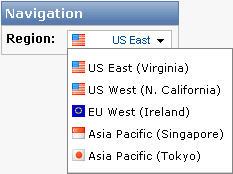

Long story short, we’ve just opened up an AWS Region in Japan, Tokyo to be precise. The new region supports Amazon EC2 (including Elastic IP Addresses, Amazon CloudWatch, Elastic Block Store, Elastic Load Balancing, VM Import, and Auto Scaling), Amazon S3, Amazon SimpleDB, the Amazon Relational Database Service, the Amazon Simple Queue Service, the Amazon Simple Notification Service, Amazon Route 53, and Amazon CloudFront. All of the usual EC2 instance types are available with the exception of Cluster Compute and Cluster GPU instances. The page for each service includes full pricing information for the Region.

Although I can’t share the exact location of the Region with you, I can tell you that private beta testers have been putting it to the test and have reported single-digit latency (under 10 ms) from locations in and around Tokyo. They were very pleased with the observed latency and performance.

Existing toolkits and tools can make use of the new Tokyo Region with a simple change of endpoints. The documentation for each service lists all of its endpoints.
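Switching Regions is mostly a matter of pointing a client at a different hostname. The Ruby sketch below assumes the common service.region.amazonaws.com pattern and the Tokyo region identifier ap-northeast-1. Not every service follows that pattern (S3, for example, used an s3-region hostname form), so the sketch lets an override table take precedence. Confirm each hostname against the service documentation.

```ruby
TOKYO = "ap-northeast-1"  # region identifier for Tokyo

# Services whose hostnames do not follow the common pattern (verify in the docs).
S3_OVERRIDES = { ["s3", TOKYO] => "s3-ap-northeast-1.amazonaws.com" }.freeze

# Build a regional endpoint from the "service.region.amazonaws.com" pattern,
# unless an explicit override exists for this service and region.
def endpoint_for(service, region, overrides = {})
  overrides.fetch([service, region]) { "#{service}.#{region}.amazonaws.com" }
end

puts endpoint_for("ec2", TOKYO)                # ec2.ap-northeast-1.amazonaws.com
puts endpoint_for("s3", TOKYO, S3_OVERRIDES)   # s3-ap-northeast-1.amazonaws.com
```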

This offering goes beyond the services themselves. We also have the following resources available:

- A Japanese-language version of the AWS website.

- A Japanese-language version of the AWS Simple Monthly Calculator with new region pricing.

- An AWS forum for Japanese-speaking developers.

- Japanese-speaking AWS Sales and Business Development teams.

- An AWS Premium Support team ready to support the Japanese-speaking market.

- A team of AWS Solutions Architects who can speak Japanese.

- An AWS blog in Japanese.

- A brand-new Japanese edition of my AWS Book.

Put it all together and developers in Japan can now build applications that respond very quickly and that store data within the country.

The JAWS-UG (Japan AWS User Group) is another important resource. The group is headquartered in Tokyo, with regional branches in Osaka and other cities. I have spoken at JAWS meetings in Tokyo and Osaka and they are always a lot of fun. I start the meeting with an AWS update. The rest of the meeting is devoted to short “lightning” talks related to AWS or to a product built with AWS. For example, the developer of the Cacoo drawing application spoke at the initial JAWS event in Osaka in late February. Cacoo runs on AWS and features real-time collaborative drawing.

We’ve been working with some of our customers to bring their apps to the new Region ahead of the official launch. Here is a sampling:

Zynga is now running a number of their applications here. In fact (I promise I am not making this up) I saw a middle-aged man playing Farmville on his Android phone on the subway when I was in Japan last month. He was moving sheep and fences around with rapid-fire precision!

The enStratus cloud management and governance tools support the new region.

enStratus supports role-based access, management of encryption keys, intrusion detection and alerting, authentication, audit logging, and reporting.

All of the enStratus AMIs are available. The tools feature a fully localized user interface (Cloud Manager, Cluster Manager, User Manager, and Report) that can display text in English, Japanese, Korean, Traditional Chinese, and French.

enStratus also provides local currency support and can display estimated operational costs in JPY (Japanese yen) and a number of other currencies.

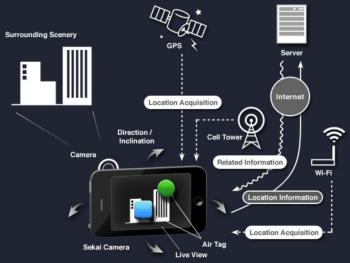

Sekai Camera is a very cool augmented reality application for iPhones and Android devices. It uses the built-in camera on each device to display a tagged, augmented version of what the camera is looking at. Users can leave “air tags” at any geographical location. The application is built on AWS and makes use of a number of services including EC2, S3, SimpleDB, SQS, and Elastic Load Balancing. Moving the application to the Tokyo Region will make it even more responsive and interactive.

G-Mode Games is running a multi-user version of Tetris in the new Region. The game is available for the iPhone and the iPod and allows you to play against another person.

Cloudworks is a management tool for AWS built in Japan, with a Japanese-language user interface. It includes a daily usage report, scheduled jobs, and a history of all user actions. It also supports AWS Identity and Access Management (IAM) and copying of AMIs from region to region.

Browser 3Gokushi is a well-established RPG (Role-Playing Game) that is now running in the new region.

Here’s some additional support that came in after the original post:

- RightScale has been supporting our Japanese customers during the months leading up to today’s release. You can read more about what they do to move to a new region in their newest blog post: RightScale Global: Next Stop Japan.

- The S3 Browser now supports the Tokyo Region.

- A new version of S3 Backup has been released, with support for the Tokyo Region.

- The latest snapshot builds of Cyberduck for both Mac and Windows support the new Tokyo region. See the Cyberduck Amazon S3 Howto for more information.

- CloudBerry Explorer now supports the Tokyo Region and sports a localized user interface. Read more.

- Scalarium is ready to roll in Tokyo, and includes several graphical themes designed specifically for the Japanese market.

- The SDB Explorer for Amazon SimpleDB now supports the Tokyo Region per their blog post.

- Zmanda Cloud Backup now supports the Tokyo region; more info here.

Here are some of the jobs that we have open in Japan:

- Developer Support Engineer

- Developer Support Operations Manager

- Solutions Architect

- Senior Solutions Architect

- Business Development Manager

- Strategic Alliances Manager

- Technical Account Manager

- Senior Sales Manager

- Enterprise Sales Representative

- Technical Sales Representative

- Managing Director, Enterprise Sales

— Jeff;

Note: Tetris® & © 1985~2011 Tetris Holding. Tetris logos, Tetris theme song and Tetriminos are trademarks of Tetris Holding. The Tetris trade dress is owned by Tetris Holding. Licensed to The Tetris Company. Game Design by Alexey Pajitnov. Original Logo Design by Roger Dean. All Rights Reserved. Sub-licensed to Electronic Arts Inc. and G-mode, Inc.

Upcoming Event: AWS Tech Summit, London

I’m very pleased to invite you all to join the AWS team in London for our first Tech Summit of 2011. We’ll take a quick, high-level tour of the Amazon Web Services cloud platform before diving into the technical details of how to build highly available, fault-tolerant systems, host databases, and deploy Java applications with Elastic Beanstalk.

We’re also delighted to be joined by three expert customers who will discuss their own real-world use of our services:

- Francis Barton, from Costcutter

- Richard Churchill, from Servicetick

- Richard Holland, from Eagle Genomics

So if you’re a developer, architect, sysadmin or DBA, we look forward to welcoming you to the Congress Centre in London on the 17th of March.

We had some great feedback from our last summit in November, and this event looks set to be our best yet.

The event is free, but you’ll need to register.

~ Matt

Rack and the Beanstalk

AWS Elastic Beanstalk manages your web application via Java, Tomcat and the Amazon cloud infrastructure. This means that in addition to Java, Elastic Beanstalk can host applications developed with languages compatible with the Java VM.

This includes languages such as Clojure, Scala and JRuby. In this post we think outside the box and show you how to run any Rack-based Ruby application (including Rails and Sinatra) on the Elastic Beanstalk platform. You get all the benefits of deploying to Elastic Beanstalk (autoscaling, load balancing, versions and environments) along with the joys of developing in Ruby.

Getting started

We’ll package a new Rails app into a Java .war file which will run natively through JRuby on the Tomcat application server. There is no smoke and mirrors here – Rails will run natively on JRuby, a Ruby implementation written in Java.

Java up

If you’ve not used Java or JRuby before, you’ll need to install them. Java is available for download or via your favourite package repository, and is usually already installed on Mac OS X. The latest version of JRuby is available here. It’s just a case of downloading the latest binaries for your platform (or source, if you are so inclined) and unpacking them into your path; full details here. I used v1.5.6 for this post.

Gem cutting

Ruby applications and modules are often distributed as Rubygems. JRuby maintains a separate Rubygem library, so we’ll need to install a few gems to get started including Rails, the Java database adaptors and warbler, which we’ll use to package our application for deployment to AWS Elastic Beanstalk. Assuming you added the jruby binaries to your path, you can run the following on your command line:

jruby -S gem install rails

jruby -S gem install warbler

jruby -S gem install jruby-openssl

jruby -S gem install activerecord-jdbcsqlite3-adapter

jruby -S gem install activerecord-jdbcmysql-adapter

To skip the lengthy documentation generation, just add '--no-ri --no-rdoc' to the end of each of these commands.

A new hope

We can now create a new Rails application, and set it up for deployment under the JVM application container of Elastic Beanstalk. We can use a preset template, provided by jruby.org, to get us up and running quickly. Again, on the command line, run:

jruby -S rails new aws_on_rails -m http://jruby.org/rails3.rb

This will create a new Rails application in a directory called ‘aws_on_rails’. Since it’s so easy with Rails, let’s make our example app do something interesting. First, we need to set up our database configuration to use our Java database drivers. To do this, define the gems in the application’s Gemfile, just beneath the line that starts with gem 'jdbc-sqlite3':

gem 'activerecord-jdbcmysql-adapter', :require => false

gem 'jruby-openssl'

Now we set up the database configuration details. Add these to your app’s config/database.yml file:

development:
  adapter: jdbcsqlite3
  database: db/development.sqlite3
  pool: 5
  timeout: 5000

production:
  adapter: jdbcmysql
  driver: com.mysql.jdbc.Driver
  username: admin
  password: <password>
  pool: 5
  timeout: 5000
  url: jdbc:mysql://<hostname>/<db-name>

If you don’t have a MySQL database, you can create one quickly using the Amazon Relational Database Service. Just log into the AWS Management Console, go to the RDS tab, and click ‘Launch DB Instance’. You can find more details about Amazon RDS here. The hostname for the production settings above is listed in the console as the database ‘endpoint’. Be sure to create the RDS database in the same region as Elastic Beanstalk (us-east) and set up the appropriate security group access.
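If you would rather generate the production settings than edit them by hand, the Ruby sketch below builds the production section of config/database.yml from an RDS endpoint. The endpoint, database name, and credentials are placeholders. Appending MySQL's default port 3306 to the JDBC URL is our assumption; use whatever port the console shows for your DB Instance.

```ruby
require "yaml"

# Build the production section of config/database.yml for an RDS MySQL
# endpoint. All argument values used below are placeholders.
def production_db_config(endpoint:, db_name:, username:, password:, port: 3306)
  {
    "production" => {
      "adapter"  => "jdbcmysql",
      "driver"   => "com.mysql.jdbc.Driver",
      "username" => username,
      "password" => password,
      "pool"     => 5,
      "timeout"  => 5000,
      "url"      => "jdbc:mysql://#{endpoint}:#{port}/#{db_name}"
    }
  }
end

config = production_db_config(endpoint: "mydb.example.us-east-1.rds.amazonaws.com",
                              db_name: "aws_on_rails", username: "admin",
                              password: "change-me")
puts config.to_yaml
```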

Application

We’ll create a very basic application that lets us check in to a location. We’ll use Rails’ scaffolding to generate a simple interface, a controller and a new model.

jruby -S rails g scaffold Checkin name:string location:string

Then we just need to migrate our production database, ready for the application to be deployed to Elastic Beanstalk:

jruby -S rake db:migrate RAILS_ENV=production

Finally, we just need to set up the default route. Add the following to config/routes.rb:

root :to => "checkins#index"

This tells Rails how to respond to the root URL, which is used by the Elastic Beanstalk load balancer by default to monitor the health of your application.
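Under the hood, Rails (like Sinatra) is a Rack application: an object that responds to call(env) and returns a status, a headers hash, and a body. The plain-Ruby stand-in below is not Rails itself, and its healthy? probe is hypothetical. It shows why the root route matters: a health check that requests / sees a 200 only if something answers there.

```ruby
# A Rack application is any object that responds to call(env) and returns
# [status, headers, body]. This stand-in answers the root URL with 200,
# the way the Rails app does once its root route is configured.
ROOT_APP = lambda do |env|
  if env["PATH_INFO"] == "/"
    [200, { "content-type" => "text/plain" }, ["OK"]]
  else
    [404, { "content-type" => "text/plain" }, ["Not Found"]]
  end
end

# Hypothetical health probe: the instance counts as healthy only on a 200 for "/".
def healthy?(app)
  status, _headers, _body = app.call({ "REQUEST_METHOD" => "GET", "PATH_INFO" => "/" })
  status == 200
end

puts healthy?(ROOT_APP)  # true
```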

Deployment

We’re now ready to package our application, and send it to Elastic Beanstalk. First of all, we’ll use warble to package our application into a Java war file.

jruby -S warble

This will create a new war file, named after your application, in the root directory of your application. Head over to the AWS Management Console, click on the Elastic Beanstalk tab, and select ‘Create New Application’. Set up your Elastic Beanstalk application with a name, URL and container type, then upload the Rails war file.

After Elastic Beanstalk has provisioned your EC2 instances, load balancer and autoscaling groups, your application will start under Tomcat’s JVM. This step can take some time but once your app is launched, you can view it at the Elastic Beanstalk URL.

Congrats! You are now running Rails on AWS Elastic Beanstalk.

By default, your application will launch under Elastic Beanstalk in production mode, but you can change this, along with a wide range of other options, using the Warbler configuration settings. You can adjust the number of instances and the autoscaling settings from the Elastic Beanstalk console.

Since Elastic Beanstalk is also API driven, you can automate the configuration, packaging and deployment as part of your standard build and release process.
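Here is one possible outline of such an automated build: run the commands from this post in order, stop at the first failure, then hand the WAR file to a deploy step. This is a hypothetical sketch. The deploy hook is deliberately a placeholder, because the actual upload depends on which Elastic Beanstalk API client you use. The default WAR name assumes the file is named after the application's directory.

```ruby
# Hypothetical outline of an automated build: run the commands from this post
# in order and stop at the first failure. The deploy hook is a placeholder;
# wire it to whichever Elastic Beanstalk API client you use.
BUILD_STEPS = [
  "jruby -S rake db:migrate RAILS_ENV=production",
  "jruby -S warble"
].freeze

def run_pipeline(steps,
                 runner: ->(cmd) { system(cmd) },
                 deploy: ->(_war) {},
                 war_path: "#{File.basename(Dir.pwd)}.war")
  steps.each do |cmd|
    # system returns false or nil on failure, which stops the build here
    raise "step failed: #{cmd}" unless runner.call(cmd)
  end
  deploy.call(war_path)
  war_path
end
```

Injecting `runner` and `deploy` keeps the sequencing logic testable without actually invoking JRuby or AWS.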

~ Matt

Amazon RDS: MySQL 5.5 Now Available

The Amazon Relational Database Service (RDS) now supports version 5.5 of MySQL. This new version of MySQL includes InnoDB 1.1, and offers a number of performance improvements including enhanced use of multiple cores, better I/O handling with multiple read and write threads, and enhanced monitoring. InnoDB 1.1 includes a number of improvements over the prior version including faster recovery, multiple buffer pool instances, and asynchronous I/O.

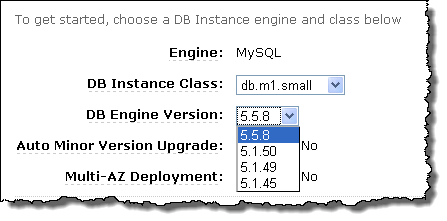

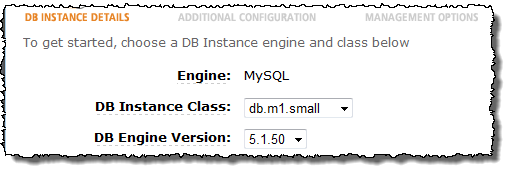

As you can see from the screenshot above, you can start to use version 5.5 of MySQL by selecting it from the menu in the AWS Management Console when you create your DB Instance. To upgrade an existing DB Instance from MySQL 5.1 to MySQL 5.5, you must export the database using mysqldump and then import it into a newly created MySQL 5.5 DB Instance. We hope to provide an upgrade function in the future.
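The export/import step comes down to two commands. The Ruby helper below only builds those command lines. It is a hypothetical convenience, the hostnames are placeholders, and it assumes the target database already exists on the new 5.5 DB Instance (for example, created as the initial database when you launched it). `--single-transaction` takes a consistent snapshot of InnoDB tables without locking them, and `--password` with no value makes each client prompt for it.

```ruby
require "shellwords"

# Hypothetical helper: build the export and import commands for moving one
# database from a MySQL 5.1 DB Instance to a new 5.5 DB Instance.
# Assumes the target database already exists on the new instance.
def upgrade_commands(old_host:, new_host:, user:, db:, dump_file: "dump.sql")
  q = ->(s) { Shellwords.escape(s) } # quote values for the shell
  export = "mysqldump --host=#{q[old_host]} --user=#{q[user]} --password " \
           "--single-transaction #{q[db]} > #{q[dump_file]}"
  import = "mysql --host=#{q[new_host]} --user=#{q[user]} --password " \
           "#{q[db]} < #{q[dump_file]}"
  [export, import]
end

puts upgrade_commands(old_host: "old.example.com", new_host: "new.example.com",
                      user: "admin", db: "checkins")
```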

We do not currently support MySQL 5.5’s semi-synchronous replication, pluggable authentication, or proxy users. If these features would be of value to you, perhaps you could leave a note in the RDS Forum.

To create a new MySQL 5.5 DB Instance, just choose the DB Engine Version of 5.5.8 in the AWS Management Console. Because it is so easy to create a new DB Instance, you should have no problem testing your code for compatibility with this new version before using it in production scenarios.

— Jeff;

Coming Soon – Oracle Database 11g on Amazon Relational Database Service

As part of our continued effort to make AWS even more powerful and flexible, we are planning to support Oracle Database 11g Release 2 via the Amazon Relational Database Service (RDS) beginning in the second quarter of 2011.

Amazon RDS makes it easy for you to create, manage, and scale a relational database without having to worry about capital costs, hardware, operating systems, backup tapes, or patch levels. Thousands of developers are already using multiple versions of MySQL via RDS. The RDS tab of the AWS Management Console, the Command-Line tools, and the RDS APIs will all support specifying Oracle Database as the value of the “Database Engine” parameter.

As with today’s MySQL offering, Amazon RDS running Oracle Database will reduce administrative overhead and expense by maintaining database software, taking continuous backups for point-in-time recovery, and exposing key operational metrics via Amazon CloudWatch. It will also allow compute and storage capacity to be scaled with a few clicks in the AWS Management Console. Concepts applicable to MySQL on RDS, including backup windows, DB Parameter Groups, and DB Security Groups, will also apply to Oracle Database on RDS.
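Continuous backups mean a restore can target any moment inside a window. That window runs from the start of the backup retention period up to the latest restorable time reported for the DB Instance, which trails the present slightly. The check below is a hypothetical, simplified sketch of that window. It measures the earliest point from "now" and ignores details such as when the oldest retained backup was actually taken.

```ruby
# Hypothetical, simplified check of a point-in-time restore request: the
# target must be no older than the backup retention period and no newer than
# the latest restorable time reported for the DB Instance.
SECONDS_PER_DAY = 24 * 60 * 60

def restorable?(target, now:, latest_restorable:, retention_days:)
  earliest = now - retention_days * SECONDS_PER_DAY
  target.between?(earliest, latest_restorable)
end

now = Time.now.utc
puts restorable?(now - 3600, now: now, latest_restorable: now - 300, retention_days: 1)
```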

You will be able to pay for your use of Oracle Database 11g in two ways:

If you don’t have any licenses, you’ll be able to pay for your use of Oracle Database 11g on an hourly basis without any up-front fees or mandatory long-term commitments. The hourly rate will depend on the Oracle Database edition and the DB Instance size. You will also be able to reduce your hourly rate by purchasing Reserved DB Instances.

If you have existing Oracle Database licenses, you can bring them to the cloud and use them pursuant to Oracle licensing policies without paying any additional software license or support fees.
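Whether Reserved DB Instances pay off under the hourly model is simple arithmetic. You pay a one-time fee in exchange for a lower hourly rate, so the reservation wins once enough hours have been used. The sketch below works through that comparison. Every rate in it is a made-up placeholder rather than an actual RDS or Oracle price, and amounts are kept in integer cents to avoid floating-point rounding.

```ruby
# Illustrative arithmetic only: all rates are made-up placeholders (in cents),
# not actual Amazon RDS or Oracle prices.
HOURS_PER_YEAR = 24 * 365

def on_demand_cost(hourly_rate, hours)
  hourly_rate * hours
end

def reserved_cost(upfront, reduced_hourly_rate, hours)
  upfront + reduced_hourly_rate * hours
end

# Hours of use after which the reserved option becomes the cheaper one.
def break_even_hours(hourly_rate, upfront, reduced_hourly_rate)
  savings_per_hour = hourly_rate - reduced_hourly_rate
  raise ArgumentError, "reserved rate must be lower" unless savings_per_hour.positive?
  upfront.fdiv(savings_per_hour)
end

puts on_demand_cost(100, HOURS_PER_YEAR)
puts reserved_cost(200_000, 50, HOURS_PER_YEAR)
puts break_even_hours(100, 200_000, 50)
```

With these placeholder numbers the reservation breaks even after 4,000 hours, well under the 8,760 hours in a year of continuous use.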

I think that this new offering and the flexible pricing models will be of interest to enterprises of all shapes and sizes, and I can’t wait to see how it will be put to use.

You can learn more about our plans by visiting our new Oracle Database on Amazon RDS page. You’ll be able to sign up to be notified when this new offering is available, and you’ll also be able to request a briefing from an AWS associate.

In anticipation of this offering, you can visit the Amazon RDS page to learn more about the benefits of Amazon RDS and see how you can deploy a managed MySQL database in minutes today. Since the user experience of Amazon RDS will be similar across the MySQL and Oracle offerings, this is a great way to get started with Amazon RDS ahead of the forthcoming Oracle Database offering.

— Jeff;

New Webinar: High Availability Websites

As part of a new monthly series of hands-on webinars, I’ll be giving a technical review of building, managing and maintaining high-availability websites and web applications using Amazon’s cloud computing platform.

Hosting websites and web applications is a very common use of our services, and in this webinar we’ll take a hands-on approach to websites of all sizes, from personal blogs and static sites to complex multi-tier web apps.

Join us on January 28 at 10:00 AM (GMT) for this 60-minute technical web-based seminar, where we’ll aim to cover:

- Hosting a static website on S3

- Building highly available, fault tolerant websites on EC2

- Adding multiple tiers for caching, reverse proxies and load balancing

- Autoscaling and monitoring your website

Using real-world case studies and tried-and-tested examples, we’ll explore key concepts and best practices for working with websites and on-demand infrastructure.

The session is free, but you’ll need to register!

See you there.

~ Matt