Category: Amazon VPC

Launch Relational Database Service Instances in the Virtual Private Cloud

You can now launch Amazon Relational Database Service (RDS) DB instances inside of a Virtual Private Cloud (VPC).

Some Background

The Relational Database Service takes care of all of the messiness associated with running a relational database. You don’t have to worry about finding and configuring hardware, installing an operating system or a database engine, setting up backups, arranging for fault detection and failover, or scaling compute or storage as your needs change.

The Virtual Private Cloud lets you create a private, isolated section of the AWS Cloud. You have complete control over IP address ranges, subnetting, routing tables, and network gateways to your own data center and to the Internet.

Here We Go

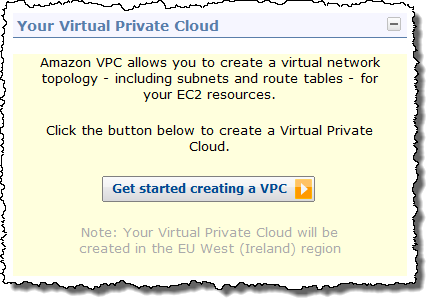

Before you launch an RDS DB Instance inside of a VPC, you must first create the VPC and partition its IP address range into the desired subnets. You can do this using the VPC wizard pictured above, the VPC command line tools, or the VPC APIs.
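As a rough illustration of the partitioning step, the sketch below uses Python’s standard `ipaddress` module to split a VPC address range into equal-sized subnets. The 10.0.0.0/16 range, the /24 subnet size, and the `partition_vpc` helper are assumptions chosen for illustration; they are not values or functions from the VPC wizard or APIs.

```python
import ipaddress


def partition_vpc(vpc_cidr, subnet_prefix, count):
    """Return the first `count` subnets of size /subnet_prefix inside vpc_cidr."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = []
    for subnet in vpc.subnets(new_prefix=subnet_prefix):
        if len(subnets) == count:
            break
        subnets.append(subnet)
    if len(subnets) < count:
        raise ValueError("VPC range is too small for the requested subnets")
    return subnets


# Hypothetical example: carve three /24 subnets out of a /16 VPC.
for subnet in partition_vpc("10.0.0.0/16", 24, 3):
    print(subnet)
```

Each resulting subnet can then be placed in an Availability Zone of your choice.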

Then you need to create a DB Subnet Group. The Subnet Group should have at least one subnet in each Availability Zone of the target Region; it identifies the subnets (and the corresponding IP address ranges) where you would like to be able to run DB Instances within the VPC. This will allow a Multi-AZ deployment of RDS to create a new standby in another Availability Zone should the need arise. You need to do this even for Single-AZ deployments, just in case you want to convert them to Multi-AZ at some point.
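The coverage rule for a DB Subnet Group can be checked with a few lines of Python. This is a minimal sketch: the zone names, the subnet IDs, and the `missing_zones` helper are made up for illustration and are not part of any RDS API.

```python
def missing_zones(region_zones, subnet_zones):
    """Return the Availability Zones that have no subnet in the group.

    region_zones: iterable of zone names in the target Region.
    subnet_zones: dict mapping each subnet ID in the group to its zone.
    """
    covered = set(subnet_zones.values())
    return sorted(set(region_zones) - covered)


# Hypothetical Region with three zones and a group that covers only two.
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
group = {"subnet-aaa": "us-east-1a", "subnet-bbb": "us-east-1b"}
print(missing_zones(zones, group))
```

An empty result would mean the group has at least one subnet in every zone.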

You can create a DB Security Group, or you can use the default. The DB Security Group gives you control over access to your DB Instances; you can allow access from EC2 instances with specific EC2 Security Group or VPC Security Group membership, or from designated ranges of IP addresses. You can also use VPC subnets and the associated network Access Control Lists (ACLs) if you’d like. You have a lot of control and a lot of flexibility.

The next step is to launch a DB Instance within the VPC while referencing the DB Subnet Group and a DB Security Group. With this release, you are able to use the MySQL DB engine (we plan to add additional options over time). The DB Instance will have an Elastic Network Interface using an IP address selected from your DB Subnet Group. You can use the IP address to reach the instance if you’d like, but we recommend that you use the instance’s DNS name instead since the IP address can change during failover of a Multi-AZ deployment.

Upgrading to VPC

If you are running an RDS DB Instance outside of a VPC, you can snapshot the DB Instance and then restore the snapshot into the DB Subnet Group of your choice. You cannot, however, access or use snapshots taken from within a VPC outside of the VPC. This is a restriction that we have put into place for security reasons.

Use Cases and Access Options

You can put this new combination (RDS + VPC) to use in a variety of ways. Here are some suggestions:

- Private DB Instances Within a VPC – This is the most obvious and straightforward use case, and is a perfect way to run corporate applications that are not intended to be accessed from the Internet.

- Public-Facing Web Application with Private Database – Host the web site on a public-facing subnet and the DB Instances on a private subnet that has no Internet access. The application server and the RDS DB Instances will not have public IP addresses.

Your Turn

You can launch RDS instances in your VPCs today in all of the AWS Regions except AWS GovCloud (US). What are you waiting for?

— Jeff;

New – Elastic Network Interfaces in the Virtual Private Cloud

If you look closely at the services and facilities provided by AWS, you’ll see that we’ve chosen to factor architectural components that were once considered elemental (e.g. a server) into multiple discrete parts that you can instantiate and control individually.

For example, you can create an EC2 instance and then attach EBS volumes to it on an as-needed basis. This is more dynamic and more flexible than procuring a server with a fixed amount of storage.

Today we are adding additional flexibility to EC2 instances running in the Virtual Private Cloud. First, we are teasing apart the IP addresses (and important attributes associated with them) from the EC2 instances and calling the resulting entity an ENI, or Elastic Network Interface. Second, we are giving you the ability to create additional ENIs, and to attach a second ENI to an instance (again, this is within the VPC).

Each ENI lives within a particular subnet of the VPC (and hence within a particular Availability Zone) and has the following attributes:

- Description

- Private IP Address

- Elastic IP Address

- MAC Address

- Security Group(s)

- Source/Destination Check Flag

- Delete on Termination Flag

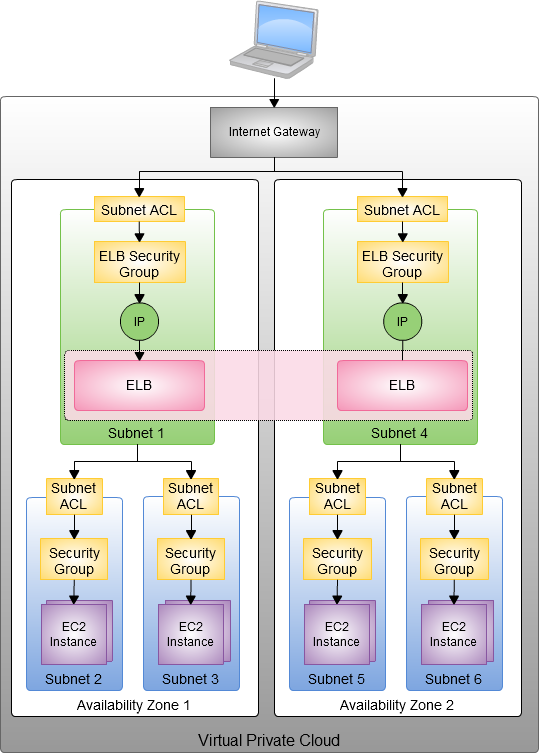

A very important consequence of this new model (and one that took me a little while to fully understand) is that the idea of launching an EC2 instance on a particular VPC subnet is effectively obsolete. A single EC2 instance can now be attached to two ENIs, each one on a distinct subnet. The ENI (not the instance) is now associated with a subnet.

Similar to an EBS volume, ENIs have a lifetime that is independent of any particular EC2 instance. They are also truly elastic. You can create them ahead of time, and then associate one or two of them with an instance at launch time. You can also attach an ENI to an instance while it is running (we sometimes call this a “hot attach”). Unless the Delete on Termination flag is set, the ENI will remain alive and well after the instance is terminated. We’ll create an ENI for you at launch time if you don’t specify one, and we’ll set the Delete on Termination flag so you won’t have to manage it. Net-net: You don’t have to worry about this new level of flexibility until you actually need it.
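One way to picture the lifetime rule is the toy model below. The class and function names are hypothetical and exist only for this illustration; nothing here calls the EC2 API.

```python
from dataclasses import dataclass


@dataclass
class NetworkInterface:
    """Toy stand-in for an ENI; only the fields needed for this example."""
    eni_id: str
    delete_on_termination: bool


def interfaces_after_termination(attached):
    """Return the ENIs that outlive the instance they were attached to."""
    return [eni for eni in attached if not eni.delete_on_termination]


# An auto-created ENI (flag set) and a pre-created one (flag clear).
attached = [
    NetworkInterface("eni-auto", delete_on_termination=True),
    NetworkInterface("eni-kept", delete_on_termination=False),
]
print([eni.eni_id for eni in interfaces_after_termination(attached)])
```

Only the ENI whose flag is clear survives, ready to be attached to another instance.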

You can put this new level of addressing and security flexibility to use in a number of different ways. Here are some that we’ve already heard about:

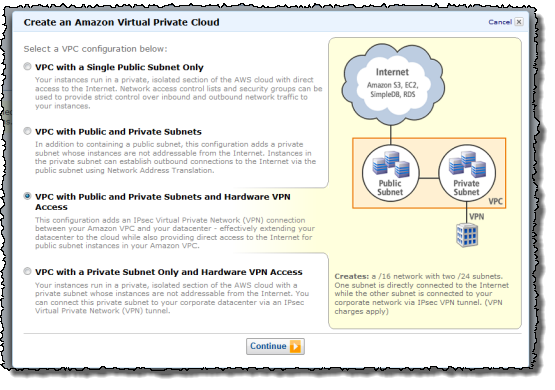

Management Network / Backnet – You can create a dual-homed environment for your web, application, and database servers. The instance’s first ENI would be attached to a public subnet, routing 0.0.0.0/0 (all traffic) to the VPC’s Internet Gateway. The instance’s second ENI would be attached to a private subnet, with 0.0.0.0/0 routed to the VPN Gateway connected to your corporate network. You would use the private network for SSH access, management, logging, and so forth. You can apply different security groups to each ENI so that traffic on port 80 is allowed through the first ENI, and traffic from the private subnet on port 22 is allowed through the second ENI.
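To make the routing in this dual-homed setup more concrete, here is a small sketch of how a route table lookup could work. It assumes the most specific (longest-prefix) matching route wins; the route tables, the 10.0.0.0/16 VPC range, and the target labels are hypothetical.

```python
import ipaddress


def next_hop(route_table, destination):
    """Pick the most specific route (longest prefix) that matches destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Hypothetical route tables for the two subnets; targets are labels only.
public_routes = {"10.0.0.0/16": "local", "0.0.0.0/0": "internet-gateway"}
private_routes = {"10.0.0.0/16": "local", "0.0.0.0/0": "vpn-gateway"}

print(next_hop(public_routes, "203.0.113.10"))
print(next_hop(private_routes, "203.0.113.10"))
print(next_hop(private_routes, "10.0.1.25"))
```

The same external destination leaves through a different gateway depending on which subnet's route table applies, while traffic inside the VPC stays local.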

Multi-Interface Applications – You can host load balancers, proxy servers, and NAT servers on an EC2 instance, carefully passing traffic from one subnet to the other. In this case you would clear the Source/Destination Check Flag to allow the instances to handle traffic that wasn’t addressed to them. We expect vendors of networking and security products to start building AMIs that make use of two ENIs.

MAC-Based Licensing – If you are running commercial software that is tied to a particular MAC address, you can license it against the MAC address of the ENI. Later, if you need to change instances or instance types, you can launch a replacement instance with the same ENI and MAC address.

Low-Budget High Availability – Attach an ENI to an instance; if the instance dies, launch another one and attach the ENI to it. Traffic flow will resume within a few seconds.

Here is a picture to show you how all of the parts (VPC, subnets, routing tables, and ENIs) fit together:

I should note that attaching two public ENIs to the same instance is not the right way to create an EC2 instance with two public IP addresses. There’s no way to ensure that packets arriving via a particular ENI will leave through it without setting up some specialized routing. We are aware that a lot of people would like to have multiple IP addresses for a single EC2 instance and we plan to address this use case in 2012.

The AWS Management Console includes Elastic Network Interface support:

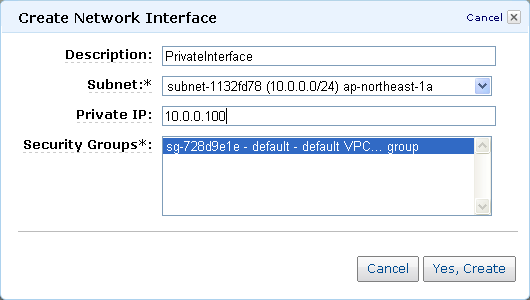

The Create Network Interface button prompts for the information needed to create a new ENI:

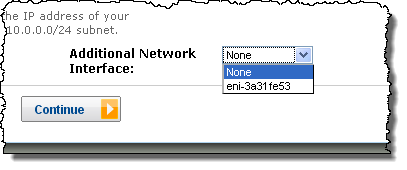

You can specify an additional ENI when you launch an EC2 instance inside of a VPC:

You can attach an ENI to an existing instance:

As always, I look forward to getting your thoughts on this new feature. Please feel free to leave a comment!

— Jeff;

New – AWS Elastic Load Balancing Inside of a Virtual Private Cloud

The popular AWS Elastic Load Balancing Feature is now available within the Virtual Private Cloud (VPC). Features such as SSL termination, health checks, sticky sessions and CloudWatch monitoring can be configured from the AWS Management Console, the command line, or through the Elastic Load Balancing APIs.

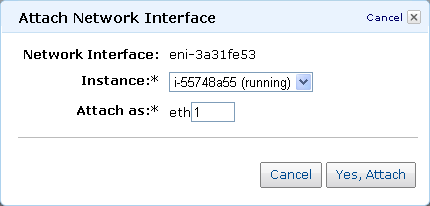

When you provision an Elastic Load Balancer for your VPC, you can assign security groups to it. You can place ELBs into VPC subnets, and you can also use subnet ACLs (Access Control Lists). The EC2 instances that you register with the Elastic Load Balancer do not need to have public IP addresses. The combination of the Virtual Private Cloud, subnets, security groups, and access control lists gives you precise, fine-grained control over access to your Load Balancers and to the EC2 instances behind them and allows you to create a private load balancer.

Here’s how it all fits together:

When you create an Elastic Load Balancer inside of a VPC, you must designate one or more subnets to attach. The ELB can run in one subnet per Availability Zone; we recommend (as shown in the diagram above) that you set aside a subnet specifically for each ELB. In order to allow for room (IP address space) for each ELB to grow as part of the intrinsic ELB scaling process, the subnet must have at least 8 free IP addresses.
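A quick way to sanity-check a candidate subnet against the 8-address requirement is sketched below. It assumes that AWS reserves five addresses in every VPC subnet (that figure is not stated in this post), and the helper names and CIDR blocks are illustrative only.

```python
import ipaddress

# Assumption: five addresses per subnet are reserved and cannot be assigned.
RESERVED_PER_SUBNET = 5
# From the text above: an ELB subnet needs at least 8 free IP addresses.
MIN_FREE_FOR_ELB = 8


def free_addresses(subnet_cidr, in_use):
    """Estimate how many addresses in the subnet are still unassigned."""
    subnet = ipaddress.ip_network(subnet_cidr)
    return max(subnet.num_addresses - RESERVED_PER_SUBNET - in_use, 0)


def has_room_for_elb(subnet_cidr, in_use):
    """True if the subnet meets the minimum free-address requirement."""
    return free_addresses(subnet_cidr, in_use) >= MIN_FREE_FOR_ELB


# A /28 has 16 addresses; with 4 already in use only 7 remain free.
print(has_room_for_elb("10.0.5.0/28", in_use=0))
print(has_room_for_elb("10.0.5.0/28", in_use=4))
```

Under these assumptions a /28 is close to the practical minimum for a dedicated ELB subnet.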

We think you will be able to put this new feature to use right away. We are also working on additional enhancements, including IPv6 support for ELB in VPC and the ability to use Elastic Load Balancers for internal application tiers.

— Jeff;

Launch EC2 Spot Instances in a Virtual Private Cloud

Over the past two months, we have had the opportunity to share several exciting developments regarding Spot Instances. We have told you how to Run Amazon Elastic MapReduce on EC2 Spot Instances, we published Four New Amazon EC2 Spot Instance Videos, and we outlined the excitement around Scientific Computing with EC2 Spot Instances. Others in the community have also shared their experiences with Spot Instances. You may have read about Cycle Computing running a 30,000 core molecular modeling workload on Spot for $1279/hour or Harvard Medical School moving some of their workload to Spot after a day of engineering, saving roughly 50% in cost.

In typical Amazon fashion, we like to keep the momentum going. We’ve combined two popular AWS features, Amazon EC2 Spot Instances and the Amazon Virtual Private Cloud (Amazon VPC). You can now create a private, isolated section of the AWS cloud and make requests for Spot Instances to be launched within it. With this new feature, you get the flexibility and cost benefits of Spot Instances along with the control and advanced security options of the VPC.

Based on feedback from customers in the community, we believe this feature will be ideal for use cases like scientific computing, financial services, media encoding, and “big data.” As an example, we have received a number of requests from members of the scientific community who have been mining petabytes of confidential data (e.g. human genome or sensitive customer data) and/or proprietary data (e.g. patentable data). Traditionally they have set up their own software VPN connection and launched Spot Instances. Now they can leverage all of the security and flexibility benefits associated with VPC.

We have also heard from a number of customers looking for ways to integrate on-premise and cloud solutions, and to burst into the cloud. These customers now can leverage VPC and Spot for a great low-cost solution to this “computation gap.”

Launching Spot Instances into an Amazon VPC is similar to launching Spot Instances outside of a VPC, but you need to specify the VPC you would like to run your Spot Instances within. Launching Spot Instances into Amazon VPC requires special capacity behind the scenes, which means that the Spot Price for Spot Instances in an Amazon VPC will float independently from the price for those launched outside of Amazon VPC.

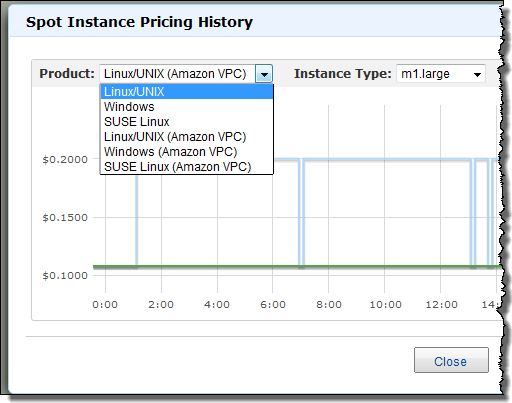

The AWS Management Console includes complete support for this new feature combo. You can examine the spot price history for EC2 instances launched within a VPC:

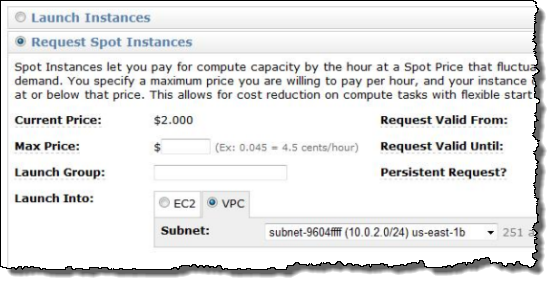

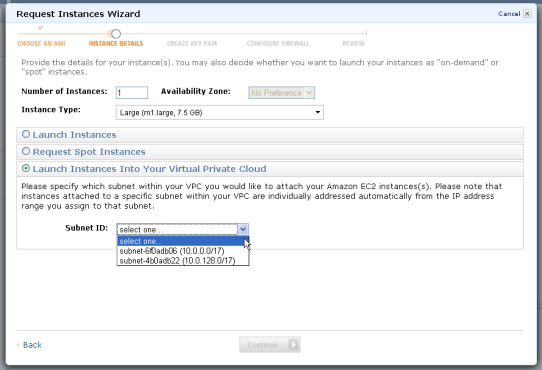

You can use the console’s Request Instances Wizard to make a request to launch Spot Instances in any of your VPCs at the maximum price of your choice (just be sure to choose VPC for the Launch Into option):

For more information on using Spot Instances in VPC, please visit the EC2 User’s Guide.

If you want to learn more about the ins and outs of Spot Instances, I recommend that you spend a few minutes watching the following videos:

Getting Started With Spot Instances

Deciding on Your Spot Bidding Strategy

How to Manage Spot Instance Interruption

There is also a video coming soon on how to launch a Spot Instance within VPC, so check back at the Spot Instance web page again soon. I will tweet when it becomes available (please follow me (@jeffbarr) for more details).

As I mentioned, the Spot service has been rapidly evolving, and we would love to get your feedback on the next features you’d love to see. Please feel free to email spot-instance-feedback@amazon.com if you have more feedback. Alternatively, to learn more about Spot, please visit the Spot Instance page.

Jeff;

Amazon VPC – Far More Than Everywhere

Today we are marking the Virtual Private Cloud (VPC) as Generally Available, and we are also releasing a big bundle of new features (see my recent post, A New Approach to Amazon EC2 Networking for more information on the last batch of features including subnets, Internet access, and Network ACLs).

You can now build highly available AWS applications that run in multiple Availability Zones within a VPC, with multiple (redundant) VPN connections if you’d like. You can even create redundant VPCs. And, last but not least, you can do all of this in any AWS Region.

Behind the Scenes!

There’s a story behind the title of this post, and I thought I would share it with you. A few months ago the VPC Program Manager told me that they were working on a project called “VPC Everywhere.” The team was working to make the VPC available in multiple Availability Zones of every AWS Region. I added “VPC Everywhere” to my TODO list, and that was that.

Last month he pinged me to let me know that VPC Everywhere was getting ready to launch, and to make sure that I was ready to publish a blog post at launch time. I replied that I was ready to start, and asked him to confirm my understanding of what I had to talk about.

He replied that VPC Everywhere actually included quite a few new features and sent me the full list. Once I saw the list, I realized that I would need to set aside a lot more time in order to give this release the attention that it deserves. I have done so, and here’s my post!

Here’s what’s new today:

- The Virtual Private Cloud has exited beta, and is now Generally Available.

- The VPC is available in multiple Availability Zones in every AWS Region.

- A single VPC can now span multiple Availability Zones.

- A single VPC can now support multiple VPN connections.

- You can now create more than one VPC per Region in a single AWS account.

- You can now view the status of each of your VPN connections in the AWS Management Console. You can also access it from the command line and via the EC2 API.

- Windows Server 2008 R2 is now supported within a VPC, as are Reserved Instances for Windows with SQL Server.

- The Yamaha RTX1200 router is now supported.

Let’s take a look at each new feature!

General Availability

The “beta” tag is gone! During the beta period many AWS customers have used the VPC to create their own isolated networks within AWS. We’ve done our best to listen to their feedback and to use it to drive our product planning process.

VPC Everywhere

You can now create VPCs in any Availability Zone in any of the five AWS Regions (US East, US West, Europe, Singapore, or Tokyo). Going forward, we plan to make VPC available at launch time when we open up additional Regions (several of which are on the drawing board already). Data transfer between VPC and non-VPC instances in the same Region, regardless of Availability Zone, is charged at the usual rate of $0.01 per Gigabyte.

Multiple Availability Zone Support

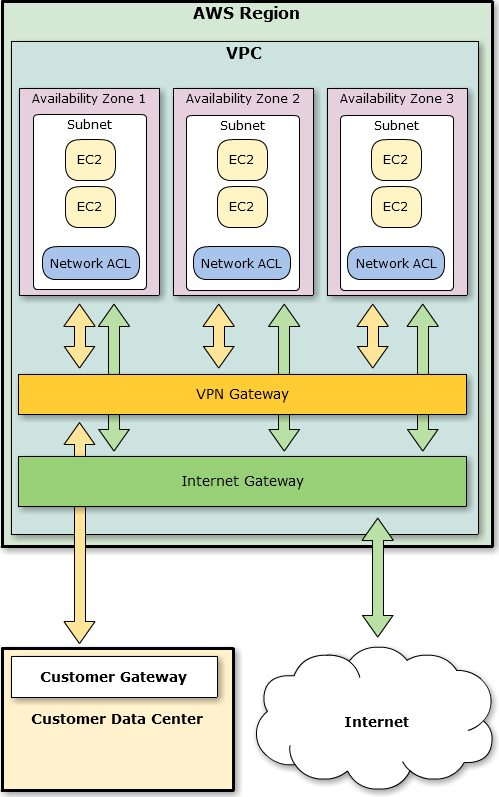

You can now create a VPC that spans multiple Availability Zones in a Region. Since each VPC can have multiple subnets, you can put each subnet in a distinct Availability Zone (you can’t create a subnet that spans multiple Zones though). VPN Gateways are regional objects, and can be accessed from any of the subnets (subject, of course, to any Network ACLs that you create). Here’s what this would look like:

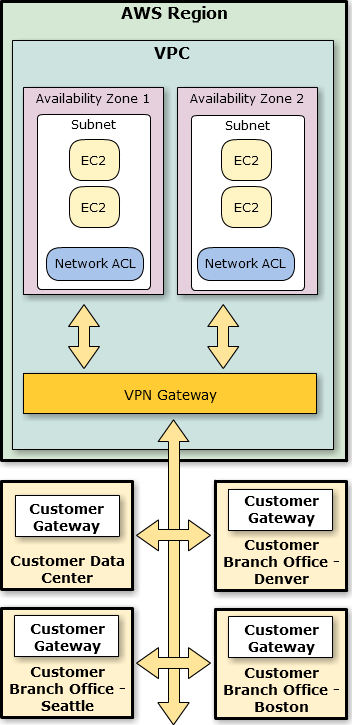

Multiple Connection Support

You can now create multiple VPN connections to a single VPC. You can use this new feature to configure a second Customer Gateway to create a redundant connection to the same external location. You can also use it to implement what is often described as a “branch office” scenario by creating VPN connections to multiple geographic locations. Here’s what that would look like:

By default you can create up to 10 connections per VPC. You can ask for more connections using the VPC Request Limit Increase form.

Multiple VPCs per Region

You can now create multiple, fully-independent VPCs in a single Region without having to use additional AWS accounts. You can, for example, create production networks, development networks, staging networks, and test networks as needed. At this point, each VPC is completely independent of all others, to the extent that multiple VPCs in a single account can even contain overlapping IP address ranges. However, we are aware of a number of interesting scenarios where it would be useful to peer two or more VPCs together, either within a single AWS account or across multiple accounts owned by different customers. We’re thinking about adding this functionality in a future release and your feedback would be very helpful.

By default you can create up to 5 VPCs. You can ask for additional VPCs using the VPC Request Limit Increase form.
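Because independent VPCs may reuse the same addresses, it can be handy to check whether two ranges overlap when planning networks that might later need to talk to each other. The sketch below uses Python’s standard `ipaddress` module; the `ranges_overlap` helper and the CIDR blocks are illustrative assumptions.

```python
import ipaddress


def ranges_overlap(cidr_a, cidr_b):
    """True if the two CIDR blocks share at least one address."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


# Hypothetical production and staging VPC ranges.
print(ranges_overlap("10.0.0.0/16", "10.0.128.0/17"))
print(ranges_overlap("10.0.0.0/16", "10.1.0.0/16"))
```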

VPN Connection Status

You can now check the status of each of your VPN Connections from the command line or from the VPC tab of the AWS Management Console. The displayed information includes the state (Up, Down, or Error), descriptive error text, and the time of the last status change.

Windows Server 2008 R2 and Reserved Instances for Windows SQL Server

Windows Server 2008 R2 is now available for use within your VPC. You can also purchase Reserved Instances for Windows SQL Server, again running within your VPC.

Third-Party Support

George Reese of enStratus emailed me last week to let me know that they are supporting VPC in all of the AWS Regions with their cloud management and governance product.

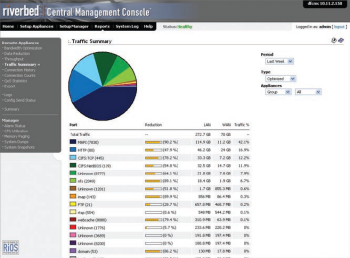

Bruno from Riverbed dropped me a note to tell me that their Cloud Steelhead WAN optimization product is now available in all of the AWS Regions and that it can be used within a VPC. Their product can be used to migrate data into and out of AWS and to move data between Regions.

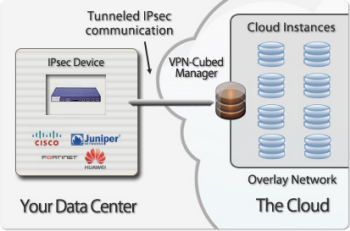

Patrick from cohesiveFT sent along information about the vpcPLUS edition of their VPN-Cubed product. Among other things, you can use VPN-Cubed to federate VPCs running in multiple AWS Regions. The vpcPLUS page contains a number of very informative diagrams as well.

Update: Matt and Craig from Citrix wrote to let me know that Citrix XenApp is now available for use in all Regions. Per their blog post, “Users can connect directly to XenApp from anywhere they have an internet connection, and a single secure network backend tunnel connects XenApp to any on-premise company data that is required by the applications.” There’s also a more technical blog post and a list of AMI IDs here.

Keep Moving

Even after this feature-rich release, we still have plenty of work ahead of us. I won’t spill all the beans, but I will tell you that we are working to support Elastic MapReduce, Elastic Load Balancing, and the Relational Database Service inside of a VPC.

Would you be interested in helping to make this happen? It just so happens that we have a number of openings on the EC2 / VPC team:

- Software Development Manager- Amazon Virtual Private Cloud (Herndon, VA).

- Software Development Engineer – AWS (Herndon, VA).

And there you have it – VPC Everywhere, and a lot more! What do you think?

— Jeff;

PS – The diagrams in this post were created using a tool called Cacoo, a very nice, browser-based collaborative editing tool that was demo’ed to me on my most recent visit to Japan. It runs on AWS, of course.

IAM: AWS Identity and Access Management – Now Generally Available

Our customers use AWS in many creative and innovative ways, continuously introducing new use cases and driving us to solve unexpected and complex problems. We are constantly improving our capabilities to make sure that we support a very wide variety of use cases and access patterns.

In particular, we want to make sure that developers at any level of experience and sophistication (from a student in a dorm room to an employee of a multinational corporation) have complete control over access to their AWS resources.

AWS Identity and Access Management (IAM) lets you manage users, groups of users, and access permissions for AWS services and resources. You can also use IAM to centrally manage security credentials such as access keys, passwords, and MFA devices. Effective immediately, IAM is now a Generally Available (GA) service!

Using IAM you can create users (representing a person, an organization, or an application, as desired) within an existing AWS Account. You can also group users to apply the same set of permissions. The groups can represent functional boundaries (development vs. test), organizational boundaries (main office vs. branch office), or job function (manager, tester, developer, or system administrator). Each user can be a member of multiple groups (branch office, manager). For maximum security, newly created users have no permissions. All permission control is accomplished using policy documents containing policy statements which grant or deny access to AWS service actions or resources.
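The grant-or-deny model can be sketched in a few lines of Python. This is a simplified illustration, not IAM’s actual evaluation engine: it follows the rule that nothing is allowed by default and that an explicit Deny overrides any Allow, and it assumes `*` wildcards can be approximated with Python’s `fnmatch` module. The statement layout mirrors the Effect, Action, and Resource fields of a policy document; the `is_allowed` helper, the bucket name, and the example ARN are hypothetical.

```python
from fnmatch import fnmatchcase


def is_allowed(statements, action, resource):
    """Explicit Deny wins; otherwise any matching Allow; otherwise deny."""
    allowed = False
    for statement in statements:
        action_match = any(fnmatchcase(action, p) for p in statement["Action"])
        resource_match = any(fnmatchcase(resource, p) for p in statement["Resource"])
        if not (action_match and resource_match):
            continue
        if statement["Effect"] == "Deny":
            return False
        allowed = True
    return allowed


# Hypothetical statements: allow all of S3, but deny object deletion.
statements = [
    {"Effect": "Allow", "Action": ["s3:*"], "Resource": ["*"]},
    {"Effect": "Deny", "Action": ["s3:DeleteObject"], "Resource": ["*"]},
]
bucket_key = "arn:aws:s3:::example-bucket/report.csv"
print(is_allowed(statements, "s3:GetObject", bucket_key))
print(is_allowed(statements, "s3:DeleteObject", bucket_key))
print(is_allowed([], "s3:GetObject", bucket_key))
```

The last call shows the starting point for a newly created user: with no statements attached, every request is denied.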

IAM can be accessed through APIs, a command line interface, and through the AWS Management Console (I’ve written a separate post about the console support).

Here are some examples of the IAM command line interface in action. Let’s create a user that can create and manage other users and then use this user to create a couple of additional users. Then we’ll give one user the ability to access Amazon S3.

The iam-userlistbypath command lists all or some of the users in the account:

C:\>

There are no default users. Let’s create a user “jeff” using the iam-usercreate command (“/family” is a path that further qualifies the names):

AKIAIYPZGF3ABUC2LQELQ

bbYJpBtRQr635j8QVsCpstrLMS7Mf+ihsLabqEQL

The -k argument causes iam-usercreate to create an AWS access key (both the access key id and the secret access key) for each user. These keys are the credentials needed to access data controlled by the account. They can be inserted into any application or tool that currently accepts an access key id and a secret access key. Note: It is important to capture and save the secret access key at this point; there’s no way to retrieve it if you lose it (you can create a new set of credentials if necessary).

We can use iam-userlistbypath to verify that we now have one user:

arn:aws:iam::889279108296:user/family/jeff

However, user “jeff” has no access because we have not granted him any permissions. The iam-useraddpolicy command is used to add permissions to a user. The iam-groupaddpolicy command can be used to do the same for a group. Let’s add a policy that gives me (user “jeff”) permission to use the IAM APIs on users under the “/app” path. I might not be the only user in my account that should have this permission so I’ll start by creating a group and granting the permissions to the group and then add “jeff” to the group.

I (identifying myself as user “jeff” using the credentials that I just created) can now create and manage users under the “/app” path. Let’s create users for two of my applications (“syndic8” and “backup”) using “/app” as the path. I can use the same command that I used to create user “jeff”:

kgRiohPeBGyY6iDx7qzqSzCyrang6YUo67etcGat

iXdFDaA15VUImTo2MrmErSvTloTeK4ERNIESw78R

I can list only the application users I created by providing an argument to iam-userlistbypath:

arn:aws:iam::889279108296:user/app/syndic8

Neither “backup” nor “syndic8” has any permissions yet. I can use the access keys for user “jeff” to grant permission for the “backup” user to use all of the S3 APIs on any of my S3 resources:

This policy allows the user named “backup” to use all of the S3 APIs on any of my S3 resources, but not to access any other AWS service that my AWS Account has subscribed to.

The iam-listuserpolicies command displays the policies associated with a user; the -v option displays the contents of each policy:

{"Version":"2008-10-17","Statement":[{"Effect":"Allow","Action":["s3:*"],"Resource":["arn:aws:s3::*"]}]}
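A policy document like the one shown above is plain JSON, so it can also be assembled in code. The helper below is a hypothetical sketch (it is not part of the IAM tools); it simply reproduces the same Version, Effect, Action, and Resource values using Python’s standard `json` module.

```python
import json


def allow_all_actions(service, resources):
    """Build a policy that allows every action of one service on the given resources."""
    return {
        "Version": "2008-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [service + ":*"],
                "Resource": list(resources),
            }
        ],
    }


# Same service and resource pattern as the policy displayed above.
policy = allow_all_actions("s3", ["arn:aws:s3::*"])
print(json.dumps(policy, separators=(",", ":")))
```

Generating the document this way avoids hand-editing quotes and brackets, which is where most policy typos come from.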

So, by giving my user (“jeff”) the appropriate privileges, I can minimize the use of my AWS Account credentials for access to AWS services.

You can think of the AWS Account as you would think about the Unix root (superuser) account. To get full value from IAM you should start using it when you are the only developer and you only have one application, adding users, groups, and policies as your environment becomes more complex. You can protect the AWS Account using an MFA device, and you should always sign your AWS calls using the access keys from a particular user. Once you have fully adopted IAM there should be no reason to use the AWS Account’s credentials to make a call to AWS.

There are a number of other commands (fully documented in the IAM CLI Reference). Like all of the other AWS command-line tools, the IAM tools make use of the IAM APIs, all of which are documented in the IAM API Reference.

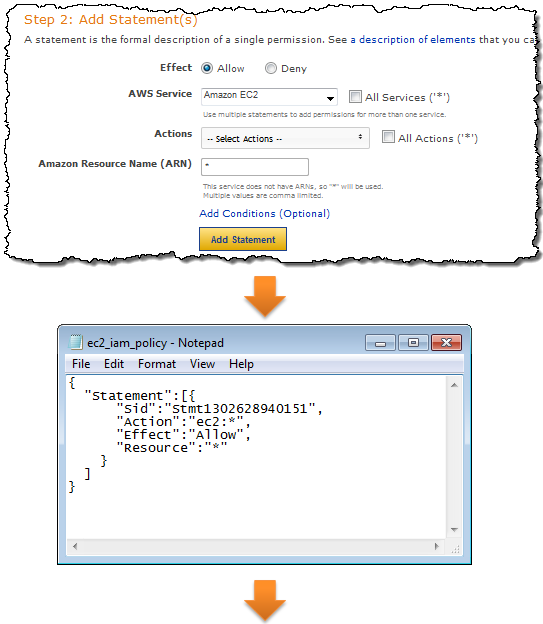

The AWS Policy Generator can be used to create policies for use with the IAM command line tools. After the policy is created it must be uploaded — use iam-useruploadpolicy instead of iam-useraddpolicy:

IAM controls access to each service in an appropriate way. You can control access to the actions (API functions) of any supported service. You can also control access to IAM, SimpleDB, SQS, S3, SNS, and Route 53 resources. The integration is done in a seamless fashion; all of the existing APIs continue to work as expected (subject, of course, to the permissions established by the use of IAM) and there is no need to change any of the application code. You may decide to create a unique set of credentials for each application using IAM. If you do this, you’ll need to embed the new credentials in each such application.

IAM currently integrates with Amazon EC2, Amazon RDS, Amazon S3, Amazon SimpleDB, Amazon SNS, Amazon SQS, Amazon VPC, Auto Scaling, Amazon Route 53, Amazon CloudFront, Amazon Elastic MapReduce, Elastic Load Balancing, AWS CloudFormation, Amazon CloudWatch, and Elastic Block Storage. IAM also integrates with itself; as you saw in my example, you can use it to give certain users or groups the ability to perform IAM actions such as creation of new users.

The AWS Account retains control of all of the data. Also, all accounting still takes place at the AWS Account level, so all usage within the account will be rolled up into a single bill.

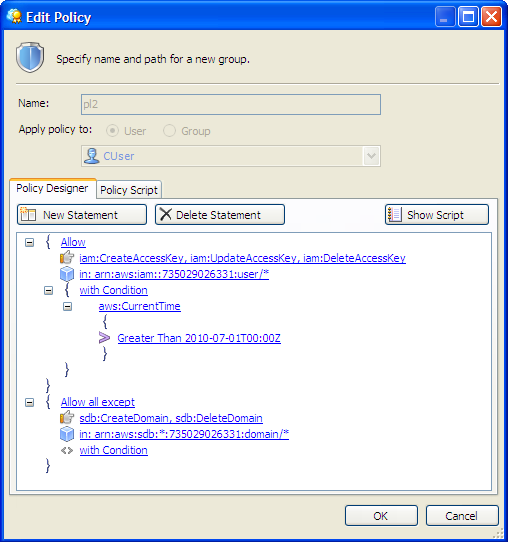

We have seen a wide variety of third-party tools and toolkits add support for IAM already. For example, the newest version of CloudBerry Explorer already supports IAM. Here’s a screen shot of their Policy Editor:

Here are some other applications and toolkits that also support IAM:

- Boto (Python toolkit) – Complete details described in this blog post.

- Ylastic – AWS Management GUI for mobile devices.

- S3 Browser – New Bucket Sharing Wizard.

- SDB Explorer – Complete details in this blog post.

- CloudWorks – AWS Management Tool with Japanese-language UI.

- Bucket Explorer Team Edition – More info here.

Eric Hammond’s article, Improving Security on EC2 With AWS Identity and Access Management (IAM), shows you how to use IAM to create a user that can create EBS snapshots and nothing more. As Eric says:

The release of AWS Identity and Access Management alleviates one of the biggest concerns security-conscious folks used to have when they started using AWS with a single key that gave complete access and control over all resources. Now the control is entirely in your hands.

The features that I have described above represent our first steps toward our long-term goals for IAM. However, we have a long (and very scenic) journey ahead of us and we are looking for additional software engineers, data engineers, development managers, technical program managers, and product managers to help us get there. If you are interested in a full-time position on the Seattle-based IAM team, please send your resume to aws-platform-jobs@amazon.com.

I think you’ll agree that IAM makes AWS an even better choice for any type of deployment. As always, please feel free to leave me a comment or to send us some email.

–Jeff;

Amazon EC2 Dedicated Instances

We continue to listen to our customers, and we work hard to deliver the services, features, and business models based on what they tell us is most important to them. With hundreds of thousands of customers using Amazon EC2 in various ways, we are able to see trends and patterns in the requests, and to respond accordingly. Some of our customers have told us that they want more network isolation than is provided by “classic EC2.” We met their needs with Virtual Private Cloud (VPC). Some of those customers wanted to go even further. They have asked for hardware isolation so that they can be sure that no other company is running on the same physical host.

We’re happy to oblige!

Today we are introducing a new EC2 concept: the Dedicated Instance. You can now launch Dedicated Instances within a Virtual Private Cloud on single-tenant hardware. Let’s take a look at the reasons why this might be desirable, and then dive into the specifics, including pricing.

Background

Amazon EC2 uses a technology commonly known as virtualization to run multiple operating systems on a single physical machine. Virtualization ensures that each guest operating system receives its fair share of CPU time, memory, and I/O bandwidth to the local disk and to the network using a host operating system, sometimes known as a hypervisor. The hypervisor also isolates the guest operating systems from each other so that one guest cannot modify or otherwise interfere with another one on the same machine. We currently use a highly customized version of the Xen hypervisor. As noted in the AWS Security White Paper, we are active participants in the Xen community and track all of the latest developments.

While this logical isolation works really well for the vast majority of EC2 use cases, some of our customers have regulatory or other restrictions that require physical isolation. Dedicated Instances have been introduced to address these requests.

The Specifics

Each Virtual Private Cloud (VPC) and each EC2 instance running in a VPC now has an associated tenancy attribute. Leaving the attribute set to the value “default” specifies the existing behavior: a single physical machine may run instances launched by several different AWS customers.

Setting the tenancy of a VPC to “dedicated” when the VPC is created will ensure that all instances launched in the VPC will run on single-tenant hardware. The tenancy of a VPC cannot be changed after it has been created.

You can also launch Dedicated Instances in a non-dedicated VPC by setting the instance tenancy to “dedicated” when you call RunInstances. This gives you a lot of flexibility; you can continue to use the default tenancy for most of your instances, reserving dedicated tenancy for the subset of instances that have special needs.

This is supported for all EC2 instance types with the exception of Micro, Cluster Compute, and Cluster GPU.

It is important to note that launching a set of instances with dedicated tenancy does not in any way guarantee that they’ll share the same hardware (they might, but you have no control over it). We actually go to some trouble to spread them out across several machines in order to minimize the effects of a hardware failure.
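To make the two tenancy options concrete, here is a minimal sketch that only builds request parameters and never calls AWS. The parameter names (`InstanceTenancy`, and `Tenancy` inside `Placement`) are what the boto3 `create_vpc` and `run_instances` calls accept, but boto3 is not mentioned in this post. The helper names, the AMI ID, and the subnet ID are placeholders made up for illustration.

```python
VALID_TENANCIES = ("default", "dedicated")


def vpc_params(cidr_block, tenancy="default"):
    """Build keyword arguments for creating a VPC with a given tenancy."""
    if tenancy not in VALID_TENANCIES:
        raise ValueError(f"unknown tenancy: {tenancy!r}")
    return {"CidrBlock": cidr_block, "InstanceTenancy": tenancy}


def instance_params(image_id, instance_type, subnet_id, tenancy="default"):
    """Build keyword arguments for launching one instance into a VPC subnet."""
    if tenancy not in VALID_TENANCIES:
        raise ValueError(f"unknown tenancy: {tenancy!r}")
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "SubnetId": subnet_id,
        "MinCount": 1,
        "MaxCount": 1,
        "Placement": {"Tenancy": tenancy},
    }


# Placeholder IDs for illustration only; they do not refer to real resources.
shared_vpc = vpc_params("10.0.0.0/16")
special = instance_params("ami-00000000", "m1.large", "subnet-00000000", "dedicated")
print(shared_vpc)
print(special["Placement"])
```

The sketch mirrors the flexibility described above: the VPC keeps the default tenancy, and only the instance with special needs asks for dedicated tenancy.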

Pricing

When you launch a Dedicated Instance, we can’t use the remaining “slots” on the hardware to run instances for other AWS users. Therefore, we incur an opportunity cost when you launch a single Dedicated Instance. Put another way, if you run one Dedicated Instance on a machine that can support 10 instances, 9/10ths of the potential revenue from that machine is lost to us.

In order to keep things simple (and to keep you from wasting your time trying to figure out how many instances can run on a single piece of hardware), we add a $10/hour charge whenever you have at least one Dedicated Instance running in a Region. When figured as a per-instance cost, this charge will asymptotically approach $0 (per instance) for customers that run hundreds or thousands of instances in a Region.
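The arithmetic behind that claim is easy to check. The sketch below spreads the $10/hour per-Region charge across a number of Dedicated Instances; the function name and the sample instance counts are just illustrative choices, and the instance-level premium is left out.

```python
REGION_FEE_PER_HOUR = 10.00  # the per-Region charge described above, in dollars


def fee_per_instance(instance_count):
    """Share of the hourly per-Region fee carried by each Dedicated Instance."""
    if instance_count < 1:
        return 0.0  # no Dedicated Instances running, so the fee does not apply
    return REGION_FEE_PER_HOUR / instance_count


for count in (1, 10, 100, 1000):
    print(f"{count:>5} instances: ${fee_per_instance(count):.2f} per instance-hour")
```

At 1,000 instances the per-Region charge works out to one cent per instance-hour.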

We also add a modest premium to the On-Demand pricing for the instance to represent the added value of being able to run it in a dedicated fashion. You can use EC2 Reserved Instances to lower your overall costs in situations where at least part of your demand for EC2 instances is predictable.

— Jeff;

A New Approach to Amazon EC2 Networking

You’ve been able to use the Amazon Virtual Private Cloud to construct a secure bridge between your existing IT infrastructure and the AWS cloud using an encrypted VPN connection. All communication between Amazon EC2 instances running within a particular VPC and the outside world (the Internet) was routed across the VPN connection.

Today we are releasing a set of features that expand the power and value of the Virtual Private Cloud. You can think of this new collection of features as virtual networking for Amazon EC2. While I would hate to be innocently accused of hyperbole, I do think that today’s release legitimately qualifies as massive, one that may very well change the way that you think about EC2 and how it can be put to use in your environment.

The features include:

- A new VPC Wizard to streamline the setup process for a new VPC.

- Full control of network topology including subnets and routing.

- Access controls at the subnet and instance level, including rules for outbound traffic.

- Internet access via an Internet Gateway.

- Elastic IP Addresses for EC2 instances within a VPC.

- Support for Network Address Translation (NAT).

- Option to create a VPC that does not have a VPN connection.

You can now create a network topology in the AWS cloud that closely resembles the one in your physical data center including public, private, and DMZ subnets. Instead of dealing with cables, routers, and switches you can design and instantiate your network programmatically. You can use the AWS Management Console (including a slick new wizard), the command line tools, or the APIs. This means that you could store your entire network layout in abstract form, and then realize it on demand.
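As a rough idea of what storing a layout "in abstract form" could look like, here is a small sketch that describes a VPC and its subnets as plain data and checks that the description is self-consistent. The class names, the field names, and the address ranges are all assumptions made for illustration. Turning the description into real resources would be a separate step using the console, the command line tools, or the APIs.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Subnet:
    name: str
    cidr: str
    public: bool  # True if the subnet should route to an Internet Gateway


@dataclass
class VpcLayout:
    cidr: str
    subnets: list = field(default_factory=list)

    def validate(self):
        """Check that every subnet sits inside the VPC and that none overlap."""
        vpc_net = ipaddress.ip_network(self.cidr)
        nets = [ipaddress.ip_network(s.cidr) for s in self.subnets]
        for subnet, net in zip(self.subnets, nets):
            if not net.subnet_of(vpc_net):
                raise ValueError(f"{subnet.name} ({net}) is outside {vpc_net}")
        for i, first in enumerate(nets):
            for second in nets[i + 1:]:
                if first.overlaps(second):
                    raise ValueError(f"{first} overlaps {second}")
        return True


# Hypothetical layout with one public and one private subnet.
layout = VpcLayout(
    cidr="10.0.0.0/16",
    subnets=[
        Subnet("public", "10.0.0.0/24", public=True),
        Subnet("private", "10.0.1.0/24", public=False),
    ],
)
print(layout.validate())
```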

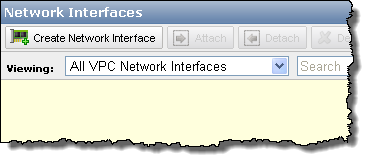

VPC Wizard

The new VPC Wizard lets you get started with any one of four predefined network architectures in under a minute:

The following architectures are available in the wizard:

- VPC with a single public subnet – Your instances run in a private, isolated section of the AWS cloud with direct access to the Internet. Network access control lists and security groups can be used to provide strict control over inbound and outbound network traffic to your instances.

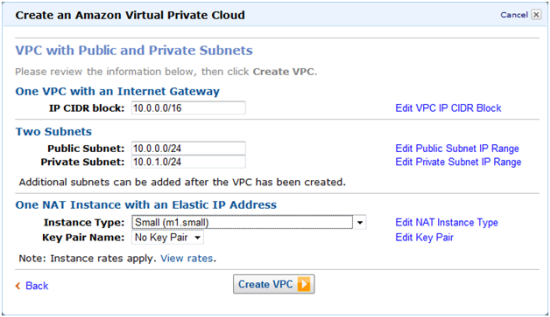

- VPC with public and private subnets – In addition to containing a public subnet, this configuration adds a private subnet whose instances are not addressable from the Internet. Instances in the private subnet can establish outbound connections to the Internet via the public subnet using Network Address Translation.

- VPC with Internet and VPN access – This configuration adds an IPsec Virtual Private Network (VPN) connection between your VPC and your data center, effectively extending your data center to the cloud while also providing direct access to the Internet for public subnet instances in your VPC.

- VPC with VPN only access – Your instances run in a private, isolated section of the AWS cloud with a private subnet whose instances are not addressable from the Internet. You can connect this private subnet to your corporate data center via an IPsec Virtual Private Network (VPN) tunnel.

You can start with one of these architectures and then modify it to suit your particular needs, or you can bypass the wizard and build your VPC piece-by-piece. The choice is yours, as is always the case with AWS.

After you choose an architecture, the VPC Wizard will prompt you for the IP addresses and other information that it needs to have in order to create the VPC:

Your VPC will be ready to go within seconds; you need only launch some EC2 instances within it (always on a specific subnet) to be up and running.

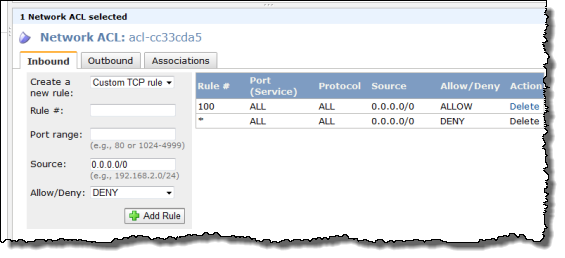

Route Tables

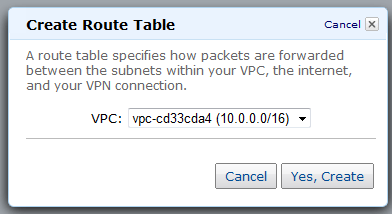

Your VPC will use one or more Route Tables to direct traffic to and from the Internet and VPN Gateways (and your NAT instance, which I haven’t told you about yet) as desired, based on the CIDR block of the destination. Each VPC has a default, or main, routing table. You can create additional routing tables and attach them to individual subnets if you’d like:

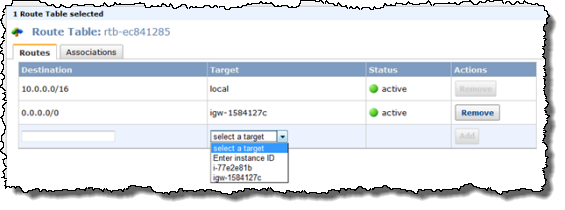
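Routing on the destination's CIDR block can be sketched in a few lines. This example assumes that the most specific matching route wins (longest-prefix match). The routes and target names are hypothetical placeholders rather than real resource IDs.

```python
import ipaddress

# Hypothetical route table: (destination CIDR, target). Targets are placeholders.
ROUTES = [
    ("10.0.0.0/16", "local"),
    ("172.16.0.0/12", "vpn-gateway"),
    ("0.0.0.0/0", "internet-gateway"),
]


def pick_target(destination, routes=ROUTES):
    """Return the target of the most specific route that contains destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The longest prefix (largest prefixlen) is the most specific match.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(pick_target("10.0.1.25"))
print(pick_target("172.16.4.9"))
print(pick_target("8.8.8.8"))
```

In this sketch, traffic inside the VPC stays local, traffic for the hypothetical data center range goes to the VPN Gateway, and everything else falls through to the Internet Gateway.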

Internet Gateways

You can now create an Internet Gateway within your VPC so that you can route traffic to and from the Internet using a Routing Table (see above). It can also be used to streamline access to other parts of AWS, including Amazon S3 (in the absence of an Internet Gateway you’d have to send traffic out through the VPN connection and then back across the public Internet to reach S3).

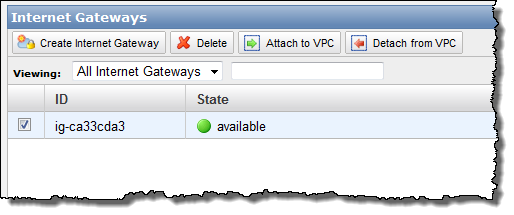

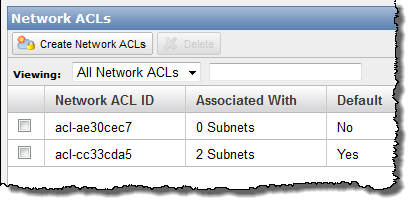

Network ACLs

You can now create and attach a Network ACL (Access Control List) to your subnets if you’d like. You have full control (using a combination of Allow and Deny rules) of the traffic that flows into and out of each subnet and gateway. You can filter inbound and outbound traffic, and you can filter on any protocol that you’d like:

You can also use AWS Identity and Access Management to restrict access to the APIs and resources related to setting up and managing Network ACLs.

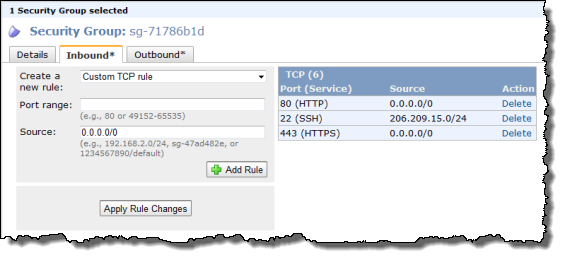
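Here is a small sketch of how a combination of Allow and Deny rules might be evaluated. It assumes that rules are checked in ascending rule-number order, that the first match decides, and that unmatched traffic is denied. The rule fields and the sample rules are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class AclRule:
    number: int        # lower numbers are evaluated first
    protocol: str      # "tcp", "udp", "icmp", or "all"
    cidr: str          # source (inbound) or destination (outbound) range
    port_range: tuple  # inclusive (low, high)
    action: str        # "allow" or "deny"


def evaluate(rules, protocol, address, port):
    """Return the action of the first matching rule, or "deny" if none match."""
    ip = ipaddress.ip_address(address)
    for rule in sorted(rules, key=lambda r: r.number):
        low, high = rule.port_range
        if (
            rule.protocol in ("all", protocol)
            and ip in ipaddress.ip_network(rule.cidr)
            and low <= port <= high
        ):
            return rule.action
    return "deny"


# Hypothetical inbound rules for a public subnet.
inbound = [
    AclRule(100, "tcp", "203.0.113.0/24", (0, 65535), "deny"),
    AclRule(110, "tcp", "0.0.0.0/0", (80, 80), "allow"),
    AclRule(120, "tcp", "0.0.0.0/0", (443, 443), "allow"),
]

print(evaluate(inbound, "tcp", "198.51.100.7", 443))
print(evaluate(inbound, "tcp", "203.0.113.9", 80))
print(evaluate(inbound, "udp", "198.51.100.7", 53))
```

Putting the Deny rule at a lower number than the Allow rules is what lets it block one address range while the rest of the Internet can still reach ports 80 and 443.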

Security Groups

You can now use Security Groups on the EC2 instances that you launch within your VPC. When used in a VPC, Security Groups gain a number of powerful new features including outbound traffic filtering and the ability to create rules that can match any IP protocol including TCP, UDP, and ICMP.

You can also change (add and remove) these security groups on running EC2 instances. The AWS Management Console sports a much-improved user interface for security groups; you can now make multiple changes to a group and then apply all of them in one fell swoop.

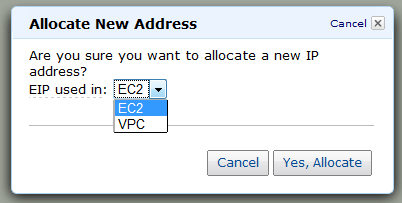

Elastic IP Addresses

You can now assign Elastic IP Addresses to the EC2 instances that are running in your VPC, with one small caveat: these addresses are currently allocated from a separate pool and you can’t assign an existing (non-VPC) Elastic IP Address to an instance running in a VPC.

NAT Addressing

You can now launch a special “NAT Instance” and route traffic from your private subnet to it. Doing this allows the private instances to initiate outbound connections to the Internet without revealing their IP addresses. A NAT Instance is really just an EC2 instance running a NAT AMI that we supply; you’ll pay the usual EC2 hourly rate for it.

ISV Support

Several companies have been working with these new features and have released (or are just about to release) some very powerful new tools. Here’s what I know about:

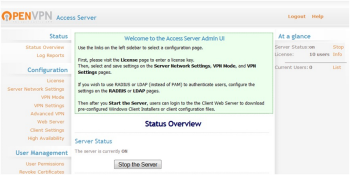

The OpenVPN Access Server is now available as an EC2 AMI and can be launched within a VPC. This is a complete, software-based VPN solution that you can run within a public subnet of your VPC. You can use the web-based administrative GUI to check status, control networking configuration, permissions, and other settings.

CohesiveFT’s VPN-Cubed product now supports a number of new scenarios.

By running the VPN-Cubed manager in the public section of a VPC, you can connect multiple IPsec gateways to your VPC. You can even do this using security appliances like the Cisco ASA, Juniper Netscreen, and SonicWall, and you don’t need BGP.

VPN-Cubed also lets you run grid and clustering products that depend on support for multicast protocols.

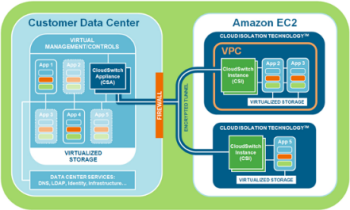

CloudSwitch further enhances VPC’s security and networking capabilities. They support full encryption of data at rest and in transit, key management, and network encryption between EC2 instances and between a data center and EC2 instances. The net-net is complete isolation of virtual machines, data, and communications with no modifications to the virtual machines or the networking configuration.

The Riverbed Cloud Steelhead extends Riverbed’s WAN optimization solutions to the VPC, making it easier and faster to migrate and access applications and data in the cloud. It is available on an elastic, subscription-based pricing model with a portal-based management system.

Pricing

I think this is the best part of the Virtual Private Cloud: you can deploy a feature-packed private network at no additional charge! We don’t charge you for creating a VPC, subnet, ACLs, security groups, routing tables, or VPN Gateway, and there is no charge for traffic between S3 and your Amazon EC2 instances in VPC. Running Instances (including NAT instances), Elastic Block Storage, VPN Connections, Internet bandwidth, and unmapped Elastic IPs will incur our usual charges.

Internet Gateways in VPC have been a high priority for our customers, and I’m excited about all the new ways VPC can be used. For example, VPC is a great place for applications that require the security provided by outbound filtering, network ACLs, and NAT functionality. Or you could use VPC to host public-facing web servers that have VPN-based network connectivity to your intranet, enabling you to use your internal authentication systems. I’m sure your ideas are better than mine; leave me a comment and let me know what you think!

— Jeff;

Updates to the AWS SDKs

We’ve made some important updates to the AWS SDK for Java, the AWS SDK for PHP, and the AWS SDK for .NET. The newest versions of the respective SDKs are available now.

AWS SDK for Java

The AWS SDK for Java now supports the new Amazon S3 Multipart Upload feature in two different ways. First, you can use the new APIs — InitiateMultipartUpload, UploadPart, CompleteMultipartUpload, and so forth. Second, you can use the SDK’s new TransferManager class. This class implements an asynchronous, higher level interface for uploading data to Amazon S3. The TransferManager will use multipart uploads if the object to be uploaded is larger than a configurable threshold. You can simply initiate the transfer (using the upload method) and proceed. Your application can poll the TransferManager to track the status of the upload.
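The threshold decision can be sketched independently of the SDK. The example below is written in Python rather than Java and does not reproduce the TransferManager's actual logic. The function name, the 16 MiB threshold, and the 5 MiB part size are assumptions chosen for illustration, not SDK defaults.

```python
MIB = 1024 * 1024


def plan_upload(object_size, threshold=16 * MIB, part_size=5 * MIB):
    """Return a list of (part_number, offset, length) tuples for an upload.

    A single-element list means the object goes up in one request.
    """
    if object_size <= threshold:
        return [(1, 0, object_size)]
    parts = []
    offset = 0
    while offset < object_size:
        length = min(part_size, object_size - offset)
        parts.append((len(parts) + 1, offset, length))
        offset += length
    return parts


print(len(plan_upload(4 * MIB)))
print(len(plan_upload(23 * MIB)))
```

Each tuple in a multipart plan corresponds to one UploadPart call, bracketed by InitiateMultipartUpload and CompleteMultipartUpload.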

The SDK’s PutObject method can now provide status updates via a new ProgressListener interface. This can be used to implement a status bar or for other tracking purposes.

We’ve also fixed a couple of bugs.

AWS SDK for PHP

The AWS SDK for PHP now supports even more services. We’ve added support for Elastic Load Balancing, the Relational Database Service, and the Virtual Private Cloud.

We have also added support for the S3 Multipart Upload, and for CloudFront Custom Origins, and you can now stream to (writing) or from (reading) an open file when transferring an S3 object. You can also seek to a specific file position before initiating a streaming transfer.

The 1000-item limit has been removed from the convenience functions; get_bucket_filesize, get_object_list, delete_all_objects, delete_all_object_versions, and delete_bucket will now operate on all of the entries in a bucket.
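Operating on every entry in a bucket generally means following a paged listing to the end. The sketch below illustrates that idea in Python against an in-memory list and does not show how the PHP SDK implements it. The page size of 1000, the marker-based paging, and the function names are assumptions.

```python
PAGE_LIMIT = 1000  # assumed maximum number of keys returned per listing request


def fetch_page(all_keys, marker=None, limit=PAGE_LIMIT):
    """Stand-in for one listing request: keys after marker, plus a truncation flag."""
    start = 0 if marker is None else all_keys.index(marker) + 1
    page = all_keys[start:start + limit]
    truncated = start + limit < len(all_keys)
    return page, truncated


def list_all(all_keys):
    """Yield every key by requesting pages until the listing is no longer truncated."""
    marker = None
    while True:
        page, truncated = fetch_page(all_keys, marker)
        yield from page
        if not truncated:
            break
        marker = page[-1]


# Hypothetical bucket contents used only to exercise the paging loop.
bucket = [f"object-{n:05d}" for n in range(2500)]
print(len(list(list_all(bucket))))
```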

We’ve also fixed a number of bugs.

AWS SDK for .NET

The AWS SDK for .NET now supports the Amazon S3 Multipart Upload feature using the new APIs (InitiateMultipartUpload, UploadPart, CompleteMultipartUpload, etc.) as well as a new TransferUtility class that automatically determines when to upload objects using the Multipart Upload feature.

We’ve also added support for CloudFront Custom Origins and fixed a few bugs.

These SDKs (and a lot of other things) are produced by the AWS Developer Resource team. They are hiring and have the following open positions:

- Ruby Software Development Engineer

- Software Development Engineer

- Software Development Engineer – Windows/.NET

— Jeff;

AWS Management Console Support for the Amazon Virtual Private Cloud

The AWS Management Console now supports the Amazon Virtual Private Cloud (VPC). You can now create and manage a VPC and all of the associated resources including subnets, DHCP Options Sets, Customer Gateways, VPN Gateways and the all-important VPN Connection from the comfort of your browser.

Put it all together and you can create a secure, seamless bridge between your existing IT infrastructure and the AWS cloud in a matter of minutes. You’ll need to get some important network addressing information from your network administrator beforehand, and you’ll need their help to install a configuration file for your customer gateway.

Here are some key VPC terms that you should know before you read the rest of this post (these were lifted from the Amazon VPC Getting Started Guide):

VPC – An Amazon VPC is an isolated portion of the AWS cloud populated by infrastructure, platform, and application services that share common security and interconnection. You define a VPC’s address space, security policies, and network connectivity.

Subnet – A segment of a VPC’s IP address range that Amazon EC2 instances can be attached to.

VPN Connection – A connection between your VPC and data center, home network, or co-location facility. A VPN connection has two endpoints: a Customer Gateway and a VPN Gateway.

Customer Gateway – Your side of a VPN connection that maintains connectivity.

VPN Gateway – The Amazon side of a VPN connection that maintains connectivity.

Let’s take a tour through the new VPC support in the console. As usual, it starts out with a new tab in the console’s menu bar:

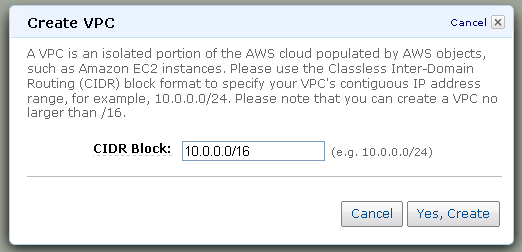

The first step is to create a VPC by specifying its IP address range using CIDR notation. I’ll create a “/16” to allow up to 65536 instances (the actual number will be slightly less because VPC reserves a few IP addresses in each subnet) in my VPC:

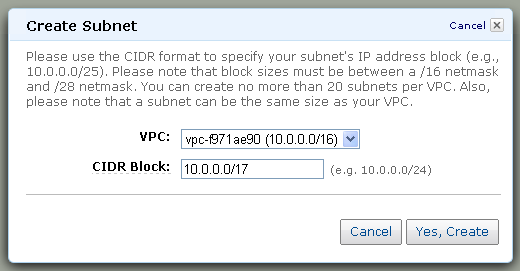

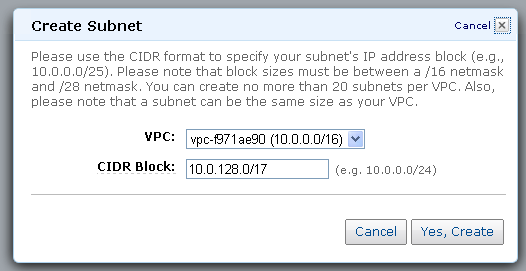

The next step is to create one or more subnets within the IP address range of the VPC. I’ll create a pair, each one covering half of the overall IP address range of my VPC:

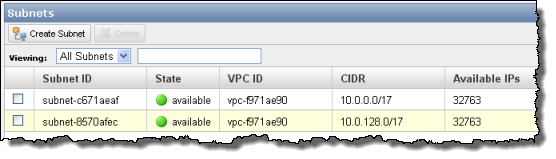

The console shows all of the subnets and the number of available IP addresses in each one:

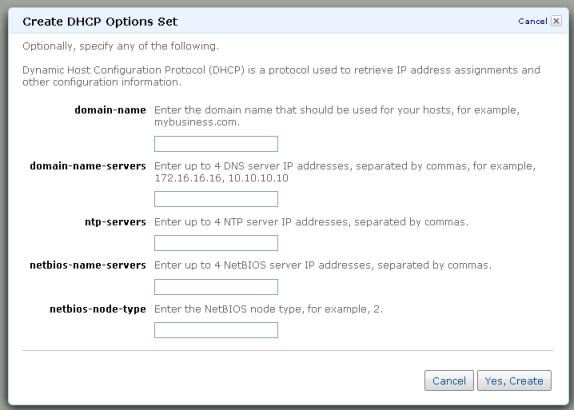
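The address arithmetic behind these steps can be checked with Python's standard `ipaddress` module. The 10.0.0.0/16 range is an assumed example, since the post does not state the actual range. The figure of five reserved addresses per subnet is also an assumption, taken from current AWS documentation rather than from this post.

```python
import ipaddress

RESERVED_PER_SUBNET = 5  # assumption: addresses held back by VPC in each subnet

vpc = ipaddress.ip_network("10.0.0.0/16")  # assumed example range
print(vpc.num_addresses)

# Split the VPC range into two halves, one prefix bit longer than the VPC itself.
halves = list(vpc.subnets(prefixlen_diff=1))
for subnet in halves:
    usable = subnet.num_addresses - RESERVED_PER_SUBNET
    print(subnet, subnet.num_addresses, usable)
```

A “/16” leaves 16 bits for host addresses, which is where the 65536 figure comes from, and each half is a “/17” with 32768 addresses before the reserved ones are subtracted.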

You can choose to create a DHCP Option Set for additional control of domain names, IP addresses, NTP servers, and NetBIOS options. In many cases the default option set will suffice.

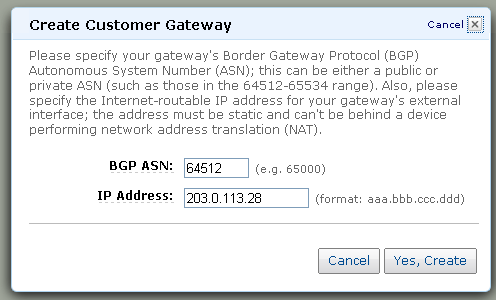

And the next step is to create a Customer Gateway to represent the VPN device on the existing network (be sure to use the BGP ASN and IP Address of your own network):

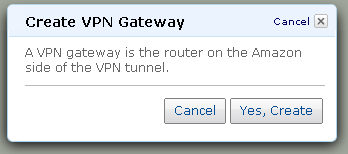

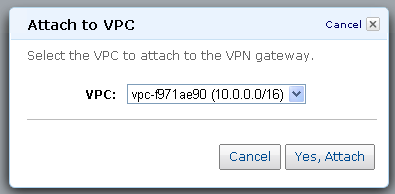

We’re almost there! The next step is to create a VPN Gateway (to represent the VPN device on the AWS cloud) and to attach it to the VPC:

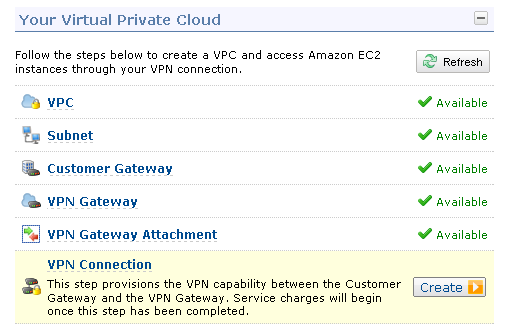

The VPC Console Dashboard displays the status of the key elements of the VPC:

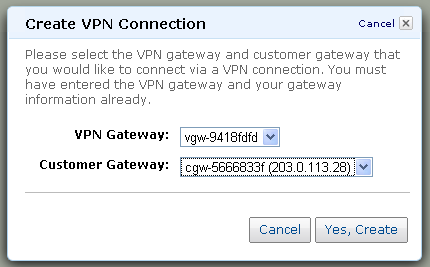

With both ends of the connection ready, the next step is to make the connection between your existing network and the AWS cloud:

This step (as well as some of the others) can take a minute or two to complete.

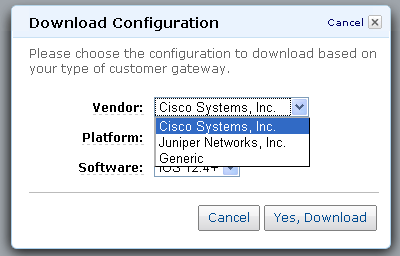

Now it is time to download the configuration information for the customer gateway.

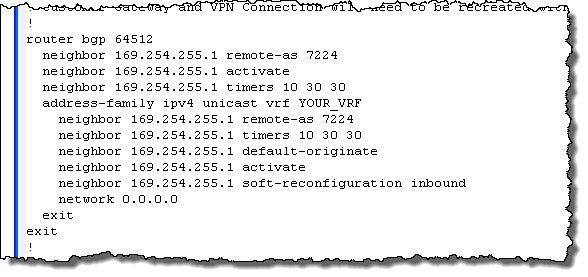

The configuration information is provided as a text file suitable for use with the specified type of customer gateway:

Once the configuration information has been loaded into the customer gateway, the VPN tunnel can be established and it will be possible to make connections from within the existing network to the newly launched EC2 instances.

I think that you’ll agree that this new feature really simplifies the process of setting up a VPC, making it accessible to just about any AWS user. What do you think?

–Jeff;