Category: Security

The New AWS Security Blog

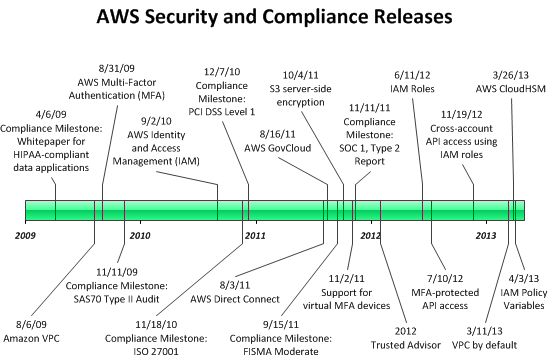

The AWS team works non-stop to improve the security of our services. As you can see from the timeline below, many of our recent releases have made it easier for you to secure your cloud resources.

The new AWS Security Blog is your one-stop shop for best practices, how-to guides, customer stories, and more. Like the existing Java, Mobile, and Ruby blogs, the AWS Security Blog focuses on a single topic. We are thinking about creating other blogs of this type; please leave a comment to suggest a topic.

Here’s a timeline of the most recent security and compliance releases:

The following table provides more detail:

| Date | Security or Compliance Event | Description |
| --- | --- | --- |
| 4/3/13 | IAM Policy Variables | Create policies containing variables that are evaluated dynamically using context from the authenticated user’s session. |
| 3/26/13 | AWS CloudHSM | Use dedicated Hardware Security Module (HSM) appliances within the AWS Cloud. |
| 3/11/13 | VPC by default | EC2 instances will be launched in a VPC for new customers. |
| 11/19/12 | Cross-account API access using IAM roles | Delegate temporary API access to AWS services and resources within your AWS account without having to share long-term security credentials. |
| 7/10/12 | MFA-protected API access | Enforce MFA for AWS service APIs via AWS Identity and Access Management (IAM) policies. |
| 6/11/12 | IAM Roles | Makes it simpler for applications running on EC2 instances to securely access AWS service APIs. |
| 1/30/12 | AWS Trusted Advisor | Self-service access to proactive alerts that identify opportunities to save money, improve system performance, or close security gaps. |
| 11/11/11 | Compliance Milestone: SOC 1, Type 2 Report | |
| 11/2/11 | Support for virtual MFA devices | Use your existing smartphone, tablet, or computer running any application that supports the open TOTP standard. |
| 10/4/11 | S3 server-side encryption | Request encrypted storage when you store a new object in Amazon S3 or when you copy an existing object. |
| 9/15/11 | Compliance Milestone: FISMA Moderate | |
| 8/16/11 | AWS GovCloud | AWS Region designed to allow US government agencies and customers to move more sensitive workloads into the cloud by addressing their specific regulatory and compliance requirements. |
| 8/3/11 | AWS Direct Connect | Enables you to bypass the public Internet when connecting to AWS. |
| 12/7/10 | Compliance Milestone: PCI DSS Level 1 | |
| 11/18/10 | Compliance Milestone: ISO 27001 | |
| 9/2/10 | AWS Identity and Access Management (IAM) | Enables you to securely control access to AWS services and resources for your users. |
| 11/11/09 | Compliance Milestone: SAS 70 Type II Audit | |
| 8/31/09 | AWS Multi-Factor Authentication (MFA) | Provides an extra level of security that you can apply to your AWS environment. |
| 8/26/09 | Amazon VPC | Provision a logically isolated section of the Amazon Web Services (AWS) Cloud where you can launch AWS resources in a virtual network that you define. |
| 4/6/09 | Compliance Milestone: whitepaper for HIPAA-compliant data applications | |
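
The IAM Policy Variables and MFA-protected API access entries can be combined in a single policy. Here is a minimal sketch, assuming a hypothetical bucket named `example-bucket`, that builds such a policy document: each IAM user may list and use only the keys under a prefix matching their user name, and object reads and writes additionally require that the request was authenticated with MFA. Python only assembles the JSON; IAM substitutes `${aws:username}` and evaluates the `aws:MultiFactorAuthPresent` condition at request time. Policy variables are recognized only with policy language version `2012-10-17`.

```python
import json

# Hypothetical bucket name, used only for illustration.
BUCKET = "example-bucket"


def home_folder_policy(bucket: str) -> dict:
    """Build a policy that confines each IAM user to their own S3 prefix.

    ${aws:username} is NOT substituted here; IAM replaces it at request
    time with the name of the authenticated user.
    """
    return {
        # Policy variables are only recognized in this policy language version.
        "Version": "2012-10-17",
        "Statement": [
            {
                # Let users list only the keys under their own prefix.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": "${aws:username}/*"}},
            },
            {
                # Read and write objects under that prefix, but only when the
                # caller authenticated with MFA.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                # Doubled braces make the f-string emit a literal ${aws:username}.
                "Resource": f"arn:aws:s3:::{bucket}/${{aws:username}}/*",
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            },
        ],
    }


policy = home_folder_policy(BUCKET)
print(json.dumps(policy, indent=2))
```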

Check out the AWS Security Blog and let us know what you think.

— Jeff;

AWS CloudHSM – Secure Key Storage and Cryptographic Operations

Back in the early days of AWS, I would often receive questions that boiled down to “This sounds really interesting, but what about security?”

We created the AWS Security & Compliance Center to publish information about the various reports, certifications, and independent attestations that we’ve earned, and to provide you with additional information about the security features that we’ve built into AWS, including Identity and Access Management, Multi-Factor Authentication, Key Rotation, support for server-side and client-side encryption in Amazon S3, and SSL support in the Elastic Load Balancer. The Security & Compliance Center is also home to the AWS Risk and Compliance White Paper and the AWS Overview of Security Processes.

Today we are adding another powerful security option, the AWS CloudHSM service. While the features listed above are more than adequate in most situations, some of our customers face contractual or regulatory requirements that mandate additional protection for their keys. The CloudHSM service helps these customers meet strict key management requirements without sacrificing application performance.

What is an HSM and What Does it Do?

HSM is short for Hardware Security Module. It is a piece of hardware — a dedicated appliance that provides secure key storage and a set of cryptographic operations within a tamper-resistant enclosure. You can store your keys within an HSM and use them to encrypt and decrypt data while keeping them safe and sound and under your full control. You are the only one with access to the keys stored in an HSM.

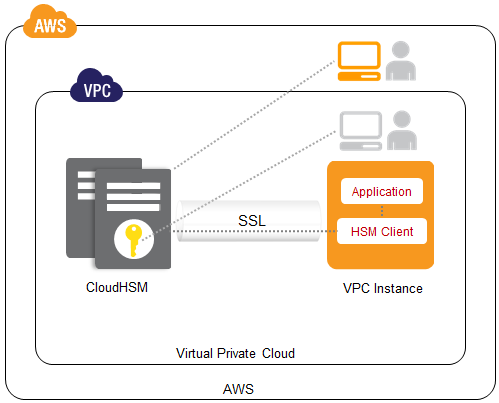

The AWS CloudHSM Service

The AWS CloudHSM service brings the benefits of HSMs to the cloud. You retain full control of the keys and the cryptographic operations performed by the HSM(s) you create, including exclusive, single-tenant access to each one. Your cryptographic keys are protected by a tamper-resistant HSM that is designed to meet a number of international and US Government standards including NIST FIPS 140-2 and Common Criteria EAL4+.

Each of your CloudHSMs has an IP address within your Amazon Virtual Private Cloud (VPC). You’ll receive administrator credentials for the appliance, allowing you to create and manage cryptographic keys, create user accounts, and perform cryptographic operations using those accounts. We do not have access to your keys; they remain under your control at all times. In Luna SA terminology, we have Admin credentials and you have both HSM Admin and HSM Partition Owner credentials.

AWS CloudHSM is now available in multiple Availability Zones in the US East (Northern Virginia) and EU West (Ireland) Regions. We’ll be making it available in other Regions throughout 2013 based on customer demand.

Inside the AWS CloudHSM

We are currently providing the Luna SA HSM appliance from SafeNet, Inc. The appliances run version 5 of the Luna SA software.

Once your AWS CloudHSM is provisioned, you can access it through a number of standard APIs, including PKCS #11 (Cryptographic Token Interface Standard), the Microsoft Cryptography API (CAPI), and Java JCA/JCE (Java Cryptography Architecture / Java Cryptography Extension). The Luna SA client provides these APIs to your applications and implements each call by connecting to your CloudHSM over a mutually authenticated SSL connection. If you have existing applications that run on top of these APIs, you can use them with CloudHSM in short order.
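
The Luna SA client sets up the mutually authenticated connection for you, but the idea is easy to see with Python’s standard `ssl` module (3.7 or later). This is a generic sketch, not the Luna client’s implementation: the client verifies the server’s certificate against a trusted CA and, when given a certificate and key, presents its own certificate so that the server can authenticate the client in turn. The function name and any file paths you pass to it are hypothetical.

```python
import ssl
from typing import Optional


def make_mutual_tls_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Return a client-side TLS context suitable for mutual authentication."""
    # PROTOCOL_TLS_CLIENT turns on server-certificate verification
    # and hostname checking by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if ca_file:
        # Trust only the CA that issued the server (appliance) certificate.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        # Present a client certificate so the server can authenticate us too.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


# With real (hypothetical) files you would call, for example:
# make_mutual_tls_context("server-ca.pem", "client.pem", "client.key")
ctx = make_mutual_tls_context()
```

The resulting context would then wrap a socket with `ctx.wrap_socket(sock, server_hostname=...)`; both sides prove their identity before any application data is exchanged.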

Getting Started

The CloudHSM service is available today in the US-East and EU-West Regions. You’ll pay a one-time upfront fee (currently $5,000 per HSM) and an hourly rate (currently $1.88 per hour, or $1,373 per month on average, for CloudHSM service in the US-East Region). Consult the CloudHSM pricing page for more info.
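
The monthly figure appears to be the hourly rate multiplied by the average number of hours in a month (365.25 × 24 / 12 = 730.5). The sketch below reproduces that arithmetic and adds the one-time fee to estimate a first-year total for a single HSM. It uses only the rates quoted above, and `cloudhsm_cost` is a hypothetical helper name.

```python
# Average hours in a month, assuming 365.25 days per year.
HOURS_PER_MONTH = 365.25 * 24 / 12  # 730.5


def cloudhsm_cost(months: int, upfront: float = 5000.0, hourly: float = 1.88) -> float:
    """Total cost of one HSM over `months`, using the rates quoted in this post."""
    return upfront + hourly * HOURS_PER_MONTH * months


monthly = 1.88 * HOURS_PER_MONTH
print(f"Average monthly hourly charges: ${monthly:,.2f}")
print(f"First-year total for one HSM: ${cloudhsm_cost(12):,.2f}")
```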

To get started with CloudHSM or to learn more, contact us. You can also schedule a short trial period using the same link.

In most cases we can satisfy requests for one or two CloudHSMs per customer within a few business days. Requests for more than two may take several weeks.

Learn More

To learn more about CloudHSM, read the CloudHSM FAQ, the CloudHSM Getting Started Guide, and the CloudHSM Home Page.

— Jeff;

The AWS CISO on AWS Security

As you can tell by looking at the AWS Security and Compliance Center, we take security seriously. You can find information about our certifications and accreditations in the center, along with links to four security white papers.

I would also like to recommend a new AWS video to you. In the video, AWS VP and CISO Stephen Schmidt discusses security and privacy in the AWS Cloud, in concrete and specific terms:

Here are my favorite quotes from the video:

No hard drive leaves our facilities intact. Period.

I run security for the company. I don’t have access to our data centers because I don’t need to be there on a regular basis.

If you happen to talk to someone who doesn’t quite grasp what cloud security means, please share this video with them and let me know what happens.

— Jeff;

Updated AWS Security White Paper; New Risk and Compliance White Paper

We have updated the AWS Security White Paper and we’ve created a new Risk and Compliance White Paper. Both are available now.

The AWS Security White Paper describes our physical and operational security principles and practices.

It includes a description of the shared responsibility model, a summary of our control environment, a review of secure design principles, and detailed information about the security and backup considerations related to each part of AWS including the Virtual Private Cloud, EC2, and the Simple Storage Service.

The new AWS Risk and Compliance White Paper covers a number of important topics including (again) the shared responsibility model, additional information about our control environment and how to evaluate it, and detailed information about our certifications and third-party attestations. A section on key compliance issues addresses a number of topics that we are asked about on a regular basis.

The AWS Security team and the AWS Compliance team are complementary organizations and are responsible for the security infrastructure, practices, and compliance programs described in these white papers. The AWS Security team is headed by our Chief Information Security Officer and is based outside Washington, DC. Like most parts of AWS, this team is growing and has a number of open positions:

- IT Security Software Development Engineer

- Software Development Engineer – Amazon Web Services

- IT Security Software Development Engineer

We also have a number of security-related positions open in Seattle:

- Software Development Engineer – EC2 Network Security

- Senior Software Development Engineer – EC2 Network Security

- Senior Software Development Engineer – EC2

- Application Security Engineer

- Software Development Engineer – AWS Identity & Access

- Software Development Engineer – AWS Identity & Access

- Software Development Engineer – AWS Identity & Access

- Software Development Engineer

- Software Development Engineer

- System Engineer

- Principal Product Manager – AWS Identity and Access Management

— Jeff;

AWS Achieves PCI DSS 2.0 Validated Service Provider Status

If your application needs to process, store, or transmit credit card data, you are probably familiar with the Payment Card Industry Data Security Standard, otherwise known as PCI DSS. This standard specifies best practices and security controls needed to keep credit card data safe and secure during transit, processing, and storage. Among other things, it requires organizations to build and maintain a secure network, protect cardholder data, maintain a vulnerability management program, implement strong security measures, test and monitor networks on a regular basis, and to maintain an information security policy.

I am happy to announce that AWS has achieved validated Level 1 service provider status for PCI DSS. Our compliance with PCI DSS v2.0 has been validated by an independent Qualified Security Assessor (QSA). AWS’s status as a validated Level 1 Service Provider means that merchants and other service providers now have access to a computing platform that has been verified to conform to PCI standards. Merchants and service providers that need to certify against PCI DSS and maintain their own certification can now leverage the benefits of the AWS cloud, and can even simplify their own PCI compliance efforts by relying on AWS’s status as a validated service provider. Our validation covers the services that are typically used to manage a cardholder environment, including Amazon Elastic Compute Cloud (EC2), Amazon Simple Storage Service (S3), Amazon Elastic Block Store (EBS), and Amazon Virtual Private Cloud (VPC).

Our Qualified Security Assessor has submitted a complete Report on Compliance and a fully executed Attestation of Compliance to Visa as of November 30, 2010. AWS will appear on Visa’s list of validated service providers in the near future.

Until recently, it was unthinkable even to consider attaining PCI compliance within a virtualized, multi-tenant environment. PCI DSS version 2.0, the newest version of the DSS, published in late October 2010, provides guidance for dealing with virtualization but none for multi-tenant environments. However, even without multi-tenancy guidance, we were able to work with our PCI assessor to document our security management processes, PCI controls, and compensating controls to show how our core services effectively and securely segregate each AWS customer within their own protected environment. Our PCI assessor found that our security and architecture conform to the new PCI standard and verified our compliance.

Even if your application doesn’t process, store, or transmit credit card data, you should find this validation helpful since PCI DSS is often viewed as a good indicator of the ability of an organization to secure any type of sensitive data. We expect that our enterprise customers will now consider moving even more applications and critical data to the AWS cloud as a result of this announcement.

Update: Many people have asked us if they need to launch some sort of special PCI compliant environment. They do not need to do so. The entire infrastructure that supports EC2, S3, EBS and VPC is compliant and there is no separate environment or special API to use. Any server or storage object deployed in these services is in a PCI compliant environment, globally.

Learn more by reading our new PCI DSS FAQ.

— Jeff;

Security, compliance, and governance in the cloud with AWS and Freedom OSS

- Werner Vogels, Amazon Web Services CTO

- Max Yankelevich, Freedom OSS Chief Cloud Architect

- Joel Davne, Freedom OSS CEO

- Yours truly — Steve Riley, Amazon Web Services technical evangelist

If you’re in Chicago, please join us and learn how, together with AWS and Freedom OSS, you can:

- Establish a single, flexible interface to initiate both self-service as well as engineer-assisted activities on the cloud.

- Significantly reduce the time to market for mission-critical applications on AWS and reap the full benefits of cloud computing.

- Use smart cards to ensure compliance with NIST FIPS 140-2, ISO 11568, and FFIEC, and with standards such as HIPAA, PCI, SAS, and GLBA

- Use DDoS prevention solutions that detect attacks and provision defenses in real time to protect your data stored in the cloud

Max and Joel, from Freedom OSS, will appear live. Werner and Steve, from AWS, will appear “via satellite” — or, to be more technically accurate, “via cloud.”

Reserve your spot today: visit our announcement page for the full agenda, event venue details, and to register.

Getting Secure *In* the Cloud: Hosted Two-factor Authentication

To help you understand why I think this is so cool, a brief security lesson is in order. Three important principles are necessary to ensure that the right people are doing the right things in any information system:

- Identification. This is how you assert who you are to your computer. Typically it’s your user name; it could also be the subject name on a digital certificate.

- Authentication. This is how you prove your identity assertion. The computer won’t believe you until you can demonstrate knowledge of a secret that the computer can then verify. Typically it’s your password; it could also be the private key associated with a digital certificate. Well-designed authentication sequences never send the actual secret over the wire; instead, the secret is used to compute a message that is difficult to reverse. Since (presumably) only you know your secret, the computer accepts your claim as valid.

- Authorization. This is what you’re allowed to do once the computer grants you access.
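
The idea that a secret can be proven without crossing the wire can be illustrated with a simple HMAC challenge-response exchange. This is a minimal sketch, not any particular product’s protocol: the server sends a fresh random nonce, the client returns HMAC-SHA256(secret, nonce), and the server recomputes the value and compares it in constant time. The shared key is a hypothetical stand-in for a secret both sides already hold.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Server side: a fresh, unpredictable nonce for each login attempt."""
    return secrets.token_bytes(16)


def respond(secret: bytes, challenge: bytes) -> bytes:
    """Client side: prove knowledge of the secret without sending it."""
    return hmac.new(secret, challenge, hashlib.sha256).digest()


def verify(secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Server side: recompute the expected response and compare in constant time."""
    expected = hmac.new(secret, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


shared_secret = secrets.token_bytes(32)  # hypothetical pre-shared key
challenge = make_challenge()
response = respond(shared_secret, challenge)
print(verify(shared_secret, challenge, response))  # True
print(verify(b"wrong guess", challenge, response))  # False
```

Because the nonce changes on every attempt, an eavesdropper who captures one response cannot replay it against a later challenge.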

Unfortunately, humans aren’t very good at generating decent secrets and frequently fail at keeping them secret. Multi-factor authentication mitigates this carbon problem by requiring an additional burden of proof. Authentication factors come in many varieties:

- Something you know. A password; a PIN; a response to a challenge.

- Something you have. A token; a smartcard; a mobile phone; a passport; a wristband.

- Something you are or do. A tamper- and theft-resistant biometric characteristic; the distinct pattern of the way you type on a keyboard; your gait. (Note: I disqualify fingerprints as authenticators because they aren’t secret: you leave yours everywhere you go, and they are easy to forge. Fingerprints are identifiers.)

Strong authentication combines at least two of these. My preference is for one from the “know” category and one from the “have” category because individually the elements are useless and because the combination is easy to deploy (you’d quickly tire of having to walk 100 paces in front of your computer each time you logged on!).
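
A common “have” factor is a token that displays a short code derived from a shared secret and the current time. Such codes are usually produced by the open TOTP algorithm (RFC 6238), which runs HOTP (RFC 4226) over the number of 30-second steps since the Unix epoch. Here is a minimal standard-library sketch; it is not the documented implementation of any particular token or product. The key is the ASCII test secret from the RFC’s SHA-1 test vectors, used only for illustration.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte choose an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


# ASCII test key from the RFC 6238 SHA-1 test vectors (illustration only).
key = b"12345678901234567890"
print(totp(key, at=59, digits=8))  # 94287082, per RFC 6238
print(totp(key))                   # the current 6-digit code
```

The token and the server share only the key; each code is valid for one short time step, so a stolen code quickly becomes useless.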

Several products in the “have” category compete for your attention. The DS3 CloudAS supports many common tokens, so you have a choice of which vendor’s token to use. In some cases you might require a dedicated hardware device that generates a time-sequenced one-time code. My favorite item in the “have” category is a mobile phone. Let me illustrate why.

Mobile phones provide out-of-band authentication. Phishing succeeds because bad guys get you to reveal your password and then log into your bank account and clear you out. Imagine that a bank’s website incorporates transaction authentication by sending a challenge to your pre-registered mobile phone and then waiting for you to enter that challenge on the web page before it proceeds. This technique pretty much eliminates phishing as an attack vector, because an attacker would need to know your ID, know your password, and steal your phone. Indeed, the idea isn’t really imaginary: it’s already in place at many banks around the world. (These are the smart banks that realize two-factor authentication just for logon isn’t sufficient.)
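
The transaction-authentication flow described above can be sketched as follows. This is a simplified illustration under stated assumptions, not any bank’s or product’s implementation: the server generates a random six-digit code bound to one specific transaction, delivers it through a separate channel (the `send_to_phone` callback is a hypothetical stand-in for an SMS or push gateway), and approves the transaction only if the user enters the matching code once, before it expires.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class PendingChallenge:
    code: str
    expires_at: float


class TransactionAuthenticator:
    """Binds a one-time code to a single transaction and checks it once."""

    def __init__(self, send_to_phone: Callable[[str, str], None], ttl_seconds: float = 120.0):
        self._send = send_to_phone  # hypothetical out-of-band delivery channel
        self._ttl = ttl_seconds
        self._pending: Dict[str, PendingChallenge] = {}

    def start(self, transaction_id: str, phone: str) -> None:
        # A fresh random code per transaction; a phisher never sees it
        # unless they also control the registered phone.
        code = f"{secrets.randbelow(10**6):06d}"
        self._pending[transaction_id] = PendingChallenge(code, time.time() + self._ttl)
        self._send(phone, code)

    def confirm(self, transaction_id: str, entered_code: str) -> bool:
        # pop() makes each code single-use, whether or not it matches.
        challenge = self._pending.pop(transaction_id, None)
        if challenge is None or time.time() > challenge.expires_at:
            return False
        return hmac.compare_digest(challenge.code.encode(), entered_code.encode())


# Usage with a fake "phone" that just records delivered codes.
outbox: Dict[str, str] = {}
auth = TransactionAuthenticator(lambda phone, code: outbox.__setitem__(phone, code))
auth.start("txn-42", "+1-555-0100")
first = auth.confirm("txn-42", outbox["+1-555-0100"])   # True
second = auth.confirm("txn-42", outbox["+1-555-0100"])  # False: already used
print(first, second)
```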

> Steve <

Updated: AWS Security Whitepaper

- A description of the AWS control environment

- A list of our SAS-70 Type II Control Objectives

- Some discussion of risk management and shared responsibility principles

- Greater visibility into our monitoring and communication processes and our employee lifecycle

- Descriptions of our physical security, environmental safeguards, configuration management, and business continuity management processes and plans

- Updated summaries of new AWS security features

- Additional detail about the security attributes of various AWS components

The additional information and greater level of detail should help answer many common questions. As always, feel free to reach out to us if you still need more information.

> Steve <

What’s New in AWS Security: Vulnerability Reporting and Penetration Testing

Security is a top priority for Amazon Web Services. Providing a trustworthy infrastructure for you to develop and deploy applications is a responsibility we take very seriously. One important aspect of gaining your trust is being open and transparent about our security processes and continually working toward achieving industry-recognized certifications. Other important aspects include providing you with mechanisms for contacting us about potential security issues and enabling you to conduct security tests of the applications you deploy on AWS. I’m pleased to announce today two new policies: one that outlines our vulnerability reporting process and one that describes how to receive permission to conduct penetration tests of the applications running on your EC2 instances.

A new page in the AWS Security Center describes our vulnerability reporting process. The process is a high priority for us, is human-driven, and is governed by a service level commitment. Like other technology providers, we believe in the concept of responsible disclosure: let’s work together to protect everyone.

Another page in the Security Center describes our penetration testing procedure. Normally, conducting such tests violates our Acceptable Use Policy because these tests are often indistinguishable from real attacks. However, external testing is an important phase of development and deployment for achieving higher degrees of application security. We put the procedure in place so that we won’t respond to your testing as if your instances were under attack.

The e-mail address aws-security@amazon.com is your single point of contact for all things security-related. If you need to contact us about a particularly sensitive issue, you can encrypt your message with our PGP public key. And, of course, if you suspect abuse of EC2 or other AWS services, our abuse reporting process remains in place.

Finally, a small navigational change. We’ve moved the bulletins off the main page and onto a separate security bulletin list and changed the format so that all bulletins are displayed rather than just the most recent five.

As always, we welcome your comments and feedback. We’re here to help you succeed!

> Steve <

Building three-tier architectures with security groups

Update (17 June): I’ve changed the command-line examples to reflect current capabilities of our SOAP and Query APIs. They do, in fact, allow specifying a protocol and port range when you’re using another security group as the traffic origin. Our Management Console will support this functionality at a later date.

During a recent webcast an attendee asked a question about building multi-tier architectures on AWS. Unlike traditional on-premises physical deployments, AWS’s virtualization of compute, storage, and network elements requires you to think differently about how to build network segregation into your projects. There are no distinct physical networks, no VLANs, and no DMZs. So how can you construct the equivalent of a traditional three-tier architecture?

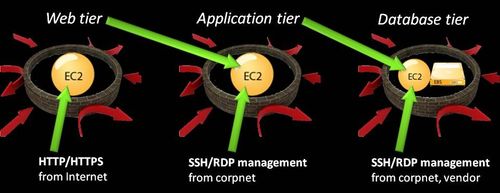

Our security whitepaper alludes to the possibility (pp. 5-6, November 2009 edition). In my security presentations I show this diagram to illustrate conceptually how a three-tier architecture can be built:

Security groups: a quick review

Before we explore how to define the architecture, let’s take a moment to review some critical details about how security groups work.

A security group is a semi-stateful firewall (more on this in a moment) that contains one or more rules defining which traffic is permitted into an instance. Rules contain the following elements:

- The permitted protocol (TCP, UDP, or ICMP)

- The permitted destination port range (more on this in a moment, too)

- The permitted source IP address range or originating security group

Now there are three particular aspects I’d like to call your attention to. First: security groups are semi-stateful because changes made to their rules don’t apply to any in-progress connections. Say that you currently have a rule permitting inbound traffic to port 3579/tcp, and that there are right now five inbound connections to this port. If you delete the rule from the group, the group blocks any new inbound requests to port 3579/tcp but doesn’t terminate the existing five connections. This behavior is intentional; I want to ensure everyone understands this. In all other respects, security groups behave like traditional stateful firewalls.
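
To make the semi-stateful behavior concrete, here is a toy model (illustrative only, not how EC2 is implemented) of a firewall that consults its rules only when a connection is opened. Deleting a rule blocks new connections to that port, while the five connections already established keep flowing, as in the 3579/tcp example above.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

Rule = Tuple[str, int]  # (protocol, destination port), simplified for illustration


@dataclass
class ToySecurityGroup:
    rules: Set[Rule] = field(default_factory=set)
    established: Set[Tuple[str, str, int]] = field(default_factory=set)

    def open_connection(self, client: str, protocol: str, port: int) -> bool:
        # Rules are consulted only when a new connection is attempted.
        if (protocol, port) in self.rules:
            self.established.add((client, protocol, port))
            return True
        return False

    def send(self, client: str, protocol: str, port: int) -> bool:
        # Traffic on an existing connection is admitted by connection state.
        return (client, protocol, port) in self.established

    def revoke(self, protocol: str, port: int) -> None:
        # Removing a rule does not tear down existing connections.
        self.rules.discard((protocol, port))


sg = ToySecurityGroup(rules={("tcp", 3579)})
for i in range(5):
    sg.open_connection(f"client-{i}", "tcp", 3579)

sg.revoke("tcp", 3579)
print(sg.send("client-0", "tcp", 3579))             # True: existing connection survives
print(sg.open_connection("client-5", "tcp", 3579))  # False: new connection refused
```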

The second aspect is our terminology for port ranges. This often confuses people new to AWS. The traditional usage of the words “from” and “to” in security-speak describes traffic direction: “from” indicates the source and “to” indicates the destination. This isn’t the case when defining rules for security groups. Instead, security group rules concern themselves only with destination ports; that is, the ports on your instances listening for incoming connections. The “from port” and “to port” in a security group rule indicate the starting and ending port numbers for occasions when you need to define a range of listening ports. In most cases you need to allow only a single port, so the values for “from port” and “to port” will be the same.
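
Here is a short sketch of how the “from port” and “to port” fields are read: both bound the inclusive range of destination (listening) ports on your instance, and the client’s source port is never consulted. The `Rule` class, its field names, and the application port range are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str
    from_port: int  # first listening port in the range
    to_port: int    # last listening port in the range (inclusive)

    def permits_port(self, protocol: str, dest_port: int) -> bool:
        # Only the destination port on the instance matters; the client's
        # ephemeral source port plays no part in the decision.
        return protocol == self.protocol and self.from_port <= dest_port <= self.to_port


https = Rule("tcp", 443, 443)        # single port: from_port == to_port
app_range = Rule("tcp", 8000, 8010)  # hypothetical application port range

print(https.permits_port("tcp", 443))       # True
print(app_range.permits_port("tcp", 8005))  # True
print(app_range.permits_port("tcp", 8011))  # False
```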

This leads to the third aspect I’d like to discuss: how to define traffic sources. The most common method is to specify a protocol along with an individual source IP address, a range of IP addresses using CIDR notation, or the entire Internet (using 0.0.0.0/0). The other way to define a traffic source is to supply the name of some other security group you’ve already created. Here’s the magic jewel for creating three-tier architectures; it’s this capability that answered the person’s question on the webcast.
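
The two ways of naming a traffic source can be modeled like this: a CIDR source matches by address, while a security group source matches any sender that belongs to the named group, regardless of its IP address. This sketch uses Python’s `ipaddress` module; the `Source` type and the idea of passing the sender’s group memberships explicitly are assumptions made for illustration, and the corporate range is a documentation prefix.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Source:
    cidr: Optional[str] = None   # e.g. "0.0.0.0/0" for the entire Internet
    group: Optional[str] = None  # name of another security group

    def matches(self, sender_ip: str, sender_groups: FrozenSet[str]) -> bool:
        if self.cidr is not None:
            # Address-based source: the sender's IP must fall inside the range.
            return ipaddress.ip_address(sender_ip) in ipaddress.ip_network(self.cidr)
        # Group-based source: membership matters, not the sender's address.
        return self.group in sender_groups


internet = Source(cidr="0.0.0.0/0")
corpnet = Source(cidr="203.0.113.0/24")  # hypothetical corporate range
from_web = Source(group="WebSG")

print(internet.matches("198.51.100.7", frozenset()))       # True
print(corpnet.matches("198.51.100.7", frozenset()))        # False
print(from_web.matches("10.1.2.3", frozenset({"WebSG"})))  # True
print(from_web.matches("10.1.2.3", frozenset({"AppSG"})))  # False
```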

Defining the security groups for a three-tier architecture

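If you’re an API aficionado, you can use these eight calls to populate the three security groups this architecture requires (the groups must already exist; with the same command-line tools you create them using ec2-add-group). In the commands, a|b means “use a or b”, and CorpNet, VendorNet, AppPort, and DBPort (or their port ranges) stand for your own address ranges and ports: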

ec2-authorize WebSG -P tcp -p 80 -s 0.0.0.0/0

ec2-authorize WebSG -P tcp -p 443 -s 0.0.0.0/0

ec2-authorize WebSG -P tcp -p 22|3389 -s CorpNet

ec2-authorize AppSG -P tcp|udp -p AppPort|AppPort-Range -o WebSG

ec2-authorize AppSG -P tcp -p 22|3389 -s CorpNet

ec2-authorize DBSG -P tcp|udp -p DBPort|DBPort-Range -o AppSG

ec2-authorize DBSG -P tcp -p 22|3389 -s CorpNet

ec2-authorize DBSG -P tcp -p 22|3389 -s VendorNet

Note here the interesting distinction in the parameters used with the commands. If the rule permits a source IP address or range, the parameter is “-s” which indicates source. If the rule permits some other security group, the parameter is “-o” which indicates origin. Neat, huh?

The order of the statements above shows how the rules relate to each other:

- The first three statements define WebSG, the security group for the web tier. The first two rules in the group permit inbound traffic to destination ports 80/tcp and 443/tcp from any node on the Internet. The third rule in the group permits inbound traffic to management ports (22/tcp for SSH, 3389/tcp for RDP) from the IP address range of your internal corporate network — this is optional, but probably a good idea if you ever need to administer your instances :)

- The next two statements define AppSG, the security group for the application tier. The first rule in the group permits inbound traffic from WebSG (the origin) to the application’s listening port(s). The second rule permits inbound traffic to management ports from your corpnet.

- The final three statements define DBSG, the security group for the database tier. The first rule in the group permits inbound traffic from AppSG (the origin) to the database’s listening port(s). The second and third rules permit inbound traffic to management ports from your corpnet and from your database vendor’s network (required for certain third-party database products).
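
To check that the eight rules produce the intended tiering, here is a small Python model of them (a sketch, not an AWS API). It fills in the placeholders with assumed values: SSH (22/tcp) for management, hypothetical ports 8080 for the application and 3306 for the database, and documentation address ranges for CorpNet and VendorNet. It then asks which senders may reach which tier.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# (protocol, destination port, source CIDR or None, origin group or None)
RuleSpec = Tuple[str, int, Optional[str], Optional[str]]

CORPNET = "203.0.113.0/24"      # hypothetical corporate network
VENDORNET = "198.51.100.0/24"   # hypothetical database vendor network
APP_PORT, DB_PORT = 8080, 3306  # hypothetical listening ports

GROUPS: Dict[str, List[RuleSpec]] = {
    "WebSG": [("tcp", 80, "0.0.0.0/0", None),
              ("tcp", 443, "0.0.0.0/0", None),
              ("tcp", 22, CORPNET, None)],
    "AppSG": [("tcp", APP_PORT, None, "WebSG"),
              ("tcp", 22, CORPNET, None)],
    "DBSG": [("tcp", DB_PORT, None, "AppSG"),
             ("tcp", 22, CORPNET, None),
             ("tcp", 22, VENDORNET, None)],
}


def allowed(target_group: str, port: int, sender_ip: str,
            sender_group: Optional[str] = None) -> bool:
    """True if some rule in target_group admits TCP traffic to `port` from the sender."""
    for proto, rule_port, cidr, origin in GROUPS[target_group]:
        if proto != "tcp" or rule_port != port:
            continue
        if cidr and ipaddress.ip_address(sender_ip) in ipaddress.ip_network(cidr):
            return True
        if origin and origin == sender_group:
            return True
    return False


internet_host = "192.0.2.10"
print(allowed("WebSG", 443, internet_host))                           # True
print(allowed("AppSG", APP_PORT, internet_host))                      # False
print(allowed("AppSG", APP_PORT, "10.0.0.5", sender_group="WebSG"))   # True
print(allowed("DBSG", DB_PORT, "10.0.0.5", sender_group="WebSG"))     # False
print(allowed("DBSG", DB_PORT, "10.0.0.6", sender_group="AppSG"))     # True
```

Each tier accepts application traffic only from the tier directly in front of it, which is the segregation a DMZ-style layout would otherwise provide.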

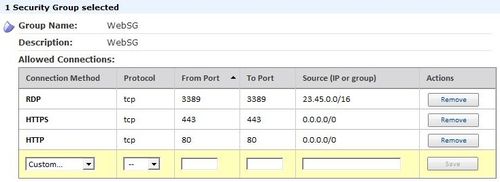

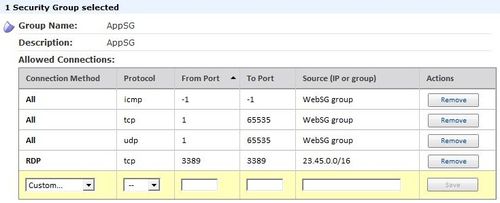

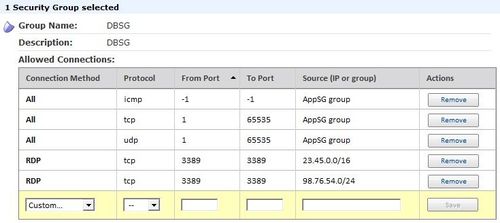

Of course, not everyone’s a programmer (your humble author included), so here are some screen shots showing how to define these security groups using the AWS Management Console. Please be aware that using the Console produces different results, which I’ll describe in a moment.

WebSG permitting HTTP from the Internet, HTTPS from the Internet, and RDP from our sample corpnet address range:

AppSG permitting connections from instances in WebSG and RDP from our sample corpnet address range:

DBSG permitting connections from instances in AppSG and RDP from our sample corpnet and vendor address ranges:

Important. The AWS APIs and the Management Console behave differently when defining security groups as origins:

- Management Console: When you define a rule using the name of a security group in the “Source (IP or group)” column, you can’t specify protocols or ports. The Console automatically expands your single rule into the three you see: one for all ICMP, one for all TCP, and one for all UDP. If you remove one of them, the Console removes the other two. If you wish to further limit inbound traffic on those instances, use a software firewall such as iptables or the Windows Firewall.

- SOAP and Query APIs: With the APIs, rules containing security group origins can include protocol and port specifications. The result is only the rules you define, not the three broad automatic rules the Console creates. This gives you greater control and reduces potential exposure, so I recommend using the APIs rather than the Console. For now, although the Console correctly displays rules you define with the APIs, please don’t modify API-created rules in the Console, because its behavior will override your changes. We’re working to make the Console support the same functionality as the APIs.

More information

The latest API documentation provides details and examples of how to configure rules in security groups. To learn more, please see:

- Network security concepts (from the Amazon EC2 User Guide)

- Using security groups (from the Amazon EC2 User Guide)

- SOAP and Query API examples (from the Amazon EC2 Developer Guide)

- SOAP API syntax (from the Amazon EC2 API Reference)

- Query API syntax (from the Amazon EC2 API Reference)

I hope this short tutorial has been useful for you and provides information you can use as you plan migrations to or new implementations in AWS. Over time, I’d like to write more short security and privacy related guides which I’ll post here and in our Security Center. If you have comments or suggestions about content you’d like to see, please let us know. We’re here to make sure you succeed!

> Steve <