Automating Governance on AWS

IT governance identifies risk and determines the identity controls to mitigate that risk. Traditionally, IT governance has required long, detailed documents and hours of work for IT managers, security or audit professionals, and admins. Automating governance on AWS offers a better way.

Let’s consider a world where only two things matter: customer trust and cost control. First, we need to identify the risks to mitigate:

- Customer Trust: Customer trust is essential. Allowing customer data to be obtained by unauthorized third parties is damaging to customers and the organization.

- Cost: Doing more with your money is critical to success for any organization.

Then, we identify what policies we need to mitigate those risks:

- Encryption at rest should be used to avoid interception of customer data by unauthorized parties.

- A consistent instance family should be used to allow for better Reserved Instance (RI) optimization.

Finally, we can set up automated controls to make sure those policies are followed. With AWS, you have several options:

Encrypting Amazon Elastic Block Store (EBS) volumes is a fairly straightforward configuration. However, validating that all volumes have been encrypted may not be as easy. Despite how easily volumes can be encrypted, it is still possible to overlook or intentionally not encrypt a volume. AWS Config Rules can be used to automatically and in real time check that all volumes are encrypted. Here is how to set that up:

- Open AWS Console

- Go to Services -> Management Tools -> Config

- Click Add Rule

- Click encrypted-volumes

- Click Save

Once the rule has finished being evaluated, you can see a list of EBS volumes and whether or not they are compliant with this new policy. This example uses a built-in AWS Config Rule, but you can also use custom rules where built-in rules do not exist.
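The console steps above can also be scripted. The following is a minimal sketch, assuming the boto3 SDK is installed, credentials are configured, and an AWS Config recorder is already running in the account. The function names and the default rule name are illustrative and not part of the original walkthrough. `ENCRYPTED_VOLUMES` is the source identifier of the AWS managed rule shown in the console as encrypted-volumes.

```python
def encrypted_volumes_rule(rule_name="encrypted-volumes"):
    """Build the ConfigRule payload for the AWS managed encrypted-volumes rule."""
    return {
        "ConfigRuleName": rule_name,  # illustrative name; any unique name works
        "Description": "Checks whether attached EBS volumes are encrypted.",
        # Only evaluate EBS volumes.
        "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Volume"]},
        # Owner "AWS" selects a managed (built-in) rule rather than a custom one.
        "Source": {"Owner": "AWS", "SourceIdentifier": "ENCRYPTED_VOLUMES"},
    }


def deploy_rule(rule):
    """Create or update the rule in the current account and region."""
    import boto3  # imported lazily so the payload builder works without the SDK

    boto3.client("config").put_config_rule(ConfigRule=rule)
```

Calling `deploy_rule(encrypted_volumes_rule())` would register the rule; compliance results then appear in the AWS Config console as described above.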

Once you have several Amazon Elastic Compute Cloud (Amazon EC2) instances running, you will eventually want to consider purchasing Reserved Instances (RIs) to take advantage of the cost savings they provide. Focus on one or a few instance families and purchase RIs of different sizes within those families, because RIs can be split and combined into different sizes if the workload changes in the future. Reducing the number of instance families used increases the likelihood that a future need can be accommodated by resizing existing RIs. To enforce this policy, an IAM policy can be used to allow creation of EC2 instances only in the allowed families.
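One possible shape for such a policy is sketched below. It is not the original post's policy. It assumes the allowed families are m4 and c4 (chosen purely for illustration) and uses a Deny statement on `ec2:RunInstances` with the `ec2:InstanceType` condition key. A Deny statement grants nothing by itself, so users still need a separate Allow for launching instances.

```python
import json


def instance_family_policy(allowed_families):
    """Return an IAM policy document that denies launches outside the given families."""
    # "m4" becomes the wildcard pattern "m4.*", matching m4.large, m4.xlarge, and so on.
    patterns = [f"{family}.*" for family in allowed_families]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyLaunchOutsideAllowedFamilies",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                # The Deny applies when the requested type matches none of the patterns.
                "Condition": {"StringNotLike": {"ec2:InstanceType": patterns}},
            }
        ],
    }


# Assumed example families; substitute the families your organization standardizes on.
print(json.dumps(instance_family_policy(["m4", "c4"]), indent=2))
```

The printed JSON can be attached to a group or role as a managed or inline policy.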

It’s just that simple.

With AWS automated controls, system administrators don’t need to memorize every required control, security admins can easily validate that policies are being followed, and IT managers can see it all on a dashboard in near real time – thereby decreasing organizational risk and freeing up resources to focus on other critical tasks.

To learn more about Automating Governance on AWS, read our white paper, Automating Governance: A Managed Service Approach to Security and Compliance on AWS.

A guest blog by Joshua Dayberry, Senior Solutions Architect, Amazon Web Services Worldwide Public Sector

AWS Convenes Data-Driven Justice Technology and Research Consortium

AWS has joined law enforcement, health, and public officials from more than 60 communities across the United States to help keep low-risk, non-dangerous offenders out of the criminal justice system. As part of the White House’s Data Driven Justice Initiative (DDJI), we are pleased to support these innovative communities who are working to use data to make more informed decisions in an effort to safely reduce local jail populations and connect people to effective care.

As part of the DDJI, AWS will serve as the convener of a Technology and Research Consortium, bringing together data scientists, researchers, and private sector innovators to identify technology solutions. The first meeting was held last week at the AWS office in Washington, DC where we were joined by more than 40 thought leaders to define the challenges that communities face and the opportunities that the DDJI will afford criminal justice and health officials through collaboration. Moving forward, these thought leaders will share technology best practices, local initiatives, and data sets, and define research criteria that will help achieve the goals of the DDJI.

One of the jurisdictions that has used this approach to positive effect is Miami-Dade, Florida, which has been leveraging health and criminal justice data to produce meaningful insights. Through research, they found that 97 people with serious mental illness accounted for $13.7 million in services over four years, spending more than 39,000 days in either jail, emergency rooms, state hospitals, or psychiatric facilities in their county. As a result, the county provided training to their police officers and 911 dispatchers around how to de-escalate mental health-related calls which led to significant reductions in arrests and diverted more people to mental health services instead of the criminal justice system. In the past five years, Miami-Dade police have responded to nearly 50,000 calls for service for people in mental health crises, but have made only 109 arrests, diverting more than 10,000 people to services or safely stabilizing situations without arrest. The jail population fell from over 7,000 to just over 4,700, and the county was able to close an entire jail facility, saving nearly $12 million a year.

Since the White House DDJI announcement, several more counties have committed to joining the initiative. The technology and research consortium will work to help onboard these new counties and to share best practices from jurisdictions like Miami-Dade that are already implementing data-driven initiatives. The goal is to cascade knowledge and build a new standard for data sharing and analytics in all participating jurisdictions.

Moving forward, AWS will provide the cloud infrastructure to facilitate individual-level data exchange among practitioners in participating DDJI jurisdictions and provide a data repository for de-identified health and criminal justice data. This will enable practitioners and researchers to access and analyze these data sets to better understand populations of people who are frequently incarcerated, identify opportunities for early intervention, and evaluate which programs are most effective.

“The White House Data Driven Justice Initiative is a prime example of the public and private sector coming together to innovate for the greater good. By using cloud technology to collect and analyze data, this consortium hopes to derive actionable insights that will help reduce unnecessary incarcerations,” said Teresa Carlson, VP of AWS Worldwide Public Sector. “AWS continues to support the justice and public safety community, and views this collaboration as one more step in our commitment to provide secure, agile, and cost effective solutions to our customers.”

A Minimalistic Way to Tackle Big Data Produced by Earth Observation Satellites

The explosion of Earth Observation (EO) data has driven the need to find innovative ways for using that data. We sat down with Grega Milcinski from Sinergise to discuss Sentinel-2. During its six-month pre-operational phase, Sentinel-2 has already produced more than 200 TB of data, more than 250 trillion pixels, yet most of this data, probably up to 90 percent, is never used at all.

What is a Sentinel Hub?

The Sentinel-2 mission acquires images of the whole landmass of Earth every 5-10 days (soon to be twice as often) with a 10-meter resolution and multi-spectral data, all available for free. This opens the door to completely new remote sensing processes. We decided to tackle the technical challenge of processing EO data our way – to create web services that make it possible for everyone to get data in their favorite GIS applications using standard WMS and WCS services. We call this the Sentinel Hub.

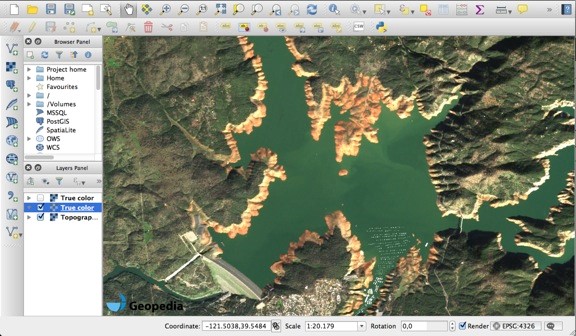

Figure 1 – low water levels of Lake Oroville, US, 26th of January 2016, served via WMS in QGIS

How did AWS help?

In addition to research grants, which made it easier to start this process, there were three important benefits from working with AWS: public data sets for managing data, auto scaling features for our services, and advanced AWS services, especially AWS Lambda. AWS’s public data sets, such as Landsat and Sentinel, are wonderful. Having all data, on a global scale, available in a structured way and easily accessible in Amazon Simple Storage Service (Amazon S3) removes a major hurdle (and risk) when developing a new application.

Why did you decide to set up a Sentinel public data set?

We were frustrated with how Sentinel data was distributed for mass use. We then came across the Landsat archive on AWS. After contacting Amazon about similar options for Sentinel, we were provided a research grant to establish the Sentinel public data set. It was a worthwhile investment because we can now access the data in an efficient way. And as others are able to do the same, it will hopefully benefit the EO ecosystem overall.

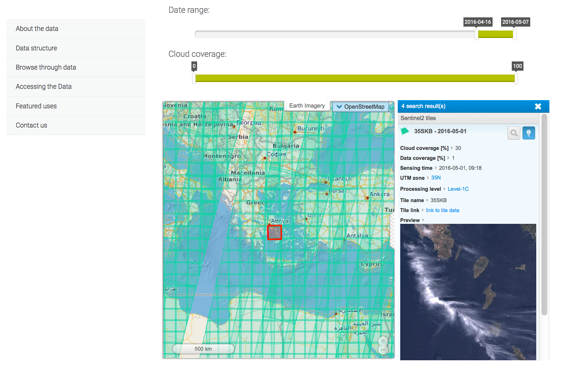

Figure 2 – Data browser on Sentinel-2 at AWS

How did you approach setting up Sentinel Hub service?

It is not feasible to process the entire archive of imagery on a daily basis, so we tried a different approach. We wanted to be able to process the data in real-time, once a request comes. When a user asks for imagery at some location, we query the meta-data to see what is available, set criteria, download the required data from S3, decompress it, re-project, create a final product, and deliver it to the user within seconds.

You mentioned Lambda. How do you make use of it?

It is impossible to predict what somebody will ask for and be ready for it in advance. But once a request happens, we want the system to perform all steps in the shortest time possible. Lambda can help as it makes it possible to empower a large number of processes simultaneously. We also use it to ingest the data and process hundreds of products. In addition to Lambda, we have leveraged AWS’s auto scaling features to seamlessly scale our rendering services. This greatly reduces running costs in off-peak periods and also provides a good user experience when the loads are increased. Having a powerful, yet cost-efficient, infrastructure in place allows us to focus on developing new features.

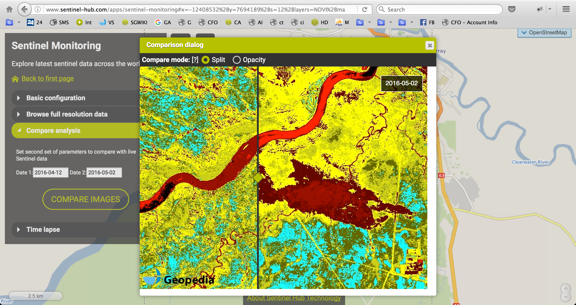

Figure 3 – image improved with atmospheric correction and cloud replacement

Figure 4 – user-defined image computation

Can you estimate cost benefits of this process?

We make use of the freely available data within Amazon public data sets, which directly saves us money. And by orchestrating software and AWS infrastructure, we are able to process in real-time so we do not have any storage costs. We estimate that we are able to save hundreds of thousands of dollars annually.

How can the Sentinel Hub service be used?

Anyone can now easily get Sentinel-2 data in their GIS application. You simply need the URL of our WMS service and to configure what interests you; then the data is there without any hassle, extra downloading, time-consuming processing, reprocessing, or compositing. However, the real power of EO comes when it is integrated in web applications, providing a wider context. As there are many open-source GIS tools available, anybody can build these added value services. To demonstrate the case, we have built a simple application, called Sentinel Monitoring, where one can observe the changes in the land all across the globe.
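To make the WMS integration concrete, here is a minimal sketch of how a client might assemble a standard WMS 1.3.0 GetMap request. The base URL, layer name, and bounding box are placeholders invented for illustration; the real endpoint and layer names come from the Sentinel Hub service configuration. Only the query parameter names follow the OGC WMS standard.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layer, bbox, width=512, height=512, time=None):
    """Assemble a WMS 1.3.0 GetMap URL for a bounding box given in EPSG:3857."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",
        "CRS": "EPSG:3857",  # Web Mercator: BBOX order is minx,miny,maxx,maxy
        "BBOX": ",".join(str(value) for value in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    if time is not None:
        params["TIME"] = time  # optional WMS time dimension, e.g. an acquisition date
    return f"{base_url}?{urlencode(params)}"


# Placeholder endpoint and layer name; the bounding box values are illustrative only.
print(wms_getmap_url(
    "https://example.invalid/wms",
    "TRUE_COLOR",
    (-13540000, 4790000, -13510000, 4820000),
    time="2016-01-26",
))
```

GIS applications such as QGIS build equivalent requests internally once the service URL is registered, so this sketch mainly helps when integrating the imagery into a custom web application.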

Figure 5 – NDVI image of the Alberta wildfires, showing the burned area on 2nd of May. By changing the date, it is possible to see how the fires spread throughout the area.
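For readers unfamiliar with NDVI (Normalized Difference Vegetation Index), it is computed per pixel as (NIR - Red) / (NIR + Red). For Sentinel-2 the near-infrared and red inputs are typically bands B08 and B04. The sketch below shows the calculation for a single pixel; the reflectance values are made up for illustration and are not taken from the Alberta imagery.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel's reflectance values."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero for empty or no-data pixels
    return (nir - red) / total


# Illustrative reflectances: healthy vegetation reflects strongly in near-infrared,
# while burned ground does not, so its index is much lower.
vegetation = ndvi(nir=0.50, red=0.10)
burned = ndvi(nir=0.15, red=0.12)
print(round(vegetation, 3), round(burned, 3))
```

Values close to 1 indicate dense green vegetation, which is why burned areas stand out in an NDVI rendering.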

What uses of Sentinel-2 on AWS do you expect others to build?

There are lots of possible use cases for EO data from Sentinel-2 imagery. The most obvious example is a vegetation-monitoring map for farmers. By identifying new construction, you can get useful input for property taxation purposes, especially in developing countries, where this information is scarce. The options are numerous and the investment needed to build these applications has never been smaller – the data is free and easily accessible on AWS. One can simply integrate one of the services in the system and the results are there.

Get access and learn more about Sentinel-2 services here. And visit www.aws.amazon.com/opendata to learn more about public data sets provided by AWS.

Smart City Data Analysis: Pushing Data into the Cloud

Cloud computing can help cities use big data and analytics to analyze and gain intelligence from data. In the previous smart cities posts, we mentioned how cities can acquire data from different sources, such as IoT devices, sensors, mobile applications, and interactions with citizens (read the past blogs here and here). This data, after it has fulfilled its primary purpose, might be given a new life as a source for analytics to influence further projects.

Think about the potential years (if not centuries) of data stored in city archives. From demographics and schools to transportation and GIS data, this data can be combined with real-time data and machine learning to ascertain information about the cities in order to make a meaningful impact.

How can cities easily push data into the cloud to be analyzed? While analyzing massive amounts of quickly changing data is not always an easy job, a lot of the complexity can be resolved by using AWS managed services:

- Amazon Kinesis makes it easy to load and analyze near real-time streaming data for use cases such as fraud detection or inventory alerts in a critical care unit or a blood bank. This opens up the possibility of detecting device failure in a traffic system or a medical unit and triggering a corrective response.

- With Amazon Simple Storage Service (Amazon S3), you can host massive data sets in a cost-effective way. S3 can be the target storage for a process that involves scanning incoming documents that can be processed to feed the analytic engine or be stored in a common relational database.

- S3 can work beyond a simple storage environment (with careful, self-documenting prefixes) to become an integration platform where you can look at the data and apply policies and workflows. Additional AWS services that make this happen include Amazon Simple Notification Service (SNS), Amazon Simple Queue Service (SQS), and Amazon Simple Workflow Service (SWF).

- Another option is to take advantage of Amazon Elastic Block Store (EBS), which provides persistent block-level storage volumes with different possibilities in terms of IOPS, or Amazon Elastic File System (Amazon EFS), which is useful as NFSv4-compatible shared storage that grows and shrinks automatically as you add and remove files.

- With Amazon Elastic MapReduce (Amazon EMR), you can launch Hadoop clusters in minutes, resize them, and terminate them when their analysis is completed. Or, you can decide to keep the cluster available to continuously process data when it arrives.

- Additionally, for more secure workloads in smart cities, such as census data, polling data, and medical sciences, the EMR Filesystem (EMRFS) can read objects from and write objects to Amazon S3 using S3 server-side encryption with AWS Key Management Service keys (SSE-KMS). You can launch a 10-node Hadoop cluster for as little as $0.11 per hour. Because EMR has native support for EC2 Spot Instances, you can also save 50-80% on the cost of the underlying EC2 instances. This reduces the cost barrier of big data analysis for cities of all sizes.
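As a rough illustration of pushing city data into the cloud with the first service in the list, the sketch below sends a sensor reading to an Amazon Kinesis stream. It assumes boto3 is installed, credentials are configured, and a stream already exists. The stream name, sensor ID, and payload fields are hypothetical.

```python
import json


def build_record(sensor_id, reading, stream_name="city-sensor-stream"):
    """Build the arguments for a Kinesis put_record call from one sensor reading."""
    payload = {"sensor_id": sensor_id, "reading": reading}
    return {
        "StreamName": stream_name,  # hypothetical stream name
        "Data": json.dumps(payload).encode("utf-8"),
        # Readings from the same sensor share a partition key, so they land on
        # the same shard and keep their relative order.
        "PartitionKey": sensor_id,
    }


def send_record(record):
    """Send one record to Kinesis; requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so build_record works without the SDK

    return boto3.client("kinesis").put_record(**record)
```

For example, `send_record(build_record("signal-17", {"status": "fault"}))` would publish a traffic signal fault event that downstream consumers could act on.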

As an example scenario for analyzing a massive number of documents, we can imagine a document data extraction flow as depicted below:

The flexibility of tools and services is important for data analytics in order to gain insights about information, hidden patterns, and eventually refine your algorithms until you find the answers you were looking for. This gives cities of all sizes the ability to perform their big data analysis for real-time streaming data, historical analysis, or a mixed approach without the burden of an expensive investment.

This technology can be widely used in many smart city applications, such as:

- Early alert systems (bad weather, tsunami, earthquakes, infections, and pollen count).

- Adaptive traffic light systems that consider pedestrian counts, road work, school holidays, and nearby key locations, such as tourist hot spots.

- Monitoring systems for government agencies to complement their counter terrorism efforts.

- Internet of Things (IoT) driven sensors with adaptive waste management for a more eco-friendly smart city.

- Intelligent simulation taking advantage of analytics to model smarter cars and housing.

- Smart health care.

We hope the availability of this technology will trigger new innovative solutions in city management that will lead to improved citizen services.

Post authored by Giulio Soro, Senior Solutions Architect, AWS, Steven Bryen, Manager, Solutions Architect, AWS, and Pratim Das, Specialist SA – Analytics, EME, AWS

Launch of the AWS Asia Pacific Region: What Does it Mean for our Public Sector Customers?

With the launch of the AWS Asia Pacific (Mumbai) Region, Indian-based developers and organizations, as well as multinational organizations with end users in India, can achieve their mission and run their applications in the AWS Cloud by securely storing and processing their data with single-digit millisecond latency across most of India.

The new Mumbai Region consists of two Availability Zones (AZs) at launch, each of which includes one or more geographically distinct data centers, each with redundant power, networking, and connectivity. Each AZ is designed to be resilient to issues in another AZ, enabling customers to operate production applications and databases that are more highly available, fault tolerant, and scalable than would be possible from a single data center.

The Mumbai Region supports Amazon Elastic Compute Cloud (EC2) (C4, M4, T2, D2, I2, and R3 instances are available) and related services including Amazon Elastic Block Store (EBS), Amazon Virtual Private Cloud, Auto Scaling, and Elastic Load Balancing.

In addition, AWS India supports three edge locations – Mumbai, Chennai, and New Delhi. The locations support Amazon Route 53, Amazon CloudFront, and S3 Transfer Acceleration. AWS Direct Connect support is available via our Direct Connect Partners. For more details, please see the official release here and Jeff Barr’s post here.

To celebrate the launch of the new region, we hosted a Leaders Forum that brought together over 150 government and education leaders to discuss how cloud computing and the expansion of our global infrastructure has the potential to change the way the governments and educators of India design, build, and run their IT workloads.

The speakers shared their insights gained from our 10-year cloud journey, trends on adoption of cloud by our customers globally, and our experience working with government and education leaders around the world.

Please see pictures from the event below.


U.S. Department of State Awards Enterprise-Wide IoT Platform and Applications Contract to C3 IoT, Running on AWS GovCloud

C3 IoT™ announced that the U.S. Department of State awarded the company a multi-year contract worth up to $25 million to provide the C3 IoT enterprise application development platform. In collaboration with AWS, C3 IoT, an Advanced Tier APN Partner, will deploy the C3 IoT Platform™ and software applications on AWS GovCloud (US), an isolated AWS region designed to host sensitive data and regulated workloads in the cloud. This ensures compliance with U.S. government requirements, including International Traffic in Arms Regulations (ITAR) and Federal Risk and Authorization Management Program (FedRAMP).

This is the federal government’s first enterprise-wide contract to deploy predictive analytics and energy management technology globally, which will help achieve and maintain statutory, executive order, and departmental energy and sustainability goals.

Cost savings, efficiency, and improved innovation

Enterprise-wide contracts like this one will allow the Department of State to enhance operational efficiencies by taking advantage of real-time access to all telemetry, enterprise, and extraprise data across 22,000+ Department facilities worldwide and leveraging predictive analytics on a global scale. With C3 IoT’s machine learning-based platform and software application suite, the State Department can predict impending failures of critical facility equipment, automatically monitor, analyze, and manage energy usage across all assets, and assess the health of the sensor and device infrastructure. Whether for enhanced situational awareness or faster response time, IoT gives access to real-time information previously not available.

World-leading organizations are using C3 IoT and AWS Internet of Things (IoT) technologies to enable smarter, data-driven offerings. AWS works with our public sector customers, applying IoT capabilities and solutions to challenges and opportunities to improve government services including transportation, public safety, and city services. From parking to officer safety to water management, agencies can achieve cost savings, efficiency, and improved innovation.

Secure access to devices

In addition to increased efficiency, with AWS GovCloud (US), the device connections and data are secure. We provide authentication and end-to-end encryption throughout all points of connection, meaning the data is never exchanged between devices and AWS IoT without proven identity using mutual authentication and encryption. And, the AWS GovCloud (US) region is only managed by and accessible to U.S. persons. Secure access to devices and applications is enabled by applying policies with granular permissions.

“With sensors being deployed across 22,000 buildings worldwide, the State Department is ready to employ big data analytics to reveal insights that will drive operational efficiencies across the agency and deliver substantial taxpayer savings,” said Ed Abbo, President and CTO, C3 IoT. “By transforming its operations and standardizing energy management best practices, the State Department is becoming a strong environmental steward and global leader in the emerging Internet of Things.”

AWS Public Sector Summit – Washington DC Recap

The AWS Public Sector Summit in Washington DC brought together over 4,500 attendees to learn from top cloud technologists in the government, education, and nonprofit sectors.

Day 1

The Summit began with a keynote address from Teresa Carlson, VP of Worldwide Public Sector at AWS. During her keynote, she announced that AWS now has over 2,300 government customers, 5,500 educational institutions, and 22,000 nonprofits and NGOs. And AWS GovCloud (US) usage has grown 221% year-over-year since launch in Q4 2011. To continue to help our customers meet their missions, AWS announced the AWS Government Competency Program, the AWS GovCloud (US) Skill Program, the AWS Educate Starter Account, and the AWS Marketplace for the U.S. Intelligence Community during the Summit. See more details about each announcement below.

- AWS Government Competency Program: AWS Government Competency Partners have deep experience working with government customers to deliver mission-critical workloads and applications on AWS. Customers working with AWS Government Competency Partners will have access to innovative, cloud-based solutions that comply with the highest AWS standards.

- AWS GovCloud (US) Skill Program: The AWS GovCloud (US) Skill Program provides customers with the ability to readily identify APN Partners with experience supporting workloads in the AWS GovCloud (US) Region. The program identifies APN Consulting Partners with experience in architecting, operating and managing workloads in GovCloud, and APN Technology Partners with software products that are available in AWS GovCloud (US).

- AWS Educate Starter Account: AWS Educate announced the AWS Educate Starter Account that gives students more options when joining the program and does not require a credit card or payment option. The AWS Educate Starter Account provides the same training, curriculum, and technology benefits of the standard AWS Account.

- AWS Marketplace for the U.S. Intelligence Community: We have launched the AWS Marketplace for the U.S. Intelligence Community (IC) to meet the needs of our IC customers. The AWS Marketplace for the U.S. IC makes it easy to discover, purchase, and deploy software packages and applications from vendors with a strong presence in the IC in a cloud that is not connected to the public Internet.

Watch the full video of Teresa’s keynote here.

Teresa was then joined onstage by three other female IT leaders: Deborah W. Brooks, Co-Founder & Executive Vice Chairman, The Michael J. Fox Foundation for Parkinson’s Research; LaVerne H. Council, Chief Information Officer, Department of Veterans Affairs; and Stephanie von Friedeburg, Chief Information Officer and Vice President, Information and Technology Solutions, The World Bank Group.

Each of the speakers addressed how their organization is paving the way for disruptive innovation. Whether it was using the cloud to eradicate extreme poverty, serving and honoring veterans, or fighting Parkinson’s Disease, each demonstrated patterns of innovation that help make the world a better place through technology.

The day 1 keynote ended with the #Smartisbeautiful call to action encouraging everyone in the audience to mentor and help bring more young girls into IT.

Day 2

The second day’s keynote included a fireside chat with Andy Jassy, CEO of Amazon Web Services. Watch the Q&A here.

Andy addressed the crowd and shared Amazon’s passion for public sector, the expansion of AWS regions around the globe, and the customer-centric approach AWS has taken to continue to innovate and address the needs of our customers.

We also announced the winners of the third City on a Cloud Innovation Challenge, a global program to recognize local and regional governments and developers that are innovating for the benefit of citizens using the AWS Cloud. See a list of the winners here.

Videos, slides, photos, and more

AWS Summit materials are now available online for your reference and to share with your colleagues. Please view and download here:

- Watch the Day 1 Keynote featuring Teresa Carlson, VP of Worldwide Public Sector, AWS, The Department of Veterans Affairs, The World Bank Group, and The Michael J. Fox Foundation

- Watch the Day 2 Fireside Chat with Andy Jassy, CEO of AWS

- View and download slides from the breakout sessions

- Read the event press coverage

- Access the videos from the two-day event – with more videos coming soon

We hope to see you next year at the AWS Public Sector Summit in Washington, DC on June 13-14, 2017.

Intelligent Transportation and the Cloud

AWS recently participated in the ITS America annual conference in San Jose, California. At the conference, thought leaders and decision makers from both the private and public sector gather to discuss challenges, innovation, safety, policy, and best practices in transportation across the country.

ITS America is an advocate for today’s leading industries—helping to marry tech and transportation. ITS America is working to advance safety, efficiency and sustainability, as well as putting transportation at the center of the Internet of Things (IoT).

The conference centered around three themes: Wheels & Things, Infrastructure of Things, and Show Me the Money!

- Wheels & Things – focused on all of the “things” using, altering, or creating new intelligent transportation systems and technologies.

- Infrastructure of Things – focused on all of the “stuff” that “wheels and things” interact with. From physical hardware like lights, poles, signs, and intersections to the network backbone, this theme addressed what traffic management will look like as it leverages cloud and smart transportation technologies.

- Show Me the Money – focused on what the future of intelligent transportation’s back end will look like, as well as new business models. This included the evolving trend away from CapEx to OpEx and the collaboration taking place between the private and public sectors today.

Key Takeaways

Transportation is more than concrete and steel – Former Secretary of Transportation Rodney Slater participated in a Q&A and explained that transportation is more than the physical infrastructure of concrete and steel. Instead, it is what connects us to each other and to the world. Having smart, safe and reliable transportation available to the masses is what will drive opportunity and equal access throughout the country.

Sensors, data and the internet of things – Today, every government asset is a sensor, and data is what makes a city “smart.” Cloud technology enables cities to collect, store, and analyze data of all kinds. The insights that can be derived through this analysis are limitless, helping cities identify gaps, issues, and trends that will help them deploy their resources more effectively and focus on their mission.

Frank DiGiammarino, Director, AWS State and Local Government, Amazon Web Services, delivered the closing keynote and spoke about how cloud technology is enabling the future of smart transportation. The keynote highlighted smart transportation solutions born in the cloud, such as smart parking, connected intersections, smart routing, fleet monitoring, and connected vehicles. These solutions are important across both the private and public sector because infrastructure is critical to economic growth. Just as traditional infrastructure was the catalyst for growth in the 20th century, cloud and digital technology will be the catalyst for the 21st century. The keynote closed with the question “What mission are you going to take on?” There are so many problems to solve and so many cool things that we can do. What will your contribution be to the future of our country’s transportation? Together we will make a difference.

We are excited to continue to support transportation initiatives and power the innovations and technologies of tomorrow. For more information, check out AWS and Transportation and ITSAmerica2016.org.

AWS GovCloud (US) Earns DoD Cloud Computing SRG Impact Level 4 Authorization

We are pleased to announce that the AWS GovCloud (US) Region has been granted a Provisional Authorization (PA) without conditions by the Defense Information Systems Agency (DISA) for Impact Level 4 workloads as defined in the Department of Defense (DoD) Cloud Computing (CC) Security Requirements Guide (SRG). AWS was the first Cloud Service Provider (CSP) to obtain an Impact Level 4 PA in August 2014, which paved the way for DoD pilot workloads and applications in the cloud. This allows DoD mission owners to continue to leverage AWS for their mission critical production applications.

By achieving this, we are continuing to allow the DoD to utilize AWS to run their production Impact Level 4 workloads in the AWS environment, including, but not limited to, export controlled data, privacy information, protected health information, and other information requiring explicit CUI designation, such as electronic medical records or munitions management systems. AWS enables a growing number of military organizations and their partners to process, store, and transmit DoD data by leveraging the secure AWS environment.

This third-party accreditation, sponsored by DISA, supports the DoD’s cloud initiative to migrate DoD web sites and applications from physical servers and networks within DoD networks and data centers into lower cost IT services. We are working with DoD agencies to help them realize the benefits and innovations of the cloud, while remaining secure with their sensitive data. By achieving IL 4, AWS enables customers to more rapidly develop and deliver secure solutions for their end users utilizing the AWS GovCloud (US)’s secure, reliable, and flexible cloud computing platform.

Additionally, AWS GovCloud (US) recently became one of the first cloud providers to receive a P-ATO from the Joint Authorization Board (JAB) under the FedRAMP High baseline. Learn more about how AWS GovCloud (US) helps agencies meet mandates, reduce costs, drive efficiencies, and increase innovation across the DoD.

Call for Computer Vision Research Proposals with New Amazon Bin Image Data Set

Amazon Fulfillment Centers are bustling hubs of innovation that allow Amazon to deliver millions of products to over 100 countries worldwide with the help of robotic and computer vision technologies. Today, the Amazon Fulfillment Technologies team is releasing the Amazon Bin Image Data Set, which is made up of over 1,000 images of bins inside an Amazon Fulfillment Center. Each image is accompanied by metadata describing the contents of the bin in the image. The Amazon Bin Image Data Set can now be accessed by anyone as an AWS Public Data Set. This is an interim, limited release of the data. Several hundred thousand images will be released by the fall of 2016.

Call for Research Proposals

The Amazon Academic Research Awards (AARA) program is soliciting computer vision research proposals for the first time. The AARA program funds academic research and related contributions to open source projects by top academic researchers throughout the world.

Proposals can focus on any relevant area of computer vision research. There is particular interest in recognition research, including, but not limited to, large scale, fine-grained instance matching, apparel similarity, counting items, object detection and recognition, scene understanding, saliency and segmentation, real-time detection, image captioning, question answering, weakly supervised learning, and deep learning. Research that can be applied to multiple problems and data sets is preferred and we specifically encourage submissions that could make use of the Amazon Bin Image Data Set from Amazon Fulfillment Technologies. We expect that future calls will focus on other topics.

Awards Structure and Process

Awards are structured as one-year unrestricted gifts to academic institutions, and can include up to 20,000 USD in AWS Promotional Credits. Though the funding is not extendable, applicants can submit new proposals for subsequent calls.

Project proposals are reviewed by an internal awards panel and the results are communicated to the applicants approximately 2.5 months after the submission deadline. Each project will also be assigned an Amazon computer vision researcher contact. The researchers are encouraged to maintain regular communication with the contact to discuss ongoing research and progress made on the project. The researchers are also encouraged to publish the outcome of the project and commit any related code to open source code repositories.

Learn more about the submission requirements, mechanism, and deadlines for the project proposals here. Applications (and questions) should be submitted to aara-submission@amazon.com as a single PDF file by October 1, 2016. Please include [AARA 2016 CV Submission] in the subject line. The awards panel will announce its decisions about 2.5 months later.