Category: Education

Automating Governance on AWS

IT governance identifies risk and determines the controls needed to mitigate that risk. Traditionally, IT governance has required long, detailed documents and hours of work for IT managers, security or audit professionals, and admins. Automating governance on AWS offers a better way.

Let’s consider a world where only two things matter: customer trust and cost control. First, we need to identify the risks to mitigate:

- Customer Trust: Customer trust is essential. Allowing customer data to be obtained by unauthorized third parties is damaging to customers and the organization.

- Cost: Doing more with your money is critical to success for any organization.

Then, we identify what policies we need to mitigate those risks:

- Encryption at rest should be used to protect stored customer data from access by unauthorized parties.

- A consistent instance family should be used to allow for better Reserved Instance (RI) optimization.

Finally, we can set up automated controls to make sure those policies are followed. With AWS, you have several options:

Encrypting Amazon Elastic Block Store (EBS) volumes is a fairly straightforward configuration. Validating that every volume has been encrypted, however, may not be as easy: a volume can still be left unencrypted, whether by oversight or on purpose. AWS Config Rules can automatically check, in near real time, that all volumes are encrypted. Here is how to set that up:

- Open the AWS Management Console

- Go to Services -> Management Tools -> Config

- Click Add Rule

- Select the encrypted-volumes rule

- Click Save

Once the rule has been evaluated, you can see a list of EBS volumes and whether each one complies with the new policy. This example uses a built-in AWS Config rule, but you can also write custom rules where no built-in rule exists.
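The same rule can also be registered programmatically. The sketch below assumes that the console's encrypted-volumes rule corresponds to the AWS managed rule identifier `ENCRYPTED_VOLUMES` and uses boto3's `put_config_rule`; the local `evaluate` helper is only an illustration of the policy's logic, not how AWS Config evaluates resources internally.

```python
from typing import Dict, List

# Assumed managed-rule identifier behind the console's "encrypted-volumes" rule.
MANAGED_RULE_ID = "ENCRYPTED_VOLUMES"


def encrypted_volumes_rule(rule_name: str = "encrypted-volumes") -> Dict:
    """Build the ConfigRule argument for AWS Config's PutConfigRule call."""
    return {
        "ConfigRuleName": rule_name,
        "Source": {"Owner": "AWS", "SourceIdentifier": MANAGED_RULE_ID},
        "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Volume"]},
    }


def create_rule(config_client=None) -> Dict:
    """Register the rule. boto3 is imported only when no client is injected."""
    if config_client is None:
        import boto3  # needs AWS credentials and an active configuration recorder
        config_client = boto3.client("config")
    return config_client.put_config_rule(ConfigRule=encrypted_volumes_rule())


def evaluate(volumes: List[Dict]) -> Dict[str, str]:
    """Local illustration of the policy: a volume complies only if encrypted.

    Each dict uses the VolumeId / Encrypted keys returned by EC2 DescribeVolumes.
    """
    return {
        v["VolumeId"]: "COMPLIANT" if v.get("Encrypted") else "NON_COMPLIANT"
        for v in volumes
    }
```

Injecting the client keeps the rule definition testable without AWS credentials; in an account, calling `create_rule()` with no arguments uses the default boto3 session.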

Once you have several Amazon Elastic Compute Cloud (Amazon EC2) instances running, you will eventually want to consider purchasing Reserved Instances (RIs) for the cost savings they provide. Focus on one or a few instance families and purchase RIs of different sizes within those families. This matters because RIs can be split and combined into different sizes within a family if the workload changes in the future, so reducing the number of instance families in use increases the likelihood that a future need can be met by resizing existing RIs. To enforce this policy, an IAM policy can be used to allow creation of EC2 instances only in the approved families. See below:
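A minimal sketch of such a policy, generated in Python. The approved families (`m4`, `c4`) are hypothetical placeholders. This version uses an explicit `Deny` with `StringNotLike` on the `ec2:InstanceType` condition key, so it acts as a guardrail alongside whatever policy already grants `ec2:RunInstances`; it is one reasonable way to express the rule, not necessarily the one the author used.

```python
import json
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical approved families; substitute the ones your RIs cover.
APPROVED_FAMILIES = ["m4", "c4"]


def instance_family_policy(families: List[str]) -> Dict:
    """Deny launching any instance type outside the approved families.

    An explicit Deny overrides any Allow during IAM policy evaluation.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnapprovedInstanceFamilies",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    # Matches when the type fits none of the patterns.
                    "StringNotLike": {
                        "ec2:InstanceType": [f"{fam}.*" for fam in families]
                    }
                },
            }
        ],
    }


def is_allowed(instance_type: str, families: List[str]) -> bool:
    """Local approximation of the condition using the same wildcard patterns."""
    return any(fnmatchcase(instance_type, f"{fam}.*") for fam in families)


if __name__ == "__main__":
    print(json.dumps(instance_family_policy(APPROVED_FAMILIES), indent=2))
```

Running the script prints a JSON document that can be attached to a user, group, or role.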

It’s just that simple.

With AWS automated controls, system administrators don’t need to memorize every required control, security admins can easily validate that policies are being followed, and IT managers can see it all on a dashboard in near real time – thereby decreasing organizational risk and freeing up resources to focus on other critical tasks.

To learn more about Automating Governance on AWS, read our white paper, Automating Governance: A Managed Service Approach to Security and Compliance on AWS.

A guest blog by Joshua Dayberry, Senior Solutions Architect, Amazon Web Services Worldwide Public Sector

Developing an Emergency Communications Plan with Georgia Tech

A guest post by Adam Arrowood, Georgia Tech Cyber Security, Georgia Institute of Technology

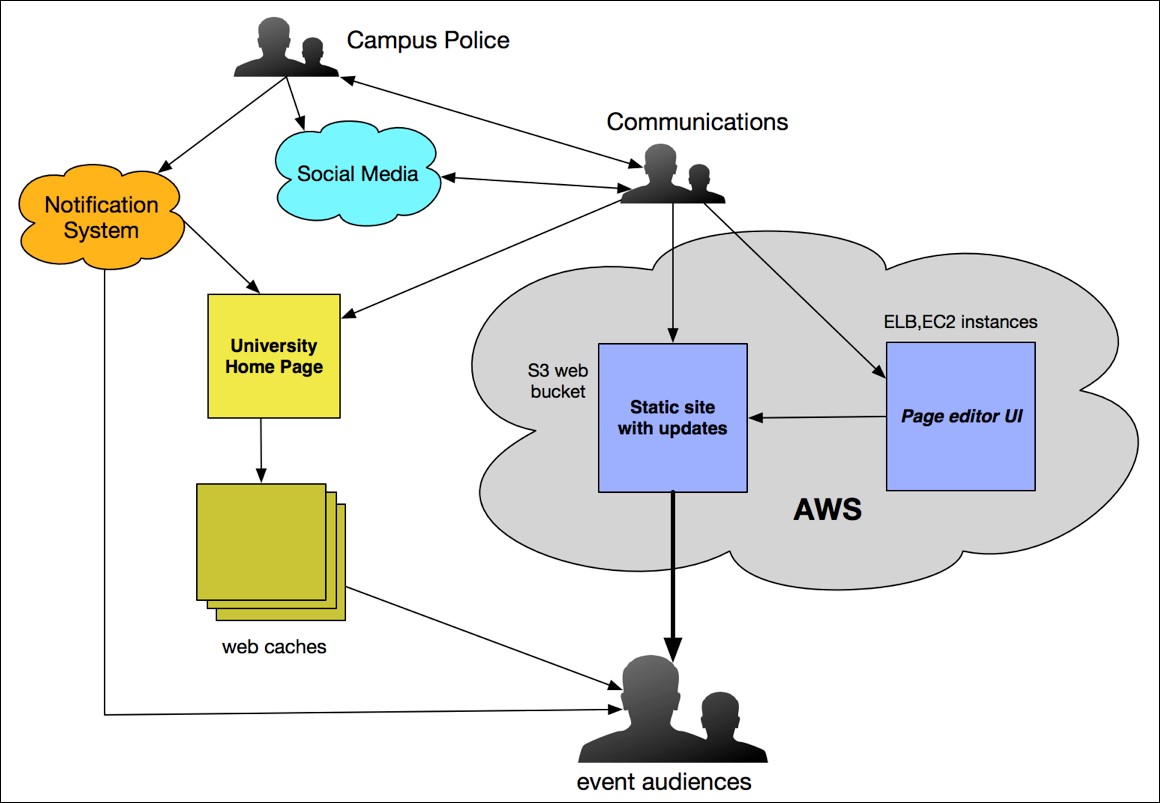

The Georgia Tech Office of Information Technology has taken a first step into cloud computing and emergency preparedness by deciding to host our crucial emergency communications website on Amazon Web Services (AWS).

Georgia Tech maintains an on-premises main campus website that acts as the focus of our institute’s communications. We architected the site to use a content management system for easier editing and web caches for handling a large amount of external web traffic. However, many situations, from a campus-wide lockdown to a weather-related closing, could easily cause traffic to exceed the limits of our on-campus systems. Preparing for such emergency communication scenarios led our IT office to look to the cloud and AWS for a solution.

Developing an emergency communications plan

Our first step was to gather our IT, cyber security, communications, and emergency planning experts and create communication plans for foreseeable emergency situations, such as an active shooter, tornado, or a bomb threat. Our plans explain who communicates what, when, and over what channels. For most campus emergencies, all of our communication channels, from Facebook to Twitter to our off-campus-hosted SMS notification service, will direct all users to one single voice: our emergency website.

One initial concern was designing a website architecture that could handle the large number of page requests we would expect during an emergency. There are three basic ways to manage such a heavy traffic spike:

- Over-design – Create a robust infrastructure that has significantly greater capacity than needed for average daily use.

- Scale Rapidly – Create a flexible infrastructure that can quickly allocate additional CPU, memory, and bandwidth.

- Redirect or bounce – Send visitors off your local resources to somewhere that can easily handle the greater traffic demands.

With the first two options unavailable to us due to time and monetary constraints, we chose to redirect our emergency web traffic to an external resource. Given that rapid, automated scaling is the bread and butter of cloud computing, Amazon Web Services was a logical choice.

Georgia Tech’s AWS choice

There are several different cloud-based techniques and technologies that can be used to create scalable systems capable of handling an emergency traffic spike; we chose Amazon Simple Storage Service (Amazon S3). Amazon S3 website hosting provides several clear advantages:

- Amazon S3 website hosting is easy to set up. The AWS Management Console was all that was needed to get the initial S3 bucket and site hosting set up.

- Traffic scaling is automatically handled by the existing Amazon S3 infrastructure. Since Amazon S3 holds trillions of objects and regularly peaks at millions of requests per second, serving data for many major corporations, our extra emergency web traffic is unlikely to overload the system.

- It is affordable, both during inactivity (pennies per month) and in the event of an emergency. For example, in the case of an emergency lasting three hours, involving 30,000 people reloading our homepage every twenty seconds, our total cost should be less than $30.

- Amazon S3 versioning, once enabled on a bucket, automatically retains every prior version of an object, allowing us to keep a copy of the page at every stage of an emergency event without extra effort or coding.

- The limitation of static-HTML-only web pages forced our web designers to focus on what matters most for communication during crises: a quick-loading, mobile-first design (one not weighed down by heavy CMS demands). After all, the worst-case scenario could involve thousands of people sheltered under desks, reloading our site every few seconds on their smartphones.
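The cost estimate above can be reproduced as simple arithmetic. In the sketch below, the S3 request price, the data-transfer price, and the page size are parameters you supply (no actual rates are assumed); the request count, however, follows directly from the scenario: three hours of reloads every 20 seconds is 540 reloads per visitor, or 16.2 million requests for 30,000 visitors.

```python
def emergency_request_count(visitors: int, hours: float, reload_seconds: float) -> int:
    """Total page requests if every visitor reloads at a fixed interval."""
    reloads_per_visitor = int(hours * 3600 // reload_seconds)
    return visitors * reloads_per_visitor


def estimate_cost(
    requests: int,
    page_kb: float,
    get_price_per_10k: float,
    transfer_price_per_gb: float,
) -> float:
    """Request charges plus data-transfer-out charges, in dollars."""
    request_cost = requests / 10_000 * get_price_per_10k
    transfer_gb = requests * page_kb / (1024 * 1024)  # KB -> GB
    return request_cost + transfer_gb * transfer_price_per_gb


requests = emergency_request_count(visitors=30_000, hours=3, reload_seconds=20)
print(f"{requests:,} requests")  # prints 16,200,000 requests (30,000 x 540)
```

With current S3 pricing plugged into `estimate_cost`, the page size tends to dominate the total, which is another reason a lightweight, static page matters.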

Our communication staff are first trained in hand-editing HTML via a native S3 client. For easier use, we also created a small web application that provides a simple GUI to edit the emergency page. The application runs on two Amazon Elastic Compute Cloud (Amazon EC2) instances behind an Elastic Load Balancer.
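For illustration, here is a minimal sketch of the S3 side of this setup using boto3: turning on static website hosting, enabling versioning so each edit keeps the prior copy, and publishing a new page. The bucket name and the short `Cache-Control` value are hypothetical choices, and the bucket policy that grants public read access is deliberately left out.

```python
def _client(s3=None):
    """Use an injected client (e.g. for tests) or create a boto3 S3 client."""
    if s3 is None:
        import boto3
        s3 = boto3.client("s3")
    return s3


def configure_emergency_bucket(bucket: str, s3=None) -> None:
    s3 = _client(s3)
    # Static website hosting: serve index.html as the site's home page.
    s3.put_bucket_website(
        Bucket=bucket,
        WebsiteConfiguration={"IndexDocument": {"Suffix": "index.html"}},
    )
    # Versioning is off until enabled; once on, overwrites keep the old copy.
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def publish_page(bucket: str, html: str, s3=None, max_age: int = 30) -> dict:
    """Overwrite index.html; with versioning on, the response has a VersionId."""
    s3 = _client(s3)
    return s3.put_object(
        Bucket=bucket,
        Key="index.html",
        Body=html.encode("utf-8"),
        ContentType="text/html; charset=utf-8",
        # A short max-age so browsers pick up emergency updates quickly.
        CacheControl=f"max-age={max_age}",
    )
```

A GUI like the one described would only need to call `publish_page` with the edited HTML; the versioned bucket then preserves every stage of the event.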

Benefits

The main benefit of our AWS architecture is our expanded ability to handle large numbers of web visitors in the event of a crisis. In addition, even this simple system has allowed our IT team to become comfortable using several AWS technologies, including VPCs, EC2, EBS, AMIs, and IAM.

Another unforeseen advantage was that the project gave our campus purchasing department an exploratory opportunity to work out the right and legal way for us to consume AWS resources, lowering hurdles for future AWS-hosted projects.

Amazon S3 has so far worked well for Georgia Tech given that it has the capabilities we need and is affordable. The Amazon sales and engineering teams are helpful, and we are moving forward with our communications plan. We have a rock-solid website to redirect users to, and we are hopeful that we are ready if and when emergencies arise.

A Minimalistic Way to Tackle Big Data Produced by Earth Observation Satellites

The explosion of Earth Observation (EO) data has driven the need to find innovative ways of using that data. We sat down with Grega Milcinski from Sinergise to discuss Sentinel-2. During its six-month pre-operational phase, Sentinel-2 has already produced more than 200 TB of data, more than 250 trillion pixels, yet most of this data, probably up to 90 percent, is never used at all.

What is Sentinel Hub?

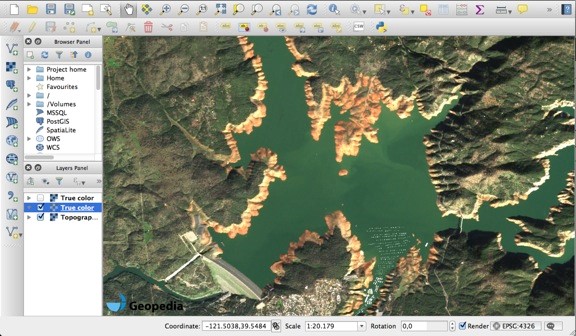

Sentinel-2 acquires images of the whole landmass of Earth every 5-10 days (soon to be twice as often) at 10-meter resolution with multi-spectral data, all available for free. This opens the door to completely new remote sensing processes. We decided to tackle the technical challenge of processing EO data our way: by creating web services that make it possible for everyone to get data in their favorite GIS applications using standard WMS and WCS services. We call this Sentinel Hub.

Figure 1 – low water levels of Lake Oroville, US, 26th of January 2016, served via WMS in QGIS

How did AWS help?

In addition to research grants, which made it easier to start this process, there were three important benefits from working with AWS: public data sets for managing data, auto scaling features for our services, and advanced AWS services, especially AWS Lambda. AWS’s public data sets, such as Landsat and Sentinel, are wonderful. Having all data, on a global scale, available in a structured way and easily accessible in Amazon Simple Storage Service (Amazon S3) removes a major hurdle (and risk) when developing a new application.

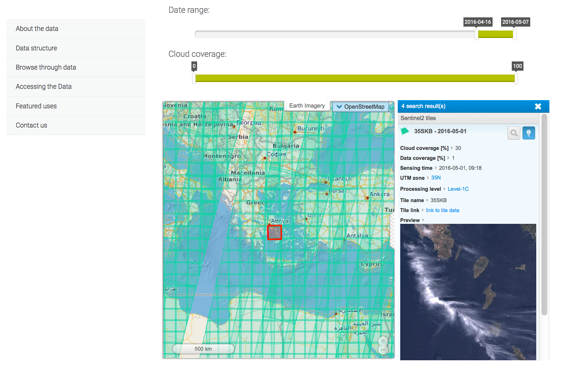

Why did you decide to set up a Sentinel public data set?

We were frustrated with how Sentinel data was distributed for mass use. We then came across the Landsat archive on AWS. After contacting Amazon about similar options for Sentinel, we were provided a research grant to establish the Sentinel public data set. It was a worthwhile investment because we can now access the data in an efficient way. And as others are able to do the same, it will hopefully benefit the EO ecosystem overall.

Figure 2 – Data browser on Sentinel-2 at AWS

How did you approach setting up Sentinel Hub service?

It is not feasible to process the entire archive of imagery on a daily basis, so we tried a different approach: processing the data in real time as each request arrives. When a user asks for imagery at some location, we query the metadata to see what is available, apply the user’s criteria, download the required data from S3, decompress it, re-project it, create the final product, and deliver it to the user within seconds.
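The first two steps of that pipeline, querying the metadata and applying criteria, can be sketched as a filter over a tile catalog. The `Tile` record, its fields, and the 20 percent cloud threshold are hypothetical simplifications, not Sentinel Hub's actual schema; the later steps (download from S3, decompress, re-project, render) are left out.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple

BBox = Tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat)


@dataclass
class Tile:
    tile_id: str
    acquired: date
    cloud_cover: float  # percent of the tile covered by cloud
    bbox: BBox


def intersects(a: BBox, b: BBox) -> bool:
    """True when two axis-aligned boxes overlap or touch."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def select_tiles(
    catalog: List[Tile],
    request_bbox: BBox,
    start: date,
    end: date,
    max_cloud: float = 20.0,
) -> List[Tile]:
    """Tiles covering the request area in the date window, below the cloud limit."""
    hits = [
        t
        for t in catalog
        if intersects(t.bbox, request_bbox)
        and start <= t.acquired <= end
        and t.cloud_cover <= max_cloud
    ]
    # Most recent first; ties broken by the least cloudy tile.
    return sorted(hits, key=lambda t: (-t.acquired.toordinal(), t.cloud_cover))
```

Only the tiles this returns would then be fetched from S3, which is what keeps on-demand processing fast and cheap.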

You mentioned Lambda. How do you make use of it?

It is impossible to predict what somebody will ask for and prepare for it in advance. But once a request arrives, we want the system to perform all steps in the shortest time possible. Lambda helps because it makes it possible to run a large number of processes simultaneously. We also use it to ingest the data and process hundreds of products. In addition to Lambda, we have used AWS’s auto scaling features to seamlessly scale our rendering services. This greatly reduces running costs in off-peak periods and also provides a good user experience when load increases. Having a powerful yet cost-efficient infrastructure in place allows us to focus on developing new features.
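A minimal sketch of the fan-out pattern described here: one Lambda invocation per tile, issued concurrently and collected in order. The function name and the `{"tile_id": ...}` payload shape are hypothetical; the invoker is injected so the orchestration logic can be exercised without AWS.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def lambda_invoker(function_name: str) -> Callable[[Dict], Dict]:
    """Return a callable that synchronously invokes a (hypothetical) Lambda."""
    import boto3

    client = boto3.client("lambda")

    def invoke(payload: Dict) -> Dict:
        resp = client.invoke(
            FunctionName=function_name,
            InvocationType="RequestResponse",  # wait for the result
            Payload=json.dumps(payload).encode("utf-8"),
        )
        return json.loads(resp["Payload"].read())

    return invoke


def fan_out(
    tile_ids: List[str], invoke: Callable[[Dict], Dict], max_workers: int = 50
) -> Dict[str, Dict]:
    """Process every tile in parallel; results are keyed by tile id."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs ids with their results.
        results = list(pool.map(lambda t: invoke({"tile_id": t}), tile_ids))
    return dict(zip(tile_ids, results))
```

In production this might be called as `fan_out(ids, lambda_invoker("render-tile"))`, where `render-tile` stands in for whatever function does the per-tile work.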

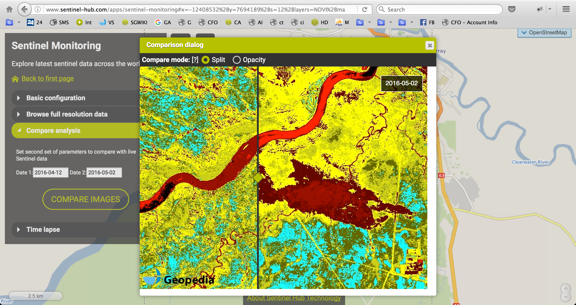

Figure 3 – image improved with atmospheric correction and cloud replacement

Figure 4 – user-defined image computation

Can you estimate cost benefits of this process?

We make use of the freely available data within Amazon public data sets, which directly saves us money. And by orchestrating software and AWS infrastructure, we are able to process data in real time, so we avoid the cost of storing processed products. We estimate that we save hundreds of thousands of dollars annually.

How can the Sentinel Hub service be used?

Anyone can now easily get Sentinel-2 data in their GIS application. You simply need the URL of our WMS service; configure the layers you are interested in, and the data is there without any hassle, extra downloading, time-consuming processing, reprocessing, or compositing. However, the real power of EO comes when it is integrated into web applications, providing a wider context. As there are many open-source GIS tools available, anybody can build these value-added services. To demonstrate, we have built a simple application, called Sentinel Monitoring, where one can observe changes in the land all across the globe.
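Because the service speaks standard OGC WMS, any client can request a map with a plain GetMap URL. The sketch below builds a WMS 1.3.0 request; the base URL and the `TRUE_COLOR` layer name are hypothetical, and the optional `TIME` parameter only applies if the server exposes a time dimension.

```python
from typing import Optional, Sequence
from urllib.parse import urlencode


def wms_getmap_url(
    base_url: str,
    layer: str,
    bbox: Sequence[float],
    width: int,
    height: int,
    time: Optional[str] = None,
    image_format: str = "image/png",
) -> str:
    """Build an OGC WMS 1.3.0 GetMap request URL.

    bbox is (min_x, min_y, max_x, max_y) in EPSG:3857 metres; a projected CRS
    sidesteps WMS 1.3.0's latitude-first axis order for EPSG:4326.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",
        "CRS": "EPSG:3857",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    if time is not None:
        params["TIME"] = time  # e.g. "2016-05-02"
    return f"{base_url}?{urlencode(params)}"
```

GIS applications such as QGIS build equivalent requests for you once they are given the service URL; the function just makes the request explicit.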

Figure 5 – NDVI image of the Alberta wildfires, showing the burned area on 2nd of May. By changing the date, it is possible to see how the fires spread throughout the area.
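NDVI (Normalized Difference Vegetation Index) is computed per pixel as (NIR - Red) / (NIR + Red); for Sentinel-2 these are typically bands B08 and B04. Healthy vegetation reflects strongly in the near infrared, so it scores high, while burned ground usually scores much lower. A minimal sketch on plain nested lists (returning 0.0 where both bands are zero is our own convention):

```python
from typing import List


def ndvi(nir: float, red: float) -> float:
    """(NIR - Red) / (NIR + Red), in the range [-1, 1] for non-negative inputs."""
    total = nir + red
    if total == 0:
        return 0.0  # assumed convention for pixels with no signal
    return (nir - red) / total


def ndvi_image(nir_band: List[List[float]], red_band: List[List[float]]) -> List[List[float]]:
    """Apply ndvi pixel by pixel to two equally shaped reflectance grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]
```

Comparing NDVI images from dates before and after a fire, as in the figure, highlights the pixels where the index dropped.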

What uses of Sentinel-2 on AWS do you expect others to build?

There are lots of possible use cases for EO data from Sentinel-2 imagery. The most obvious example is a vegetation-monitoring map for farmers. By identifying new construction, you can get useful input for property taxation purposes, especially in developing countries, where this information is scarce. The options are numerous and the investment needed to build these applications has never been smaller – the data is free and easily accessible on AWS. One can simply integrate one of the services in the system and the results are there.

Get access and learn more about Sentinel-2 services here. And visit www.aws.amazon.com/opendata to learn more about public data sets provided by AWS.

Launch of the AWS Asia Pacific (Mumbai) Region: What Does it Mean for our Public Sector Customers?

With the launch of the AWS Asia Pacific (Mumbai) Region, India-based developers and organizations, as well as multinational organizations with end users in India, can achieve their mission and run their applications in the AWS Cloud by securely storing and processing their data with single-digit millisecond latency across most of India.

The new Mumbai Region consists of two Availability Zones (AZs) at launch, each of which includes one or more geographically distinct data centers with redundant power, networking, and connectivity. Each AZ is designed to be resilient to issues in another Availability Zone, enabling customers to operate production applications and databases that are more highly available, fault tolerant, and scalable than would be possible from a single data center.

The Mumbai Region supports Amazon Elastic Compute Cloud (EC2) (C4, M4, T2, D2, I2, and R3 instances are available) and related services including Amazon Elastic Block Store (EBS), Amazon Virtual Private Cloud, Auto Scaling, and Elastic Load Balancing.

In addition, AWS has three edge locations in India: Mumbai, Chennai, and New Delhi. These locations support Amazon Route 53, Amazon CloudFront, and S3 Transfer Acceleration. AWS Direct Connect support is available via our Direct Connect Partners. For more details, please see the official release here and Jeff Barr’s post here.

To celebrate the launch of the new region, we hosted a Leaders Forum that brought together over 150 government and education leaders to discuss how cloud computing and the expansion of our global infrastructure have the potential to change the way governments and educators in India design, build, and run their IT workloads.

The speakers shared their insights gained from our 10-year cloud journey, trends on adoption of cloud by our customers globally, and our experience working with government and education leaders around the world.

Please see pictures from the event below.


AWS Public Sector Summit – Washington DC Recap

The AWS Public Sector Summit in Washington DC brought together over 4,500 attendees to learn from top cloud technologists in the government, education, and nonprofit sectors.

Day 1

The Summit began with a keynote address from Teresa Carlson, VP of Worldwide Public Sector at AWS. During her keynote, she announced that AWS now has over 2,300 government customers, 5,500 educational institutions, and 22,000 nonprofits and NGOs. And AWS GovCloud (US) usage has grown 221% year-over-year since launch in Q4 2011. To continue to help our customers meet their missions, AWS announced the AWS Government Competency Program, the AWS GovCloud (US) Skill Program, the AWS Educate Starter Account, and the AWS Marketplace for the U.S. Intelligence Community during the Summit. See more details about each announcement below.

- AWS Government Competency Program: AWS Government Competency Partners have deep experience working with government customers to deliver mission-critical workloads and applications on AWS. Customers working with AWS Government Competency Partners will have access to innovative, cloud-based solutions that comply with the highest AWS standards.

- AWS GovCloud (US) Skill Program: The AWS GovCloud (US) Skill Program provides customers with the ability to readily identify APN Partners with experience supporting workloads in the AWS GovCloud (US) Region. The program identifies APN Consulting Partners with experience in architecting, operating and managing workloads in GovCloud, and APN Technology Partners with software products that are available in AWS GovCloud (US).

- AWS Educate Starter Account: AWS Educate announced the AWS Educate Starter Account that gives students more options when joining the program and does not require a credit card or payment option. The AWS Educate Starter Account provides the same training, curriculum, and technology benefits of the standard AWS Account.

- AWS Marketplace for the U.S. Intelligence Community: We have launched the AWS Marketplace for the U.S. Intelligence Community (IC) to meet the needs of our IC customers. The AWS Marketplace for the U.S. IC makes it easy to discover, purchase, and deploy software packages and applications from vendors with a strong presence in the IC in a cloud that is not connected to the public Internet.

Watch the full video of Teresa’s keynote here.

Teresa was then joined onstage by three other female IT leaders: Deborah W. Brooks, Co-Founder & Executive Vice Chairman, The Michael J. Fox Foundation for Parkinson’s Research; LaVerne H. Council, Chief Information Officer, Department of Veterans Affairs; and Stephanie von Friedeburg, Chief Information Officer and Vice President, Information and Technology Solutions, The World Bank Group.

Each of the speakers addressed how their organization is paving the way for disruptive innovation. Whether it was using the cloud to eradicate extreme poverty, serving and honoring veterans, or fighting Parkinson’s disease, each demonstrated patterns of innovation that help make the world a better place through technology.

The day 1 keynote ended with the #Smartisbeautiful call to action encouraging everyone in the audience to mentor and help bring more young girls into IT.

Day 2

The second day’s keynote included a fireside chat with Andy Jassy, CEO of Amazon Web Services. Watch the Q&A here.

Andy addressed the crowd and shared Amazon’s passion for public sector, the expansion of AWS regions around the globe, and the customer-centric approach AWS has taken to continue to innovate and address the needs of our customers.

We also announced the winners of the third City on a Cloud Innovation Challenge, a global program to recognize local and regional governments and developers that are innovating for the benefit of citizens using the AWS Cloud. See a list of the winners here.

Videos, slides, photos, and more

AWS Summit materials are now available online for your reference and to share with your colleagues. Please view and download here:

- Watch the Day 1 Keynote featuring Teresa Carlson, VP of Worldwide Public Sector, AWS, The Department of Veterans Affairs, The World Bank Group, and The Michael J. Fox Foundation

- Watch the Day 2 Fireside Chat with Andy Jassy, CEO of AWS

- View and download slides from the breakout sessions

- Read the event press coverage

- Access the videos from the two-day event – with more videos coming soon

We hope to see you next year at the AWS Public Sector Summit in Washington, DC on June 13-14, 2017.

Connecting Students Everywhere to a Cloud Education


There are more than 18,000,000 exciting cloud computing jobs globally, with long-term career prospects and high earning potential. And that number is growing. But a problem remains: how do industry and education give more students access to the learning they need to be the generation of cloud-ready workers?

AWS Educate was founded in 2015 with the vision of giving every student who dreams of a cloud career an educational path to achieve their goal. Students have no-cost access to training, curriculum, and cloud computing technology needed to freely experiment, innovate, and learn in the cloud. Today, more than 500 institutions have joined the program.

At last week’s AWS Public Sector Summit, AWS Educate announced the AWS Educate Starter Account that gives students more options when joining the program and does not require a credit card or payment option. The AWS Educate Starter Account provides the same training, curriculum, and technology benefits of the standard AWS Account.

AWS Educate Starter Accounts include added safeguards, such as not requiring a credit card for account creation and capping the credits available to the user. Regardless of income level or geographic location, students can learn in the cloud on their own terms. You can find more details here, or check out the AWS Educate Starter Account FAQs.

Ready to connect your students to a cloud education? Visit awseducate.com to learn more about program benefits and to join.

Call for Computer Vision Research Proposals with New Amazon Bin Image Data Set

Amazon Fulfillment Centers are bustling hubs of innovation that allow Amazon to deliver millions of products to over 100 countries worldwide with the help of robotic and computer vision technologies. Today, the Amazon Fulfillment Technologies team is releasing the Amazon Bin Image Data Set, which is made up of over 1,000 images of bins inside an Amazon Fulfillment Center. Each image is accompanied by metadata describing the contents of the bin in the image. The Amazon Bin Image Data Set can now be accessed by anyone as an AWS Public Data Set. This is an interim, limited release of the data. Several hundred thousand images will be released by the fall of 2016.
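Because each bin image comes with metadata describing its contents, a natural first use is an item-counting baseline. The sketch below assumes, and this is an assumption to verify against the data set's documentation, that each metadata file is JSON with an `EXPECTED_QUANTITY` field and a `BIN_FCSKU_DATA` object whose entries carry a `quantity`.

```python
import json
from typing import Dict


def load_metadata(path: str) -> Dict:
    """Read one metadata JSON file (field names below are assumed)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def item_count(metadata: Dict) -> int:
    """Sum the per-product quantities listed for the bin."""
    items = metadata.get("BIN_FCSKU_DATA", {})
    return sum(int(item.get("quantity", 0)) for item in items.values())


def is_consistent(metadata: Dict) -> bool:
    """Check that per-product quantities add up to the bin's expected total."""
    return item_count(metadata) == int(metadata.get("EXPECTED_QUANTITY", -1))
```

A counting model's predictions could be scored against `item_count` for each image, after filtering out any records where `is_consistent` fails.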

Call for Research Proposals

The Amazon Academic Research Awards (AARA) program is soliciting computer vision research proposals for the first time. The AARA program funds academic research and related contributions to open source projects by top academic researchers throughout the world.

Proposals can focus on any relevant area of computer vision research. There is particular interest in recognition research, including, but not limited to, large-scale, fine-grained instance matching, apparel similarity, counting items, object detection and recognition, scene understanding, saliency and segmentation, real-time detection, image captioning, question answering, weakly supervised learning, and deep learning. Research that can be applied to multiple problems and data sets is preferred and we specifically encourage submissions that could make use of the Amazon Bin Image Data Set from Amazon Fulfillment Technologies. We expect that future calls will focus on other topics.

Awards Structure and Process

Awards are structured as one-year unrestricted gifts to academic institutions, and can include up to 20,000 USD in AWS Promotional Credits. Though the funding is not extendable, applicants can submit new proposals for subsequent calls.

Project proposals are reviewed by an internal awards panel and the results are communicated to the applicants approximately 2.5 months after the submission deadline. Each project will also be assigned an Amazon computer vision researcher contact. The researchers are encouraged to maintain regular communication with the contact to discuss ongoing research and progress made on the project. The researchers are also encouraged to publish the outcome of the project and commit any related code to open source code repositories.

Learn more about the submission requirements, mechanism, and deadlines for the project proposals here. Applications (and questions) should be submitted to aara-submission@amazon.com as a single PDF file by October 1, 2016. Please include [AARA 2016 CV Submission] in the subject line. The awards panel will announce its decisions about 2.5 months later.

If the Tool You Want Doesn’t Exist, Build Your Own

If the tool you want doesn’t exist, Amazon Web Services (AWS) gives you the platform to build your own. That was the case with Wayne State University as they struggled with finding a portal that allowed them choice, innovation, and mobile support (at an affordable cost).

Wayne State’s Challenge: Finding the Right Tool for the Job

Previously, Wayne State had a traditional intranet portal, where faculty, staff, and students would log in to register for classes, check grades, and learn about what was happening on campus. With this original system, they were often tied down by hardware maintenance fees, an inability to scale, and an old way of communicating marked by laundry lists of links and little engagement and interaction with users. The university wanted to make their portal a destination for people to do business and collaborate with Wayne State. In February of 2012, they made the decision to look at other portals, but could not find exactly what they were looking for in the market.

The Solution: Building the Right Tool for the Job

Wayne State decided to build their own portal, using AWS as a platform.

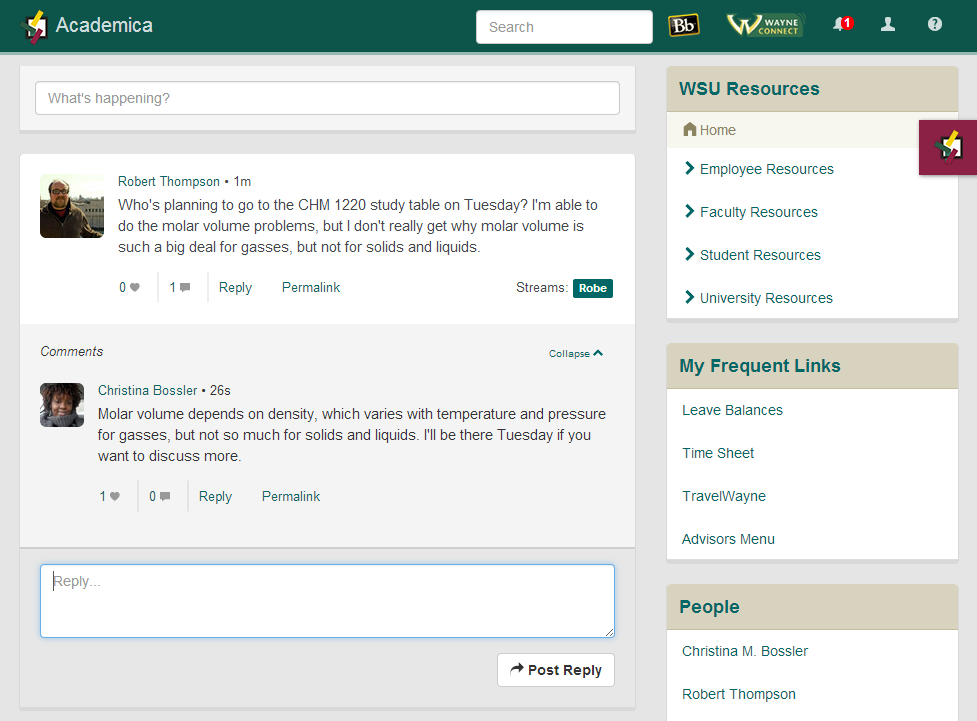

The university built an Enterprise Social Portal (ESP) called Academica that provides students with access to all key student-facing university systems, including email, Blackboard LMS, payment systems, course tools, and admissions application status. The platform is cross-device, cross-platform, and responsive on all devices. In addition to basic core portal features such as SSO, there is a social media aspect: a “Twitter-like” real-time messaging platform for the school that supports hashtags and other social mechanisms. See the image below.

“With cloud hosting, the sky is the limit. We can now adapt to the needs of the campus,” said Rob Thompson, Senior Director, Academic, Core & Intelligence Applications, Computing & Information Technology, Wayne State University.

Why AWS?

Wayne State made the strategic decision to build on AWS as part of their next-generation platform, using Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Simple Storage Service (Amazon S3). AWS offered the easiest, most cost-effective, and most scalable development environment they could find. Building in-house gave them mobile support, responsiveness, flexibility, and a modern framework. Other benefits they have realized include:

Cost Savings – Wayne State historically sees the highest traffic to its portal during a 15-minute period right before the winter semester. “What we would have to do is buy all the hardware to handle the requests at the peak level for a 15-20 minute window. And for the other 364 days of the year, it would sit idle. Now we are actually saving money on days with less demand, but we can quickly scale to handle the spikes in traffic when needed.”

“We save money on the licensing costs, we have halved our maintenance costs by going to AWS. We no longer buy for the high-water mark. We provide a consistent user experience, no matter what day of the year,” Mike Gregorowicz, Systems Architect, Wayne State University, added.

Scalability – By looking at the needs of their campus and finding new ways to better their services, they can now easily scale to 75,000+ users and well beyond that. “By far, the most powerful thing for us has been dynamic scaling. We can scale up and scale out at times to meet the limits of spot instances, resulting in even more savings,” Mike said.

Zero Downtime – Rob and Mike can do updates any time of day with zero downtime. Although they prefer to do updates on the weekends, they know that, in emergency situations, they can make updates without disrupting their visitors.

Shareable – When they first built and deployed the portal, it caught the attention of other schools that needed this type of innovation on campus. This model allows them to share their platform with other schools. They are now able to commercialize their product and share their model through Merit Network, a non-profit organization providing research and education networking services, as well as other services. Since deploying Academica at Wayne State, they have even received interest from schools around the globe.
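The cost and scalability benefits above come down to simple sizing arithmetic: provisioning for the peak pays for peak capacity every hour, while dynamic scaling pays only for what each period needs. The sketch below models only that arithmetic with hypothetical numbers (requests per instance, minimum and maximum fleet size); real Auto Scaling is driven by scaling policies and CloudWatch metrics rather than a function like this.

```python
import math
from typing import List, Tuple


def desired_capacity(load: float, per_instance: float, min_size: int, max_size: int) -> int:
    """Instances needed for a given load, clamped to the group's size limits."""
    needed = math.ceil(load / per_instance)
    return max(min_size, min(max_size, needed))


def instance_hours(
    hourly_load: List[float], per_instance: float, min_size: int, max_size: int
) -> Tuple[int, int]:
    """Return (instance-hours with scaling, instance-hours if sized for the peak)."""
    scaled = sum(
        desired_capacity(load, per_instance, min_size, max_size) for load in hourly_load
    )
    peak = desired_capacity(max(hourly_load), per_instance, min_size, max_size)
    return scaled, peak * len(hourly_load)
```

For example, with a hypothetical profile of `[100, 100, 1000, 100]` requests per hour and 100 requests per instance, scaling uses 13 instance-hours versus 40 when the fleet is sized for the single peak hour.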

Learn how you can realize some of these benefits with AWS by visiting the “Cloud Computing for Education” page.

What Data Egress Means for Higher Education: A Q&A with Internet2

In March, we announced that AWS is offering a data egress discount to qualified researchers and academic customers, making it easier for researchers to use its cloud storage, computing, and database services by waiving data egress fees. We had the opportunity to sit down with Andrew Keating, Director of NET+ Cloud Services at Internet2, to discuss the impact of the data egress discount on higher education and how the cloud is transforming research in the academic world. Andrew received his Ph.D. from UC Berkeley and, prior to his current role, worked at the university building cyberinfrastructure and programs to support data-intensive research.

Internet2, a member-owned advanced technology community, provides a collaborative environment for U.S. research and education organizations to solve common technology challenges and to develop innovative solutions in support of their educational, research, and community service missions.

What does Internet2 hear from its member institutions about how they are using cloud computing and the benefits it provides them?

Andrew: Over the past few years, universities have shifted their thinking from whether to deploy cloud services to a conversation about how to do so strategically and effectively. Several universities have adopted “cloud-first” strategies to move all or most of their enterprise IT services to the cloud. Even those universities that do not specifically call out “cloud first” are moving significantly in that direction.

Researchers were using cloud services before the term became popular, in the sense that cross-institutional collaboration and sharing of research data sets has been taking place for decades. These days, the significant shift is that researchers are looking to commercial cloud providers as an alternative to building their own “clouds” through on-premises hardware. The efficiency and time-to-research gains they are making are already substantial, and they are able to use their grant dollars more effectively.

What are researchers using the cloud for?

Andrew: At the high end of data-intensive research, the cloud is enabling more efficient deployment of storage and compute capabilities, and on-demand capabilities are dramatically reducing the time it takes to begin a research project. In some respects, physicists, astronomers, and others with data- or compute-intensive needs have had these capabilities through supercomputing centers for some time. What’s different for them are the economic efficiencies of being able to spin up a virtual supercomputer on demand, as well as not having to deal with hardware installation, maintenance, or waiting for a schedule slot to open up.

In my opinion, the biggest and most immediate impact of the cloud on research is that it makes storage and compute capabilities, which previously would have been available only through a supercomputing center, broadly accessible to researchers. The cloud lets researchers get to work almost immediately.

What can researchers do now that wasn’t possible before the cloud? How does cloud help them?

Andrew: Cloud services have reduced the administrative and technical barriers to engaging in research activities and scientific discovery. Researchers are able to deploy the resources they need on demand without purchasing, maintaining, or administering hardware. We are also beginning to see the impact of cloud-based machine learning and analytics that will further transform scientific discovery. Even as recently as a few years ago, researchers analyzing a data set would need to have an idea about patterns or trends they wanted to find in the data. Machine learning is increasingly helping detect patterns or correlations in large data sets, and the specialized skills and domain expertise of the researcher can be focused on understanding and interpreting the meaning of those machine-generated observations or patterns.

Why is data egress so important to researchers?

Andrew: Reducing or eliminating data egress charges for researchers eliminates one more perceived barrier to cloud service adoption. When a researcher receives a grant, does the work, and decides to store the data on AWS, having to come back later and pay to move it out presents some conceptual and practical problems. For one, the researcher may have exhausted the grant funds, so depending on the amount of the charge there could be a financial burden. More conceptually, there was unease in the research community about the perception that data was “held hostage” or that their research work product would not be accessible to them or their colleagues. We are happy that AWS listened to its customers and responded to the needs of researchers who identified data egress charges as a barrier to broader adoption of cloud services.

Thank you for your time, Andrew! Read more about how AWS helps researchers in the cloud and how it supports higher education.

Looking Deep into our Universe with AWS

The International Centre for Radio Astronomy Research (ICRAR) in Western Australia has recently announced a new scientific finding using innovative data processing and visualization techniques developed on AWS. Astronomers at ICRAR have been involved in the detection of radio emissions from hydrogen in a galaxy more than 5 billion light years away. This is almost twice the previous record for the most distant hydrogen emissions observed, and has important implications for understanding how galaxies have evolved over time.

Figure 1: The Very Large Array on the Plains of San Agustin. Credit: D. Finley, NRAO/AUI/NSF

Working with large, fast-growing data sets like these requires new scalable tools and platforms, which ICRAR is helping to develop for the astronomy community. The Data Intensive Astronomy (DIA) program at ICRAR, led by Dr. Andreas Wicenec, used AWS to experiment with new methods of analyzing and visualizing data coming from the Very Large Array (VLA) at the National Radio Astronomy Observatory in New Mexico. AWS has enabled the DIA team to quickly prototype new data processing pipelines and visualization tools without spending millions in precious research funds that are better spent on astronomers than on computers. As data volumes grow, the team can scale their processing and visualization tools to accommodate that growth. In this case, the DIA team prototyped and built a platform to process tens of terabytes of raw data and reduce it to a more manageable size. They then made that data available in what they refer to as a ‘data cube.’

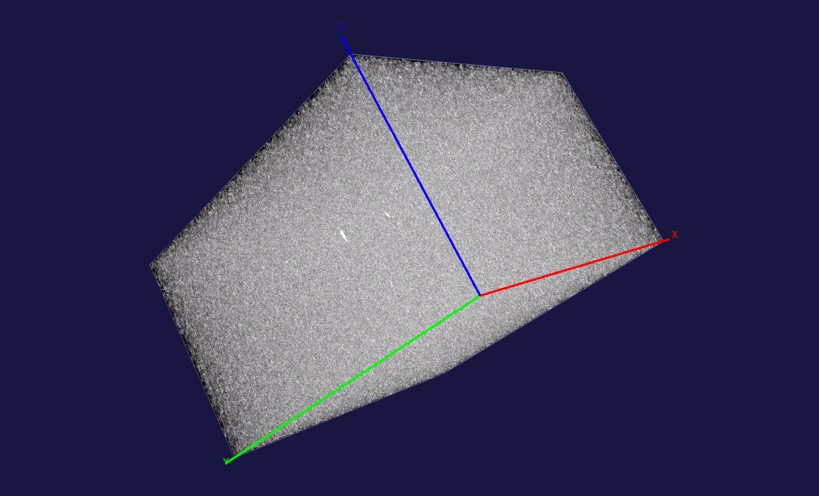

Figure 2: An image of the full data cube constructed by ICRAR

This image shows the full data cube constructed by the team at ICRAR. The cube provides a data visualization model that allows astronomers, like Dr. Attila Popping and his team at ICRAR, to search in either space or time through large images created from observations made with the VLA. Astronomers can interact with the data cube in real time and stream it to their desktops. Having interactive access to the full data set makes it possible to find new objects of interest that would otherwise go unseen or be much more difficult to find.

ICRAR estimates that the network, compute, and storage capacity required to move and crunch this data would otherwise have made this work infeasible. By using AWS, they were able to quickly and cheaply build their new pipelines, and then scale them as massive amounts of data arrived from their instruments. They used the Amazon Elastic Compute Cloud (Amazon EC2) Spot market, accessing AWS’s spare capacity at 50-90% less than standard On-Demand pricing, which reduced costs even further and leads the way for many researchers looking to use AWS in their own fields of research. When super-science projects like the Square Kilometre Array (SKA) come online, they’ll be ready. Without the cloud to enable their experiments, the team would still be investigating how to do the experiments instead of actually conducting them.

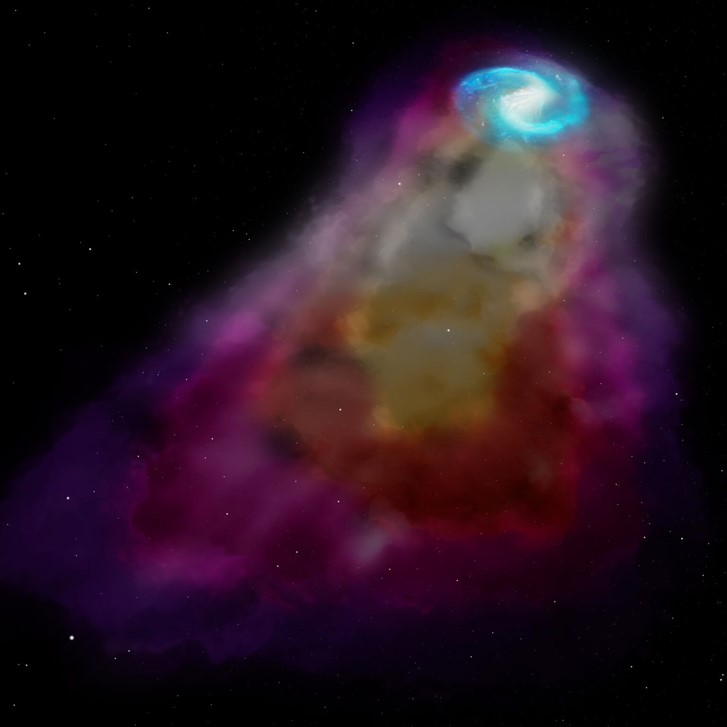

We are privileged to work with customers who are pushing the boundaries of science using AWS, and expanding our understanding of the universe at the same time. In Figure 3, you can see an artist’s impression of the newly discovered hydrogen emissions from a galaxy more than 5 billion light years away from Earth. To learn more about the work performed by the ICRAR team, please read their media release.

Figure 3: An artist’s impression of the discovered hydrogen emissions

To learn more about how customers are using AWS for Scientific Computing, please visit our Scientific Computing on AWS website.

Images provided by and used with permission of the International Centre for Radio Astronomy Research.