Whiteboard with an SA: Amazon Virtual Private Cloud

How should you design your first VPC? Amazon Virtual Private Cloud (VPC for short) lets you provision an isolated section of the cloud to run your AWS resources in a network you control. When planning your VPC network design, there are several design considerations to explore.

In this video, Warren Santner, AWS Solutions Architect (SA), shows you how to plan and deploy your VPC to have complete control over your virtual networking environment, including selection of your own IP address range, creation of subnets, and configuration of route tables and network gateways. He will walk you through several design patterns taking into account these three criteria:

- Who are your users?

- What systems are accessing your resources?

- Where are they accessing these resources?

Watch this whiteboarding video to learn how to design your first VPC.

Continue to whiteboard with our AWS Worldwide Public Sector Solutions Architects for step-by-step instructions and demos on topics important to you in our YouTube Channel. Have a question about cloud computing? Our public sector Solutions Architects are here to help! Send us your question at aws-wwps-blog@amazon.com.

AWS Public Sector Month in Review – August

Here is the first edition of the AWS Public Sector Month in Review. Each month we will be curating all of the content published for the education, government, and nonprofit communities including blogs, white papers, webinars, and more.

Let’s take a look at what happened in August:

All – Government, Education, & Nonprofits

- 2016 GovLoop Guide – Government and the Internet of Things: Your Questions Answered

- Cloud Adoption Maturity Model: Guidelines to Develop Effective Strategies for Your Cloud Adoption Journey

- Building a Microsoft BackOffice Server Solution on AWS with AWS CloudFormation

- Exploring the Possibilities of Earth Observation Data

- California Apps for Ag Hackathon: Solving Agricultural Challenges with IoT

Education

Government

- Jeff Bezos Joins Defense Secretary Ash Carter’s New Defense Innovation Advisory Board

- Acquia, an APN Technology Partner, Leverages AWS to Achieve FedRAMP Compliance

- Ransomware Fightback Takes to the Cloud

- City on a Cloud Innovation Challenge: Partners in Innovation and Cloud Innovation Leadership Award Winners

Nonprofits

New Customer Success Stories

Latest YouTube Videos

Whiteboard with our AWS Worldwide Public Sector Solutions Architects for step-by-step instructions and demos on topics important to you. Have a question about cloud computing? Our public sector SAs are here to help! Send us your question at aws-wwps-blog@amazon.com.

- AWS SA Whiteboarding | Amazon Virtual Private Cloud (VPC)

- AWS Q&A with an SA: | How should I design my first VPC?

- AWS SA Whiteboarding | AWS Direct Connect

- AWS SA Whiteboarding | Tagging Demo

- AWS Q&A with an SA: | What are tags and what can I do with them?

- AWS SA Whiteboarding | AWS Code Deployment

- AWS Q&A with an SA | How do I easily deploy my code on AWS?

Recent Webinars

- Geospatial Analytics in the Cloud with ENVI and Amazon Web Services

- FedRAMP High & AWS GovCloud (US): Meet FISMA High Requirements

- Running Microsoft Workloads in the AWS Cloud

Upcoming Events

Attend one of our upcoming events and meet with AWS experts to get all of your questions answered. Register for one of the events below:

- September 12-13 – Massachusetts Digital Summit – Boston, MA

- September 12-14 – LWDA – Potsdam

- September 12-14 – EO Open Science – Frascati, Italy

- September 13-14 – UK Defence Symposium – London

- September 16-17 – New York Digital Summit – New York, NY

- September 17-21 – NASCIO Annual Conference – Orlando, FL

- September 18-21 – ISM for Human Services – Phoenix, AZ

- September 19 – AWS Agriculture Analysis in the Cloud Day at The Ohio State University – Columbus, OH

- September 19-20 – SC CJIS Users Conference – Myrtle Beach, SC

- September 20-21 – DonorPerfect Conference – Philadelphia, PA

- September 22 – AWS Summit – Rio de Janeiro

- September 28 – AWS Summit – Lima

- September 29 – Tech & Tequila MeetUp: Tapping into Tech Hubs – Arlington, VA

Keep checking back with us. In the meantime, are you following us on social media? Follow along to stay up to date with all of the real-time AWS news for government and education.

Industry, Teaching, and Jobs: How One Instructor Keeps Learning to Prepare Students for Cloud Careers

To fuel the pipeline of technologists entering the workforce, the British Columbia Institute of Technology (BCIT), one of Canada’s largest post-secondary polytechnics, is constantly looking for ways to bring innovative technology to their students. Whether it is equipping students with cloud computing resources through AWS Educate or partnering with technology companies to understand the skills necessary to succeed post-graduation, BCIT is an example of the importance of industry and education working together to meet the increasing demand for cloud employees.

Unlike a college or university, BCIT combines small classes, applied academics, and hands-on experience so that students are ready to launch their careers from day one. With 300 new students in computer science each year, BCIT creates a custom curriculum geared toward achieving a high placement rate. This past year, 75% of BCIT students secured jobs in a related field upon graduation. How do they achieve such a high placement rate? They bring the cloud into the classroom and keep adjusting the courses to provide the most innovative, up-to-date technology resources to their students.

Cloud in the Classroom

“We brought in our first cloud computing class four years ago, and recently we made the decision to only teach AWS. Instead of breadth, we decided to give students depth on AWS so they can easily transition into the workforce,” Dr. Bill Klug, Cloud Computing Option Head & Instructor at BCIT, said. “And AWS Educate is really important to give students the opportunity to work on the platform, so they don’t have to pay to consume the resources.”

Every class has a lab with it, mostly built as two-hour lectures with two-hour labs. During the labs, students are given assignments and projects, such as creating a virtual private cloud (VPC) with two parts: a public subnet and a private subnet. In the public subnet, they set up an OpenVPN server, a NAT instance, and a web server. In the private subnet, they set up a database server.

Students are using their AWS Educate credits for compute resources used in labs, including Amazon Elastic Compute Cloud (Amazon EC2), Amazon Simple Storage Service (Amazon S3), and Amazon Route 53. These exercises move from theory into practice, helping students get the skills they need to secure a job after graduation.

AWS Educate provides students and educators with the resources needed to accelerate cloud-related learning endeavors. Students are able to access cloud content, training, collaboration tools, and AWS technology at no cost to prepare them for a future as cloud entrepreneurs.

Cloud Outside the Classroom

Last summer, Dr. Klug approached Cloudreach, an APN Premier Consulting Partner, to see if he could work for them for five months to learn how they use the AWS Cloud. From January to May, he shadowed DevOps engineers to learn the types of workloads customers are moving to the cloud and what skillsets employees need to maintain to be able to provide the best resources for their customers’ needs. He then took these methods back to the classroom to make sure his department was delivering the training required.

“Building these relationships between the university and technology companies provides value to both of us. They know that they are getting someone that is properly trained for the work they will be doing right out of school, and we can tailor our curriculum to the kinds of skills needed,” Dr. Klug said.

As a result of his sabbatical, and to develop courses that match what is needed, BCIT created an “option,” or a minor, in cloud computing, the first of its kind in Canada. This option includes AWS Educate for classes such as “Cloud Computing Platforms,” “DevOps Engineering,” and “Programming in the Cloud.”

Ready to help your students skill up on cloud to get them ready for cloud careers? Visit the AWS Educate web page to sign up your institution or class, or let your students know about individual student accounts.

MalariaSpot: Diagnose Diseases with Video Games

More than one billion people in the world entertain themselves with apps and video games. Only a hobby? For Miguel Luengo Oroz, the answer is no. Miguel and his team from the Technical University of Madrid (UPM) have resolved to use the collective intelligence of players from around the world to help diagnose diseases that kill thousands of people every day.

Parasites rather than spaceships

The idea originated in 2012. “While I was working for the United Nations in global health challenges, it caught my attention how tough and manual the process of diagnosing malaria was,” explains Miguel. “It can take up to 30 minutes to identify and count the parasites in a blood sample that cause the disease. There are not enough specialists in the world to diagnose all the cases!”

Miguel, a great fan of videogames, had an idea: “Why not create a video game in which rather than shooting spaceships we search for parasites?” And MalariaSpot was born, a game available for computers and mobile devices in which the “malaria hunter” has one minute to detect the parasites in a real, digitized blood sample.

Since its launch, more than 100,000 people in 100 countries have “hunted” one and a half million parasites, and the results are promising. When the clicks made by many players on the same image sample are combined by artificial intelligence, the resulting count is as precise as an expert’s, and it is obtained more quickly.

“We published a study that proved that the collective diagnosis by the use of a videogame is not a crazy thing, but now it needs to be assessed from a medical point of view,” explains Miguel. His team cooperates with a clinic in Mozambique, has run some tests in real time, and has achieved the first collaborative remote diagnosis of malaria from Africa.

The technology platform to host the game was the key. “We needed a flexible infrastructure that worked from anywhere in the world. We usually have traffic spikes when we appear in media or when we do campaigns in social networks, and we saw that Amazon Web Services (AWS) offered a good solution for auto scaling based on demand,” Miguel said.

Miguel and his team use the AWS Research Grants program that allows students, teachers, and researchers to transfer their activities to the cloud and innovate rapidly at a low cost. “We can now test different services without having to worry about the bill,” explains Miguel.

From the White House to neighborhood schools

The MalariaSpot project has attracted the recognition of entities such as the Singularity University of NASA, the Office of Science and Technology of the White House, and the Massachusetts Institute of Technology (MIT), which has named Miguel one of the ten Spaniards under 35 with potential to change the world through technology.

But one of the greatest rewards for Miguel and his team comes from much closer to home. They enjoy visiting schools all over Spain and helping awaken unsuspected scientific vocations. “Today’s kids are digital natives. They are used to seeing and analyzing complex images on a screen,” says Miguel. This shows the value of videogames for education and awareness. During the last World Malaria Day on April 25th, thousands of Spanish students participated in “Olympic malaria video games,” playing the new game MalariaSpot Bubbles. School teams competed to become the best virtual hunters of malaria parasites.

“With MalariaSpot, we have even been able to reach kids who were not very good at biology. In a workshop that we ran in a school last year, the kid who won was the worst troublemaker out of his whole class,” explains Miguel (with a smile).

The future of medical diagnosis is not only defined in laboratories. “We are at a turning point where technology allows ubiquitous connectivity. We, and the rest of our generation, are responsible to direct all the possibilities that technology offers us to initiatives that make a real impact on the lives of people. And what better than health.”

With MalariaSpot and its “younger sister,” TuberSpot, Miguel and his young team of researchers are contributing so that in five years, 5% of video games are used to analyze medical images. Their objective? “Achieve a low cost diagnosis of global diseases, accessible to any person anywhere around the planet.”

Moving Buildings Leads to the All-In Move to the Cloud for Pacific Northwest College of Art

For Pacific Northwest College of Art (PNCA), an arts college with under 1,000 students, their move to the cloud began with a physical move. When a renovated post office became the new classroom for students and office for the team, they were faced with deciding whether to bring their servers with them or leave them behind.

“We had to decide if we wanted to put together a big proposal to ask for $100,000 to $200,000 to build out a server room in the new building or we could start paying a couple of thousand dollars monthly and create a new model without having to make the big financial request,” Brennen Florey, senior web developer and one of the technology co-directors at PNCA, said.

Prior to the move, their servers sat in a small closet with an air conditioner blowing on them. They were at the end of their life cycle, and these servers needed an upgrade. They were anxious to move their “old-school environment” to a new, advanced architecture to better serve the students, but still needed to have the trust and security to meet mandates, such as the Family Educational Rights and Privacy Act (FERPA), to continue to protect student data.

“Education as an institution can be a little hesitant to adopt new technology, but technology supports education. The old content and concept of doing things with the service closets was not furthering our mission to better educate our students and give them the resources to be successful,” Brennen said. “Why not leverage the most modern tool and technology to educate, and not have to build or manage hardware?”

With a willingness to consider cloud technology, the team of Brennen, Jason Williams, and Teresa Christiansen set a goal to make a fresh start in their new building and decided to leave the servers behind. They started by moving their web applications to Amazon Elastic Compute Cloud (Amazon EC2), then they began moving their mission-critical apps as well. Their journey evolved with new AWS releases and innovations. Since the infrastructure shaped how they delivered those web apps, they then began using Amazon Route 53, Amazon Simple Storage Service (Amazon S3), Amazon CloudFront, Amazon Simple Notification Service (SNS), and Amazon Simple Queue Service (SQS). They started by “dipping their toes in the water,” achieving small successes and then letting them grow.

“We were able to start small and build small successes and the Amazon model allowed us to continue to grow. You can set up one service or 35. You don’t need a big ramp to make a radical change,” Jason Williams, Senior Application Engineer and Technology Co-Director at PNCA, said.

To make the case to the CFO and other leadership to go all-in on AWS, the small technology team had to prove the value and deliver across the board. “We needed to show no downtime and no weakness,” Brennen said. “The case was made when the leadership saw that as we slowly made the move, the stability was there, there were obvious improvements with speed and capabilities, and we were saving money along the way. It paid off for the organization.”

“We didn’t begin the process as AWS certified experts, and there was a learning curve and an educational period,” Jason said. “But the move to the cloud challenged us to grow as professionals and actually shaped what kind of department we had from who we hired to what we prioritized.”

In the first 6-12 months, the college had almost 80% of their applications on AWS. One application in particular was their learning management system, Homeroom, which they built themselves. It started as a small application sitting on a server beneath a desk. Every time an update needed to be made, it went down. “We were very much at the mercy of a single box, but as we moved onto the Amazon architecture, Homeroom entered a new era of stability, which meant a more powerful toolset being delivered to the school,” Brennen said. These benefits were seen by faculty, staff, and students.

Ransomware Fightback Takes to the Cloud

Guest post by Raj Samani, EMEA CTO Intel Security (@Raj_Samani)

“How many visitors do you expect to access the No More Ransom Portal?”

This was the simple question asked prior to this law enforcement (Europol’s European Cybercrime Centre, Dutch Police) and private industry (Kaspersky Lab, Intel Security) portal going live, which I didn’t have a clue how to answer. What do YOU think? How many people do you expect to access a website dedicated to fighting ransomware?

If you said 2.6 million visitors in the first 24 hours, then please let me know six numbers you expect to come up in the lottery this weekend (I will spend time until the numbers are drawn to select the interior of my new super yacht). I have been a long-time advocate of cloud technology, and its benefit of rapid scalability came to the rescue when our visitor numbers blew expected numbers out of the water. To be honest, if we had attempted to host this site internally, my capacity estimates would have resulted in the portal crashing within the first hour of operation. That would have been embarrassing and entirely my fault.

Indeed my thoughts on the use of cloud computing technology are well documented in various blogs, my work within the Cloud Security Alliance, and the book I recently co-authored. I have often used the phrase, “Cloud computing in the future will keep our lights on and water clean.” The introduction of Amazon Web Services (AWS) and the AWS Marketplace into the No More Ransom Initiative to host the online portal demonstrates that the old myth, “one should only use the cloud for non-critical services,” needs to be quickly archived into the annals of history.

To ensure such an important site was ready for the large influx of traffic at launch, we had around the clock support out of Australia and the U.S. (thank you, Ben Potter and Nathan Case from AWS!), which meant everything was running as it should and we could handle millions of visitors on our first day. This, in my opinion, is the biggest benefit of the cloud. Beyond scalability, and the benefits of outsourcing the management and the security of the portal to a third party, an added benefit was that my team and I could focus our time on developing tools to decrypt ransomware victims’ systems, conduct technical research, and engage law enforcement to target the infrastructure to make such keys available.

AWS also identified controls to reduce the risk of the site being compromised. With the help of Barracuda, they implemented these controls and regularly test the portal to reduce the likelihood of an issue.

Thank you, AWS and Barracuda, and welcome to the team! This open initiative is intended to provide a non-commercial platform to address a rising issue targeting our digital assets for criminal gain. We’re thrilled that we are now able to take the fight to the cloud.

Building a Microsoft BackOffice Server Solution on AWS with AWS CloudFormation

An AWS DevOps guest blog post by Bill Jacobi, Senior Solutions Architect, AWS Worldwide Public Sector

Last month, AWS released the AWS Enterprise Accelerator: Microsoft Servers on the AWS Cloud along with a deployment guide and CloudFormation template. This blog post will explain how to deploy complex Windows workloads and how AWS CloudFormation solves the problems related to server dependencies.

This AWS Enterprise Accelerator solution deploys the four most requested Microsoft servers ─ SQL Server, Exchange Server, Lync Server, and SharePoint Server ─ in a highly available multi-AZ architecture on AWS. It includes Active Directory Domain Services as the foundation. By following the steps in the solution, you can take advantage of the email, collaboration, communications, and directory features provided by these servers on the AWS IaaS platform.

There are a number of dependencies between the servers in this solution, including:

- Active Directory

- Internet access

- Dependencies within server clusters, such as needing to create the first server instance before adding additional servers to the cluster.

- Dependencies on AWS infrastructure, such as sharing a common VPC, NAT gateway, Internet gateway, DNS, routes, and so on.

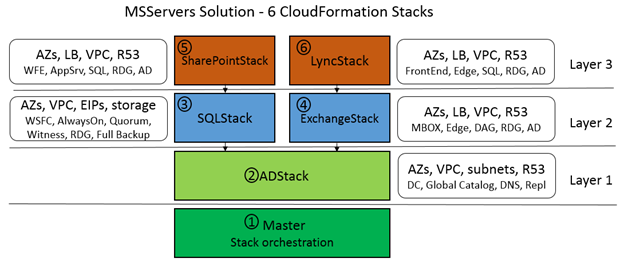

The infrastructure and servers are built in three logical layers. The Master template orchestrates the stack builds with one stack per Microsoft server and manages inter-stack dependencies. Each of the CloudFormation stacks uses PowerShell to stand up the Microsoft servers at the OS level. Before it configures the OS, CloudFormation configures the AWS infrastructure required by each Windows server. Together, CloudFormation and PowerShell create a quick, repeatable deployment pattern for the servers. The solution supports 10,000 users. Its modularity at both the infrastructure and application level enables larger user counts.

Managing Stack Dependencies

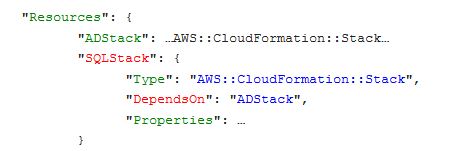

Here is how we enabled the dependencies between the stacks. SQLStack is dependent on ADStack because SQL Server is dependent on Active Directory and, similarly, SharePointStack is dependent on SQLStack, both as required by Microsoft. Lync is dependent on Exchange because both servers must extend the AD schema independently. In Master, these server dependencies are coded in CloudFormation as follows:

and

The “DependsOn” statements in the stack definitions force the order of stack execution to match the diagram. Lower layers are executed and successfully completed before the upper layers. If you do not use “DependsOn”, CloudFormation will execute your stacks in parallel. An example of parallel execution is what happens after ADStack returns SUCCESS. The two higher-level stacks, SQLStack and ExchangeStack, are executed in parallel at the next level (layer 2). SharePoint and Lync are executed in parallel at layer 3. The arrows in the diagram indicate stack dependencies.
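As a rough illustration of the layering described above, the following Python sketch builds a Master-style "Resources" section as plain dictionaries and groups the stacks into layers based on their "DependsOn" attributes. It is not the solution's actual template: the TemplateURL values, the LyncStack name, and the helper functions are assumptions made for this example, and the grouping only mimics how CloudFormation orders dependent resources.

```python
import json

def nested_stack(name, depends_on=None):
    """Build a nested-stack resource; the TemplateURL is a placeholder."""
    resource = {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": f"https://example.com/templates/{name}.json"},
    }
    if depends_on:
        resource["DependsOn"] = depends_on
    return resource

# Dependencies as described in the post; "LyncStack" is an assumed name.
resources = {
    "ADStack": nested_stack("ADStack"),
    "SQLStack": nested_stack("SQLStack", "ADStack"),
    "ExchangeStack": nested_stack("ExchangeStack", "ADStack"),
    "SharePointStack": nested_stack("SharePointStack", "SQLStack"),
    "LyncStack": nested_stack("LyncStack", "ExchangeStack"),
}

def depends_on(resource):
    """Return a resource's DependsOn attribute as a set of names."""
    value = resource.get("DependsOn", [])
    return {value} if isinstance(value, str) else set(value)

def layers(resources):
    """Group stacks into layers; stacks in one layer have no mutual dependency."""
    remaining = dict(resources)
    done, result = set(), []
    while remaining:
        ready = sorted(n for n, r in remaining.items() if depends_on(r) <= done)
        if not ready:
            raise ValueError("circular DependsOn")
        result.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return result

if __name__ == "__main__":
    print(json.dumps({"Resources": resources}, indent=2))
    for number, names in enumerate(layers(resources), start=1):
        print(f"layer {number}: {', '.join(names)}")
```

Stacks that land in the same layer are the ones CloudFormation is free to create in parallel, which matches the three layers in the diagram.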

Passing Parameters Between Stacks

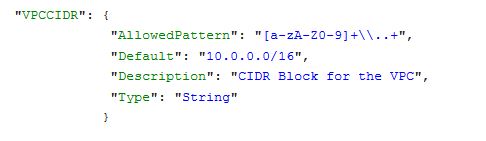

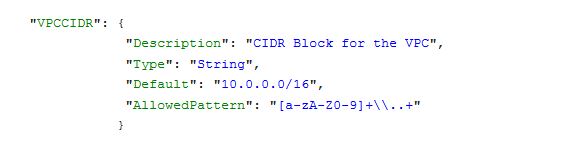

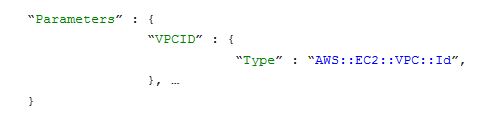

To see how infrastructure parameters are passed between the stack layers, consider an example in which we want to pass the same VPCCIDR to all of the stacks in the solution. VPCCIDR is defined as a parameter in Master as follows:

Master defines VPCCIDR and solicits user input for its value. The value is then passed to ADStack through an identically named and typed parameter declared in both Master and the stack being called.
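A minimal sketch of this hand-off, written as Python dictionaries that mirror CloudFormation JSON, might look like the following. The default CIDR, the TemplateURL, the security group rule (LDAP on port 389), and the helper function are assumptions for illustration only, and the child template is a fragment rather than a complete, deployable stack.

```python
import json

# Master declares VPCCIDR and forwards it to the nested ADStack.
master = {
    "Parameters": {
        "VPCCIDR": {
            "Type": "String",
            "Default": "10.0.0.0/16",  # assumed default, not from the solution
            "Description": "CIDR block for the VPC",
        }
    },
    "Resources": {
        "ADStack": {
            "Type": "AWS::CloudFormation::Stack",
            "Properties": {
                "TemplateURL": "https://example.com/templates/ADStack.json",  # placeholder
                "Parameters": {"VPCCIDR": {"Ref": "VPCCIDR"}},
            },
        }
    },
}

# ADStack declares a parameter with the same name and type, then uses Ref.
ad_stack = {
    "Parameters": {"VPCCIDR": {"Type": "String"}},
    "Resources": {
        "DomainController1SG": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "Domain controller 1 (illustrative rule)",
                "SecurityGroupIngress": [
                    {
                        "IpProtocol": "tcp",
                        "FromPort": 389,  # assumed port for this sketch
                        "ToPort": 389,
                        "CidrIp": {"Ref": "VPCCIDR"},
                    }
                ],
            },
        }
    },
}

def undeclared_parameters(parent, stack_name, child):
    """List parameters the parent passes that the child does not declare."""
    passed = parent["Resources"][stack_name]["Properties"].get("Parameters", {})
    declared = child.get("Parameters", {})
    return sorted(name for name in passed if name not in declared)

if __name__ == "__main__":
    print(json.dumps(ad_stack, indent=2))
    print("undeclared:", undeclared_parameters(master, "ADStack", ad_stack))
```

The small check at the end reflects the rule in the text: every parameter Master passes must have a matching declaration in the stack being called.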

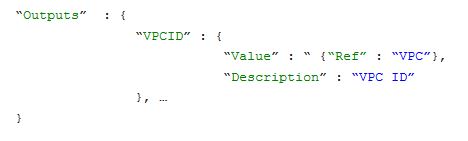

After Master defines VPCCIDR, ADStack can use "Ref": "VPCCIDR" in any resource (such as the security group, DomainController1SG) that needs the VPC CIDR range of the first domain controller. Instead of passing commonly named parameters between stacks, another option is to pass outputs from one stack as inputs to the next. For example, if you want to pass VPCID between two stacks, you could accomplish this as follows. Create an output like VPCID in the first stack:

In the second stack, create a parameter with the same name and type:
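The output-to-parameter pattern can be sketched as follows, again using Python dictionaries that mirror CloudFormation JSON. The stack names FirstStack and SecondStack, the CIDR block, the TemplateURL values, and the wire_output helper are hypothetical; the "Fn::GetAtt" form with "Outputs.<name>" is the standard way for a parent template to read a nested stack's output.

```python
import json

# First stack: creates the VPC and exposes its ID as an output.
first_stack = {
    "Resources": {
        "VPC": {
            "Type": "AWS::EC2::VPC",
            "Properties": {"CidrBlock": "10.0.0.0/16"},  # assumed CIDR
        }
    },
    "Outputs": {
        "VPCID": {"Description": "ID of the VPC", "Value": {"Ref": "VPC"}}
    },
}

# Second stack: declares a parameter with the same name.
second_stack = {
    "Parameters": {"VPCID": {"Type": "AWS::EC2::VPC::Id"}},
}

# Parent template resources (placeholder URLs).
parent_resources = {
    "FirstStack": {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": "https://example.com/templates/first.json"},
    },
    "SecondStack": {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": "https://example.com/templates/second.json"},
    },
}

def wire_output(resources, producer, consumer, output_name):
    """Pass producer's output to consumer's same-named parameter."""
    params = resources[consumer]["Properties"].setdefault("Parameters", {})
    params[output_name] = {"Fn::GetAtt": [producer, f"Outputs.{output_name}"]}

wire_output(parent_resources, "FirstStack", "SecondStack", "VPCID")

if __name__ == "__main__":
    print(json.dumps(parent_resources, indent=2))
```

Because the second stack's parameter references the first stack through "Fn::GetAtt", CloudFormation treats it as an implicit dependency and creates the first stack before the second.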

Managing Dependencies Between Resources Inside a Stack

All of the dependencies so far have been between stacks. Another type of dependency is one between resources within a stack. In the Microsoft servers case, an example of an intra-stack dependency is the need to create the first domain controller, DC1, before creating the second domain controller, DC2.

DC1, like many cluster servers, must be fully created first so that it can replicate common state (domain objects) to DC2. In this solution, all of the Microsoft servers require that a single server (such as DC1 or Exch1) be fully created to define the cluster or farm configuration used on subsequent servers.

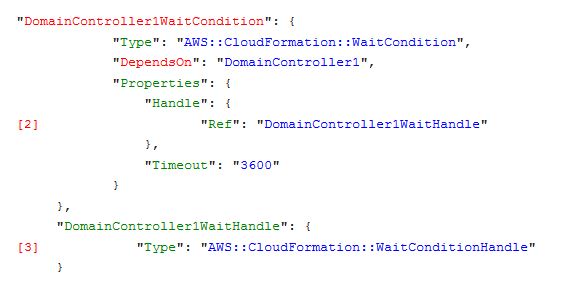

Here’s another intra-stack dependency example: The Microsoft servers must fully configure the Microsoft software on the Amazon EC2 instances before those instances can be used. So there is a dependency on software completion within the stack after successful creation of the instance, before the rest of stack execution (such as deploying subsequent servers) can continue. Intra-stack dependencies like “software is fully installed” are managed through the use of wait conditions. Wait conditions are resources, just like EC2 instances, so the “DependsOn” attribute mentioned earlier can be used with them to manage dependencies inside a stack. For example, to pause the creation of DC2 until DC1 is complete, we configured the following “DependsOn” attribute using a wait condition. See (1) in the following diagram:

The WaitCondition (2) depends on a CloudFormation resource called a WaitHandle (3), which receives a SUCCESS or FAILURE signal from the creation of the first domain controller:

SUCCESS is signaled in (4) by cfn-signal.exe --exit-code 0 during the “finalize” step of DC1, which enables CloudFormation to execute DC2 as an EC2 resource via the wait condition.

If the timeout had been reached in step (2), this would have automatically signaled a FAILURE and stopped stack execution of ADStack and the Master stack.
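The wait-condition wiring described in steps (1) through (4) can be sketched roughly as follows, with Python dictionaries standing in for the template JSON. The resource names DC1WaitHandle and DC1WaitCondition, the timeout value, the ImageId placeholders, and the creation_order helper are assumptions for this example; the resource types, the "Handle" and "Timeout" properties, and the cfn-signal "-e" (exit code) option are standard CloudFormation features.

```python
import json

resources = {
    # (3) The handle yields a presigned URL that receives the signal.
    "DC1WaitHandle": {"Type": "AWS::CloudFormation::WaitConditionHandle"},
    # (2) The wait condition blocks until the handle is signaled or times out.
    "DC1WaitCondition": {
        "Type": "AWS::CloudFormation::WaitCondition",
        "DependsOn": "DC1",
        "Properties": {"Handle": {"Ref": "DC1WaitHandle"}, "Timeout": "3600"},
    },
    "DC1": {
        "Type": "AWS::EC2::Instance",
        "Properties": {"ImageId": "ami-PLACEHOLDER"},  # placeholder value
    },
    # (1) DC2 is not created until the wait condition succeeds.
    "DC2": {
        "Type": "AWS::EC2::Instance",
        "DependsOn": "DC1WaitCondition",
        "Properties": {"ImageId": "ami-PLACEHOLDER"},  # placeholder value
    },
}

# (4) Command run on DC1 in its final configuration step to signal SUCCESS.
signal_command = {
    "Fn::Join": ["", ["cfn-signal.exe -e 0 \"", {"Ref": "DC1WaitHandle"}, "\""]]
}

def depends_on(resource):
    """Return a resource's DependsOn attribute as a set of names."""
    value = resource.get("DependsOn", [])
    return {value} if isinstance(value, str) else set(value)

def creation_order(resources):
    """Order resources so each comes after everything it DependsOn."""
    order, done = [], set()
    while len(order) < len(resources):
        ready = sorted(
            name for name, res in resources.items()
            if name not in done and depends_on(res) <= done
        )
        if not ready:
            raise ValueError("circular DependsOn")
        order.extend(ready)
        done.update(ready)
    return order

if __name__ == "__main__":
    print(json.dumps(resources, indent=2))
    print(" -> ".join(creation_order(resources)))
```

The ordering helper only models explicit "DependsOn" attributes; in a real stack, the wait condition additionally pauses until DC1 actually sends its signal or the timeout expires.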

As we have seen in this blog post, you can create both nested stacks and nested dependencies and can pass parameters between stacks by passing standard parameters or by passing outputs. Inside a stack, you can configure resources that are dependent on other resources through the use of wait conditions and the cfn-signal infrastructure. The AWS Enterprise Accelerator solution uses both techniques to deploy multiple Microsoft servers in a single VPC for a Microsoft BackOffice solution on AWS.

In a future blog post on the AWS DevOps Blog, we will illustrate how PowerShell can be used to bootstrap and configure Windows instances with downloaded cmdlets, all integrated into CloudFormation stacks.

This post was originally published on the AWS DevOps Blog. For similar posts and insights for Developers, DevOps Engineers, Sysadmins, and Architects, visit the AWS DevOps Blog. And learn more from Bill in the “Running Microsoft Workloads in the AWS Cloud” Webinar here.

Cloud Transformation Maturity Model: Guidelines to Develop Effective Strategies for Your Cloud Adoption Journey

The Cloud Transformation Maturity Model offers a guideline to help organizations develop an effective strategy for their cloud adoption journey. This model defines characteristics that determine the stage of maturity, transformation activities within each stage that must be completed to move to the next stage, and outcomes that are achieved across four stages of organizational maturity, including project, foundation, migration, and optimization.

Where are you on your journey? Follow the table below to determine what stage you are in:

Advice from All-In Customers

We also want to share advice from our customers across various industries who have decided to go all-in on the AWS Cloud.

“One of the things we’ve learned along the way is the culture change that is needed to bring people along on that cloud journey and really transforming the organization, not only convincing them that the technology is the right way to go, but winning over the hearts and minds of the team to completely change direction.”

— Mike Chapple, Sr. Director for IT Service Delivery, Notre Dame

“For other systems we saw that we could make fast configurations inside Amazon that would make those systems run faster. It was a huge success. And the thing about that type of success is that it breeds more interest in getting that kind of success. So after starting a proof of concept with AWS, we immediately began to expand until fully migrated on AWS.”

— Eric Geiger, VP of IT Ops, Federal Home Loan Bank of Chicago

“The greatest thing about AWS for us is that it really scales with the business needs, not only from a capacity perspective, but also from a regional expansion perspective so that we can take our business model, roll it out across the region and eventually across the globe.”

We want to help your organization through your journey. Contact our sales and business development teams at https://aws.amazon.com/contact-us/ or take the “Guide to Cloud Success” training brought to you by the GovLoop Academy.

Also check out the Cloud Transformation Maturity Model whitepaper to learn more.

California Apps for Ag Hackathon: Solving Agricultural Challenges with IoT

The Apps for Ag Hackathon is an agricultural-focused hackathon designed to solve real-world farming problems through the application of technology. In partnership with the University of California Division of Agriculture and Natural Resources, the US Department of Agriculture (USDA), and the California State Fair, AWS sponsored the Hackathon to find ways to help farmers improve soil health, curb insect infestations and boost water efficiency through Internet of Things (IoT) technologies. The 48-hour event brought together teams from across Northern California, including commercial, federal, state and local, and education organizations.

The goal of the Hackathon was to create sensor-enabled apps to help farmers do things like track water use and fight insect invasions. We provided credits for teams to develop and build their solutions on AWS. Also, Intel provided several Internet of Things (IoT) Kits to help participants build sensor-based solutions on AWS. Additionally, AWS technologists provided onsite architectural guidance and team mentoring.

Check out the winning teams below and the innovative applications built to solve agricultural challenges:

- First Place – GivingGarden, a hyper-local produce sharing app with a big vision.

- Second Place – Sense and Protect, IoT sensors and a mobile task management app to increase farm worker safety and productivity.

- Third Place – ACP STAR SYSTEM, a geo and temporal database and platform for tracking Asian Citrus Psyllid and other invasive pests.

- Fourth Place – Compostable, an app and IoT device that diverts food waste from landfills and turns it into fertilizer and fuel so that it can go back to the farm.

At the Hackathon, we worked closely with many young computer science students and helped them understand the benefits of cloud computing. Experimenting on AWS Lambda allowed the students to deploy serverless applications on AWS quickly. This greatly reduced the time spent focusing on infrastructure and allowed the teams to focus on developing their application. The IoT kits also enabled rapid development and prototyping of solutions. In addition, the teams took advantage of AWS Elastic Beanstalk for rapid delivery of application code.

Working with several states, participants and mentors were able to share information and cross-pollinate ideas, which not only provided value to the development teams at the Hackathon, but will also be valuable to other states in the future.

Learn more about how AWS IoT makes it easy to use AWS services like AWS Lambda, Amazon Kinesis, Amazon S3, Amazon Machine Learning, Amazon DynamoDB, Amazon CloudWatch, AWS CloudTrail, and Amazon Elasticsearch Service with built-in Kibana integration, to build IoT applications that gather, process, analyze, and act on data generated by connected devices, without having to manage infrastructure.

We are hosting an Agriculture Analysis in the Cloud Day with The Ohio State University’s 2016 Farm Science Review. You’re invited to attend the event on September 19th, 2016 from 8:30am – 6:30pm EDT (Breakfast and Lunch are provided), taking place at OSU in the Nationwide & Ohio Farm Bureau 4-H Center. Register now (seats are limited).

Exploring the Possibilities of Earth Observation Data

Recently, we have been sharing stories of how customers like Zooniverse and organizations like Sinergise have used the Sentinel-2 data made available via Amazon Simple Storage Service (Amazon S3). From disaster relief to vegetation monitoring to property taxation, this data set allows organizations to build tools and services that improve citizens’ lives.

We recently connected with Andy Wells from Sterling Geo and looked back at ways the world has changed in the past decade and looked ahead at the possibilities using Earth Observation data.

The world has been using satellite imagery for over 20 years, but the average organization today is just starting to reap the benefits of remote sensing. From start to finish, these organizations need to consider: Where do I get the data from? And what do I do with all of the data?

As a big data use case, it doesn’t get much bigger than this. The physical amount of data captured weekly, daily, and hourly is astronomical and the world keeps spinning. And since the world keeps spinning, it also keeps changing.

“To say there is a tsunami of data is an understatement. And the physical amount of data is only going to go up exponentially. Businesses can get swamped considering: Where do I buy? How do I buy? Why do I buy?” said Andy Wells, Managing Director of Sterling Geo. “But there is an enormous opportunity to evolve governments, educational institutes, and charities by translating that data into something they may need.”

Data is just data unless it is turned into information that can inform better decisions. Without the technology to help make sense of all the data, it never turns into useful information.

Moving from a static model to a dynamic model allows you to keep up with the influx of data coming from satellite imagery and Internet of Things (IoT) technologies. This is a giant leap forward and a big part of what Andy and his team are doing.

Looking at the ‘What Ifs’ – The Art of the Possible

What if you could put your analytics engine on the cloud? Instead of investing money up front, running software locally, and training a person without ever seeing value, organizations can turn to the cloud. All of a sudden, you don’t have to invest in the software up front. You just have to run the compute process to turn raw data into information.

“The magic bit of it is that by leveraging the cloud, we can present the result to the end user in a form that is just right for them. It could be in a map or an image in a simple email, taking the heavy lifting off the customer and just providing them with the business information that users need to actually make decisions,” Andy said.

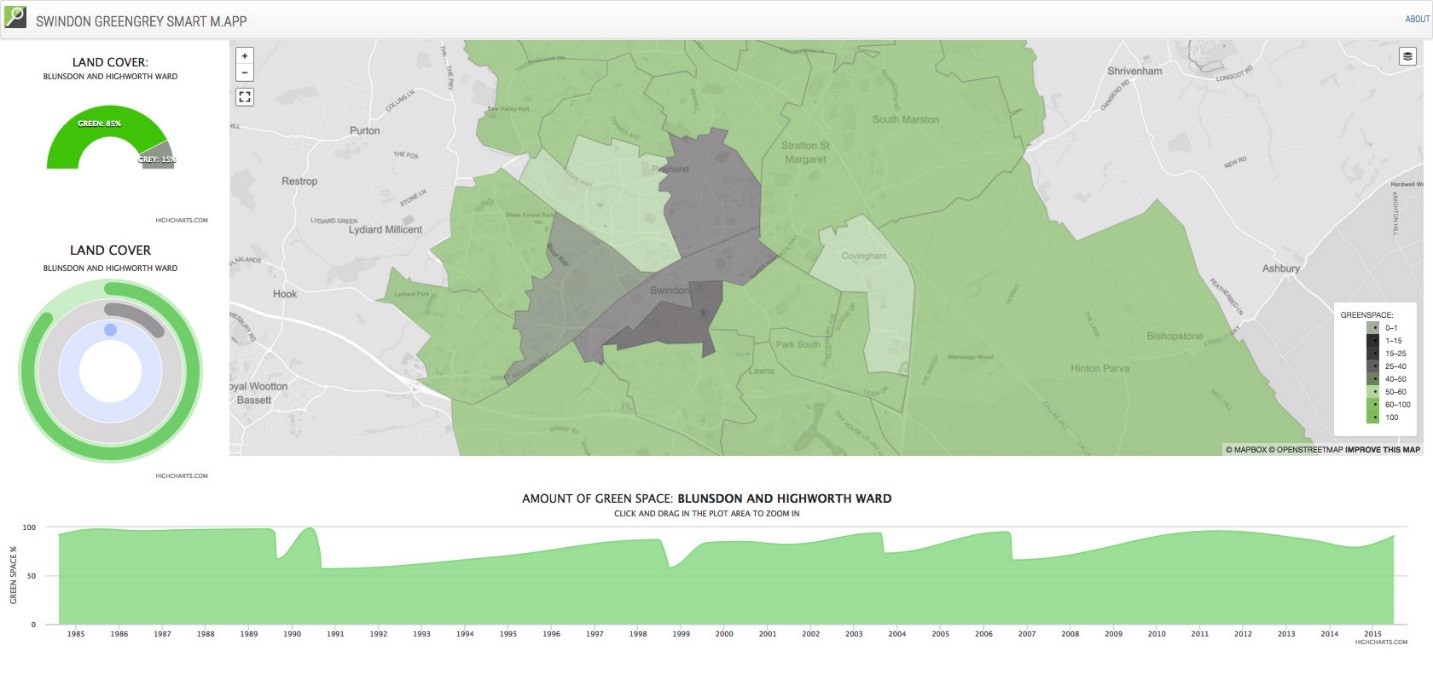

For example, Sentinel-2 data can be analyzed for greenness. The human eye sees red, green, and blue light, but satellites see a wider spectrum. Healthy, photosynthesizing plants reflect more near-infrared light, so we can monitor how healthy plants are and how much greenery is in a city by running an algorithm that measures the greenness of an area.
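To make the idea concrete, here is a minimal sketch of one widely used greenness measure, the Normalized Difference Vegetation Index (NDVI), which compares near-infrared and red reflectance. This post does not say which algorithm Sterling Geo actually uses, so treat NDVI as an assumption chosen for illustration. The function names, the tiny sample grids, and the 0.3 "vegetated" threshold are likewise hypothetical; with Sentinel-2, the near-infrared and red values would typically come from bands B08 and B04.

```python
def ndvi(nir, red):
    """NDVI for a single pixel: (NIR - Red) / (NIR + Red), in the range [-1, 1]."""
    total = nir + red
    if total == 0:
        # No signal in either band; report 0.0 rather than dividing by zero.
        return 0.0
    return (nir - red) / total


def greenness_summary(nir_band, red_band, threshold=0.3):
    """Return (mean NDVI, fraction of pixels above threshold) for two 2-D grids.

    nir_band and red_band are lists of rows of reflectance values with the
    same shape. The threshold is an illustrative cut-off, not a standard.
    """
    values = [
        ndvi(n, r)
        for nir_row, red_row in zip(nir_band, red_band)
        for n, r in zip(nir_row, red_row)
    ]
    green_pixels = sum(1 for v in values if v > threshold)
    return sum(values) / len(values), green_pixels / len(values)


# Toy 2x2 scene: three vegetated pixels and one bare or paved pixel.
nir = [[0.50, 0.45], [0.10, 0.30]]
red = [[0.10, 0.05], [0.10, 0.10]]

mean_ndvi, green_fraction = greenness_summary(nir, red)
print(f"mean NDVI: {mean_ndvi:.2f}, vegetated fraction: {green_fraction:.2f}")
```

Running the same summary over scenes from different dates would give a simple time series of how green an area is, which is the kind of comparison described in this section.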

By connecting this algorithm to Sentinel-2 and Landsat data, you can watch how your city has evolved over time in terms of greenness, the general health of a city based on open space, such as parks. Knowing this information allows city leaders to understand if they have paved over too many gardens and parks or where they may need to have more houses or bigger roads. A pilot service of this has now been deployed to numerous local government bodies within a UK Space Agency Programme called Space for Smarter Government.

Being forward-thinking allows companies to solve challenges throughout the world with existing data. The cloud allows them to focus on their mission, without having to worry about where the data is coming from. Leveraging the compute power of AWS gives organizations what they need to make decisions quickly and at a low cost. Andy said, “The good news is, this is not 10 years down the road. These possibilities can be realized today.”