Category: Nonprofit

Launch of the AWS Asia Pacific Region: What Does it Mean for our Public Sector Customers?

With the launch of the AWS Asia Pacific (Mumbai) Region, India-based developers and organizations, as well as multinational organizations with end users in India, can achieve their mission and run their applications in the AWS Cloud by securely storing and processing their data with single-digit millisecond latency across most of India.

The new Mumbai Region consists of two Availability Zones (AZs) at launch. Each AZ includes one or more geographically distinct data centers, each with redundant power, networking, and connectivity, and is designed to be resilient to issues in another AZ. This enables customers to operate production applications and databases that are more highly available, fault tolerant, and scalable than would be possible from a single data center.

The Mumbai Region supports Amazon Elastic Compute Cloud (EC2) (C4, M4, T2, D2, I2, and R3 instances are available) and related services including Amazon Elastic Block Store (EBS), Amazon Virtual Private Cloud, Auto Scaling, and Elastic Load Balancing.

In addition, AWS India supports three edge locations – Mumbai, Chennai, and New Delhi. The locations support Amazon Route 53, Amazon CloudFront, and S3 Transfer Acceleration. AWS Direct Connect support is available via our Direct Connect Partners. For more details, please see the official release here and Jeff Barr’s post here.

To celebrate the launch of the new region, we hosted a Leaders Forum that brought together over 150 government and education leaders to discuss how cloud computing and the expansion of our global infrastructure have the potential to change the way the governments and educators of India design, build, and run their IT workloads.

The speakers shared their insights gained from our 10-year cloud journey, trends on adoption of cloud by our customers globally, and our experience working with government and education leaders around the world.

Please see pictures from the event below.


AWS Public Sector Summit – Washington DC Recap

The AWS Public Sector Summit in Washington DC brought together over 4,500 attendees to learn from top cloud technologists in the government, education, and nonprofit sectors.

Day 1

The Summit began with a keynote address from Teresa Carlson, VP of Worldwide Public Sector at AWS. During her keynote, she announced that AWS now has over 2,300 government customers, 5,500 educational institutions, and 22,000 nonprofits and NGOs. AWS GovCloud (US) usage has also grown 221% year-over-year since launch in Q4 2011. To continue to help our customers meet their missions, AWS announced the AWS Government Competency Program, the AWS GovCloud (US) Skill Program, the AWS Educate Starter Account, and the AWS Marketplace for the U.S. Intelligence Community during the Summit. See more details about each announcement below.

- AWS Government Competency Program: AWS Government Competency Partners have deep experience working with government customers to deliver mission-critical workloads and applications on AWS. Customers working with AWS Government Competency Partners will have access to innovative, cloud-based solutions that comply with the highest AWS standards.

- AWS GovCloud (US) Skill Program: The AWS GovCloud (US) Skill Program provides customers with the ability to readily identify APN Partners with experience supporting workloads in the AWS GovCloud (US) Region. The program identifies APN Consulting Partners with experience in architecting, operating and managing workloads in GovCloud, and APN Technology Partners with software products that are available in AWS GovCloud (US).

- AWS Educate Starter Account: AWS Educate announced the AWS Educate Starter Account, which gives students more options when joining the program and does not require a credit card or payment option. The AWS Educate Starter Account provides the same training, curriculum, and technology benefits as the standard AWS Account.

- AWS Marketplace for the U.S. Intelligence Community: We have launched the AWS Marketplace for the U.S. Intelligence Community (IC) to meet the needs of our IC customers. The AWS Marketplace for the U.S. IC makes it easy to discover, purchase, and deploy software packages and applications from vendors with a strong presence in the IC in a cloud that is not connected to the public Internet.

Watch the full video of Teresa’s keynote here.

Teresa was then joined onstage by three other female IT leaders: Deborah W. Brooks, Co-Founder & Executive Vice Chairman, The Michael J. Fox Foundation for Parkinson’s Research; LaVerne H. Council, Chief Information Officer, Department of Veterans Affairs; and Stephanie von Friedeburg, Chief Information Officer and Vice President, Information and Technology Solutions, The World Bank Group.

Each of the speakers addressed how their organization is paving the way for disruptive innovation. Whether it was using the cloud to eradicate extreme poverty, serving and honoring veterans, or fighting Parkinson’s Disease, each demonstrated patterns of innovation that help make the world a better place through technology.

The day 1 keynote ended with the #Smartisbeautiful call to action encouraging everyone in the audience to mentor and help bring more young girls into IT.

Day 2

The second day’s keynote included a fireside chat with Andy Jassy, CEO of Amazon Web Services. Watch the Q&A here.

Andy addressed the crowd and shared Amazon’s passion for public sector, the expansion of AWS regions around the globe, and the customer-centric approach AWS has taken to continue to innovate and address the needs of our customers.

We also announced the winners of the third City on a Cloud Innovation Challenge, a global program to recognize local and regional governments and developers that are innovating for the benefit of citizens using the AWS Cloud. See a list of the winners here.

Videos, slides, photos, and more

AWS Summit materials are now available online for your reference and to share with your colleagues. Please view and download here:

- Watch the Day 1 Keynote featuring Teresa Carlson, VP of Worldwide Public Sector, AWS, The Department of Veterans Affairs, The World Bank Group, and The Michael J. Fox Foundation

- Watch the Day 2 Fireside Chat with Andy Jassy, CEO of AWS

- View and download slides from the breakout sessions

- Read the event press coverage

- Access the videos from the two-day event – with more videos coming soon

We hope to see you next year at the AWS Public Sector Summit in Washington, DC on June 13-14, 2017.

Call for Computer Vision Research Proposals with New Amazon Bin Image Data Set

Amazon Fulfillment Centers are bustling hubs of innovation that allow Amazon to deliver millions of products to over 100 countries worldwide with the help of robotic and computer vision technologies. Today, the Amazon Fulfillment Technologies team is releasing the Amazon Bin Image Data Set, which is made up of over 1,000 images of bins inside an Amazon Fulfillment Center. Each image is accompanied by metadata describing the contents of the bin in the image. The Amazon Bin Image Data Set can now be accessed by anyone as an AWS Public Data Set. This is an interim, limited release of the data. Several hundred thousand images will be released by the fall of 2016.

Call for Research Proposals

The Amazon Academic Research Awards (AARA) program is soliciting computer vision research proposals for the first time. The AARA program funds academic research and related contributions to open source projects by top academic researchers throughout the world.

Proposals can focus on any relevant area of computer vision research. There is particular interest in recognition research, including, but not limited to, large scale, fine-grained instance matching, apparel similarity, counting items, object detection and recognition, scene understanding, saliency and segmentation, real-time detection, image captioning, question answering, weakly supervised learning, and deep learning. Research that can be applied to multiple problems and data sets is preferred and we specifically encourage submissions that could make use of the Amazon Bin Image Data Set from Amazon Fulfillment Technologies. We expect that future calls will focus on other topics.

Awards Structure and Process

Awards are structured as one-year unrestricted gifts to academic institutions, and can include up to 20,000 USD in AWS Promotional Credits. Though the funding is not extendable, applicants can submit new proposals for subsequent calls.

Project proposals are reviewed by an internal awards panel and the results are communicated to the applicants approximately 2.5 months after the submission deadline. Each project will also be assigned an Amazon computer vision researcher contact. The researchers are encouraged to maintain regular communication with the contact to discuss ongoing research and progress made on the project. The researchers are also encouraged to publish the outcome of the project and commit any related code to open source code repositories.

Learn more about the submission requirements, mechanism, and deadlines for the project proposals here. Applications (and questions) should be submitted to aara-submission@amazon.com as a single PDF file by October 1, 2016. Please include [AARA 2016 CV Submission] in the subject line. The awards panel will announce its decisions about 2.5 months later.

IRS 990 Filing Data Now Available as an AWS Public Data Set

We are excited to announce that over one million electronic IRS 990 filings are available via Amazon Simple Storage Service (Amazon S3). Filings from 2011 to the present are currently available and the IRS will add new 990 filing data each month.

Form 990 is the form used by the United States Internal Revenue Service (IRS) to gather financial information about nonprofit organizations. By making electronic 990 filing data available, the IRS has made it possible for anyone to programmatically access and analyze information about individual nonprofits or the entire nonprofit sector in the United States. Users can also analyze the data in the cloud without having to download or store it themselves, which lowers the cost of product development and accelerates analysis.

Each electronic 990 filing is available as a unique XML file in the “irs-form-990” S3 bucket in the AWS US East (N. Virginia) region. Information on how the data is organized and what it contains is available on the IRS 990 Filings on AWS Public Data Set landing page.

Users of the data can easily access individual XML files or write scripts to organize 990 data into a database using Amazon Relational Database Service (Amazon RDS) or into a data warehouse using Amazon Redshift. Amazon Elastic MapReduce (Amazon EMR) can also be used to quickly process the entire set of filings for analysis.
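As a rough illustration of the scripting approach described above, the following Python sketch parses a single filing with the standard library and pulls out a few header fields. The embedded XML is a heavily simplified, hypothetical stand-in for a real filing, and the namespace and element names used here (`ReturnHeader`, `Filer`, `EIN`, `TaxYr`, `BusinessNameLine1Txt`) are assumptions; check them against the documentation on the landing page before relying on them.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified stand-in for one electronic 990 filing.
# Real filings are far larger and their schema varies by tax year.
SAMPLE_FILING = """<Return xmlns="http://www.irs.gov/efile">
  <ReturnHeader>
    <TaxYr>2014</TaxYr>
    <Filer>
      <EIN>000000000</EIN>
      <BusinessName>
        <BusinessNameLine1Txt>Example Nonprofit Inc</BusinessNameLine1Txt>
      </BusinessName>
    </Filer>
  </ReturnHeader>
</Return>"""

# Assumed XML namespace for the filing elements.
NS = {"irs": "http://www.irs.gov/efile"}


def summarize_filing(xml_text):
    """Extract a few header fields from one filing; missing fields become None."""
    root = ET.fromstring(xml_text)

    def text_at(path):
        node = root.find(path, NS)
        if node is None or node.text is None:
            return None
        return node.text.strip()

    return {
        "ein": text_at("irs:ReturnHeader/irs:Filer/irs:EIN"),
        "tax_year": text_at("irs:ReturnHeader/irs:TaxYr"),
        "name": text_at(
            "irs:ReturnHeader/irs:Filer/irs:BusinessName/irs:BusinessNameLine1Txt"
        ),
    }


summary = summarize_filing(SAMPLE_FILING)
```

Rows shaped like `summary` could then be loaded into a database table or written out for bulk processing; that step is left out because it depends on the tools you choose.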

Collaborating with the IRS allows us to improve access to this valuable data. Making machine-readable data available in bulk on Amazon S3 is an efficient way to empower a variety of users to analyze the data using whatever tools or services they prefer. We look forward to seeing what new services people are able to create to analyze the 990 filing data available on Amazon S3.

Looking Deep into our Universe with AWS

The International Centre for Radio Astronomy Research (ICRAR) in Western Australia has recently announced a new scientific finding using innovative data processing and visualization techniques developed on AWS. Astronomers at ICRAR have been involved in the detection of radio emissions from hydrogen in a galaxy more than 5 billion light years away. This is almost twice the previous record for the most distant hydrogen emissions observed, and has important implications for understanding how galaxies have evolved over time.

Figure 1: The Very Large Array on the Plains of San Agustin Credit: D. Finley, NRAO/AUI/NSF

Working with large and fast-growing data sets like these requires new scalable tools and platforms that ICRAR is helping to develop for the astronomy community. The Data Intensive Astronomy (DIA) program at ICRAR, led by Dr. Andreas Wicenec, used AWS to experiment with new methods of analyzing and visualizing data coming from the Very Large Array (VLA) at the National Radio Astronomy Observatory in New Mexico. AWS has enabled the DIA team to quickly prototype new data processing pipelines and visualization tools without spending millions in precious research funds that are better spent on astronomers than computers. As data volumes grow, the team can scale their processing and visualization tools to accommodate that growth. In this case, the DIA team prototyped and built a platform to process tens of terabytes of raw data and reduce this data to a more manageable size. They then made that data available in what they refer to as a ‘data cube.’

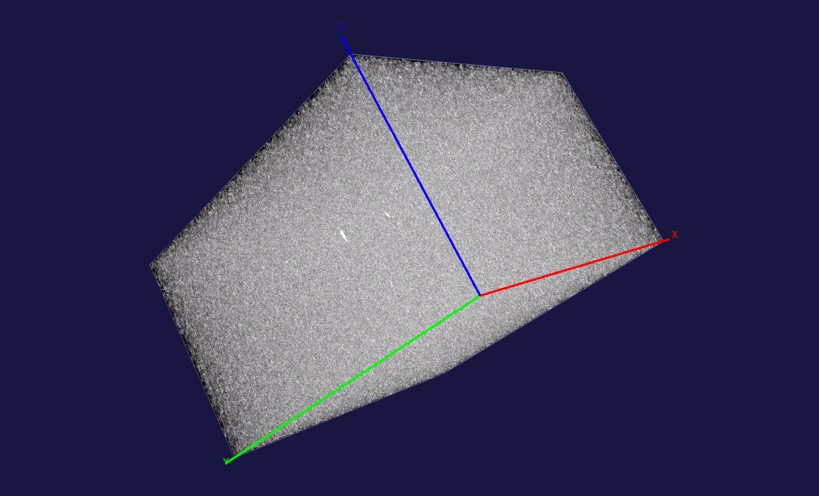

Figure 2: An image of the full data cube constructed by ICRAR

This image shows the full data cube constructed by the team at ICRAR. The cube provides a data visualization model that allows astronomers, like Dr. Attila Popping and his team at ICRAR, to search in either space or time through large images created from observations made with the VLA. Astronomers can interact with the data cube in real-time and stream it to their desktop. Having access to the full data set like this in an interactive fashion makes it possible to find new objects of interest that would otherwise not be seen or would be much more difficult to find.

ICRAR estimates that the amount of network, compute, and storage capacity required to shift and crunch this data would otherwise have made this work infeasible. By using AWS, they were able to quickly and cheaply build their new pipelines, and then scale them as massive amounts of data arrived from their instruments. They used the Amazon Elastic Compute Cloud (Amazon EC2) Spot market, accessing AWS’s spare capacity at 50-90% less than the standard on-demand pricing, thereby reducing costs even further and leading the way for many researchers as they look to leverage AWS in their own fields of research. When super-science projects like the Square Kilometer Array (SKA) come online, they’ll be ready. Without the cloud to enable their experiments, they would still be investigating how to do the experiments, instead of actually conducting them.

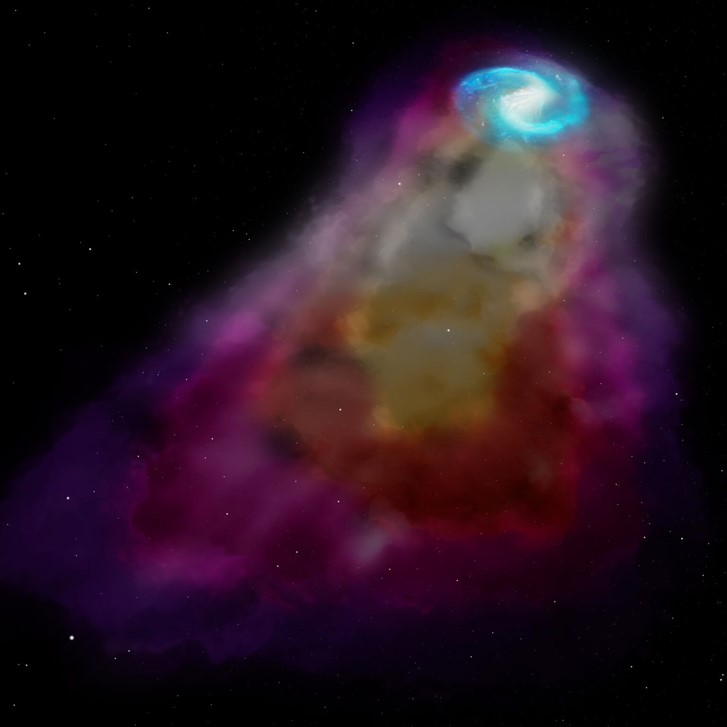

We are privileged to work with customers who are pushing the boundaries of science using AWS, and expanding our understanding of the universe at the same time. In Figure 3, you can see an artist’s impression of the newly discovered hydrogen emissions from a galaxy more than 5 billion light years away from Earth. To learn more about the work performed by the ICRAR team, please read their media release.

Figure 3: An artist’s impression of the discovered hydrogen emissions

To learn more about how customers are using AWS for Scientific Computing, please visit our Scientific Computing on AWS website.

Images provided by and used with permission of the International Centre for Radio Astronomy Research.

Alces Flight: Build your self-service supercomputer in minutes

We are excited to announce that Alces Flight has made hundreds of science and HPC applications available in the AWS Marketplace, making it easy for any researcher to spin up any size High Performance Computing (HPC) cluster in the AWS Cloud.

In the past, researchers often had to wait in line for the computing power for scientific discovery. With Alces Flight, you can take your compute projects from 0 to 60 in a few minutes. Researchers and scientists can quickly spin up multiple nodes with pre-installed compilers, libraries, and hundreds of scientific computing applications. Flight comes with a catalog of more than 750 built-in scientific computing applications, libraries and versions, covering nearly everything from engineering, chemistry and genomics, to statistics and remote sensing.

After designing and managing hundreds of HPC workflows for national and academic supercomputing centers in the United Kingdom, Alces built and validated HPC workflows tailored to researchers and automated applications built for supercomputing centers. They are now making that catalog available in the AWS Marketplace.

Take Flight today with AWS & Alces Flight

The Alces Flight portfolio of Software-as-a-Service (SaaS) offerings provides researchers with on-demand HPC clusters or ‘self-service supercomputers’ in minutes. With hundreds of science and HPC apps available in the AWS Marketplace, Alces Flight helps you automatically create a research-ready environment that complements Amazon Elastic Block Store (EBS), Amazon Simple Storage Service (Amazon S3), and Amazon Elastic Compute Cloud (Amazon EC2) resources in the AWS Cloud.

Researchers get:

- Instant access to a popular catalog of hundreds of HPC and scientific computing apps that rival those available at supercomputing centers.

- Access to HPC clusters available for use any time, from anywhere, at virtually any scale, and at the lowest possible cost with Amazon EC2 Spot Instances pricing.

- Instant on-demand access to powerful scientific computing tools that make global collaboration easy.

Read more about this announcement on Jeff Barr’s blog here. To get started, see the Alces Flight portfolio of apps in the AWS Marketplace.

The Evolution of High Performance Computing: Architectures and the Cloud

A guest blog by Jeff Layton, Principal Tech, AWS Public Sector

In High Performance Computing (HPC), users are performing computations that no one ever thought would be considered. For example, there are researchers performing a statistical analysis of the voting records of the Supreme Court, sequencing genomes of humans, plants, and animals, creating deep learning networks for object and facial recognition so that cars and Unmanned Aerial Vehicles (UAVs) can guide themselves, searching for new planets in the galaxy, looking for trends in human behavioral patterns, analyzing social patterns in user habits, targeting advertisement development and placement, and thousands of other applications.

From lotions to aircraft, the products and services that are connected with HPC touch us each and every day, and we often don’t even realize it.

A great number of these applications come from the use of the massive amount of data that has been collected and stored. This is true of classic HPC applications as well as new HPC applications, such as deep learning, that need massive data sets for learning and large data sets for testing the model. These are very data-driven applications and their scale is getting larger every day.

A key feature of this “new” HPC is that it needs to be flexible and scalable to accommodate these new applications and the associated sea of data. New applications and algorithms are developed each year and their characteristics can vary widely, resulting in the need for increasingly diverse hardware support and new software architectures.

The cloud allows users to dynamically create architectures as they are needed, using the right amount of compute power (CPU or GPU), network, databases, data storage, and analysis tools. Rather than the classic model of fitting the application software to the hardware, the cloud allows the application software to define the infrastructure.

The cloud has a number of capabilities that map to the evolving nature of HPC, including:

- Scale and Elasticity

- Code as Infrastructure

- Ability to experiment

Scale and Elasticity

Thousands upon thousands of compute resources, massive storage capacity, and high-performance network resources are available worldwide via the cloud.

Combining scale and elasticity creates a capability for HPC cloud users that doesn’t exist for centralized shared HPC resources. If resources can be provisioned and scaled as needed and there is a large pool of resources, then waiting in job queues is a thing of the past. Each HPC user in the cloud can have access to their own set of HPC resources, such as compute, networking, and storage resources for their own specific applications, with no need to share the resources with other users. They have zero queue time and can create the architectures that their applications need.

Code as Infrastructure

Cloud computing also features the ability to build or assemble architectures or systems using only software (code), in which software serves as the template for provisioning hardware. Instead of having to assemble physical hardware in a specific location and manage such things as cabling, cabling labels, switch configuration, router software, and patching, HPC in the cloud allows the various components to be specified by writing a small amount of code, making it easy to expand or contract or even re-architect on-the-fly.

Code as infrastructure addresses the classic HPC problem of inflexible hardware and architecture. However, if a classic cluster architecture is needed, then that can be easily created in the cloud. If a different application needs a Hadoop architecture or perhaps a Spark architecture, then those too can be created. Only the software changes.
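To make the idea concrete, here is a minimal sketch in Python that generates a CloudFormation-style template for a group of identical compute nodes. The function name, the resource naming scheme, and the `ami-EXAMPLE` image ID are placeholders invented for illustration. The sketch only builds the JSON document; it does not deploy anything, and a real cluster would also need networking, storage, and a scheduler.

```python
import json


def build_cluster_template(node_count, instance_type, image_id):
    """Return a CloudFormation-style template with `node_count` identical nodes."""
    resources = {}
    for i in range(node_count):
        # One EC2 instance resource per compute node.
        resources[f"ComputeNode{i}"] = {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": instance_type,
                "ImageId": image_id,  # placeholder AMI ID, not a real image
            },
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


# Re-architecting is a matter of changing arguments, not re-cabling hardware.
small_cluster = build_cluster_template(2, "c4.large", "ami-EXAMPLE")
large_cluster = build_cluster_template(16, "c4.8xlarge", "ami-EXAMPLE")

template_json = json.dumps(small_cluster, indent=2)
```

Because the architecture lives in a function call, expanding from two nodes to sixteen, or switching instance types for a different application, changes only the software.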

Ability to Experiment

As HPC continues to evolve, new applications are being developed that take advantage of experimentation, test, and iteration. These applications may involve new architectures or even re-thinking how the applications are written (re-interpretation). Having access to modular, fungible resources as a set of building blocks that can be configured and reconfigured as-needed is crucial for this new approach.

This will become even more important as HPC moves forward because the new wave of applications is heavily oriented toward massive data. Pattern recognition, machine learning, and deep learning are examples of these new applications, and being able to create new architectures will allow these applications to flourish and develop based on the scale and flexibility of the cloud and corresponding economics.

See how HPC is used for open data and scientific computing here: www.aws.amazon.com/scico and www.aws.amazon.com/opendata. And check out Jeff’s previous blog The Evolution of High Performance Computing.

Rapidly Recover Mission-Critical Systems in a Disaster

Due to common hardware and software failures, human errors, and natural phenomena, disasters are inevitable, but IT infrastructure loss shouldn’t be. With the AWS cloud, you can rapidly recover mission-critical systems while optimizing your Disaster Recovery (DR) budget.

Thousands of public sector customers, like St Luke’s Anglican School in Australia and the City of Asheville in North Carolina, rely on AWS to enable faster recovery of their on-premises IT systems without unnecessary hardware, power, bandwidth, cooling, space, and administration costs associated with managing duplicate data centers for DR.

The AWS cloud lets you back up, store, and recover IT systems in seconds by supporting popular DR approaches from simple backups to hot standby solutions that failover at a moment’s notice. And with 12 regions (and 5 more coming this year!) and multiple AWS Availability Zones (AZs), you can recover from disasters anywhere, any time. The following figure shows a spectrum for the four scenarios, arranged by how quickly a system can be available to users after a DR event.

These four scenarios include:

- Backup and Restore – This simple and low cost DR approach backs up your data and applications from anywhere to the AWS cloud for use during recovery from a disaster. Unlike conventional backup methods, data is not backed up to tape. Amazon Elastic Compute Cloud (Amazon EC2) computing instances are only used as needed for testing. With Amazon Simple Storage Service (Amazon S3), storage costs are as low as $0.015/GB stored for infrequent access.

- Pilot Light – The idea of the pilot light is an analogy that comes from gas heating. In that scenario, a small flame that’s always on can quickly ignite the entire furnace to heat up a house. In this DR approach, you simply replicate part of your IT structure for a limited set of core services so that the AWS cloud environment seamlessly takes over in the event of a disaster. A small part of your infrastructure is always running and simultaneously syncing mutable data (such as databases or documents), while other parts of your infrastructure are switched off and used only during testing. Unlike a backup and recovery approach, you must ensure that your most critical core elements are already configured and running in AWS (the pilot light). When the time comes for recovery, you can rapidly provision a full-scale production environment around the critical core.

- Warm Standby – The term warm standby is used to describe a DR scenario in which a scaled-down version of a fully functional environment is always running in the cloud. A warm standby solution extends the pilot light elements and preparation. It further decreases the recovery time because some services are always running. By identifying your business-critical systems, you can fully duplicate these systems on AWS and have them always on.

- Multi-Site – A multi-site solution runs on AWS as well as on your existing on-site infrastructure in an active-active configuration. The data replication method that you employ will be determined by the recovery objectives that you choose: the Recovery Time Objective (the maximum allowable downtime before degraded operations are restored) and the Recovery Point Objective (the maximum allowable time window whereby you will accept the loss of transactions during the DR process).
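The two recovery objectives mentioned above can be checked with simple arithmetic after a DR drill. The following Python sketch is illustrative only: the function name, the timestamps, and the four-hour and six-hour targets are all hypothetical values chosen for the example.

```python
from datetime import datetime, timedelta


def evaluate_recovery(last_good_copy, failure, restored, rto, rpo):
    """Compare one DR drill against its recovery objectives."""
    data_loss_window = failure - last_good_copy  # work lost since the last copy
    downtime = restored - failure                # time until service returned
    return {
        "data_loss_window": data_loss_window,
        "downtime": downtime,
        "meets_rpo": data_loss_window <= rpo,
        "meets_rto": downtime <= rto,
    }


# Hypothetical drill: nightly backup at 02:00, failure at 09:30, restored at 11:00.
drill = evaluate_recovery(
    last_good_copy=datetime(2016, 6, 1, 2, 0),
    failure=datetime(2016, 6, 1, 9, 30),
    restored=datetime(2016, 6, 1, 11, 0),
    rto=timedelta(hours=4),
    rpo=timedelta(hours=6),
)
```

In this made-up drill the 1.5 hours of downtime meets the four-hour RTO, but the 7.5-hour gap since the last backup misses the six-hour RPO. A result like that would suggest moving from backup and restore toward an approach that replicates data more frequently.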

Learn more about using AWS for DR in this white paper. You can also learn about backup and restore architectures, including partner products and solutions that assist in backup, recovery, DR, and continuity of operations (COOP), at the AWS Public Sector Summit in Washington, DC on June 20-21, 2016. Learn more about the complimentary event and register here.

Bring Your Own Windows 7 Licenses for Amazon WorkSpaces

Guest post by Len Henry, Senior Solutions Architect, Amazon Web Services

Amazon WorkSpaces is our managed virtual desktop service in the cloud. You can easily provision cloud-based desktops and allow users to access your applications and resources from any supported device. The Bring Your Own Windows 7 Licenses (BYOL) feature of Amazon WorkSpaces furthers our commitment to providing you with lower costs and greater control of your IT resources.

If you hold Microsoft Volume Licenses and have tools and processes for managing Windows desktop solutions, you can reduce the cost of your WorkSpaces (up to 16% less per month) and use your existing desktop image for your WorkSpaces. Let’s get started.

Architectural Designs

Your WorkSpaces can access your on-premises resources when you extend your network into AWS. You can also extend your existing Active Directory into AWS. This white paper describes how you achieve connectivity and the images below take you through different points of connection.

Figure 1 Amazon WorkSpaces when using an AWS Directory Service and a VPN Connection

Figure 2 Amazon WorkSpaces when using an AWS Directory Service and a Direct Connect

As a part of the implementation, you will create a Dedicated VPC. You will also create a Dedicated Directory Service (the Dedicated Directory option will not be present until the WorkSpaces team enables the BYOL account). You can use Amazon WorkSpaces with your existing Active Directory or one of the AWS Directory Services.

You can extend your Active Directory into AWS by deploying additional Domain controllers into the AWS cloud or using our managed Directory Service’s AD Connector feature to proxy your existing Active Directory. We provide you with specific guidance on how to extend your on-premises network here. You can use our Directory Service to create three types of directories:

- Simple AD: Samba 4 powered Active Directory compatible directory in the cloud.

- Microsoft AD: Powered by Windows Server 2012 R2.

- AD Connector: Recommended for leveraging your on-premises Active Directory.

Your choice of Directory Service depends on the size of your Active Directory and your need for specific Active Directory features. Learn more here.

With BYOL, you use your 64 bit Windows 7 Desktop Image on hardware that is dedicated to you. We use your image to provision WorkSpaces and validate that it is compatible with our service.

Typical milestones (and suggested stakeholders) for your implementation:

You provide estimates to us of your initial and expected growth of active WorkSpaces. AWS selects resources for your WorkSpaces based on your needs. Your BYOL WorkSpaces are deployed on dedicated hardware to allow you to use your existing software license. Tools and AWS features include:

- OVA – You provide images for BYOL in the OVA industry standard format for Virtual Machines. You can use any of the following software to export to an OVA: Oracle VM VirtualBox, VMware vSphere, Microsoft System Center 2012 Virtual Machine Manager, and Citrix XenServer.

- VM Import – You will use VM Import through the AWS Command Line Interface (AWS CLI). You run the import-image command after your OVA has been uploaded to Amazon Simple Storage Service (Amazon S3).

- VPC Wizard – You will create several VPC resources for your BYOL VPC. The VPC Wizard can create your VPC and configure public/private subnets and even a hardware VPN.

- AWS Health Check Website – You can use this site to check if your local network meets the requirements for using WorkSpaces. You also get a suggestion for the region you should deploy your WorkSpaces in.

A proof of concept (POC) with public bundles will give your team experience using and supporting WorkSpaces. A POC can help verify your network, security, and other configurations. By submitting a base Windows 7 image, you reduce the likelihood of your customizations impacting on-boarding. You can customize your image after on-boarding and you can have regularly scheduled meetings with your AWS account team to make it easier to coordinate on your implementation.

With WorkSpaces, you can reduce the work necessary to manage a Virtual Desktop Infrastructure solution by automating it. This automation can help you to manage a large number of users. The WorkSpaces API provides you with commands for typical WorkSpaces use cases: creating a WorkSpace, checking the health of a WorkSpace, and rebooting a WorkSpace. You can use the WorkSpaces API to create a portal for managing your WorkSpaces or for user self-service.
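As one possible sketch of that kind of automation, the Python below finds WorkSpaces reporting an `UNHEALTHY` state in a directory and reboots them. It assumes a boto3 `workspaces` client is passed in and uses its `describe_workspaces` and `reboot_workspaces` calls; the helper names, the `d-EXAMPLE` directory ID, and the batch size of 25 per reboot request are assumptions made for illustration, so confirm the current limits and state values in the API reference.

```python
def find_unhealthy_workspaces(client, directory_id):
    """Return the IDs of WorkSpaces in a directory whose State is UNHEALTHY."""
    unhealthy = []
    kwargs = {"DirectoryId": directory_id}
    while True:
        page = client.describe_workspaces(**kwargs)
        for workspace in page.get("Workspaces", []):
            if workspace.get("State") == "UNHEALTHY":
                unhealthy.append(workspace["WorkspaceId"])
        token = page.get("NextToken")
        if not token:
            return unhealthy
        kwargs["NextToken"] = token  # fetch the next page of results


def reboot_workspaces(client, workspace_ids, batch_size=25):
    """Reboot WorkSpaces in batches; return any requests the service rejected."""
    failed = []
    for start in range(0, len(workspace_ids), batch_size):
        batch = workspace_ids[start:start + batch_size]
        response = client.reboot_workspaces(
            RebootWorkspaceRequests=[{"WorkspaceId": wid} for wid in batch]
        )
        failed.extend(response.get("FailedRequests", []))
    return failed


# Usage (requires boto3 and AWS credentials; the directory ID is a placeholder):
#   import boto3
#   client = boto3.client("workspaces")
#   ids = find_unhealthy_workspaces(client, "d-EXAMPLE")
#   failures = reboot_workspaces(client, ids)
```

Passing the client in as a parameter keeps the helpers easy to test with a stub, and the same pattern could sit behind a self-service portal.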

In order to ensure that you are ready to get started with BYOL, please reach out to your AWS account manager, solutions architect, or sales representative, or create a Technical Support case with Amazon WorkSpaces. Please contact us to get started using BYOL here.

Learn more about WorkSpaces and other enterprise applications at the complimentary AWS Public Sector Summit in Washington, DC June 20-21, 2016.

Highlights from the Nonprofit Technology Conference

A guest post by Victoria Anania, Communications and Development Coordinator, 501cTECH

From March 23rd-25th, the Nonprofit Technology Network (NTEN) held the Nonprofit Technology Conference in San Jose, California. It’s THE technology event of the year for the nonprofit sector. With over 2,000 nonprofit attendees and supporting technology exhibitors, it’s a once a year opportunity to bring together nonprofit staff from all over the country – and some as far away as the UK and China – to connect with one another, to learn from each other, and to discover new ways to use technology to impact social change.

With the generous support of Amazon Web Services, my colleague Marc Noel and I were fortunate enough to attend. We owe a huge thanks to them for their demonstrated commitment to supporting nonprofits and driving social change.

Up and Coming Trends

Following the conference, Marc and I caught up with Ash Shepherd, NTEN’s Education Director, who shared his insight on what was different at NTC this year and what direction the nonprofit sector seems to be going. Here are two big trends he noticed:

Shift in Data Focus: From a Big Push for Collecting Data to an Emphasis on Using Data

Over the last few years, organizations have focused on collecting as much data as possible. Data is great, but it needs to be evaluated and used in context. This year, there was an emphasis on:

- Data-driven storytelling

- Data-driven decision making

- Prioritizing what data is collected

What does this mean in an age when organizations can collect data on almost anything? I spoke with Chris Tuttle, a well-known thought leader in the “nonprofit tech” world and presenter at 16NTC, who had some helpful advice:

- Start with measurement, don’t collect data as an afterthought.

- Goals are vital, use analytics funnels to measure: how people find your content, how they engage, what actions people take and how you know those actions are completed.

- Vanity metrics, like registered users, downloads, and raw pageviews, don’t denote success, but they aren’t throwaway metrics – they help spot trends and understand how we might change our work in the moment.

Educational Presentations are Incorporating More Activities

This year, more than ever before, many sessions included hands-on activities. Recent professional programming is designed around the understanding that professionals don’t want to be spoon-fed information; they want to be practical. They are looking for ways to solve problems and become better at their jobs.

Activities during breakout sessions allowed attendees to:

- Share challenges they face at their jobs

- Immediately apply new knowledge they gained from the speakers

- Collaborate with peers to do strategic problem solving

This approach to professional development links back to the overarching goal of NTEN: to inspire nonprofit professionals to learn, connect, and impact change.

Great Resources

Want to keep learning about nonprofit technology? You can!

Social Media – Follow #16NTC on Twitter. This hashtag is still buzzing on Twitter weeks later. Full of lessons learned at breakout sessions, links to collaborative notes, and pictures of different events, it’s absolutely worth checking out.

Collaborative Notes – Every breakout session had a ready-to-go collaborative notes page where attendees could write down big takeaways to review later. They’re now available online!

Nonprofit Tech Clubs – Cities all over the US and Canada have tech clubs that are free, informal, volunteer-run groups of nonprofit professionals passionate about using technology to further their missions. They host regular events where professionals can get together to network and learn about the latest technology tools and trends. Sound interesting? Find your local tech club today! Marc and I are the organizers for the DC Tech Club and we’d love for you to attend one of our events if you’re in the DMV area! They’re usually held at the Amazon Web Services Washington, DC office near Union Station.