Category: Nonprofit

Global Nonprofit Leadership Summit Recap

The AWS Global Nonprofit Leadership Summit in London focused on how to achieve the United Nations’ sustainable development goals (SDGs) through technology.

We value and recognize the remarkable contributions that organizations make to improve the world. By hearing from nonprofits such as UNICEF, the American Heart Association, Conservation International, and many others, attendees were able to share technology approaches that have yielded improved outcomes, along with big ideas for projects or programs that could greatly advance achievement of the SDGs, which include reversing climate change, ensuring equality for all, and eliminating poverty.

Why does the cloud matter?

- The cloud helps pave the way for disruptive innovation – This gives nonprofits the agility to fail fast and to try many things, pick what works, and continue moving forward. We innovate to give our customers the capabilities and cost savings to achieve more mission for the money.

- The cloud also helps drive an innovative ecosystem – Our innovation extends to our partners, our customers, and the entire ecosystem that is touched by the approach and technology. Many nonprofit organizations depend on a broad network of volunteers, donors, and staff. As AWS innovates, we seek to help our customers innovate as well.

- The cloud is helping to make the world a better place – Our customers change the world through medical research, monitoring tools that protect endangered species, empowering underserved youth through technology education, and much more. In addition, AWS is helping to improve government and spur economic development around the world through open data and public/private partnership initiatives and by bringing services to places that might not otherwise receive them. The goal is to connect the private, public, and social sectors to share our strengths and do more, together.

Nonprofits are all in the business of improving the well-being of people and environmental ecosystems around the world. By using AWS’s inexpensive and highly scalable infrastructure technology to build websites, host core business and employee-facing systems, and manage outreach and fundraising, nonprofit organizations around the world can stop paying for computing power they aren’t using and focus their resources on their important work.

From issue advocacy to charitable causes, from health and welfare to education, nonprofit organizations use AWS to radically reduce infrastructure costs, build their capacity, and reduce waste. The common denominator for all discussions was technology, and session after session, we heard about how it touches and improves lives in areas such as green activism, animal conservation, fighting heart disease, and finding exploited children. Through engagement with the AWS Nonprofit team, we have helped guide nonprofits globally on new ways to leverage data analytics capabilities, such as homing in on specific human genomes to create personalized care with a higher success rate of healing and recovery, or crawling image data for clues to the location of abducted children. We have strategized with our customers and determined approaches for leveraging IoT sensors to monitor air quality and weather conditions that affect agricultural yields, and even to build smart donation solutions.

Partnerships in the Cloud: Bringing the big ideas

As part of the event, AWS committed $500,000 in AWS promotional credits to support the big ideas and new concepts brought forward by the nonprofits in attendance. AWS technical experts hosted office hours at the event to help the nonprofits build out their concepts and transform them into approaches, and will soon award the credits to these organizations.

Teresa Carlson, VP of Worldwide Public Sector at AWS, closed the event by encouraging the attending organizations to continue to collaborate with each other and share solutions. We intend to hold this event annually and we look forward to sharing the progress AWS and, most importantly, our customers have made in 2017.

“As we begin to see drastic changes in our climate and increasing levels of poverty and humanitarian crises around the world, AWS has taken action to ensure we are an active participant in sustainable development. We want to both be part of the solution and bring our resources to bear to enable others to reverse the compounding effects of the violence, hatred, and even indifference,” Teresa said.

World Elephant Day: Reaching the World with the Cloud

On August 12, 2012, the inaugural World Elephant Day was launched to bring attention to the urgent plight of Asian and African elephants. The elephant is loved, revered, and respected by people and cultures around the world, yet we balance on the brink of seeing the last of this magnificent creature.

Did you know that with the rate of ivory poaching and habitat conflict today, in 20-30 years there will be no wild elephants on the planet? Faced with these devastating statistics, World Elephant Day strives to bring awareness and education to people around the world through technology.

The Challenge: Build a scalable online presence to educate the world on elephant extinction

World Elephant Day began with a website and then expanded to include a social media presence, making it possible for their message to be accessed by anyone, no matter where they are in the world. But their challenge was: can the website scale to match the millions of users accessing the site on that one day each year? And if it can scale to those extremes, will it still be cost-effective for a nonprofit?

For the first two years, the traffic to the website was manageable, but as the issues escalated, so did incoming hits on the site. During the third year, they started to see significant growth, with over 30,000 users and 70,000 page views. This meant the campaign was making an impact, but their server and hosting capabilities weren’t going to scale to match. The site crashed, and for 6-8 hours no one was able to access it because of the large influx of traffic. After experimenting with different hosting platforms, they struggled with balancing costs, scale, and usability. The following year their reach tripled to over a billion impressions across social media, over 50,000 users, and more than 90,000 page views. The site was down for even longer than the year before, blocking people from accessing the site and making more donations.

Working with AWS

This year, the organization turned to AWS. An elastic, cost-efficient website with no downtime was a top priority for their grass-roots campaign. “After the last few years, we knew this couldn’t happen again. So we started to research where we could find the service and support to handle this type of traffic in concentrated periods, like elections and other fundraising campaigns. That’s when we learned about AWS,” Patricia Sims, founder of World Elephant Day, said. “In our research, it was consistent that AWS is the go-to service of choice.”

World Elephant Day has received support through the AWS nonprofit grants program. This year, we worked with World Elephant Day to keep their website up and running, with no downtime, in order to reach even the most remote places on the earth to celebrate World Elephant Day.

With a stable, responsive, and faster site, they were able to provide a better user experience and increase donations far above last year’s, helping solidify and improve their brand and message for elephant conservation, which they can continue to build on going forward.

“There is such power in the internet for us to reach out and make an impact in remote places, where organizations couldn’t have even dreamed possible years ago,” Patricia said. “One of the coolest things is that we saw a tiny village in northern India that suffers from issues with human-elephant conflict. They were able to take part in World Elephant Day and planted trees in and around crop areas to encourage elephants to not enter their village, but instead live safely outside it.”

Building a Microsoft BackOffice Server Solution on AWS with AWS CloudFormation

An AWS DevOps guest blog post by Bill Jacobi, Senior Solutions Architect, AWS Worldwide Public Sector

Last month, AWS released the AWS Enterprise Accelerator: Microsoft Servers on the AWS Cloud along with a deployment guide and CloudFormation template. This blog post will explain how to deploy complex Windows workloads and how AWS CloudFormation solves the problems related to server dependencies.

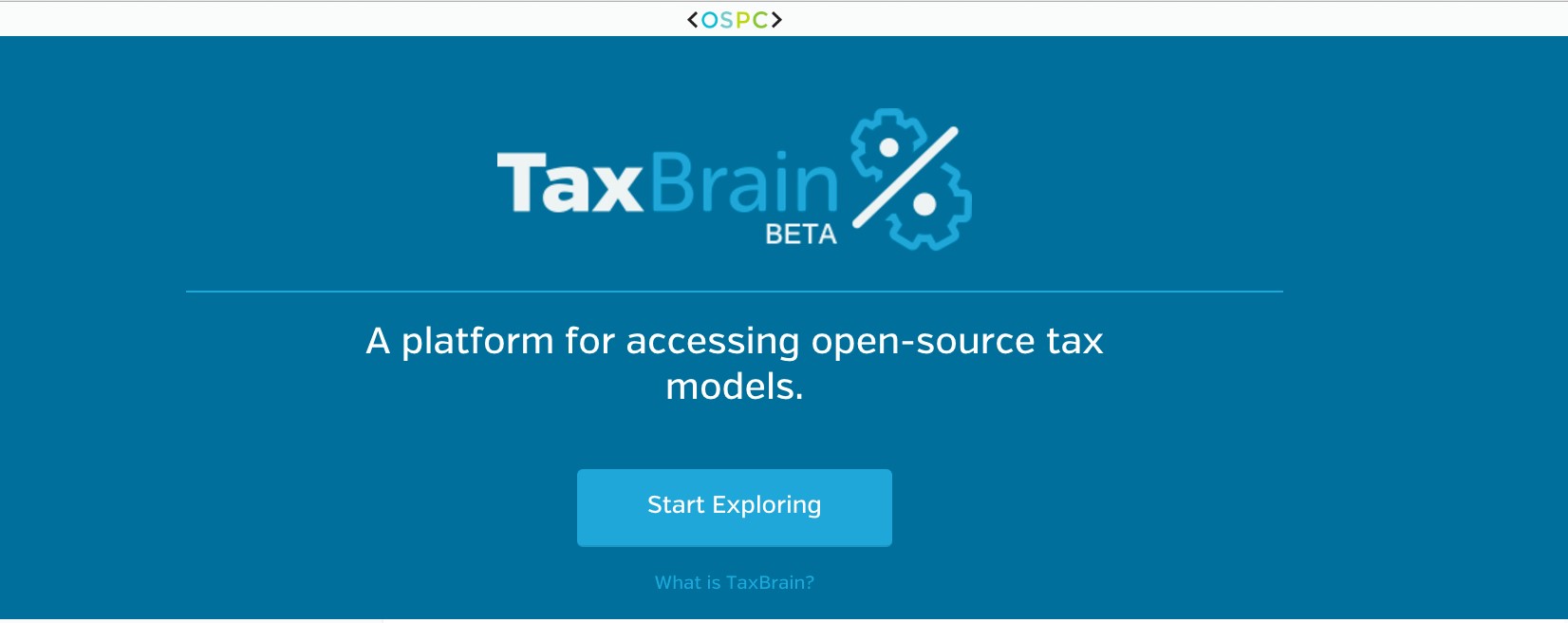

This AWS Enterprise Accelerator solution deploys the four most requested Microsoft servers (SQL Server, Exchange Server, Lync Server, and SharePoint Server) in a highly available multi-AZ architecture on AWS. It includes Active Directory Domain Services as the foundation. By following the steps in the solution, you can take advantage of the email, collaboration, communications, and directory features provided by these servers on the AWS IaaS platform.

There are a number of dependencies between the servers in this solution, including:

- Active Directory

- Internet access

- Dependencies within server clusters, such as needing to create the first server instance before adding additional servers to the cluster.

- Dependencies on AWS infrastructure, such as sharing a common VPC, NAT gateway, Internet gateway, DNS, routes, and so on.

The infrastructure and servers are built in three logical layers. The Master template orchestrates the stack builds, with one stack per Microsoft server, and manages inter-stack dependencies. Each of the CloudFormation stacks uses PowerShell to stand up the Microsoft servers at the OS level. Before it configures the OS, CloudFormation configures the AWS infrastructure required by each Windows server. Together, CloudFormation and PowerShell create a quick, repeatable deployment pattern for the servers. The solution supports 10,000 users, and its modularity at both the infrastructure and application level enables larger user counts.

Managing Stack Dependencies

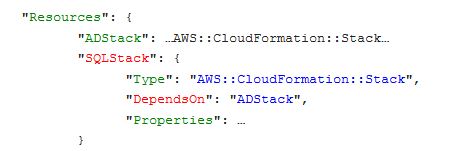

Here is how we enabled the dependencies between the stacks: SQLStack depends on ADStack because SQL Server depends on Active Directory, and, similarly, SharePointStack depends on SQLStack, both as required by Microsoft. Lync depends on Exchange because both servers must extend the AD schema independently. In Master, these server dependencies are coded in CloudFormation as follows:


The “DependsOn” statements in the stack definitions force the order of stack execution to match the diagram. Lower layers are executed and successfully completed before the upper layers. If you do not use “DependsOn”, CloudFormation will execute your stacks in parallel. An example of parallel execution is what happens after ADStack returns SUCCESS: the two higher-level stacks, SQLStack and ExchangeStack, are executed in parallel at the next level (layer 2). SharePoint and Lync are executed in parallel at layer 3. The arrows in the diagram indicate stack dependencies.
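As an illustration, the sketch below builds the Master template's nested stack resources as a Python dictionary that serializes to CloudFormation JSON, then groups the stacks into the layers shown in the diagram by following their DependsOn entries. The template file names and S3 URL are hypothetical, and a real template would also pass Parameters and other properties. The layer grouping is a simplified model for reading the diagram, not CloudFormation's scheduler: CloudFormation starts each resource as soon as its own dependencies complete, so SharePointStack can begin once SQLStack finishes even if ExchangeStack is still running.

```python
import json

# Hypothetical S3 prefix for the nested templates; substitute your own bucket.
TEMPLATE_BASE = "https://example-bucket.s3.amazonaws.com/templates"


def nested_stack(template_file, depends_on=()):
    """Build an AWS::CloudFormation::Stack resource, optionally with DependsOn."""
    resource = {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": f"{TEMPLATE_BASE}/{template_file}"},
    }
    if depends_on:
        # CloudFormation will not start this stack until every listed stack succeeds.
        resource["DependsOn"] = list(depends_on)
    return resource


master = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        "ADStack": nested_stack("ad.template"),
        "SQLStack": nested_stack("sql.template", ["ADStack"]),
        "ExchangeStack": nested_stack("exchange.template", ["ADStack"]),
        "SharePointStack": nested_stack("sharepoint.template", ["SQLStack"]),
        "LyncStack": nested_stack("lync.template", ["ExchangeStack"]),
    },
}


def execution_layers(resources):
    """Group resources into layers; each layer depends only on earlier layers."""
    remaining = {}
    for name, resource in resources.items():
        deps = resource.get("DependsOn", [])
        # DependsOn may be a single name or a list of names.
        remaining[name] = {deps} if isinstance(deps, str) else set(deps)
    done, layers = set(), []
    while remaining:
        ready = sorted(name for name, deps in remaining.items() if deps <= done)
        if not ready:
            raise ValueError("circular or unresolvable DependsOn chain")
        layers.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return layers


print(json.dumps(master["Resources"]["SharePointStack"], indent=2))
for level, stacks in enumerate(execution_layers(master["Resources"]), start=1):
    print(f"layer {level}: {', '.join(stacks)}")
```

Removing a DependsOn entry from this dictionary moves that stack into an earlier layer, which mirrors the parallel execution described above.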

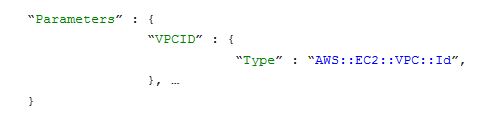

Passing Parameters Between Stacks

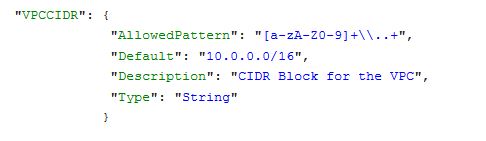

To see how to pass infrastructure parameters between the stack layers, consider an example in which we want to pass the same VPCCIDR to all of the stacks in the solution. VPCCIDR is defined as a parameter in Master as follows:

Master defines VPCCIDR and solicits user input for its value. That value is then passed to ADStack through an identically named and typed parameter declared in both Master and the stack being called.

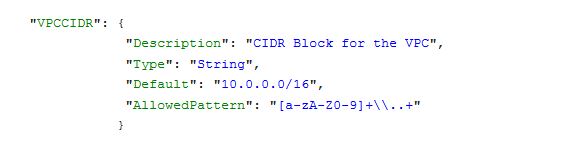

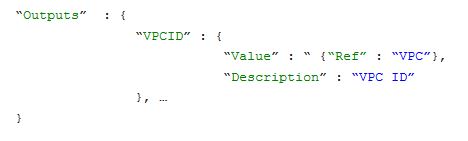
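A minimal sketch of the three pieces involved, expressed as Python dictionaries that serialize to CloudFormation JSON: the parameter declared in Master, the nested stack resource that forwards Master's value, and a matching parameter referenced from a security group inside ADStack. The default CIDR, template URL, LDAP ingress port, and the VPC resource name are assumptions for illustration.

```python
import json

# In Master: declare the parameter so users are prompted for it at launch.
master_parameters = {
    "VPCCIDR": {
        "Type": "String",
        "Default": "10.0.0.0/16",  # hypothetical default value
        "Description": "CIDR block for the VPC",
    }
}

# In Master: the nested ADStack resource forwards Master's value under the same name.
ad_stack_resource = {
    "Type": "AWS::CloudFormation::Stack",
    "Properties": {
        "TemplateURL": "https://example-bucket.s3.amazonaws.com/templates/ad.template",  # hypothetical
        "Parameters": {"VPCCIDR": {"Ref": "VPCCIDR"}},
    },
}

# In ADStack: an identically named and typed parameter receives the value...
ad_parameters = {"VPCCIDR": {"Type": "String"}}

# ...and any resource can use it, e.g. an LDAP ingress rule on DomainController1SG.
domain_controller1_sg = {
    "Type": "AWS::EC2::SecurityGroup",
    "Properties": {
        "GroupDescription": "Domain controller 1",
        "VpcId": {"Ref": "VPC"},  # assumes ADStack defines a VPC resource named VPC
        "SecurityGroupIngress": [
            {"IpProtocol": "tcp", "FromPort": 389, "ToPort": 389,
             "CidrIp": {"Ref": "VPCCIDR"}}
        ],
    },
}

print(json.dumps(ad_stack_resource["Properties"]["Parameters"]))
```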

After Master defines VPCCIDR, ADStack can use “Ref”: “VPCCIDR” in any resource (such as the security group, DomainController1SG) that needs the VPC CIDR range of the first domain controller. Instead of passing commonly named parameters between stacks, another option is to pass outputs from one stack as inputs to the next. For example, if you want to pass VPCID between two stacks, you could accomplish this as follows. Create an output like VPCID in the first stack:

In the second stack, create a parameter with the same name and type:

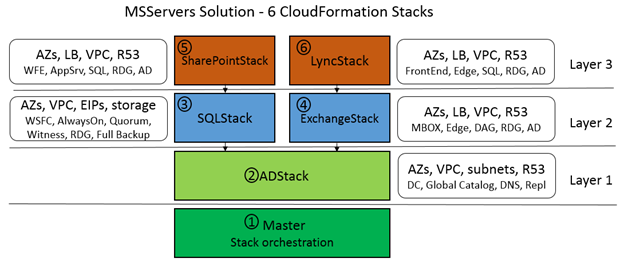
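For the outputs-to-inputs option, the first stack declares an output, the second declares a parameter with the same name, and Master connects the two with Fn::GetAtt on the nested stack's Outputs.VPCID attribute. In this sketch the stack names FirstStack and SecondStack, the VPC resource name, and the template URL are hypothetical; AWS::EC2::VPC::Id is a built-in CloudFormation parameter type that validates the value as an existing VPC ID.

```python
import json

# First stack: publish the ID of the VPC it creates.
first_stack_outputs = {
    "VPCID": {
        "Description": "ID of the VPC created by this stack",
        "Value": {"Ref": "VPC"},  # assumes a VPC resource named VPC
    }
}

# Second stack: accept the value through a parameter with the same name.
second_stack_parameters = {"VPCID": {"Type": "AWS::EC2::VPC::Id"}}

# Master: read FirstStack's output and feed it to SecondStack.
# Fn::GetAtt also creates an implicit dependency, so SecondStack waits for FirstStack.
second_stack_resource = {
    "Type": "AWS::CloudFormation::Stack",
    "Properties": {
        "TemplateURL": "https://example-bucket.s3.amazonaws.com/templates/second.template",  # hypothetical
        "Parameters": {"VPCID": {"Fn::GetAtt": ["FirstStack", "Outputs.VPCID"]}},
    },
}

print(json.dumps(second_stack_resource["Properties"]["Parameters"], indent=2))
```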

Managing Dependencies Between Resources Inside a Stack

All of the dependencies so far have been between stacks. Another type of dependency is one between resources within a stack. In the Microsoft servers case, an example of an intra-stack dependency is the need to create the first domain controller, DC1, before creating the second domain controller, DC2.

DC1, like many cluster servers, must be fully created first so that it can replicate common state (domain objects) to DC2. In the case of the Microsoft servers in this solution, all of the servers require that a single server (such as DC1 or Exch1) must be fully created to define the cluster or farm configuration used on subsequent servers.

Here’s another intra-stack dependency example: The Microsoft servers must fully configure the Microsoft software on the Amazon EC2 instances before those instances can be used. So there is a dependency on software completion within the stack after successful creation of the instance, before the rest of stack execution (such as deploying subsequent servers) can continue. These intra-stack dependencies like “software is fully installed” are managed through the use of wait conditions. Wait conditions are resources just like EC2 instances that allow the “DependsOn” attribute mentioned earlier to manage dependencies inside a stack. For example, to pause the creation of DC2 until DC1 is complete, we configured the following “DependsOn” attribute using a wait condition. See (1) in the following diagram:

The WaitCondition (2) depends on a CloudFormation resource called a WaitHandle (3), which receives a SUCCESS or FAILURE signal from the creation of the first domain controller:

SUCCESS is signaled in (4) by cfn-signal.exe --exit-code 0 during the “finalize” step of DC1, which enables CloudFormation to execute DC2 as an EC2 resource via the wait condition.

If the timeout had been reached in step (2), this would have automatically signaled a FAILURE and stopped stack execution of ADStack and the Master stack.
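A minimal sketch of the wait-condition pattern, with the numbered steps from the text marked in the comments. Resource names such as DomainController1WaitHandle, the one-hour Timeout, and the omitted instance properties are assumptions; the DomainController1 instance resource is assumed to be defined elsewhere in the template, and in practice the signal command would run from DC1's final configuration step (for example, a cfn-init command).

```python
import json

resources = {
    # (3) The handle resolves to a presigned URL that cfn-signal posts its result to.
    "DomainController1WaitHandle": {"Type": "AWS::CloudFormation::WaitConditionHandle"},
    # (2) The wait condition blocks until Count success signals arrive or Timeout expires.
    "DomainController1WaitCondition": {
        "Type": "AWS::CloudFormation::WaitCondition",
        "DependsOn": "DomainController1",  # DC1 instance, assumed defined elsewhere
        "Properties": {
            "Handle": {"Ref": "DomainController1WaitHandle"},
            "Timeout": "3600",  # seconds; hypothetical value
            "Count": "1",
        },
    },
    # (1) DC2 is not created until the wait condition has succeeded.
    "DomainController2": {
        "Type": "AWS::EC2::Instance",
        "DependsOn": "DomainController1WaitCondition",
        "Properties": {},  # ImageId, InstanceType, networking, etc. omitted here
    },
}

# (4) Command run at the end of DC1's configuration; exit code 0 signals SUCCESS.
signal_command = {
    "Fn::Join": ["", ['cfn-signal.exe --exit-code 0 "', {"Ref": "DomainController1WaitHandle"}, '"']]
}

print(json.dumps(signal_command))
```

A nonzero exit code, or no signal before the timeout, causes the wait condition to fail, which rolls back the stack as described above.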

As we have seen in this blog post, you can create both nested stacks and nested dependencies and can pass parameters between stacks by passing standard parameters or by passing outputs. Inside a stack, you can configure resources that are dependent on other resources through the use of wait conditions and the cfn-signal infrastructure. The AWS Enterprise Accelerator solution uses both techniques to deploy multiple Microsoft servers in a single VPC for a Microsoft BackOffice solution on AWS.

In a future blog post on the AWS DevOps Blog, we will illustrate how PowerShell can be used to bootstrap and configure Windows instances with downloaded cmdlets, all integrated into CloudFormation stacks.

This post was originally published on the AWS DevOps Blog. For similar posts and insights for Developers, DevOps Engineers, Sysadmins, and Architects, visit the AWS DevOps Blog. And learn more from Bill in the “Running Microsoft Workloads in the AWS Cloud” Webinar here.

Cloud Transformation Maturity Model: Guidelines to Develop Effective Strategies for Your Cloud Adoption Journey

The Cloud Transformation Maturity Model offers a guideline to help organizations develop an effective strategy for their cloud adoption journey. This model defines characteristics that determine the stage of maturity, transformation activities within each stage that must be completed to move to the next stage, and outcomes that are achieved across four stages of organizational maturity, including project, foundation, migration, and optimization.

Where are you on your journey? Follow the table below to determine what stage you are in:

Advice from All-In Customers

We also want to share advice from our customers across various industries who have decided to go all-in on the AWS Cloud.

“One of the things we’ve learned along the way is the culture change that is needed to bring people along on that cloud journey and really transforming the organization, not only convincing them that the technology is the right way to go, but winning over the hearts and minds of the team to completely change direction.”

— Mike Chapple, Sr. Director for IT Service Delivery, Notre Dame

“For other systems we saw that we could make fast configurations inside Amazon that would make those systems run faster. It was a huge success. And the thing about that type of success is that it breeds more interest in getting that kind of success. So after starting a proof of concept with AWS, we immediately began to expand until fully migrated on AWS”

— Eric Geiger, VP of IT Ops, Federal Home Loan Bank of Chicago

“The greatest thing about AWS for us is that it really scales with the business needs, not only from a capacity perspective, but also from a regional expansion perspective so that we can take our business model, roll it out across the region and eventually across the globe.”

We want to help your organization through your journey. Contact our sales and business development teams at https://aws.amazon.com/contact-us/ or take the “Guide to Cloud Success” training brought to you by the GovLoop Academy.

And also check out the Cloud Transformation Maturity Model whitepaper here to continue to learn more.

California Apps for Ag Hackathon: Solving Agricultural Challenges with IoT

The Apps for Ag Hackathon is an agricultural-focused hackathon designed to solve real-world farming problems through the application of technology. In partnership with the University of California Division of Agriculture and Natural Resources, the US Department of Agriculture (USDA), and the California State Fair, AWS sponsored the Hackathon to find ways to help farmers improve soil health, curb insect infestations and boost water efficiency through Internet of Things (IoT) technologies. The 48-hour event brought together teams from across Northern California, including commercial, federal, state and local, and education organizations.

The goal of the Hackathon was to create sensor-enabled apps to help farmers do things like track water use and fight insect invasions. We provided credits for teams to develop and build their solutions on AWS, Intel provided several IoT kits to help participants build sensor-based solutions on AWS, and AWS technologists provided onsite architectural guidance and team mentoring.

Check out the winning teams below and the innovative applications built to solve agricultural challenges:

- First Place – GivingGarden, a hyper-local produce sharing app with a big vision.

- Second Place – Sense and Protect, IoT sensors and a mobile task management app to increase farm worker safety and productivity.

- Third Place – ACP STAR SYSTEM, a geo and temporal database and platform for tracking Asian Citrus Psyllid and other invasive pests.

- Fourth Place – Compostable, an app and IoT device that diverts food waste from landfills and turns it into fertilizer and fuel so that it can go back to the farm.

At the Hackathon, we worked closely with many young computer science students and helped them understand the benefits of cloud computing. Experimenting on AWS Lambda allowed the students to deploy serverless applications on AWS quickly. This greatly reduced the time spent focusing on infrastructure and allowed the teams to focus on developing their application. The IoT kits also enabled rapid development and prototyping of solutions. In addition, the teams took advantage of AWS Elastic Beanstalk for rapid delivery of application code.

Working with several states, participants and mentors were able to share information and cross-pollinate ideas, which not only provided value to the development teams at the Hackathon, but will also be valuable to other states in the future.

Learn more about how AWS IoT makes it easy to use AWS services like AWS Lambda, Amazon Kinesis, Amazon S3, Amazon Machine Learning, Amazon DynamoDB, Amazon CloudWatch, AWS CloudTrail, and Amazon Elasticsearch Service with built-in Kibana integration, to build IoT applications that gather, process, analyze, and act on data generated by connected devices, without having to manage infrastructure.

We are hosting an Agriculture Analysis in the Cloud Day with The Ohio State University’s 2016 Farm Science Review. You’re invited to attend the event on September 19th, 2016 from 8:30am – 6:30pm EDT (Breakfast and Lunch are provided), taking place at OSU in the Nationwide & Ohio Farm Bureau 4-H Center. Register now (seats are limited).

Exploring the Possibilities of Earth Observation Data

Recently, we have been sharing stories of how customers like Zooniverse and organizations like Sinergise have used the Sentinel-2 data made available via Amazon Simple Storage Service (Amazon S3). From disaster relief to vegetation monitoring to property taxation, this data set allows organizations to build tools and services that improve citizens’ lives.

We recently connected with Andy Wells from Sterling Geo and looked back at ways the world has changed in the past decade and looked ahead at the possibilities using Earth Observation data.

The world has been using satellite imagery for over 20 years, but the average organization today is just starting to reap the benefits of remote sensing. But from start to finish they need to consider: Where do I get the data from? And what do I do with all of the data?

As a big data use case, it doesn’t get much bigger than this. The physical amount of data captured weekly, daily, and hourly is astronomical and the world keeps spinning. And since the world keeps spinning, it also keeps changing.

“To say there is a tsunami of data is an understatement. And the physical amount of data is only going to go up exponentially. Businesses can get swamped considering: Where do I buy? How do I buy? Why do I buy?” said Andy Wells, Managing Director of Sterling Geo. “But there is an enormous opportunity to evolve governments, educational institutes, and charities by translating that data into something they may need.”

Data is just data unless it is turned into information that can inform better decisions. Without the technology to help make sense of all the data, it never turns into useful information.

Moving from a static model to a dynamic model allows you to keep up with the influx of data coming from satellite imagery and Internet of Things (IoT) technologies. This is a giant leap forward and a big part of what Andy and his team are doing.

Looking at the ‘What Ifs’ – The Art of the Possible

What if you could put your analytics engine in the cloud? Instead of investing money up front, running software locally, and training a person without ever seeing value, organizations can turn to the cloud. All of a sudden, you don’t have to invest in the software up front. You just have to run the compute process to turn raw data into information.

“The magic bit of it is that by leveraging the cloud, we can present the result to the end user in a form that is just right for them. It could be in a map or an image in a simple email, taking the heavy lifting off the customer and just providing them with the business information that users need to actually make decisions,” Andy said.

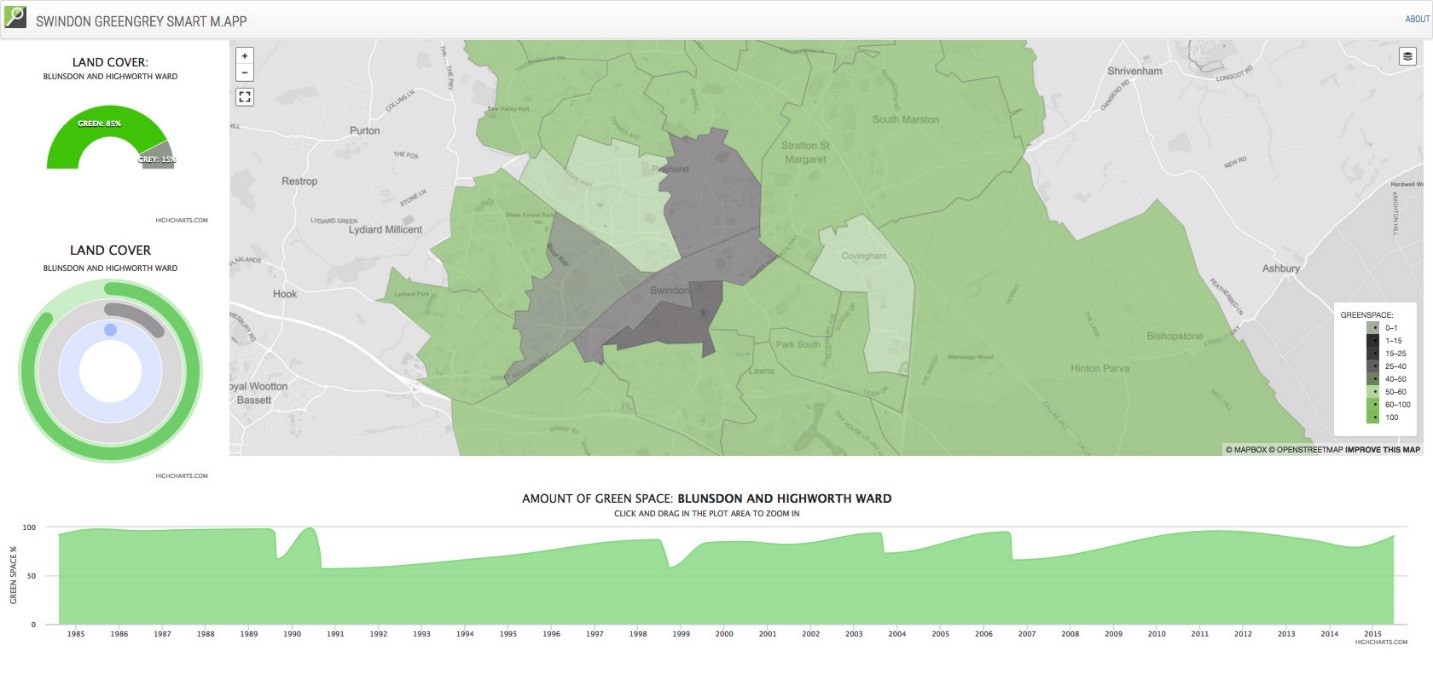

For example, Sentinel-2 data can be analyzed for greenness. The human eye sees red, green, and blue light, but satellites see a wider spectrum. Healthy, photosynthesizing plants absorb much of the red light that reaches them and strongly reflect near-infrared light, so we can monitor how healthy plants are and how much greenery there is in a city, and then run an algorithm to measure the greenness of an area.

By connecting this algorithm to Sentinel-2 and Landsat data, you can watch how your city has evolved over time in terms of greenness, an indicator of the general health of a city based on open space such as parks. Knowing this information allows city leaders to understand whether they have paved over too many gardens and parks or where they may need more houses or bigger roads. A pilot service has now been deployed to numerous local government bodies within a UK Space Agency Programme called Space for Smarter Government.
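The text does not name the algorithm, but a common way to measure greenness from these bands is the Normalized Difference Vegetation Index (NDVI, which also appears in the Sentinel Hub interview below): NDVI = (NIR - Red) / (NIR + Red), which approaches 1 over dense, healthy vegetation. The sketch below computes NDVI per pixel and the share of pixels above a vegetation threshold on two dates. The 0.3 threshold and the reflectance samples are hypothetical; with Sentinel-2, the red and near-infrared values would typically come from bands B04 and B08.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def green_fraction(nir_band, red_band, threshold=0.3):
    """Share of pixels whose NDVI exceeds a vegetation threshold (0.3 is an assumption)."""
    values = [ndvi(n, r) for n, r in zip(nir_band, red_band)]
    if not values:
        raise ValueError("no pixels supplied")
    return sum(1 for v in values if v > threshold) / len(values)


# Hypothetical reflectance samples for the same four pixels on two dates.
earlier = green_fraction([0.45, 0.50, 0.30, 0.12], [0.05, 0.06, 0.10, 0.10])
later = green_fraction([0.45, 0.14, 0.13, 0.12], [0.05, 0.11, 0.10, 0.10])
print(f"green share: {earlier:.0%} -> {later:.0%}")
```

Running the same calculation over a city's pixels for each acquisition date gives the kind of greenness trend described above.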

Being forward-thinking allows companies to solve challenges throughout the world with existing data. The cloud allows them to focus on their mission, without having to worry about where the data is coming from. Leveraging the compute power of AWS gives organizations what they need to make decisions quickly and at a low cost. Andy said, “The good news is, this is not 10 years down the road. These possibilities can be realized today.”

Automating Governance on AWS

IT governance identifies risk and determines the controls to mitigate that risk. Traditionally, IT governance has required long, detailed documents and hours of work for IT managers, security or audit professionals, and admins. Automating governance on AWS offers a better way.

Let’s consider a world where only two things matter: customer trust and cost control. First, we need to identify the risks to mitigate:

- Customer Trust: Customer trust is essential. Allowing customer data to be obtained by unauthorized third parties is damaging to customers and the organization.

- Cost: Doing more with your money is critical to success for any organization.

Then, we identify what policies we need to mitigate those risks:

- Encryption at rest should be used to avoid interception of customer data by unauthorized parties.

- A consistent instance family should be used to allow for better Reserved Instance (RI) optimization.

Finally, we can set up automated controls to make sure those policies are followed. With AWS, you have several options:

Encrypting Amazon Elastic Block Store (EBS) volumes is a fairly straightforward configuration. However, validating that all volumes have been encrypted may not be as easy. Despite how easily volumes can be encrypted, it is still possible to overlook or intentionally not encrypt a volume. AWS Config Rules can be used to automatically and in real time check that all volumes are encrypted. Here is how to set that up:

- Open AWS Console

- Go to Services -> Management Tools -> Config

- Click Add Rule

- Click encrypted-volumes

- Click Save

Once the rule has finished being evaluated, you can see a list of EBS volumes and whether or not they are compliant with this new policy. This example uses a built-in AWS Config Rule, but you can also use custom rules where built-in rules do not exist.
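The same rule can also be created programmatically. The sketch below builds the request payload for the AWS Config PutConfigRule API using the managed rule identifier ENCRYPTED_VOLUMES, and adds a simplified local function that classifies volume records shaped like EC2 DescribeVolumes output. It assumes AWS Config is already recording in the account; the volume IDs are hypothetical, and the local function only illustrates the policy, not the managed rule's implementation (it ignores the rule's optional parameters and scope details).

```python
import json


def encrypted_volumes_rule():
    """Payload for PutConfigRule using the AWS managed encrypted-volumes rule."""
    return {
        "ConfigRuleName": "encrypted-volumes",
        "Scope": {"ComplianceResourceTypes": ["AWS::EC2::Volume"]},
        "Source": {"Owner": "AWS", "SourceIdentifier": "ENCRYPTED_VOLUMES"},
    }


def classify_volumes(volumes):
    """Simplified local check: a volume is compliant only if it is encrypted."""
    return {
        v["VolumeId"]: "COMPLIANT" if v.get("Encrypted") else "NON_COMPLIANT"
        for v in volumes
    }


# Hypothetical volume records in the shape returned by EC2 DescribeVolumes.
sample = [
    {"VolumeId": "vol-0aaa", "Encrypted": True},
    {"VolumeId": "vol-0bbb", "Encrypted": False},
]
print(json.dumps(encrypted_volumes_rule(), indent=2))
print(classify_volumes(sample))

# With boto3 and credentials configured, the rule could be created with:
#   boto3.client("config").put_config_rule(ConfigRule=encrypted_volumes_rule())
```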

When you have several Amazon Elastic Compute Cloud (Amazon EC2) instances running, you will eventually want to think about purchasing Reserved Instances (RIs) to take advantage of the cost savings they provide. Focus on one or a few instance families and purchase RIs of different sizes within those families. The reason is that RIs can be split and combined into different sizes if the workload changes in the future, so reducing the number of instance families used increases the likelihood that a future need can be accommodated by resizing existing RIs. To enforce this policy, an IAM policy can be used to allow creation of EC2 instances only in the allowed families. See below:
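One way to express such a policy is a guardrail statement that denies ec2:RunInstances whenever the requested ec2:InstanceType does not match an approved family. The sketch below generates that JSON and adds a rough local check of the StringNotLike match. The m4 and c4 families are hypothetical choices, the Deny assumes some other policy grants RunInstances, and fnmatch only approximates IAM's wildcard matching (IAM supports * and ?, while fnmatch also treats brackets specially).

```python
import fnmatch
import json

ALLOWED_FAMILIES = ["m4", "c4"]  # hypothetical choice of approved families


def instance_family_policy(families):
    """IAM policy document that denies launching instance types outside the families."""
    patterns = [f"{family}.*" for family in families]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnapprovedInstanceFamilies",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {"StringNotLike": {"ec2:InstanceType": patterns}},
            }
        ],
    }


def is_denied(policy, instance_type):
    """Rough local model of the StringNotLike test: denied if no pattern matches."""
    patterns = policy["Statement"][0]["Condition"]["StringNotLike"]["ec2:InstanceType"]
    return not any(fnmatch.fnmatchcase(instance_type, p) for p in patterns)


policy = instance_family_policy(ALLOWED_FAMILIES)
print(json.dumps(policy, indent=2))
print(is_denied(policy, "m4.large"), is_denied(policy, "r3.xlarge"))
```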

It’s just that simple.
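To see why sticking to a few families keeps RIs reusable, it helps to look at the normalization factors AWS uses when modifying RIs within a family: each size has a weight (small = 1, medium = 2, large = 4, xlarge = 8, and so on), and a modification fits when the total weight stays the same. The sketch below checks only that footprint rule; real modifications have further conditions, such as matching platform and Region, that it does not model, and the factor table covers only common sizes.

```python
# Normalization factors for common instance sizes (units per instance).
NORMALIZATION = {
    "nano": 0.25, "micro": 0.5, "small": 1, "medium": 2, "large": 4,
    "xlarge": 8, "2xlarge": 16, "4xlarge": 32, "8xlarge": 64,
    "10xlarge": 80, "16xlarge": 128,
}


def footprint(reservations):
    """Return (family, total units) for {instance_type: count}, all in one family."""
    families = set()
    total = 0.0
    for instance_type, count in reservations.items():
        family, size = instance_type.split(".", 1)
        families.add(family)
        total += NORMALIZATION[size] * count
    if len(families) != 1:
        raise ValueError("reservations must all be in a single instance family")
    return families.pop(), total


def can_resize(current, target):
    """True if target has the same family and the same normalized footprint as current."""
    return footprint(current) == footprint(target)


# Two m4.large RIs (2 x 4 units) can become one m4.xlarge (8 units).
print(can_resize({"m4.large": 2}, {"m4.xlarge": 1}))
# Splitting one m4.2xlarge (16 units) into one m4.xlarge plus two m4.large also fits.
print(can_resize({"m4.2xlarge": 1}, {"m4.xlarge": 1, "m4.large": 2}))
```

Because the footprint never crosses family boundaries, RIs spread across many families have fewer ways to be reshaped when needs change.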

With AWS automated controls, system administrators don’t need to memorize every required control, security admins can easily validate that policies are being followed, and IT managers can see it all on a dashboard in near real time – thereby decreasing organizational risk and freeing up resources to focus on other critical tasks.

To learn more about Automating Governance on AWS, read our white paper, Automating Governance: A Managed Service Approach to Security and Compliance on AWS.

A guest blog by Joshua Dayberry, Senior Solutions Architect, Amazon Web Services Worldwide Public Sector

A Minimalistic Way to Tackle Big Data Produced by Earth Observation Satellites

The explosion of Earth Observation (EO) data has driven the need to find innovative ways of using that data. We sat down with Grega Milcinski from Sinergise to discuss Sentinel-2. During its six-month pre-operational phase, Sentinel-2 has already produced more than 200 TB of data, more than 250 trillion pixels, yet most of this data, probably up to 90 percent, is never used at all.

What is a Sentinel Hub?

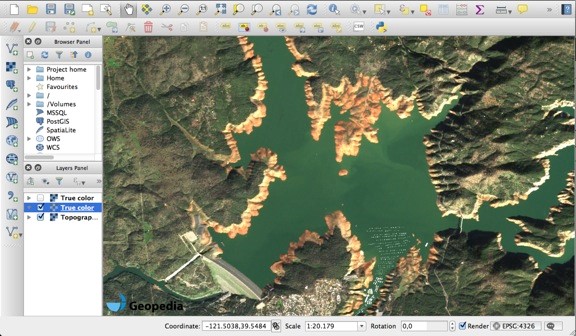

Sentinel-2 acquires images of Earth’s entire landmass every 5-10 days (soon to be twice as often) at up to 10-meter resolution, with multi-spectral data, all available for free. This opens the door to completely new remote sensing processes. We decided to tackle the technical challenge of processing EO data our way: by creating web services that make it possible for everyone to get data in their favorite GIS applications using standard WMS and WCS services. We call this the Sentinel Hub.

Figure 1 – low water levels of Lake Oroville, US, 26th of January 2016, served via WMS in QGIS

How did AWS help?

In addition to research grants, which made it easier to start this process, there were three important benefits from working with AWS: public data sets for managing data, auto scaling features for our services, and advanced AWS services, especially AWS Lambda. AWS’s public data sets, such as Landsat and Sentinel, are wonderful. Having all data, on a global scale, available in a structured way, easily accessible in Amazon Simple Storage Service (Amazon S3), removes a major hurdle (and risk) when developing a new application.

Why did you decide to set up a Sentinel public data set?

We were frustrated with how Sentinel data was distributed for mass use. We then came across the Landsat archive on AWS. After contacting Amazon about similar options for Sentinel, we were provided a research grant to establish the Sentinel public data set. It was a worthwhile investment because we can now access the data in an efficient way. And as others are able to do the same, it will hopefully benefit the EO ecosystem overall.

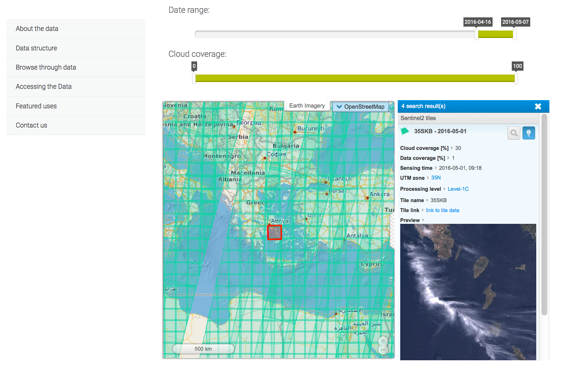

Figure 2 – Data browser on Sentinel-2 at AWS

How did you approach setting up Sentinel Hub service?

It is not feasible to process the entire archive of imagery on a daily basis, so we tried a different approach: processing the data in real time, as soon as a request comes in. When a user asks for imagery at some location, we query the metadata to see what is available, apply the request’s criteria, download the required data from S3, decompress it, re-project it, create the final product, and deliver it to the user within seconds.
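The first steps of that on-demand flow (querying metadata, applying the request's criteria, and working out which objects to fetch from S3) can be sketched as follows. The Scene record, the 20 percent cloud-cover limit, the sample catalog, and the tile key layout (UTM zone, latitude band, grid square, then date and sequence number) are assumptions for illustration; check the data set's own documentation for its actual layout. Decompression, reprojection, and rendering would follow, typically with a GIS library, and are not shown.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scene:
    tile: str           # MGRS tile ID: 2-digit UTM zone, latitude band, 2-letter square
    acquired: date
    cloud_cover: float  # percent of the tile covered by cloud
    sequence: int = 0   # distinguishes multiple acquisitions on the same day


def pick_scene(scenes, tile, max_cloud=20.0):
    """Return the most recent scene of the tile that meets the cloud criterion, or None."""
    candidates = [s for s in scenes if s.tile == tile and s.cloud_cover <= max_cloud]
    return max(candidates, key=lambda s: s.acquired, default=None)


def band_keys(scene, bands=("B04", "B08")):
    """Build object keys for the requested bands under an assumed tile layout."""
    zone, lat_band, square = int(scene.tile[:2]), scene.tile[2], scene.tile[3:]
    d = scene.acquired
    prefix = f"tiles/{zone}/{lat_band}/{square}/{d.year}/{d.month}/{d.day}/{scene.sequence}"
    return [f"{prefix}/{band}.jp2" for band in bands]


# Hypothetical metadata records for one tile.
catalog = [
    Scene("33TVM", date(2016, 3, 2), cloud_cover=40.0),
    Scene("33TVM", date(2016, 3, 12), cloud_cover=8.0),
    Scene("33TVM", date(2016, 3, 22), cloud_cover=71.0),
]
best = pick_scene(catalog, "33TVM")
print(best.acquired, band_keys(best))
```

Only the selected scene's bands are fetched, which is what makes processing on request cheaper than pre-processing the whole archive.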

You mentioned Lambda. How do you make use of it?

It is impossible to predict what somebody will ask for and be ready for it in advance. But once a request happens, we want the system to perform all steps in the shortest time possible. Lambda helps because it makes it possible to run a large number of processes simultaneously. We also use it to ingest the data and process hundreds of products. In addition to Lambda, we have leveraged AWS’s auto scaling features to seamlessly scale our rendering services. This greatly reduces running costs in off-peak periods and also provides a good user experience when the loads are increased. Having a powerful, yet cost-efficient, infrastructure in place allows us to focus on developing new features.
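The fan-out idea can be illustrated locally: split a request into tiles, process them concurrently, and collect the results in order. In this sketch a thread pool stands in for parallel Lambda invocations, and render_tile is a hypothetical placeholder for the per-tile work (fetching, decoding, and reprojecting data). In production, each call could instead be a synchronous invocation of a Lambda function, for example through the boto3 Lambda client's invoke method.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tiles(bbox, n):
    """Split a (min_x, min_y, max_x, max_y) box into an n-by-n grid of sub-boxes."""
    min_x, min_y, max_x, max_y = bbox
    dx, dy = (max_x - min_x) / n, (max_y - min_y) / n
    return [
        (min_x + i * dx, min_y + j * dy, min_x + (i + 1) * dx, min_y + (j + 1) * dy)
        for j in range(n)
        for i in range(n)
    ]


def render_tile(box):
    """Hypothetical stand-in for the per-tile work a Lambda function would perform."""
    min_x, min_y, max_x, max_y = box
    return {"box": box, "area": (max_x - min_x) * (max_y - min_y)}


def render_request(bbox, n=4, workers=16):
    """Fan out one request into n*n tiles, process them concurrently, keep tile order."""
    tiles = split_into_tiles(bbox, n)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_tile, tiles))


results = render_request((0.0, 0.0, 4.0, 4.0), n=4)
print(len(results), sum(r["area"] for r in results))
```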

Figure 3 – image improved with atmospheric correction and cloud replacement

Figure 4 – user-defined image computation

Can you estimate cost benefits of this process?

We make use of the freely available data in the Amazon public data sets, which directly saves us money. And by orchestrating software and AWS infrastructure, we are able to process in real time, so we do not have any storage costs. We estimate that we save hundreds of thousands of dollars annually.

How can the Sentinel Hub service be used?

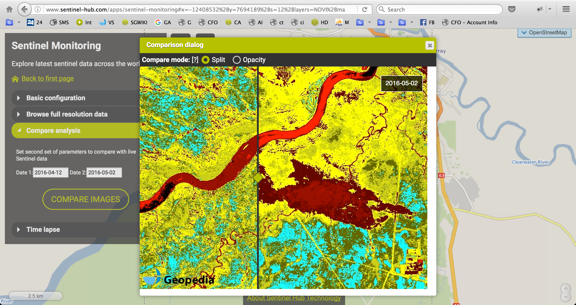

Anyone can now easily get Sentinel-2 data into their GIS application. You simply need the URL of our WMS (Web Map Service) endpoint. Configure the layers you are interested in, and the data is there without any hassle: no extra downloading, and no time-consuming processing, reprocessing, or compositing. However, the real power of EO comes when it is integrated into web applications that provide a wider context. Many open source GIS tools are available, so anybody can build these value-added services. To demonstrate this, we built a simple application called Sentinel Monitoring, where anyone can observe changes in the land all across the globe.
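Readers who want to call a WMS endpoint directly, rather than through a GIS application, only need to build a URL. A GetMap request is a URL with standard query parameters defined by the OGC WMS 1.3.0 specification. The sketch below builds one. The base URL and layer name are placeholders, not Sentinel Hub's actual values. In WMS 1.3.0 with `CRS=EPSG:4326`, the bounding box is given in latitude, longitude order.

```python
from typing import Optional, Tuple
from urllib.parse import urlencode


def getmap_url(base_url: str, layer: str,
               bbox: Tuple[float, float, float, float],
               width: int, height: int,
               time: Optional[str] = None,
               crs: str = "EPSG:4326",
               image_format: str = "image/png") -> str:
    """Build a WMS 1.3.0 GetMap URL.

    With CRS=EPSG:4326, WMS 1.3.0 expects bbox as (min_lat, min_lon, max_lat, max_lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value requests the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    if time is not None:
        params["TIME"] = time  # optional time dimension, e.g. an ISO 8601 date
    return f"{base_url}?{urlencode(params)}"


# Illustrative values only: placeholder endpoint, layer name, and coordinates.
url = getmap_url("https://example.com/wms", "TRUE_COLOR",
                 (56.5, -112.0, 57.0, -111.0), 512, 512, time="2016-05-02")
print(url)
```

Changing only the `TIME` value and re-requesting is the kind of interaction a monitoring application builds on.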

Figure 5 – NDVI (Normalized Difference Vegetation Index) image of the Alberta wildfires, showing the burned area on May 2. By changing the date, you can see how the fires spread through the area.
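NDVI itself is a simple band ratio: NDVI = (NIR - Red) / (NIR + Red). For Sentinel-2, NIR and red are commonly taken from bands B08 and B04, both at 10 m resolution. The sketch below computes NDVI per pixel on flattened lists of reflectance values. It also computes a before/after difference of the kind used to spot burned vegetation. It is illustrative only and assumes the two images are already co-registered on the same pixel grid.

```python
from typing import List, Optional


def ndvi(nir: List[float], red: List[float]) -> List[Optional[float]]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); None where both bands are zero."""
    out: List[Optional[float]] = []
    for n, r in zip(nir, red):
        total = n + r
        out.append(None if total == 0 else (n - r) / total)
    return out


def ndvi_change(before: List[Optional[float]],
                after: List[Optional[float]]) -> List[Optional[float]]:
    """After minus before; strongly negative values suggest vegetation loss, e.g. burning."""
    return [None if b is None or a is None else a - b
            for b, a in zip(before, after)]


# Illustrative reflectance values for three pixels.
print(ndvi([0.5, 0.3, 0.0], [0.1, 0.3, 0.0]))
```

Healthy vegetation reflects strongly in NIR and absorbs red, so it scores close to 1. Burned ground scores much lower, which is why a drop in NDVI between dates outlines a burn scar.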

What uses of Sentinel-2 on AWS do you expect others to build?

There are many possible use cases for EO data from Sentinel-2 imagery. The most obvious example is a vegetation-monitoring map for farmers. Another is identifying new construction, which provides useful input for property taxation, especially in developing countries, where this information is scarce. The options are numerous, and the investment needed to build these applications has never been smaller: the data is free and easily accessible on AWS. Developers can simply integrate one of these services into their systems, and the results are there.

Get access and learn more about Sentinel-2 services here. And visit www.aws.amazon.com/opendata to learn more about public data sets provided by AWS.

TaxBrain: A platform for accessing open-source tax models

What should we be spending tax money on? How much revenue are we going to raise? How does this impact the economy? To answer these questions and determine which policies to enact, policymakers rely on a predictive economic simulation model.

Historically, specialized teams in government have used proprietary tools to help guide which policies become law. They put in some inputs and wait for the outputs. Policymakers have to trust the tools and the teams, and often wait days, months, or years to get the information needed to help drive decision making.

This closed-source approach to estimating the costs and economic impact of policies raises some challenges. There is limited accessibility and transparency in the process, leaving the public and many policymakers in the dark on how policies are evaluated. The Open Source Policy Center (OSPC) at the American Enterprise Institute has worked to revolutionize this process in a way that is more transparent, accessible, and collaborative. They have incubated open source communities by bringing together economists, policy experts, and software developers to work on these types of tools. Through this collaborative, open source approach, TaxBrain was created using AWS.

TaxBrain, created by OSPC, is an interface to open source economic models for tax policy analysis. The TaxBrain web application gives policymakers, the media, and the public access to tax policy calculations and analyses. The website hosts the computational models used in tax policy analysis free of charge and as open source. This democratizes access to information, allowing anyone to apply their skills, passion, and expertise to analyze policies and give better information to policymakers.

OSPC builds communities whose members collaborate and contribute their skills to improve the models, applications, and policies, regardless of party alignment. Anyone, from policymakers to the general public, can use OSPC's tools to learn about the effects of tax policy.

The website is hosted on the AWS Cloud, which delivers scalability and cost-effectiveness to OSPC and its growing community while taking on the undifferentiated heavy lifting of running infrastructure. This gives people access to information that can help effect economic change.

“There is a high demand for a short period of time, as policymakers are working on a bill and creating many iterations. The demand goes way up for a while, but we are able to scale because we have access to AWS,” said Matt Jensen, founder and managing director of the Open Source Policy Center (OSPC) at the American Enterprise Institute. “It allows our team and our community to focus on what we are good at, and AWS takes care of the hard part.”

On the website, users can enter data to see outcomes for regular taxes, Social Security taxability, deductions, and more. Users create a policy reform by modifying tax law parameters, such as rates and deductions, adjust the economic baseline, and request the results. The workload is computationally expensive, but all computation and modeling runs on AWS, which returns the results to OSPC. “We would not be able to run this off of a server sitting in our office,” Matt said. So even when the load is uneven, the service remains available to everyone in a cost-effective manner.
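The workflow of modifying parameters and then requesting results can be made concrete with a toy reform run. This is not TaxBrain's or any OSPC model's code. The bracket schedule, the flat deduction, and the parameter names are invented for illustration. The sketch copies a baseline policy, lets the reform override some parameters, and recomputes revenue under both.

```python
from typing import Dict, List, Tuple

Brackets = List[Tuple[float, float]]  # (lower threshold, marginal rate), sorted by threshold


def tax(income: float, brackets: Brackets, deduction: float) -> float:
    """Tax owed on income after a flat deduction, using marginal brackets."""
    taxable = max(0.0, income - deduction)
    owed = 0.0
    for i, (lower, rate) in enumerate(brackets):
        upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
        if taxable <= lower:
            break
        owed += (min(taxable, upper) - lower) * rate
    return owed


# Hypothetical baseline policy; all numbers are invented for illustration.
BASELINE: Dict = {
    "brackets": [(0, 0.10), (10_000, 0.20), (50_000, 0.30)],
    "deduction": 5_000,
}


def apply_reform(baseline: Dict, reform: Dict) -> Dict:
    """Return a new policy: the baseline with the reform's parameters overridden."""
    policy = dict(baseline)
    policy.update(reform)
    return policy


def revenue(incomes: List[float], policy: Dict) -> float:
    """Total tax collected from a list of incomes under a policy."""
    return sum(tax(x, policy["brackets"], policy["deduction"]) for x in incomes)


incomes = [20_000, 80_000]
base = revenue(incomes, BASELINE)
reformed = revenue(incomes, apply_reform(BASELINE, {"deduction": 10_000}))
print(base, reformed, reformed - base)
```

Real models run this kind of comparison over large representative samples of tax returns and many more parameters. That scale is why each request is computationally expensive.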

Learn more about how AWS helps nonprofit organizations achieve their mission without wasting precious resources here.

Zooniverse’s Open Source Answer to Disaster Relief

Zooniverse created the Planetary Response Network (PRN) to support relief efforts for crisis victims. Starting with the 2015 Nepal earthquake, the Zooniverse team saw how helpful the PRN could be. The PRN takes pre- and post-disaster satellite data and uses it to tell ground-based rescue teams where they need to go to be most effective.

This data provides a ground-level view of big, global-scale issues. Zooniverse aggregates contributions from 1.4 million volunteers, and 65 full-time researchers analyze this data to derive value from it in an effort to reduce the impact of disasters.

We sat down with Sascha T. Ishikawa, Citizen Science Web Developer at Zooniverse, to talk about the PRN and their use of Sentinel-2 data for their project.

Can you tell us what AWS services you use to power PRN?

For all of our major services, we use Amazon Simple Storage Service (Amazon S3), Amazon Elastic Compute Cloud (Amazon EC2), and Amazon Kinesis. Everything for Zooniverse and the PRN lives in S3, from third-party data to static websites and data sets. All of our projects are open source. During the period around a disaster, volunteers provide classifications on images from pre-verified data sources, such as satellites. This can create a backlog of data that needs to be processed and stored in a database, and that firehose of data can take a while to process. To combat this challenge, we keep EC2 instances ready to crunch all of the data. We also have Kinesis set up to listen to the stream of classifications and message board posts. (A classification is when a volunteer sees an image or video clip and classifies or annotates it, for example by marking a collapsed building; see the images below.) Kinesis is at the heart of what we call “subject retirement.” When an image has been seen by enough people, it is taken offline so that volunteers focus their efforts on the remaining, unseen images.
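The subject-retirement logic can be sketched as a stream consumer. It counts classifications per subject and retires a subject once it reaches a threshold. This is an illustrative sketch, not Zooniverse's code. The record fields and the threshold are assumptions, and a plain iterable of dicts stands in for records read from a Kinesis stream. Stream consumers can receive the same record more than once, so the sketch also skips classification IDs it has already counted.

```python
from collections import Counter
from typing import Dict, Optional, Set


class RetirementTracker:
    """Counts classifications per subject and retires subjects at a threshold.

    Hypothetical consumer logic; records are dicts with assumed keys
    "classification_id" and "subject_id".
    """

    def __init__(self, threshold: int):
        self.threshold = threshold
        self.counts: Counter = Counter()
        self.retired: Set[str] = set()
        self.seen: Set[int] = set()  # classification IDs already counted

    def process(self, record: Dict) -> Optional[str]:
        """Handle one record; return the subject ID if this record retires it."""
        cid = record["classification_id"]
        if cid in self.seen:
            return None  # duplicate delivery of a record already counted
        self.seen.add(cid)

        subject = record["subject_id"]
        if subject in self.retired:
            return None  # late classification of an already-retired subject
        self.counts[subject] += 1
        if self.counts[subject] >= self.threshold:
            self.retired.add(subject)
            return subject
        return None
```

In a real pipeline, the subject IDs returned by `process` would be what triggers taking an image offline.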

You launched with 1,300 images and each one was checked 20 times within 2 hours. How do you handle such a quick burst of traffic? Did you need to scale up at any point to handle the enthusiastic response?

No doubt we had to scale up. All of this is handled with Elastic Load Balancing, which automatically distributes incoming application traffic across multiple Amazon EC2 instances. Zooniverse experiences a constant trickle of data coming from volunteers, press releases, and media attention, but it can spike to tens of thousands of classifications per hour. It took us a while to get that right so that there is no downtime. During the PRN's 2-hour launch, we experienced no downtime!
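As a rough check on the launch figures in the question, the average load during the launch window works out as follows. This is an average over the two hours; the instantaneous rate would have varied.

$$
\begin{aligned}
1{,}300 \text{ images} \times 20 \text{ classifications/image} &= 26{,}000 \text{ classifications} \\
\frac{26{,}000 \text{ classifications}}{2 \text{ h}} &= 13{,}000 \text{ classifications/h} \\
\frac{13{,}000 \text{ classifications}}{3{,}600 \text{ s}} &\approx 3.6 \text{ classifications/s}
\end{aligned}
$$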

Were you able to replicate some of the AWS architecture you’ve used from other citizen science projects for this initiative?

I have been using AWS since 2009, and I definitely drew on that experience for this project. The PRN was built using the Zooniverse project builder, so from a technology standpoint it is no different from our other projects with AWS (hear about Zooniverse’s other projects with AWS in this video). However, what is unique about this project is that it has a separate app that handles data processing to bring satellite images in. And we are constantly looking to improve and incorporate the latest technology.

What lessons from this response will you carry into your next emergency response effort?

The PRN is our answer to disaster relief. It is an ongoing project that we will refine over the next month. It was first used for the Nepal earthquake, when it was in a very early beta stage. That proof of concept proved useful, so the funding continued and we were able to use it to help relief efforts in Nepal and Ecuador. The plan is to make the PRN a standalone app that fetches data from the European Space Agency or Planet Labs.

You used Sentinel-2 data for a comparative data set. What are the advantages of Sentinel-2? How do you think Sentinel-2 data can be used in future emergency response efforts?

Having Sentinel-2 data available to anyone via Amazon S3 allows us to use it, often within hours of production. Although Sentinel-2 data has a lower spatial resolution (10 m) than Planet Labs imagery (3-5 m), it contains multispectral data (which Planet Labs imagery does not), ranging from the visible spectrum to near-infrared. Near-infrared, although we didn’t use it for the recent Ecuador earthquake, is more sensitive to vegetation, which could make it a good indicator of landslides. We didn’t use near-infrared because its spatial resolution is slightly lower than that of the regular visible-spectrum images. In short, we made a judgment call to prioritize spatial resolution over multispectral data.

Take a look at the images below, and here is an actual example of volunteers spotting some form of damage.

A screenshot of the PRN page covering the 2016 earthquake. The image is of Atacames Canton in the Esmeraldas province of Ecuador.

This screenshot shows a classification of subject 1998468, about 5 miles from Bahia de Caraquez Airport. The pre-quake image is from Sentinel-2 (through thin cloud or haze), and the post-quake image is from Planet Labs.

Thank you to Zooniverse for their work and their time talking with us! Check out Zooniverse’s other projects here.