Category: Education

Arizona State University Brings Voice-Technology Program to Campus

As part of the effort, engineering students moving into Tooker House, a new residence hall for engineers on the Tempe campus, can choose to receive a new Amazon Echo Dot, a hands-free, voice-controlled device used to play music, make calls, send and receive messages, provide information, read the news, set alarms, read audiobooks, and more.

With the use of the Amazon Echo Dot, students will become part of the first voice-enabled residential community on a university campus.

In addition, students can sign up for one of three courses that teach concepts focused on building voice-user interfaces with Alexa. The courses will be offered through the Ira A. Fulton Schools of Engineering. Incoming freshman engineering students will be able to build their own Alexa skills, or capabilities, and join the growing global community of voice developers.

ASU also introduced an ASU-specific Alexa skill to enhance the campus experience for students, faculty, staff and alumni. Anyone with an Alexa-enabled device can use the ASU Alexa skill to get information about the university and the campus.

Read more about the ASU program in their blog post, learn more about how you can use Alexa in education, and discover how other schools are using Alexa to build apps for their students.

The Boss: A Petascale Database for Large-Scale Neuroscience Powered by Serverless Technologies

The Intelligence Advanced Research Projects Activity (IARPA) Machine Intelligence from Cortical Networks (MICrONS) program seeks to revolutionize machine learning by better understanding the representations, transformations, and learning rules employed by the brain.

We spoke with Dean Kleissas, Research Engineer working on the IARPA MICrONS Project at the Johns Hopkins University Applied Physics Laboratory (JHU/APL), and he shared more about the project, what makes it unique, and how the team leverages serverless technology.

Could you tell us about the IARPA MICrONS Project?

This project partners computer scientists, neuroscientists, biologists, and other researchers from over 30 different institutions to tackle problems in neuroscience and computer science towards improving artificial intelligence. These researchers are developing machine learning frameworks informed and constrained by large-scale neuroimaging and experimentation at a spatial size and resolution never before achieved.

Why is this program different from other attempts to build machine learning based on biological principles?

While current approaches to neural network-based machine learning algorithms are “neurally inspired,” they are not “biofidelic” or “neurally plausible,” meaning they could not be directly implemented using a biological system. Previous attempts to incorporate the brain’s inner workings into machine learning have used statistical summaries of properties of the brain or measurements at low resolution (brain regions) or high resolution (individual neurons or populations of hundreds to about 1,000 neurons).

With MICrONS, researchers are attempting to inform machine learning frameworks by interrogating the brain at the “mesoscale,” the scale at which the hypothesized unit of computation, the cortical column, should exist. Teams will measure the functional (how a neuron fires) and structural (how neurons connect) properties of every neuron in a cubic millimeter of mammalian tissue. While a cubic millimeter may sound small, these datasets will be some of the largest ever collected and will contain about 50k-100k neurons and over 100 million synapses. On disk, this results in roughly 2-3 petabytes of image data to store and analyze per tissue sample.

To manage the challenges created by both the collaborative nature of this program and massive amounts of multi-dimensional imaging, the JHU/APL team developed and deployed a novel spatial database called the Boss.

What is the Boss and some of its key features?

The Boss is a multi-dimensional spatial database provided as a managed service on AWS. It stores image data of different modalities with associated annotation data, that is, the output of an analysis that has labeled source image data with unique 64-bit identifiers. The Boss leverages a storage hierarchy to balance cost with performance. Data is migrated using AWS Lambda from Amazon Simple Storage Service (Amazon S3) to a fast in-memory cache as needed. Image and annotation data is spatially indexed for efficient, arbitrary access to sub-regions of petascale datasets. The Boss provides Single Sign-On authentication for third-party integrations, a fine-grained access control system, built-in 2D and 3D web-based visualization, a rich REST API, and the ability to auto-scale with varying load.
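The interview does not say how the Boss builds its spatial index. As a minimal sketch of one common technique, a Z-order (Morton) curve interleaves the bits of a block's x, y, and z coordinates into a single integer key, so blocks that are close in space tend to get nearby keys. The function names and the 21-bit coordinate width are assumptions chosen for illustration.

```python
from typing import List, Tuple


def morton_encode(x: int, y: int, z: int, bits: int = 21) -> int:
    """Interleave the low `bits` bits of x, y, and z into one integer key."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (3 * i)        # x takes bit positions 0, 3, 6, ...
        key |= ((y >> i) & 1) << (3 * i + 1)    # y takes bit positions 1, 4, 7, ...
        key |= ((z >> i) & 1) << (3 * i + 2)    # z takes bit positions 2, 5, 8, ...
    return key


def morton_decode(key: int, bits: int = 21) -> Tuple[int, int, int]:
    """Invert morton_encode and recover the (x, y, z) block coordinates."""
    x = y = z = 0
    for i in range(bits):
        x |= ((key >> (3 * i)) & 1) << i
        y |= ((key >> (3 * i + 1)) & 1) << i
        z |= ((key >> (3 * i + 2)) & 1) << i
    return x, y, z


def keys_for_region(x_range: Tuple[int, int],
                    y_range: Tuple[int, int],
                    z_range: Tuple[int, int]) -> List[int]:
    """Return the sorted index keys of every block inside a half-open box."""
    return sorted(
        morton_encode(x, y, z)
        for x in range(*x_range)
        for y in range(*y_range)
        for z in range(*z_range)
    )
```

A request for a sub-region then becomes a lookup of a list of keys, for example `keys_for_region((0, 2), (0, 2), (0, 2))` for a box two blocks wide on each axis.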

The Boss is able to auto-scale by leveraging serverless components to provide on-demand capacity. Since users can choose to perform different high bandwidth operations, like data ingest or image downsampling, we needed the Boss to scale to meet each team’s needs and also remain affordable and operate within a fixed budget.

How did your team leverage serverless services when building the data ingest system for the Boss?

During ingest, we move large amounts of data (ranging from terabytes to petabytes) from on-premises temporary storage into the Boss. These data are image stacks in various formats stored locally in different ways. The job of the ingest service is to upload these image files while converting them into the Boss’ internal 3D data representation that allows for more efficient IO and storage.

Since these workflows can be spiky, driven both by researchers’ progress and program timelines, we use serverless services. We do not have to maintain running servers when ingest workflows are not executing, and we can massively scale processing for short periods of time, on demand.

We use Amazon S3 for both the temporary storage of image tiles as they are uploaded and the final storage of compressed, reformatted data. Amazon DynamoDB tracks upload progress and maintains indexes of reformatted data stored in the Boss. Amazon Simple Queue Service (SQS) provides scalable task queues so that our distributed upload client application can reliably transfer data into the Boss. Step Functions manage high-level workflows during ingest, such as populating task queues and downsampling data after upload. After working with Step Functions and finding the native JSON scheme challenging to maintain, we created an open source Python package called Heaviside to manage Step Functions development and use. AWS Lambda provides scalable, on-demand compute to monitor and update ingest indexes, process and index image data as it is loaded into the Boss, and downsample data for visualization after ingest is complete. By leveraging these services, we have been able to achieve sustained ingest rates of over 4 Gbps from a single user while managing our overall monthly costs.
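Step Functions workflows are defined in Amazon States Language, a JSON format. The sketch below builds a two-step definition as a Python dictionary to give a sense of what has to be maintained by hand. It is not Heaviside and does not use Heaviside's syntax; the state names, the helper functions, and the Lambda ARNs passed in are hypothetical.

```python
import json


def build_ingest_workflow(populate_arn: str, downsample_arn: str) -> dict:
    """Build a hypothetical two-step workflow: fill the task queue, then downsample."""
    return {
        "Comment": "Hypothetical ingest workflow (illustration only)",
        "StartAt": "PopulateQueue",
        "States": {
            "PopulateQueue": {
                "Type": "Task",
                "Resource": populate_arn,
                # Retry failed tasks a few times with exponential backoff.
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 5,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }],
                "Next": "Downsample",
            },
            "Downsample": {
                "Type": "Task",
                "Resource": downsample_arn,
                "End": True,
            },
        },
    }


def undefined_targets(definition: dict) -> list:
    """Return state names referenced by StartAt or Next that are never defined."""
    states = definition["States"]
    referenced = [definition["StartAt"]]
    referenced += [state["Next"] for state in states.values() if "Next" in state]
    return [name for name in referenced if name not in states]


def to_json(definition: dict) -> str:
    """Serialize the definition to the JSON text that Step Functions expects."""
    return json.dumps(definition, indent=2)
```

Even at this size, every transition is a string that must match a key elsewhere in the document, which is the kind of bookkeeping `undefined_targets` checks. The resulting JSON string could be supplied as the `definition` argument when creating a state machine through the AWS SDK.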

Thanks for sharing, Dean! Learn more about the system by watching Dean’s session at the AWS Public Sector Summit here.

Prince George’s County Summer Youth Enrichment Program: Creating Apps for Students

The Prince George’s County internship program culminated with the four teams presenting their apps built on Amazon Alexa, Amazon Lex, Echo Dot, and Echo Show. The applications addressed challenges faced by some public school students, such as reading impairments and language barriers.

Learn about the different challenges and solutions below:

Team I – B.A.S.E (Building Amazing Students Efficiently)

Challenge: Within grades K-5, some students struggle with inadequate comprehension of fundamental math skills.

Solution: Using the Amazon Echo Show, this team created an interactive game that helps students learn the fundamentals of math. By creating skills using the Amazon Echo Dot, students are able to access and complete assignments, and become motivated to study. They also created a teacher dashboard, which allows teachers to track the real-time progress of their students.

Team II – T Cubed (Teachers Teaching for Tomorrow)

Challenge: On average, school counselors deal with 350-420 students. On the student side, some students do not know how to apply to colleges, scholarships, or financial aid.

Solution: T Cubed created two skills on the Amazon Echo Dot and Amazon Echo Show that help students explore careers and colleges, and guide them through all aspects of the college application process.

Team III – A Square Education through Voice Automation – WINNING TEAM

Challenge: English for Speakers of Other Languages (ESOL) students often have trouble learning the prerequisites necessary to pass the class.

Solution: This team created four innovative games to help ESOL students better obtain information and language proficiency to pass the test.

Team IV – Simplexa: English. Foreign Language. Made Simple

Challenge: With many different languages spoken by students, there is a need to alleviate the language barrier in the classroom.

Solution: Using Amazon Alexa, this team created a flash card-style game to test the basic English proficiency of ESOL students. This application promotes a more interactive experience.

AWS worked with the teams throughout the five-week internship, providing onsite technical support, training, AWS Developer accounts, and funding. Through the program, mentors and interns used AWS Educate online education accounts to learn Alexa programming and use of AWS cloud services.

“By the end of five weeks, the student interns had successfully created functioning apps for Amazon Alexa, Dot, and Show, taking full advantage of the AWS Cloud to quickly learn, develop, and deploy new applications. The program met its goal to prepare Prince George’s County students as the next generation of the IT workforce,” said Sandra Longs Hasty, Program Director, Prince George’s County.

Congratulations to all participating interns!

Building a Cloud-Specific Incident Response Plan

To help your organization prepare before a security event occurs, AWS provides unique security, visibility, and automation controls. Incident response does not have to be only reactive. With the cloud, your ability to proactively detect, react, and recover can be easier, faster, cheaper, and more effective.

What is an incident?

An incident is an unplanned interruption to an IT service or reduction in the quality of an IT service. Through tools such as AWS CloudTrail, Amazon CloudWatch, AWS Config, and AWS Config Rules, we track, monitor, analyze, and audit events. If these tools identify an event, which is analyzed and qualified as an incident, that “qualifying event” will raise an incident and trigger the incident management process and any appropriate response actions necessary to mitigate the incident.

Set up your AWS environment to prevent a security event

We will walk you through a hypothetical incident response (IR) managed on AWS with the Johns Hopkins University Applied Physics Laboratory (APL).

APL’s scientists, engineers, and analysts serve as trusted advisors and technical experts to the government, ensuring the reliability of complex technologies that safeguard our nation’s security and advance the frontiers of space. APL’s mission requires reliable and elastic infrastructure with agility, while maintaining security, governance, and compliance. APL’s IT cloud team works closely with APL mission areas to provide cloud computing services and infrastructure, and they create the structure for security and incident response monitoring.

Whether it is an IR-4 “Incident Handling” or IR-9 “Information Spillage Response,” the incident response approach from APL below applies to all types of IR.

- Preparation: The preparation step is critical. Train IR handlers to be able to respond to cloud-specific events. Ensure logging is enabled using Amazon Elastic Compute Cloud (Amazon EC2), AWS CloudTrail, and VPC Flow Logs, collect and aggregate the logs centrally for correlation and analysis, and use AWS Key Management Service (KMS) to encrypt sensitive data at rest. You should consider multiple AWS sub accounts for isolation with AWS Organizations. With Organizations, you can create separate accounts along business lines or mission areas which also limits the “blast radius” should a breach occur. For governance, you can apply policies to each of those sub accounts from the AWS master account.

- Identification: Also known as detection. Use behavior-based rules to identify and detect breaches or spills, or rely on notifications about which user accounts and systems need “cleaning up.” Open a case with AWS Support for cross-validation.

- Containment: Use AWS Command Line Interface (CLI) or software development kits for quick containment using pre-defined restrictive security groups. Save the current security group of the host or instance, then isolate the host using restrictive ingress and egress security group rules.

- Investigation: Once isolated, determine and analyze the correlation, threat, and timeline.

- Eradication: Securely wipe the affected files; automation can shorten response times. After the secure wipe, delete any KMS data keys, if used.

- Recovery: Restore network access to original state.

- Follow-up: Verify deletion of data keys (if KMS was used), cross-validate with AWS Support, and report findings and response actions.
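The containment and recovery steps above can be sketched with the AWS SDK for Python (boto3). This is a minimal sketch under stated assumptions, not APL's tooling: the function names and the quarantine security group ID are hypothetical, and the EC2 client is passed in as a parameter so the logic can be exercised without AWS credentials. It relies on the EC2 `describe_instances` and `modify_instance_attribute` calls; for an instance in a VPC, the latter replaces the full set of attached security groups.

```python
def isolate_instance(ec2, instance_id: str, quarantine_sg_id: str) -> list:
    """Save the instance's current security groups, then attach only the
    pre-defined restrictive (quarantine) group. Returns the saved group IDs."""
    response = ec2.describe_instances(InstanceIds=[instance_id])
    instance = response["Reservations"][0]["Instances"][0]
    original_group_ids = [group["GroupId"] for group in instance["SecurityGroups"]]

    # Replace every attached security group with the quarantine group.
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=[quarantine_sg_id])
    return original_group_ids


def restore_instance(ec2, instance_id: str, original_group_ids: list) -> None:
    """Recovery step: put the saved security groups back on the instance."""
    ec2.modify_instance_attribute(InstanceId=instance_id, Groups=list(original_group_ids))


# Example wiring (requires boto3 and credentials; IDs are placeholders):
#   import boto3
#   ec2 = boto3.client("ec2")
#   saved = isolate_instance(ec2, "i-0123456789abcdef0", "sg-quarantine-placeholder")
#   ...investigate and eradicate...
#   restore_instance(ec2, "i-0123456789abcdef0", saved)
```

Returning the saved group IDs keeps the recovery step explicit; in practice they would also be written to a durable location such as the incident ticket, so the original state can be restored even if the responding process exits.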

Watch the Incident Response in the Cloud session from the AWS Public Sector Summit in Washington, DC here for a more detailed discussion with Conrad Fernandes, Cloud Cyber Security Lead, Johns Hopkins University Applied Physics Lab (JHU APL).

Building the Population of Future Engineers with AWS and Girls Who Code

AWS has been working with Girls Who Code to support their Summer Immersion Program, a seven-week summer camp where 10th- and 11th-grade girls learn coding skills and are introduced to cloud technology.

Over the summer, AWS worked with this growing population of future engineers by hosting an introduction to AWS course and workshop at four Girls Who Code summer camps in San Ramon, Washington, D.C., and two New York City locations.

The students were energetic and curious to learn about cloud computing, building applications in the cloud, and how AWS can help them power their summer projects. As part of the program, each student was given an AWS Educate Starter Account, which they can use to begin experimenting with AWS.

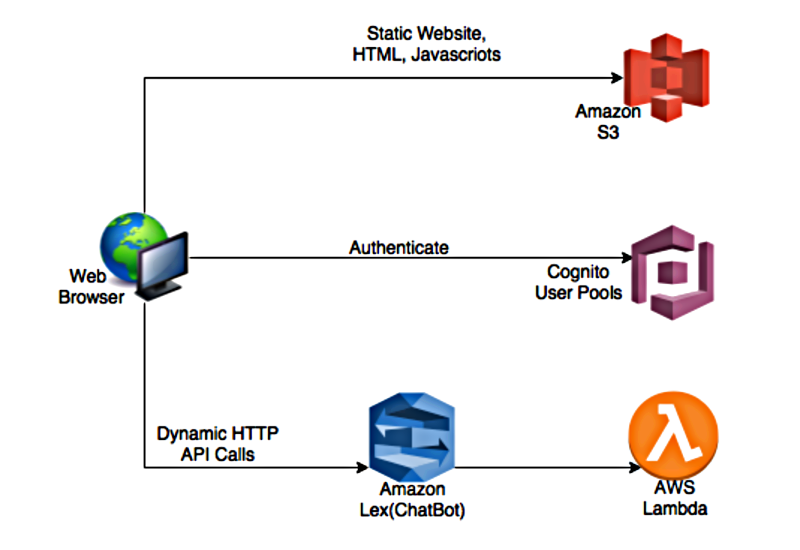

Their project was to build a static webpage and an application called Moviepedia. The students followed a series of steps to build their webpage, hosted on Amazon Simple Storage Service (Amazon S3). Once they had uploaded content and created the webpage, they integrated a chatbot that automatically retrieves information about any movie. The bot is written in Node.js and uses TMDb to query movies and return the desired results. The application architecture uses Amazon Lex, AWS Lambda, Amazon S3, and Amazon Cognito.
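As a rough illustration of the Lambda side of such a chatbot, the sketch below handles an Amazon Lex (V1) fulfillment event and returns a `Close` response. It is written in Python for brevity, whereas the students' bot was written in Node.js. The slot name `MovieTitle`, the `lookup_movie` helper, and its canned catalog are hypothetical; a real bot would query TMDb at that point.

```python
# Hypothetical stand-in for a TMDb query, so the sketch runs offline.
PLACEHOLDER_CATALOG = {
    "example movie": "Example Movie: a placeholder summary used for illustration.",
}


def lookup_movie(title: str):
    """Return a summary for the title, or None if it is not in the catalog."""
    return PLACEHOLDER_CATALOG.get(title.strip().lower())


def close(message: str, fulfilled: bool) -> dict:
    """Build a Lex V1 'Close' response carrying a plain-text message."""
    return {
        "dialogAction": {
            "type": "Close",
            "fulfillmentState": "Fulfilled" if fulfilled else "Failed",
            "message": {"contentType": "PlainText", "content": message},
        }
    }


def lambda_handler(event: dict, context) -> dict:
    """Read the movie title slot from the Lex event and answer with a summary."""
    slots = event.get("currentIntent", {}).get("slots") or {}
    title = slots.get("MovieTitle")  # hypothetical slot name
    if not title:
        return close("I did not catch the movie title.", fulfilled=False)

    summary = lookup_movie(title)
    if summary is None:
        return close("Sorry, I could not find " + title + ".", fulfilled=False)
    return close(summary, fulfilled=True)
```

Keeping the lookup behind a single function means the data source can be swapped for a real movie API without touching the Lex response handling.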

Best of luck to the participants in their journeys to become engineers. And thank you Girls Who Code for giving us this opportunity!

Learn more about the future of inclusive tech at #WePowerTech.

Kentucky Cloud Career Pathways and AWS Educate Prepare Students for Cloud Careers

To prepare students with the skills needed to address the massive growth and job opportunities in cloud, cyber security, and computer science, Amazon Web Services has collaborated with the Kentucky Education and Workforce Development Cabinet to create Kentucky Cloud Career Pathways.

This statewide collaboration among government, education, nonprofits, and the private sector provides blended and online learning, internships, apprenticeships, jobs, and other opportunities in cloud computing for Kentucky’s K-12 students and adult learners.

“Cloud computing provides not only the opportunity to create new companies with little or no capital needed, but also new career pathways for citizens,” said Teresa Carlson, Vice President Worldwide Public Sector, AWS. “Since launching our AWS Educate program, which helps educators and students use real-world technology in the classroom to prepare students to enter the cloud workforce, we’ve seen students around the world jump at the opportunity to get hands-on cloud experience. We are thrilled to be a part of Kentucky’s drive to develop a cloud-enabled workforce, and hope that other states look to this model as an inspiration.”

As part of the program, AWS Educate will steer Kentucky students to private sector employers through the AWS Educate Job Board, which includes computer science jobs and internships from top technology companies.

AWS Educate is also working in partnership with Project Lead the Way (PLTW) to provide new cloud-based curriculum for schools and short videos for students showcasing various cloud computing careers. For teachers and faculty, new professional development opportunities will be provided starting in summer 2018 to help teachers bring cloud computing skills to their students.

Learn more about AWS Educate and how students, veterans, educators and hiring companies can access cloud content, training, collaboration tools, the job board, and AWS technology.

AWS EdStart Fueling the EdTech Startup Community

To help startups build innovative teaching and learning solutions using the AWS Cloud, AWS, along with the National Science Foundation (NSF), Education Technology Industry Network (ETIN), and AT&T, sponsored the Start-up Pavilion and Pitch Fest at ISTE 2017. The EdTech Start-up Pavilion and Pitch Fest offers up-and-coming companies the opportunity to participate at ISTE. Pitch Fest contestants and winners received AWS Promotional Credits to accelerate their businesses and enhance the education industry.

AWS EdStart is an AWS program committed to the growth of educational technology (EdTech) companies who are building the next generation of online learning, analytics, and campus management solutions on the AWS Cloud.

Congratulations to the winners of the Pitch Fest: BrainCo and CodeSpark.

“BrainCo specializes in Brain Machine Interface (BMI) wearables and our products are changing the way we interact with the world,” said Max Newlon, research scientist, BrainCo.

BrainCo will use AWS to enhance its platform’s ability to use Machine Learning (ML) and also to scale globally as BrainCo sees an increase in demand for their product and solutions in international markets.

“AWS is where all of our crucial customer data, progress, and creative output is stored. Since kids are designing and coding thousands of games a day on our platform in every country in the world, it’s critical that our backend infrastructure be reliable, fast, and easy to deploy. AWS is a critical component of our tech stack and allows a small team to have global ambitions,” said Grant Hosford, Founder, CodeSpark.

CodeSpark will use their AWS Promotional Credits to host their player database, website, and app data for millions of players worldwide.

Startups and established EdTechs, like Remind, Instructure, Desire2Learn (D2L), and Ellucian, are not only scaling on AWS but also accelerating their market share through the AWS Education Competency program. Learn more.

Unlocking Healthcare and Life Sciences Research with AWS

From introductory material to in-depth architectures, the AWS Public Sector Summit featured sessions relevant to healthcare and life science researchers.

The full set of session videos is available here, along with the matching slides. In this post, we recap the healthcare and life sciences sessions with a focus on our customers, such as the American Heart Association, the NIH National Institute of Allergy and Infectious Diseases, and the National Marrow Donor Program, and how they use the AWS Cloud to unlock the value of data and share insights.

Harmonize, Search, Analyze, and Share Scientific Datasets on AWS

Cardiovascular researchers face a challenge: how to make multi-generational clinical research studies more broadly accessible for discovery and analysis than they are today. Many datasets have been created by different people at different times and don’t conform to a common standard. With varying naming conventions, units of measurement, and categories, datasets can have data quality issues.
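To make the harmonization problem concrete, here is a minimal sketch in plain Python that maps differing column names onto one canonical name, converts units, and normalizes category codes. It does not reflect how any particular platform is implemented; the field names, aliases, and category codes are invented for illustration.

```python
# Hypothetical aliases seen across studies, mapped to one canonical field each.
ALIASES = {
    "weight_kg": "weight_kg",
    "wt_kg": "weight_kg",
    "weight_lb": "weight_lb",
    "wt_lbs": "weight_lb",
    "sex": "sex",
    "gender": "sex",
}

# Hypothetical category codes, mapped to one vocabulary.
SEX_CODES = {"m": "male", "male": "male", "f": "female", "female": "female"}

LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound in kilograms


def harmonize(record: dict) -> dict:
    """Return a copy of the record using canonical names, units, and categories."""
    out = {}
    for name, value in record.items():
        canonical = ALIASES.get(name.strip().lower())
        if canonical is None:
            continue  # this sketch simply drops fields it does not recognize
        if canonical == "weight_lb":
            out["weight_kg"] = round(float(value) * LB_TO_KG, 1)
        elif canonical == "weight_kg":
            out["weight_kg"] = round(float(value), 1)
        elif canonical == "sex":
            out["sex"] = SEX_CODES.get(str(value).strip().lower(), "unknown")
    return out
```

For example, `harmonize({"WT_LBS": "150", "Gender": "F"})` and `harmonize({"weight_kg": 68, "sex": "female"})` produce records with the same keys and units, which is what makes them searchable and comparable side by side.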

To support dataset harmonization, search, analysis, and sharing of results and insights, the American Heart Association created the AHA Precision Medicine Platform using a combination of managed and serverless services such as Jupyter Notebooks and Apache Spark on Amazon EMR, Amazon Elasticsearch Service, Amazon S3, Amazon Athena, and Amazon QuickSight. AHA and AWS have worked together to implement these techniques to bring together researchers and practitioners from around the globe to access, analyze, and share volumes of cardiovascular and stroke data. They are working to accelerate research and generate evidence around the care of patients at risk of cardiovascular disease, the number one killer in the United States and a leading global health threat.

Watch the Harmonize, Search, Analyze, and Share Scientific Datasets on AWS video with Dr. Taha Kass-Hout, representing the American Heart Association (AHA), to learn more about datasets on AWS and this video on how AHA leveraged Amazon Alexa and Lex chat bots as part of a new initiative to engage communities and individuals to promote better heart health by easy voice-enabled tracking of activities and diet.

Next-Generation Medical Analysis

The NIH National Institute of Allergy and Infectious Diseases is working to make microbial genetics data available to microbiome researchers. They developed Nephele, a platform that allows researchers to perform large-scale analysis of data. Nephele uses standard infrastructure services, such as Amazon EC2 and Amazon S3, but also integrates serverless technologies like AWS Lambda for a cost-effective control plane and resource provisioning.

Similarly, Dr. Caleb Kennedy from the National Marrow Donor Program defined a system for collecting vital information across a diverse set of participating clinics using standard data formats. They are looking to transform transplantation healthcare by integrating even more data into the system.

Watch the Next-Generation Medical Analysis video here to learn about how technology is enabling disruptive innovation in biomedical research and care.

IoT and AI Services in Healthcare

To help support the healthcare industry, AWS has Artificial Intelligence (AI) and Internet of Things (IoT) services enabling transformative new capabilities in healthcare. Learn more about IoT and AI Services in Healthcare and how these services can be applied in different scenarios. For instance, one AWS-savvy father is using Amazon Polly, Lex, and IoT buttons to create a verbal assistant for his autistic son.

Watch more of our sessions from the AWS Public Sector Summit here and learn more about genomics in the cloud at: https://aws.amazon.com/public-datasets/

AWS Joins the U.S. Department of State and the Unreasonable Group to Support the UN Sustainable Development Goals

World leaders at the United Nations agreed on a universal set of goals and indicators that would bring government, civil society, and the private sector together to end extreme poverty, inequality, and climate change by 2030.

Technology and cloud-based solutions will be a critical part of achieving the Global Goals for Sustainable Development (SDGs). AWS has teamed up with the Unreasonable Group and the U.S. Department of State’s Office of Global Partnerships to support the first cohort of startups participating in the Unreasonable Goals Sustainable Development Goals Accelerator program.

This program is focused on accelerating the achievement of the SDGs by bringing together 16 innovators from around the world who have developed highly scalable entrepreneurial solutions, each one positioned to solve one of the Global Goals.

With a commitment to making the world a better place, AWS experts spent three days on-site at the Aspen Institute’s Wye River resort with a team of corporate innovators, government influencers, and entrepreneurs. AWS advised and coached these business leaders on a range of topics including:

- How to leverage the AWS Cloud to drive better outcomes

- Strategies for selling to governments and nonprofits

- Options for distribution through the wider Amazon community

Participating businesses in the Accelerator will be enrolled in the AWS Activate program for startups, which includes $15,000 in AWS Promotional Credits as well as access to training.

Prince George’s County Teaches Students to Develop Apps Using Amazon Alexa

Prince George’s County created a summer internship for 20+ underserved high school and college students, focused on teaching the students how to develop apps using Amazon Alexa, Amazon Lex, Echo Dot, and Echo Show.

Starting this week, the 24 interns will work in teams of six to develop an application based on Amazon Alexa, Echo Dot, and Echo Show, utilizing AWS Lambda and other AWS cloud services. The applications will address challenges faced by some public school students, such as reading impairments. The teams will be led by six college students (all computer science majors) acting as mentors and advisors.

The teams will have five weeks to develop the program with the goal to roll the winning app out in schools within the county. This is a competition-based internship, with the winning application selected by a panel including the County Executive, County CAO, a School Board Member, an AWS representative, and others. The winning team will also have the opportunity to publish the skills on the Amazon Alexa site.

“This is the best part of my job working with the young adults and watching the light bulb come on and seeing the growth in them as well as confidence as professionals. The fact that we are using a concept that they can personally connect with is a winning strategy. When I shared with the teams that the device was the Amazon Alexa this year, they were so excited and that same day the brainstorming process was in motion. I can’t wait for everyone to see how amazing my students are and what creative ideas come from these future IT Professionals,” said Sandra Longs Hasty, Program Director, Prince George’s County.

AWS Educate, Amazon’s global initiative to provide students and educators with the resources needed to accelerate cloud-related learning endeavors, is offering developer account credits and online education accounts through mentors for the interns as part of the program.

Good luck to all of the interns!

Learn more about AWS Educate here and how we work to build skills, get engaged with the community, and inspire the next generation here.
