Category: Amazon Glacier

AWS Storage Update – S3 & Glacier Price Reductions + Additional Retrieval Options for Glacier

Back in 2006, we launched S3 with a revolutionary pay-as-you-go pricing model, with an initial price of 15 cents per GB per month. Over the intervening decade, we reduced the price per GB by 80%, launched S3 in every AWS Region, and enhanced the original one-size-fits-all model with user-driven features such as web site hosting, VPC integration, and IPv6 support, while adding new storage options including S3 Infrequent Access.

Because many AWS customers archive important data for legal, compliance, or other reasons and reference it only infrequently, we launched Glacier in 2012, and then gave you the ability to transition data between S3, S3 Infrequent Access, and Glacier by using lifecycle rules.

Today I have two big pieces of news for you: we are reducing the prices for S3 Standard Storage and for Glacier storage. We are also introducing additional retrieval options for Glacier.

S3 & Glacier Price Reduction

As long-time AWS customers already know, we work relentlessly to reduce our own costs, and to pass the resulting savings along in the form of a steady stream of AWS Price Reductions.

We are reducing the per-GB price for S3 Standard Storage in most AWS regions, effective December 1, 2016. The bill for your December usage will automatically reflect the new, lower prices. Here are the new prices for Standard Storage:

| Regions | 0-50 TB ($ / GB / Month) | 51-500 TB ($ / GB / Month) | 500+ TB ($ / GB / Month) |
|---|---|---|---|
| … (reductions range from 23.33% to 23.64%) | $0.0230 | $0.0220 | $0.0210 |
| … (reductions range from 20.53% to 21.21%) | $0.0260 | $0.0250 | $0.0240 |
| … (reductions range from 24.24% to 24.38%) | $0.0245 | $0.0235 | $0.0225 |
| … (reductions range from 16.36% to 28.13%) | $0.0250 | $0.0240 | $0.0230 |

As you can see from the table above, we are also simplifying the pricing model by consolidating six pricing tiers into three new tiers.
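The post does not spell out how the three tiers combine. Most AWS tiered pricing charges each GB at the rate of the tier it falls into, and the Python sketch below assumes that model for the $0.0230 / $0.0220 / $0.0210 row. It also assumes 1 TB = 1,024 GB and ignores request and data transfer charges. The helper name is hypothetical.

```python
# Monthly S3 Standard storage cost for the $0.0230 / $0.0220 / $0.0210 row.
# Assumptions: 1 TB = 1,024 GB; each GB is billed at the rate of its tier;
# request and data transfer charges are ignored.

TB = 1024  # GB per TB (assumed)

# (upper bound of the tier in GB, price in $/GB/month); None = no upper bound
TIERS = [
    (50 * TB, 0.0230),
    (500 * TB, 0.0220),
    (None, 0.0210),
]


def monthly_storage_cost(stored_gb: float, tiers=TIERS) -> float:
    """Sum the charge for the slice of storage that falls into each tier."""
    cost = 0.0
    lower = 0.0
    for upper, price in tiers:
        if stored_gb <= lower:
            break
        top = stored_gb if upper is None else min(stored_gb, upper)
        cost += (top - lower) * price
        if upper is None:
            break
        lower = upper
    return cost


if __name__ == "__main__":
    # 100 TB: 50 TB at $0.0230 plus 50 TB at $0.0220
    print(f"${monthly_storage_cost(100 * TB):,.2f}")  # $2,304.00
```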

We are also reducing the price of Glacier storage in most AWS Regions. For example, you can now store 1 GB for 1 month in the US East (Northern Virginia), US West (Oregon), or EU (Ireland) Regions for just $0.004 (less than half a cent), a 43% decrease. For reference purposes, this amount of storage cost $0.010 when we launched Glacier in 2012, and $0.007 after our last Glacier price reduction (a 30% decrease).
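The quoted percentages follow directly from the three Glacier price points. A quick Python check (the helper name is just for illustration):

```python
# Check the percentage decreases quoted for Glacier storage
# ($/GB/month in US East (N. Virginia), US West (Oregon), EU (Ireland)).

def percent_reduction(old_price: float, new_price: float) -> float:
    """Percentage decrease from old_price to new_price."""
    return (old_price - new_price) / old_price * 100


LAUNCH_2012 = 0.010
PREVIOUS = 0.007
NEW = 0.004

if __name__ == "__main__":
    print(f"{percent_reduction(LAUNCH_2012, PREVIOUS):.0f}%")  # 30%
    print(f"{percent_reduction(PREVIOUS, NEW):.0f}%")          # 43%
    print(f"{percent_reduction(LAUNCH_2012, NEW):.0f}%")       # 60% since launch
```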

The lower pricing is a direct result of the scale that comes about when our customers trust us with trillions of objects, but it is just one of the benefits. Based on the feedback that I get when we add new features, the real value of a cloud storage platform is the rapid, steady evolution. Our customers often tell me that they love the fact that we anticipate their needs and respond with new features accordingly.

New Glacier Retrieval Options

Many AWS customers use Amazon Glacier as the archival component of their tiered storage architecture. Glacier allows them to meet compliance requirements (either organizational or regulatory) while still letting them use any desired amount of cloud-based compute power to process and extract value from the data.

Today we are enhancing Glacier with two new retrieval options for your Glacier data. You can now pay a little bit more to expedite your data retrieval. Alternatively, you can indicate that speed is not of the essence and pay a lower price for retrieval.

We launched Glacier with a pricing model for data retrieval that was based on the amount of data that you had stored in Glacier and the rate at which you retrieved it. While this was an accurate reflection of our own costs to provide the service, it was somewhat difficult to explain. Today we are replacing the rate-based retrieval fees with simpler per-GB pricing.

Our customers in the Media and Entertainment industry archive their TV footage to Glacier. When an urgent situation calls for them to retrieve a specific piece of footage, minutes count and they want fast, cost-effective access to the footage. Healthcare customers are looking for rapid, “while you wait” access to archived medical imagery and genome data; photo archives and companies selling satellite data turn out to have similar requirements. On the other hand, some customers have the ability to plan their retrievals ahead of time, and are perfectly happy to get their data in 5 to 12 hours.

Taking all of this into account, you can now select one of the following options for retrieving your data from Glacier (the original rate-based retrieval model no longer applies):

Standard retrieval is the new name for what Glacier already provides, and is the default for all API-driven retrieval requests. You get your data back in a matter of hours (typically 3 to 5), and pay $0.01 per GB along with $0.05 for every 1,000 requests.

Expedited retrieval addresses the need for “while you wait” access. You can get your data back quickly, with retrieval typically taking 1 to 5 minutes. If you store (or plan to store) more than 100 TB of data in Glacier and need to make infrequent, yet urgent requests for subsets of your data, this is a great model for you (if you have less data, S3’s Infrequent Access storage class can be a better value). Retrievals cost $0.03 per GB and $0.01 per request.

Expedited retrievals generally take between 1 and 5 minutes, depending on overall demand. If you need to get your data back in this time frame even in rare situations where demand is exceptionally high, you can provision retrieval capacity. Once you have done this, all Expedited retrievals will automatically be served via your provisioned capacity. Each unit of provisioned capacity costs $100 per month and ensures that you can perform at least 3 Expedited retrievals every 5 minutes, with up to 150 MB/second of retrieval throughput.

Bulk retrieval is a great fit for planned or non-urgent use cases, with retrieval typically taking 5 to 12 hours at a cost of $0.0025 per GB (75% less than for Standard Retrieval) along with $0.025 for every 1,000 requests. Bulk retrievals are perfect when you need to retrieve large amounts of data within a day, and are willing to wait a few extra hours in exchange for a very significant discount.
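The Python sketch below compares the three options for a given batch of retrievals. It applies the per-GB and per-request prices quoted above. The helper names and the 500 GB / 100 request job are hypothetical. Data transfer charges and provisioned capacity ($100 per unit per month) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievalOption:
    per_gb: float        # $ per GB retrieved
    per_request: float   # $ per retrieval request
    typical_time: str


# Prices as quoted in this post; data transfer and provisioned capacity excluded.
OPTIONS = {
    "Expedited": RetrievalOption(0.03, 0.01, "1-5 minutes"),
    "Standard": RetrievalOption(0.01, 0.05 / 1000, "3-5 hours"),
    "Bulk": RetrievalOption(0.0025, 0.025 / 1000, "5-12 hours"),
}


def retrieval_cost(option: str, gb: float, requests: int) -> float:
    """Per-GB charge plus per-request charge for one batch of retrievals."""
    o = OPTIONS[option]
    return gb * o.per_gb + requests * o.per_request


if __name__ == "__main__":
    # Hypothetical job: 500 GB spread over 100 archive retrievals
    for name, opt in OPTIONS.items():
        print(f"{name:9} ${retrieval_cost(name, 500, 100):8.4f}  ({opt.typical_time})")
```

For this job the per-GB charge dominates, so Bulk comes out at roughly a quarter of the Standard price, consistent with the 75% figure above.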

If you do not specify a retrieval option when you call InitiateJob to retrieve an archive, a Standard Retrieval will be initiated. Your existing jobs will continue to work as expected, and will be charged at the new rate.
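The retrieval option is passed in the InitiateJob job parameters as a Tier field. The Python sketch below builds those parameters. The helper name is hypothetical. The field names (Type, ArchiveId, Tier, Description) reflect my understanding of the Glacier API, so please confirm them against the API reference before relying on them.

```python
from typing import Optional

VALID_TIERS = {"Expedited", "Standard", "Bulk"}


def archive_retrieval_params(archive_id: str,
                             tier: Optional[str] = None,
                             description: Optional[str] = None) -> dict:
    """Build InitiateJob job parameters for an archive retrieval.

    Leaving tier as None omits the Tier field; per the post, Glacier then
    performs a Standard retrieval.
    """
    params = {"Type": "archive-retrieval", "ArchiveId": archive_id}
    if tier is not None:
        if tier not in VALID_TIERS:
            raise ValueError(f"unknown retrieval tier: {tier!r}")
        params["Tier"] = tier
    if description:
        params["Description"] = description
    return params


if __name__ == "__main__":
    # Hypothetical archive ID; with boto3 this dict would be passed as
    # glacier.initiate_job(vaultName="my-vault", jobParameters=params)
    params = archive_retrieval_params("EXAMPLE-ARCHIVE-ID", tier="Bulk")
    print(params)
```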

To learn more, read about Data Retrieval in the Glacier FAQ.

As always, I am thrilled to be able to share this news with you, and I hope that you are equally excited!

If you want to learn more, we have a webinar coming up on December 12th.

— Jeff;

Amazon Glacier Update – Third-Party SEC 17a-4(f) Assessment for Vault Lock

Amazon Glacier is designed to store any amount of archival or backup data with high durability. Amazon Glacier is a very cost-effective solution (as low as $0.007 per gigabyte per month) for data that is infrequently accessed, and where a retrieval time of several hours is acceptable.

Earlier this year we introduced a new Amazon Glacier compliance feature called Vault Lock (see my post, Create Write-Once-Read-Many Archive Storage with Amazon Glacier, to learn more). As I wrote at the time, this feature allows you to lock your Amazon Glacier vaults with compliance controls that are designed (per SEC Rule 17a-4(f)) to help meet the requirement that “electronic records must be preserved exclusively in a non-rewritable and non-erasable format.”

That announcement brought Amazon Glacier to the attention of AWS customers in the financial services industry. Large banks, broker-dealers, and securities clearinghouses have all expressed interest in this important new feature.


New Third-Party Assessment Report

Today I am pleased to be able to announce that we have received a third-party assessment report that speaks to Amazon Glacier’s ability to help meet the requirements of SEC 17a-4(f).

This assessment is provided by Cohasset Associates, a highly respected consulting firm with more than 40 years of experience and knowledge related to the legal, technical, and operational issues associated with the records management practices of companies regulated by the US SEC (Securities and Exchange Commission) and the US CFTC (Commodity Futures Trading Commission).

The full assessment (which is actually fairly interesting) provides a detailed look at the logic that Amazon Glacier uses to create immutable policies, along with a step-by-step examination and exposition of the controls that are used to protect Amazon Glacier vaults for compliance use cases once they have been locked (again, more information on this procedure can be found in the blog post that I referenced above).

View the Amazon Glacier with Vault Lock Assessment to learn more. For information about other compliance features, visit the AWS Compliance Center.

— Jeff;

AWS Storage Update – New Lower Cost S3 Storage Option & Glacier Price Reduction

Like every AWS service team, the Amazon S3 team is always listening to customers in order to better understand their needs. After studying a lot of feedback and doing some analysis on access patterns over time, the team saw an opportunity to provide a new storage option that would be well-suited to data that is accessed infrequently.

The team found that many AWS customers store backups or log files that are almost never read. Others upload shared documents or raw data for immediate analysis. These files generally see frequent activity right after upload, with a significant drop-off as they age. In most cases, this data is still very important, so durability is a requirement. Although this storage model is characterized by infrequent access, customers still need quick access to their files, so retrieval performance remains as critical as ever.

New Infrequent Access Storage Option

In order to meet the needs of this group of customers, we are adding a new storage class for data that is accessed infrequently. The new S3 Standard – Infrequent Access (Standard – IA) storage class offers the same high durability, low latency, and high throughput as S3 Standard. You now have the choice of three S3 storage classes (Standard, Standard – IA, and Glacier) that are designed to offer 99.999999999% (eleven nines) of durability. Standard – IA has an availability SLA of 99%.

This new storage class inherits all of the existing S3 features that you know (and hopefully love) including security and access management, data lifecycle policies, cross-region replication, and event notifications.

Prices for Standard – IA start at $0.0125 / gigabyte / month (one and one-quarter US pennies), with a 30 day minimum storage duration for billing, and a $0.01 / gigabyte charge for retrieval (in addition to the usual data transfer and request charges). Further, for billing purposes, objects that are smaller than 128 kilobytes are charged for 128 kilobytes of storage. We believe that this pricing model will make this new storage class very economical for long-term storage, backups, and disaster recovery, while still allowing you to quickly retrieve older data if necessary.
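The two billing minimums interact: a small object deleted early is billed as if it were 128 KB and stored for 30 days. The Python sketch below models that. It assumes 1 KB = 1,024 bytes and 1 GB = 1,024³ bytes, and it pro-rates storage by day over a 30-day month. That metering is a simplification of my own, not something this post specifies. The helper names are hypothetical.

```python
KB = 1024
GB = 1024 ** 3

MIN_BILLABLE_BYTES = 128 * KB   # smaller objects are billed as 128 KB
MIN_BILLABLE_DAYS = 30          # minimum storage duration for billing
STORAGE_PRICE = 0.0125          # $ per GB per month
RETRIEVAL_PRICE = 0.01          # $ per GB retrieved


def ia_storage_charge(size_bytes: int, days_stored: int, days_in_month: int = 30) -> float:
    """Storage charge for one Standard - IA object (simplified day-based proration)."""
    billed_gb = max(size_bytes, MIN_BILLABLE_BYTES) / GB
    billed_days = max(days_stored, MIN_BILLABLE_DAYS)
    return billed_gb * STORAGE_PRICE * billed_days / days_in_month


def ia_retrieval_charge(size_bytes: int) -> float:
    """Per-GB retrieval fee (request and transfer charges not included)."""
    return size_bytes / GB * RETRIEVAL_PRICE


if __name__ == "__main__":
    print(ia_storage_charge(GB, 60))  # 1 GB kept for 60 days
    # A 10 KB object deleted after 5 days costs the same as 128 KB for 30 days
    print(ia_storage_charge(10 * KB, 5) == ia_storage_charge(128 * KB, 30))  # True
```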

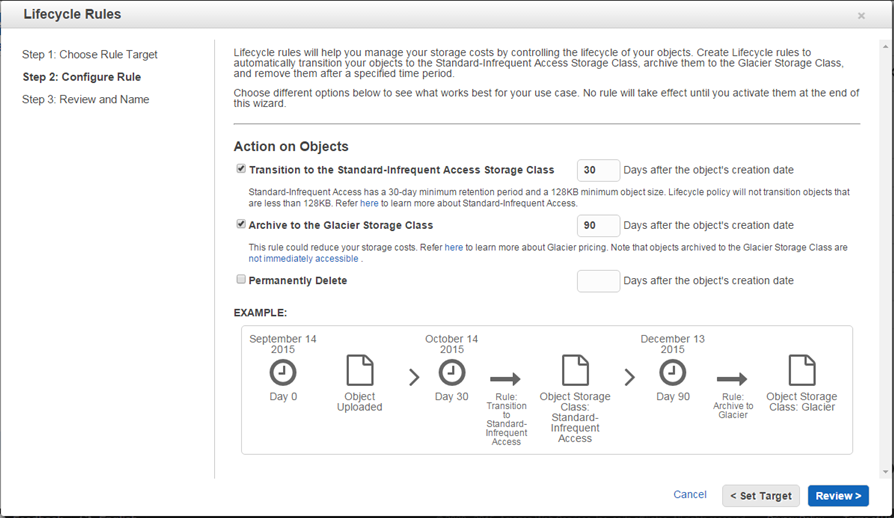

You can define data lifecycle policies that move data between Amazon S3 storage classes over time. For example, you could store freshly uploaded data using the Standard storage class, move it to Standard – IA 30 days after it has been uploaded, and then to Amazon Glacier after another 60 days have gone by.
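In an S3 lifecycle rule, transition Days are counted from object creation. "Another 60 days" after the move to Standard – IA therefore becomes day 90. The Python sketch below expresses the rule as a dictionary, shaped the way I understand the current PutBucketLifecycleConfiguration API expects it. The rule ID and bucket name are hypothetical.

```python
import json

# Days are counted from object creation, so "30 days to Standard - IA, then
# another 60 days to Glacier" becomes transitions at day 30 and day 90.
rule = {
    "ID": "standard-to-ia-to-glacier",   # hypothetical rule name
    "Filter": {"Prefix": ""},            # empty prefix: apply to every object
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 30 + 60, "StorageClass": "GLACIER"},
    ],
}

lifecycle_configuration = {"Rules": [rule]}

if __name__ == "__main__":
    # With boto3 (not called here):
    # s3.put_bucket_lifecycle_configuration(
    #     Bucket="my-bucket", LifecycleConfiguration=lifecycle_configuration)
    print(json.dumps(lifecycle_configuration, indent=2))
```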

The new Standard – IA storage class is simply one of several attributes associated with each S3 object. Because objects stay in the same S3 bucket and are accessed from the same URLs when they transition to Standard – IA, you can start using it immediately through lifecycle policies. You can add a policy and reduce your S3 costs right away, without changing your application code or affecting its performance.

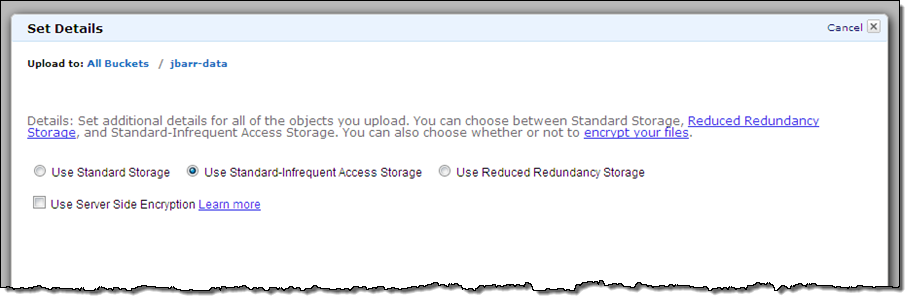

You can choose this new storage class (which is available today in all AWS regions) when you upload new objects via the AWS Management Console:

You can set up lifecycle rules for each of your S3 buckets. Here’s how you would establish the policies that I described above:

These functions are also available through the AWS Command Line Interface (CLI), the AWS Tools for Windows PowerShell, the AWS SDKs, and the S3 API.

Here’s what some of our early users have to say about S3 Standard – Infrequent Access:

Don MacAskill, CEO & Chief Geek, SmugMug

Brian Kaiser, CTO, Hudl

See the S3 Pricing page for complete pricing information on this new storage class.

Reduced Price for Glacier Storage

Effective September 1, 2015, we are reducing the price for data stored in Amazon Glacier from $0.01 / gigabyte / month to $0.007 / gigabyte / month. As usual, this price reduction will take effect automatically and you need not do anything in order to benefit from it. This price is for the US East (Northern Virginia), US West (Oregon), and EU (Ireland) regions; take a look at the Glacier Pricing page for full information on pricing in other regions.

— Jeff;

Create Write-Once-Read-Many Archive Storage with Amazon Glacier

Many AWS customers use Amazon Glacier for long-term storage of their mission-critical data. They benefit from Glacier’s durability and low cost, along with the ease with which they can integrate it into their existing backup and archiving regimen. To use Glacier, you create vaults and populate them with archives.

In certain industries, long-term records retention is mandated by regulations or compliance rules, sometimes for periods of up to seven years. For example, in the financial services industry (including large banks, broker-dealers, and securities clearinghouses), SEC Rule 17a-4(f) specifies that “electronic records must be preserved exclusively in a non-rewriteable and non-erasable format.” Other industries are tasked with meeting similar requirements when they store mission-critical information.

Lock Your Vaults

Today we are introducing a new Glacier feature that allows you to lock your vault with a variety of compliance controls that are designed to support this important records retention use case. You can now create a Vault Lock policy on a vault and lock it down. Once locked, the policy cannot be overwritten or deleted. Glacier will enforce the policy and will protect your records according to the controls (including a predefined retention period) specified therein.

You cannot change the Vault Lock policy after you lock it. However, you can still alter and configure the access controls that are not related to compliance by using a separate vault access policy. For example, you can grant read access to business partners or designated third parties (as sometimes required by regulation).

The Locking Process

Because the locking policy cannot be changed or removed after it is locked down (in order to assure compliance), we have implemented a two-step locking process in order to give you an opportunity to test it before locking the vault down for good. Here’s what you need to do:

1. Call InitiateVaultLock and pass along your Vault Lock retention policy. Glacier will install the policy and set the vault’s lock state to InProgress, and then return a unique LockID (keep this value around for 24 hours, after which it will expire).
2. Test your retention policy thoroughly. The vault will behave as though subject to the policy during the testing period. During this period you should thoroughly test the operations that are prohibited by the policy and ensure that they fail as expected. For example, you will probably want to test DeleteArchive and DeleteVault attempts from the root account, all IAM users, and from any users with cross-account access. You should also let the 24 hour testing period go by and then test that users with proper permissions can delete the archives.
3. If you are satisfied that the policy works as expected, call CompleteVaultLock with the LockID that you dutifully saved in step 1, dust off your hands and stroll off into the sunset. The vault’s state will be set to Locked, and the policy will remain in effect until the heat death of the universe.
4. If the policy does not work as expected, call AbortVaultLock during the 24 hour window (or wait until it passes) in order to remove the in-progress policy. Refine the policy and start over at step 1.
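The two-step process behaves like a small state machine. The vault sits in InProgress for at most 24 hours, then ends up either Locked or back to unlocked. The Python simulation below models those rules locally and makes no AWS calls. The class is hypothetical, and its method names merely mirror the InitiateVaultLock, CompleteVaultLock, and AbortVaultLock operations.

```python
import secrets
from datetime import datetime, timedelta
from typing import Optional

LOCK_ID_LIFETIME = timedelta(hours=24)


class SimulatedVault:
    """Local model of the Vault Lock workflow; it makes no AWS calls."""

    def __init__(self) -> None:
        self.lock_state: Optional[str] = None   # None, "InProgress" or "Locked"
        self.lock_policy: Optional[dict] = None
        self._lock_id: Optional[str] = None
        self._initiated_at: Optional[datetime] = None

    def _clear_in_progress(self) -> None:
        self.lock_state = None
        self.lock_policy = None
        self._lock_id = None
        self._initiated_at = None

    def _expire(self, now: datetime) -> None:
        # An in-progress lock that is not completed within 24 hours lapses.
        if self.lock_state == "InProgress" and now - self._initiated_at >= LOCK_ID_LIFETIME:
            self._clear_in_progress()

    def initiate_vault_lock(self, policy: dict, now: datetime) -> str:
        """Step 1: install the policy, enter InProgress, return a LockID."""
        self._expire(now)
        if self.lock_state is not None:
            raise RuntimeError(f"vault lock already {self.lock_state}")
        self.lock_policy = policy
        self.lock_state = "InProgress"
        self._lock_id = secrets.token_hex(16)
        self._initiated_at = now
        return self._lock_id

    def complete_vault_lock(self, lock_id: str, now: datetime) -> None:
        """Step 3: make the policy permanent, given the LockID from step 1."""
        self._expire(now)
        if self.lock_state != "InProgress" or lock_id != self._lock_id:
            raise RuntimeError("no in-progress lock matches this LockID")
        self.lock_state = "Locked"

    def abort_vault_lock(self, now: datetime) -> None:
        """Step 4: remove an in-progress policy; a Locked policy stays forever."""
        self._expire(now)
        if self.lock_state == "Locked":
            raise RuntimeError("a locked policy cannot be removed")
        self._clear_in_progress()


if __name__ == "__main__":
    start = datetime(2015, 7, 1, 9, 0)
    vault = SimulatedVault()
    lock_id = vault.initiate_vault_lock({"Version": "2012-10-17", "Statement": []}, start)
    # ... test prohibited operations here, inside the 24 hour window ...
    vault.complete_vault_lock(lock_id, start + timedelta(hours=2))
    print(vault.lock_state)  # Locked
```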

In most cases, you should plan to create a vault, apply your locking policy, and then upload archives to the vault where they will be governed by the policy. This is because Vault Lock does not backdate existing archives or other activities that were performed before the vault was locked. As such, there is no way to ensure that they are in compliance with the policy.

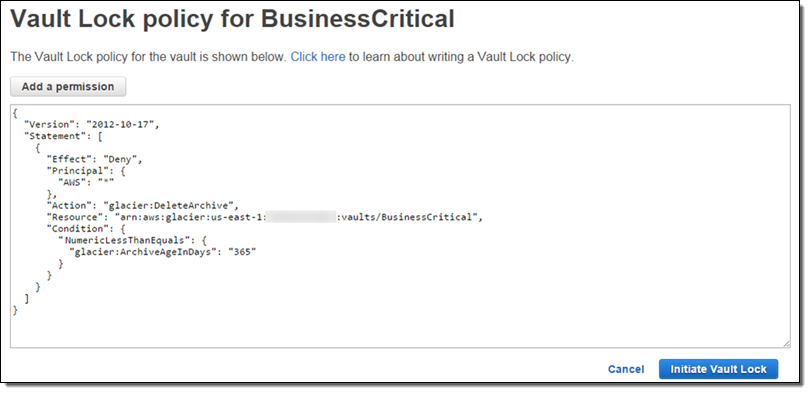

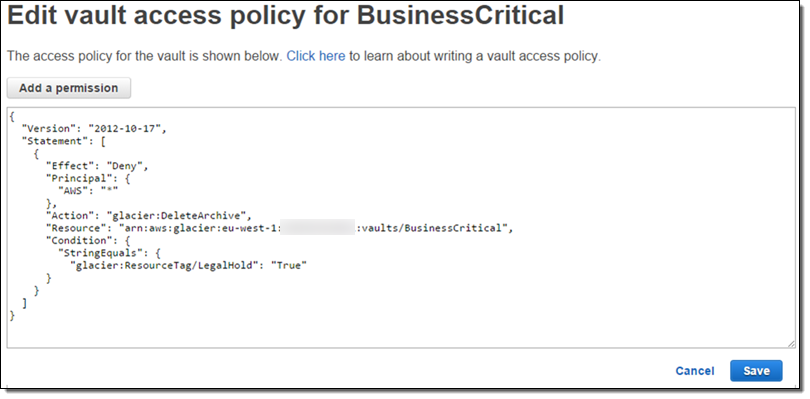

A sample policy that implements a 365-day retention rule denies everyone (AWS:*) the ability to use Glacier’s DeleteArchive function on the resource named vaults/BusinessCritical for a period of 365 days.
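The Python sketch below builds a policy along the lines described above as a dictionary. The account ID and Region in the ARN are placeholders. Expressing archive age with the glacier:ArchiveAgeInDays condition key and NumericLessThan is my understanding of how Glacier policies work. Confirm it against the Vault Lock documentation before locking anything, because a locked policy cannot be changed.

```python
import json

# Placeholder ARN: Region and account ID are hypothetical.
VAULT_ARN = "arn:aws:glacier:us-east-1:123456789012:vaults/BusinessCritical"


def retention_lock_policy(vault_arn: str, retention_days: int) -> dict:
    """Deny DeleteArchive to every principal until an archive is retention_days old."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "deny-delete-during-retention",
                "Effect": "Deny",
                "Principal": {"AWS": "*"},
                "Action": "glacier:DeleteArchive",
                "Resource": vault_arn,
                "Condition": {
                    "NumericLessThan": {"glacier:ArchiveAgeInDays": str(retention_days)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # The serialized JSON is the policy text you would pass to InitiateVaultLock.
    print(json.dumps(retention_lock_policy(VAULT_ARN, 365), indent=2))
```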

More Control

After you apply and finalize your locking policy, you can continue to use the existing vault access policy as usual. However, the vault access policy cannot reduce the effect of the retention controls in the locking policy.

In certain situations you may be faced with the need to place a legal hold on your compliance archives for an indefinite period of time, generally until an investigation of some sort is concluded. You can do this by creating a vault access policy that denies the use of Glacier’s Delete functions if the vault is tagged in a particular way. For example, such a policy can deny deletes whenever the LegalHold tag is present and set to true.

Once the investigation is over, you can remove the hold by changing the LegalHold tag to false.
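The Python sketch below shows a legal-hold vault access policy. It assumes the glacier:ResourceTag/LegalHold condition key and a placeholder vault ARN. It also includes a hypothetical local helper that mirrors the intended effect. That helper is only an illustration, not real IAM policy evaluation.

```python
import json

VAULT_ARN = "arn:aws:glacier:us-east-1:123456789012:vaults/BusinessCritical"  # placeholder

legal_hold_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "deny-deletes-under-legal-hold",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "glacier:DeleteArchive",
            "Resource": VAULT_ARN,
            # Applies only while the vault carries the tag LegalHold=true
            "Condition": {"StringEquals": {"glacier:ResourceTag/LegalHold": "true"}},
        }
    ],
}


def delete_blocked_by_hold(vault_tags: dict) -> bool:
    """Hypothetical local mirror of the condition above (not real IAM evaluation)."""
    return vault_tags.get("LegalHold") == "true"


if __name__ == "__main__":
    print(json.dumps(legal_hold_policy, indent=2))
    print(delete_blocked_by_hold({"LegalHold": "true"}))   # True
    print(delete_blocked_by_hold({"LegalHold": "false"}))  # False
```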

From Our Customers

Records retention in the Financial Services industry is governed by strict regulatory requirements. In advance of today’s launch, several AWS Financial Services customers were given a sneak peek at Vault Lock to review the new features. Here’s what they had to say:

Matt Claus (Vice President and CTO of eSpeed at Nasdaq):

Rob Krugman (Head of Digital Strategy, Broadridge Financial Solutions):

Lock up Today

This new feature is available now and you can start using it today. To learn more about this feature, read about Getting Started Amazon Glacier Vault Lock.

— Jeff;

New – Tag Your Amazon Glacier Vaults

Amazon Glacier is a secure, durable, and extremely low-cost storage service for data archiving and online backup (see my post, Amazon Glacier: Archival Storage for One Penny Per GB Per Month for an introduction).

Since we introduced Glacier in the summer of 2012, we have made it even more useful by adding lifecycle management, data retrieval policies & audit logging, range retrieval, and vault access policies.

Tag Your Vaults

If you are already a Glacier user (or if you have read my intro), you know that you create archives and store them in Glacier vaults.

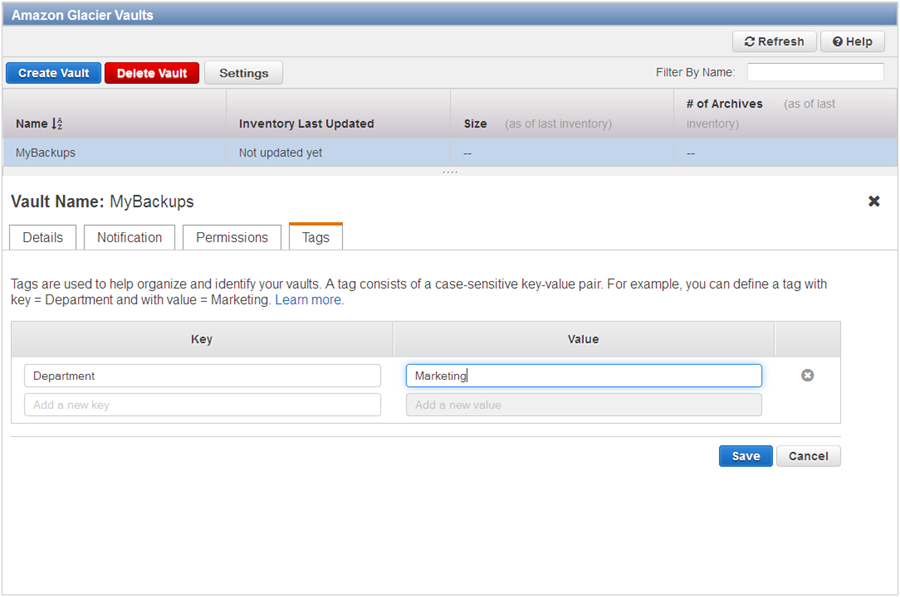

Today we are making Glacier even more useful by giving you the ability to tag your vaults. You can use these tags for cost allocation purposes (by department, group, or any other desired categorization) or for other forms of tracking.

Here’s how you tag a vault with a key named “Department”:

After you have tagged your vaults, you can use the AWS Cost Allocation Reports to view a breakdown of costs and usage by tag.
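Tags can also be applied programmatically. The Python sketch below builds the arguments for the add_tags_to_vault call on boto3's Glacier client. The vault name and tag value are hypothetical, and the empty-mapping check is this helper's own choice. The actual call appears only in a comment, so the snippet runs without AWS credentials.

```python
def tag_vault_kwargs(vault_name: str, tags: dict) -> dict:
    """Arguments for boto3's Glacier client add_tags_to_vault call.

    accountId "-" tells Glacier to use the account that owns the signing
    credentials. This helper rejects an empty tag mapping.
    """
    if not tags:
        raise ValueError("at least one tag is required")
    return {"accountId": "-", "vaultName": vault_name, "Tags": dict(tags)}


if __name__ == "__main__":
    kwargs = tag_vault_kwargs("Backup-2015", {"Department": "Finance"})  # hypothetical names
    # import boto3; boto3.client("glacier").add_tags_to_vault(**kwargs)
    print(kwargs)
```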

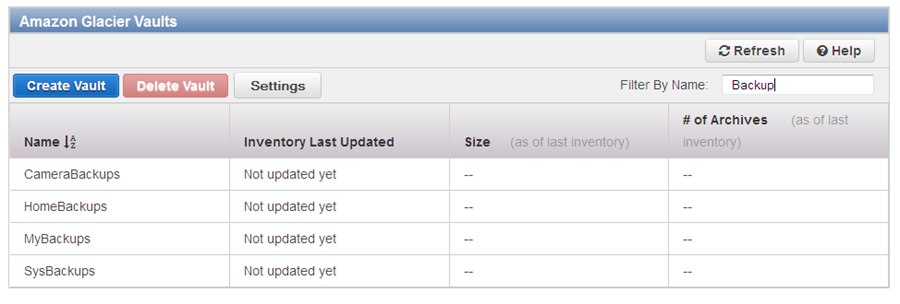

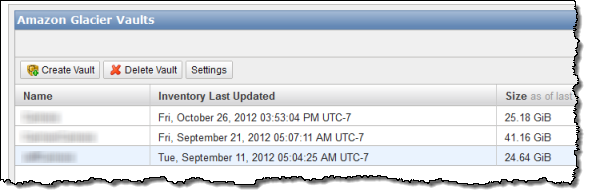

As part of today’s launch, we updated the design of the Glacier console. We also made some speed improvements and added a filtering mechanism to make it easier for you to locate a particular vault. For example, here are all of my “Backup” vaults:

This new feature is available now and you can start using it today! To learn more, read about Tagging Your Glacier Vaults.

— Jeff;

New – Glacier Vault Access Policies

Amazon Glacier provides secure and durable data storage at extremely low cost (as little as $0.01 per gigabyte per month). Each item stored in Glacier is known as an archive, and can be as large as 40 terabytes. Archives are stored in vaults, each of which can store as many archives as desired.

Today we are giving you a new way to manage access to individual vaults within your AWS account. You can now define a vault access policy and use the policy to grant access to individual users, business groups, and to external business partners. Using a single access policy to control access to a vault can be simpler than using individual user and group IAM policies in many cases. For instance, you can easily write a vault access policy that denies all delete requests on your vault to protect critical data from accidental deletion. Using the vault access policy in this scenario is simpler than configuring multiple IAM policies for users and groups.

You can set up vault access policies from the AWS Management Console, AWS Command Line Interface (CLI), AWS Tools for Windows PowerShell, or by making calls to the Glacier API. You can create one access policy for each vault; it can allow or deny access to individual API functions made by particular users or groups. It can also enable cross-account access, allowing you to share a vault with other AWS accounts.
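The Python sketch below combines both ideas from this paragraph in one vault access policy. It grants another AWS account the job-related actions needed to retrieve archives, and it denies deletes to everyone. The account IDs and vault ARN are placeholders. The allowed action list is my assumption of a reasonable read-only set.

```python
import json

VAULT_ARN = "arn:aws:glacier:us-east-1:123456789012:vaults/examplevault"  # placeholder
PARTNER_ACCOUNT = "111122223333"                                         # placeholder


def vault_access_policy(vault_arn: str, partner_account: str) -> dict:
    """Allow a partner account to retrieve archives; deny deletes to everyone."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "allow-partner-retrieval",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{partner_account}:root"},
                "Action": [
                    "glacier:InitiateJob",
                    "glacier:DescribeJob",
                    "glacier:ListJobs",
                    "glacier:GetJobOutput",
                ],
                "Resource": vault_arn,
            },
            {
                "Sid": "deny-all-deletes",
                "Effect": "Deny",
                "Principal": "*",
                "Action": ["glacier:DeleteArchive", "glacier:DeleteVault"],
                "Resource": vault_arn,
            },
        ],
    }


if __name__ == "__main__":
    document = json.dumps(vault_access_policy(VAULT_ARN, PARTNER_ACCOUNT))
    # boto3 (not called here):
    # glacier.set_vault_access_policy(vaultName="examplevault", policy={"Policy": document})
    print(document)
```

In AWS policy evaluation, an explicit Deny overrides any Allow. The delete protection therefore holds even for principals who are otherwise granted broad Glacier permissions.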

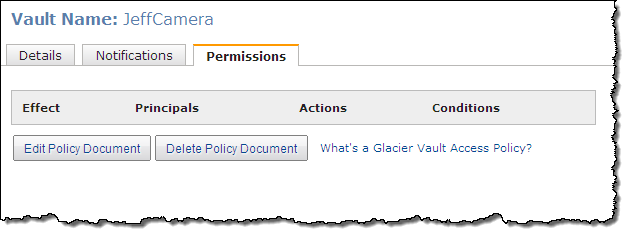

From the AWS Management Console

Here’s how you can set up a policy using the console. Start by opening the console and selecting the desired vault. You will see the new Permissions tab at the bottom:

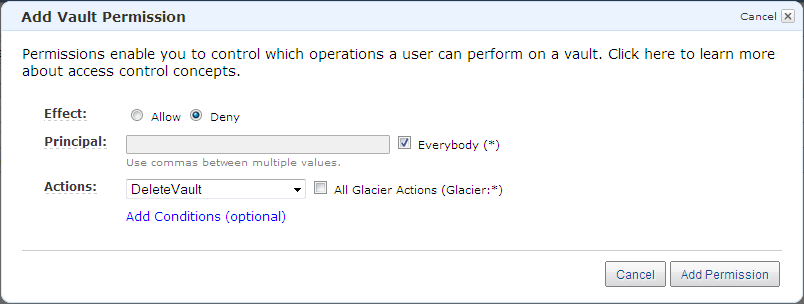

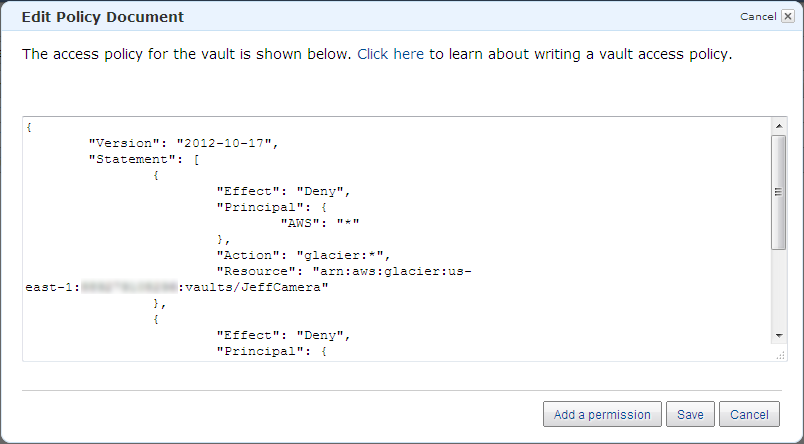

Click on Edit Policy Document and Add Permission. Set up a policy that denies all delete requests like this:

Click on Add Permission, Save the policy, and close the window:

Available Now

This feature is available now and you can start using it today! To learn more, read about Vault Access Policies in the Glacier Developer Guide.

— Jeff;

AWS GovCloud (US) Update – Glacier, VM Import, CloudTrail, and More

I am pleased to be able to announce a set of updates and additions to AWS GovCloud (US). We are making a number of new services available including Amazon Glacier, AWS CloudTrail, and VM Import. We are also enhancing the AWS Management Console with support for Auto Scaling and the Service Limits Report. As you may know, GovCloud (US) is an isolated AWS Region designed to allow US Government agencies and customers to move sensitive workloads into the cloud. It adheres to the U.S. International Traffic in Arms Regulations (ITAR) as well as the Federal Risk and Authorization Management Program (FedRAMP℠). AWS GovCloud (US) has received an Agency Authorization to Operate (ATO) from the US Department of Health and Human Services (HHS), utilizing a FedRAMP-accredited Third Party Assessment Organization (3PAO), for the following services: EC2, S3, EBS, VPC, and IAM.

AWS customers host a wide variety of web and enterprise applications in GovCloud (US). They also run HPC workloads and count on the cloud for storage and disaster recovery.

Let’s take a look at the new features!

Amazon Glacier

Amazon Glacier is a secure and durable storage service designed for data archiving and online backup. With prices that start at $0.013 per gigabyte per month in this Region, you can store any amount of data and retrieve it within hours. Glacier is ideal for digital media archives, financial and health care records, and long-term database backups. It is also a perfect place to store data that must be retained for regulatory compliance. You can store data directly in a Glacier vault or you can make use of lifecycle rules to move data from Amazon Simple Storage Service (S3) to Glacier.

AWS CloudTrail

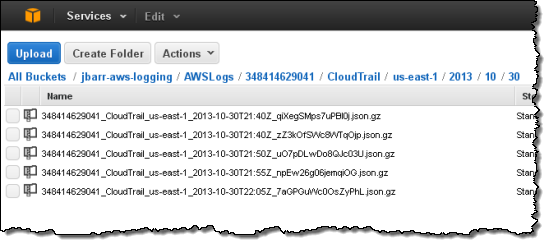

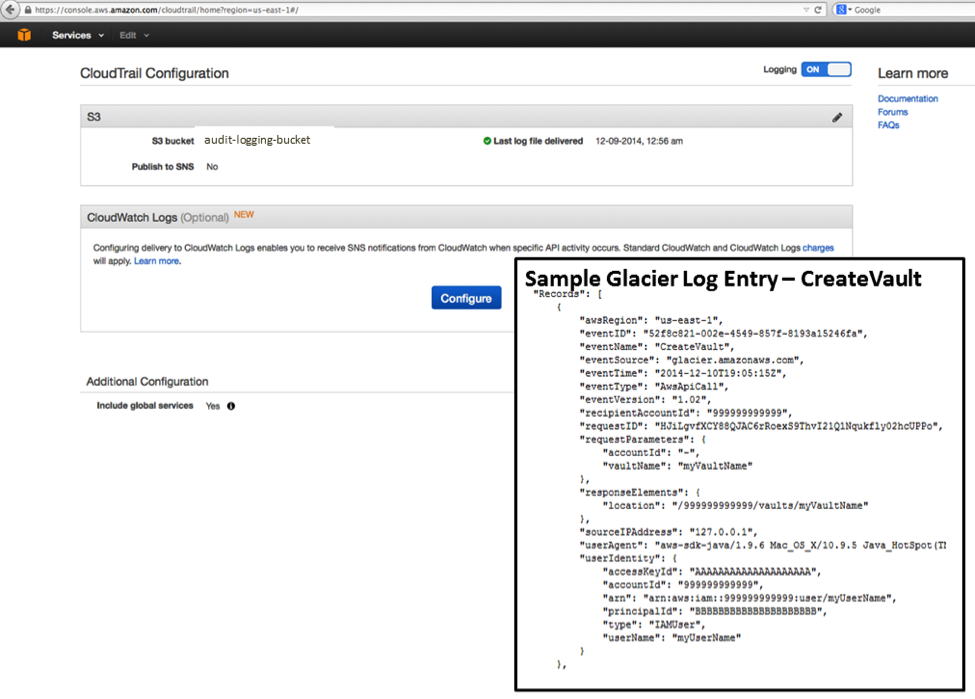

AWS CloudTrail records calls made to the AWS APIs and publishes the resulting log files to S3. The log files can be used as a compliance aid, allowing you to demonstrate that AWS resources have been managed according to rules and regulatory standards (see my blog post, AWS CloudTrail – Capture AWS API Activity, for more information). You can also use the log files for operational troubleshooting and to identify activities on AWS resources that failed due to inadequate permissions. As you can see from the blog post, you simply enable CloudTrail from the Console and point it at the S3 bucket of your choice. Events will be delivered to the bucket and stored in encrypted form, typically within 15 minutes after they take place. Within the bucket, events are organized by AWS Account Id, Region, Service Name, Date, and Time:
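The object keys that CloudTrail writes follow a predictable layout. The Python sketch below builds one from my understanding of the documented naming scheme (AWSLogs/account/CloudTrail/region/YYYY/MM/DD/...). The account ID and unique suffix are placeholders, the timestamp is assumed to be UTC, and the optional bucket prefix is omitted.

```python
from datetime import datetime, timezone


def cloudtrail_log_key(account_id: str, region: str, delivered: datetime, unique: str) -> str:
    """Object key for one CloudTrail log file (no custom bucket prefix; UTC assumed)."""
    day_path = delivered.strftime("%Y/%m/%d")
    stamp = delivered.strftime("%Y%m%dT%H%MZ")
    file_name = f"{account_id}_CloudTrail_{region}_{stamp}_{unique}.json.gz"
    return f"AWSLogs/{account_id}/CloudTrail/{region}/{day_path}/{file_name}"


if __name__ == "__main__":
    when = datetime(2014, 12, 1, 13, 45, tzinfo=timezone.utc)
    print(cloudtrail_log_key("123456789012", "us-gov-west-1", when, "EXAMPLEsuffix"))
```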

Our white paper, Security at Scale: Logging in AWS, will help you to understand how CloudTrail works and how to put it to use in your organization.

VM Import

VM Import allows you to import virtual machine images from your existing environment for use on Amazon Elastic Compute Cloud (EC2). This allows you to build on your existing investment in images that meet your IT security, configuration management, and compliance requirements.

You can import VMware ESX and VMware Workstation VMDK images, Citrix Xen VHD images, and Microsoft Hyper-V VHD images for Windows Server 2003, Windows Server 2003 R2, Windows Server 2008, Windows Server 2008 R2, Windows Server 2012, Windows Server 2012 R2, CentOS 5.1-6.5, Ubuntu 12.04, 12.10, 13.04, 13.10, and Debian 6.0.0-6.0.8, 7.0.0-7.2.0.

Console Updates

The AWS Management Console in the GovCloud Region now supports Auto Scaling and the Service Limits Report.

Auto Scaling allows you to build systems that respond to changes in demand by scaling capacity up or down as needed.

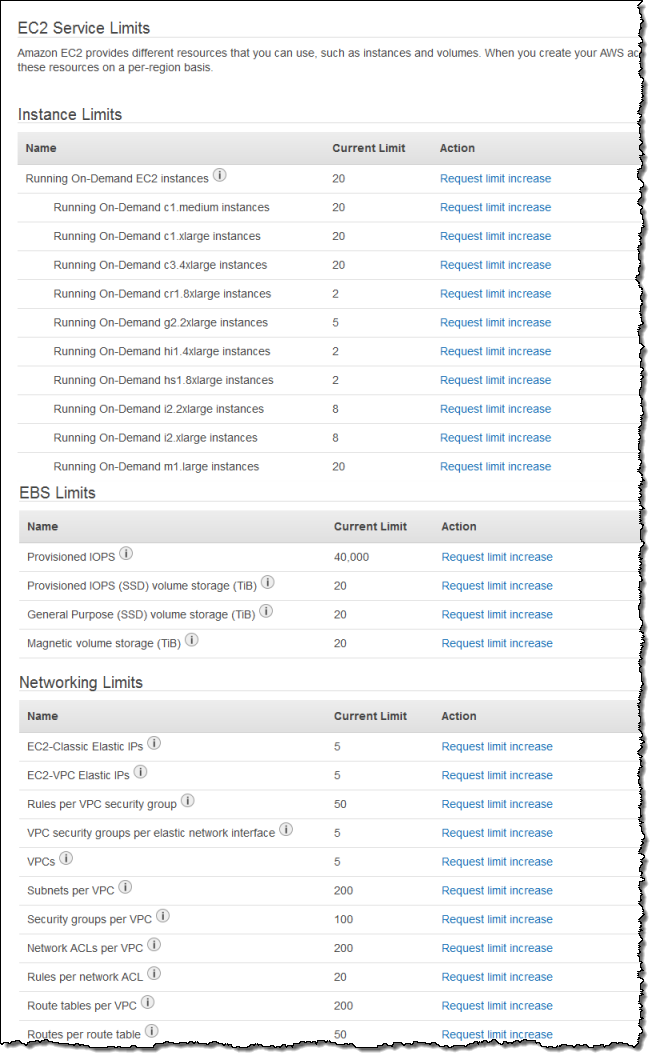

The Service Limits Report makes it easy for you to view and manage the limits associated with your AWS account. It includes links that let you make requests for increases in a particular limit with a couple of clicks:

All of these new features are operational now and are available to GovCloud users today!

— Jeff;

Data Retrieval Policies and Audit Logging for Amazon Glacier

Amazon Glacier is a secure and durable storage service for data archiving and backup. You can store infrequently accessed data in Glacier for as little as $0.01 per gigabyte per month. When you need to retrieve your data, Glacier will make it available for download within 3 to 5 hours.

Today we are launching two new features for Glacier. First, we are making it easier for you to manage data retrieval costs by introducing the data retrieval policies feature. Second, we are happy to announce that Glacier now supports audit logging with AWS CloudTrail. This pair of features should make Glacier even more attractive to customers who are leveraging Glacier as part of their archival solutions, where managing a predictable budget and the ability to create and examine audit trails are both very important.

Data Retrieval Policies

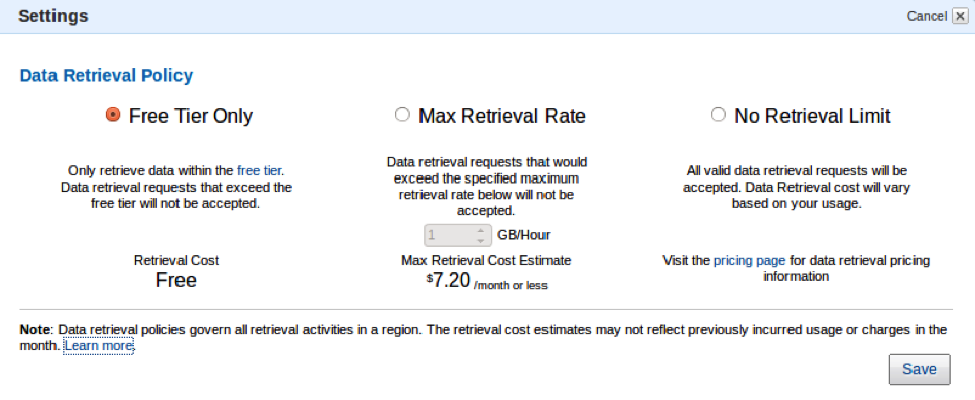

Glacier’s new data retrieval policies will help you manage your data retrieval costs with just a few clicks in the AWS Management Console. As you may know, Glacier’s free retrieval tier allows you to retrieve up to 5% of your monthly storage (pro-rated daily). This is best for smooth, incremental retrievals. With today’s launch you now have three options:

- Free Tier Only – You can retrieve up to 5% of your stored data per month. Retrieval requests above the daily free tier allowance will not be accepted. You will not incur data retrieval costs while this option is in effect. This is the default value for all newly created AWS accounts.

- Max Retrieval Rate – You can cap the retrieval rate by specifying a gigabyte per hour limit in the AWS Management Console. With this setting, retrieval requests that would exceed the specified rate will not be accepted to ensure a data retrieval cost ceiling.

- No Retrieval Limit – You can choose to not set any data retrieval limits in which case all valid retrieval requests will be accepted. With this setting, your data retrieval cost will vary based on your usage. This is the default value for existing Amazon Glacier customers.

The options are chosen on a per-account, per-region basis and apply to all Glacier retrieval activities within the region. This is because data retrieval costs vary by region and the free tier is also region-specific. Also note that the retrieval policies only govern retrieval requests issued directly against the Glacier service (on Glacier vaults) and do not govern Amazon S3 restore requests on data archived in the Glacier storage class via Amazon S3 lifecycle management.

Here is how you can set up your data retrieval policies in the AWS Management Console:

If you have chosen the Free Tier Only or Max Retrieval Rate policies, retrieval requests (or “retrieval jobs”, to use Glacier’s terminology) that would exceed the specified retrieval limit will not be accepted. Instead, they will return an error code with information about your retrieval policy. You can use this information to delay or spread out the retrievals. You can also choose to increase the Max Retrieval Rate to the appropriate level.
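The deliberately simplified Python model below shows what "5% of your monthly storage (pro-rated daily)" means in practice. It also shows roughly how the Free Tier Only and Max Retrieval Rate policies gate a request. The 30-day month and both acceptance checks are illustrative assumptions, because the post does not describe the service's exact accounting. All function names are hypothetical.

```python
GB_PER_TB = 1024


def daily_free_allowance_gb(stored_gb: float, days_in_month: int = 30) -> float:
    """5% of stored data per month, pro-rated over the days of the month."""
    return stored_gb * 0.05 / days_in_month


def accepted_free_tier_only(already_retrieved_today_gb: float, request_gb: float,
                            stored_gb: float) -> bool:
    """Simplified check: accept only if today's total stays within the free allowance."""
    return already_retrieved_today_gb + request_gb <= daily_free_allowance_gb(stored_gb)


def accepted_max_rate(request_rate_gb_per_hour: float, cap_gb_per_hour: float) -> bool:
    """Simplified check: accept only if the retrieval rate does not exceed the cap."""
    return request_rate_gb_per_hour <= cap_gb_per_hour


if __name__ == "__main__":
    stored = 75 * GB_PER_TB  # 75 TB in Glacier
    print(round(daily_free_allowance_gb(stored), 1))  # about 128 GB per day
```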

We believe that this new feature will give you additional control over your data retrieval costs, and that it will make Glacier an even better fit for your archival storage needs. You may want to watch this AWS re:Invent video to learn more:

Audit Logging With CloudTrail

Glacier now supports audit logging with AWS CloudTrail. Once you have enabled CloudTrail for your account in a particular region, calls made to the Glacier APIs will be logged to Amazon Simple Storage Service (S3) and accessible to you from the AWS Management Console and third-party tools. The information provided to you in the log files will give you insight into the use of Glacier within your organization and should also help you to improve your organization’s compliance and governance profile.

Available Now

Both of these features are available now and you can start using them today in any region which supports Glacier and CloudTrail, as appropriate.

— Jeff;

Amazon S3 Lifecycle Management for Versioned Objects

Today I would like to tell you about a powerful new AWS feature that bridges a pair of existing AWS services and makes another pair of existing features far more useful! Let’s start with a quick review.

S3 & Versioned Objects

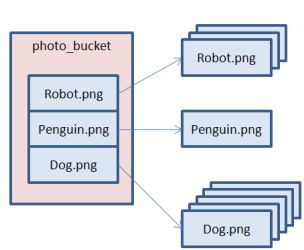

I’m sure that you already know about Amazon S3. First launched in 2006, S3 now processes over a million requests per second and stores trillions of documents, images, backups, and other data, all with high availability and eleven 9’s (i.e. 99.999999999%) durability. Since the initial launch, we have added many features and locations, and have also reduced the price (conveniently measured in pennies per Gigabyte per month) of storage repeatedly. One notable and popular S3 feature is object versioning. After you enable versioning for an S3 bucket, successive uploads or PUTs of a particular object will create distinct, named, individually addressable versions of the object in order to provide you with protection against overwrites and deletes. You can preserve, retrieve, and restore every version of every object in an S3 bucket that has versioning enabled.

You can retrieve previous versions of the object in order to recover from a human or programmatic error.

Glacier & Lifecycle Rules

You have probably heard about Amazon Glacier as well. Glacier shares eleven 9’s of data durability with S3, but offers a lower price per Gigabyte / month in exchange for a retrieval time that is typically between three and five hours. Glacier is ideal for long-term storage of important data that you don’t need to access within seconds or minutes.

S3’s Lifecycle Management integrates S3 and Glacier and makes the details visible via the Storage Class of each object. The data for objects with a Storage Class of Standard or RRS (Reduced Redundancy Storage) is stored in S3. If the Storage Class is Glacier, then the data is stored in Glacier. Regardless of the Storage Class, the objects are accessible through the S3 API and other S3 tools. Lifecycle Management allows you to define time-based rules that can trigger Transition (changing the Storage Class to Glacier) and Expiration (deletion of objects). The Expiration rules give you the ability to delete objects (or versions of objects) that are older than a particular age. You can use these rules to ensure that the objects remain available in case of an accidental or planned delete while limiting your storage costs by deleting them after they are older than your preferred rollback window.
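Conceptually, a time-based rule compares an object’s age against the rule’s thresholds. The following Python function is a simplified sketch of that comparison for an unversioned object, assuming a rule with an optional transition-to-Glacier age and an optional expiration age, both in days. The names `LifecycleRule` and `evaluate` are hypothetical, the string labels only mirror storage class names such as `STANDARD` and `GLACIER`, and the sketch ignores how the real service schedules its work.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LifecycleRule:
    """Hypothetical time-based rule; both thresholds are ages in days since creation."""
    transition_days: Optional[int] = None  # change Storage Class to Glacier at this age
    expiration_days: Optional[int] = None  # delete the object at this age


def evaluate(rule: LifecycleRule, age_days: int, storage_class: str = "STANDARD") -> str:
    """Return 'EXPIRED', 'GLACIER', or the unchanged storage class for an object of this age."""
    if rule.expiration_days is not None and age_days >= rule.expiration_days:
        return "EXPIRED"
    if rule.transition_days is not None and age_days >= rule.transition_days:
        return "GLACIER"
    return storage_class


if __name__ == "__main__":
    rule = LifecycleRule(transition_days=30, expiration_days=365)
    for age in (10, 30, 400):
        print(age, evaluate(rule, age))  # STANDARD, then GLACIER, then EXPIRED
```

Expiration is checked first so that an object old enough for both actions is reported as deleted rather than archived.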

S3 & Glacier & Versioned Objects & Lifecycle Rules

With all of that out of the way, I am finally ready to share today’s news! You can now create and apply Lifecycle rules to buckets that use versioned objects. This seemingly simple change makes S3, Glacier, and versioned objects a lot more useful. For example, you can arrange to keep the current version of an object in S3, and to transition older versions to Glacier. You can get to the current version (the one that you are most likely to need) immediately, with older versions accessible within three to five hours. Depending on your use case, you might want to transition all of the versions, including the current one, to Glacier. You might also want to expire each version a few days after it was created (using a rule for the current version) or overwritten/expired (using a rule based on the successor time for previous versions). In other words, this new feature combines the flexibility of S3 versioned objects with the extremely low cost of storage in Glacier, helping you to reduce your overall storage costs.
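The two kinds of rule differ in which clock they start from: rules for the current version count from the version’s creation time, while rules for previous versions count from the moment the version was superseded. Here is a minimal Python sketch of that calculation. The function `action_date` and its parameters are hypothetical, and it only computes when an action would fall due.

```python
from datetime import datetime, timedelta
from typing import Optional


def action_date(created: datetime, superseded: Optional[datetime],
                current_days: int, noncurrent_days: int) -> datetime:
    """When a lifecycle action falls due for one version of an object.

    created:         when this version was written.
    superseded:      when a newer version replaced it, or None if it is still current.
    current_days:    threshold of the (hypothetical) rule for the current version.
    noncurrent_days: threshold of the (hypothetical) rule for previous versions.
    """
    if superseded is None:
        # Current version: the clock starts at the version's own creation time.
        return created + timedelta(days=current_days)
    # Previous version: the clock starts when it stopped being current.
    return superseded + timedelta(days=noncurrent_days)


if __name__ == "__main__":
    written = datetime(2014, 5, 1)
    print(action_date(written, None, current_days=7, noncurrent_days=2))  # 2014-05-08 00:00:00
    print(action_date(written, datetime(2014, 5, 20),
                      current_days=7, noncurrent_days=2))                # 2014-05-22 00:00:00
```

Counting previous versions from their successor’s arrival means a version that stays current for a long time still gets the full rollback window after it is replaced.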

You can create and apply Lifecycle rules to an S3 bucket to take advantage of this new feature. You can do this through the S3 API, an AWS SDK, or from within the AWS Management Console.

Lifecycle Management in the Console

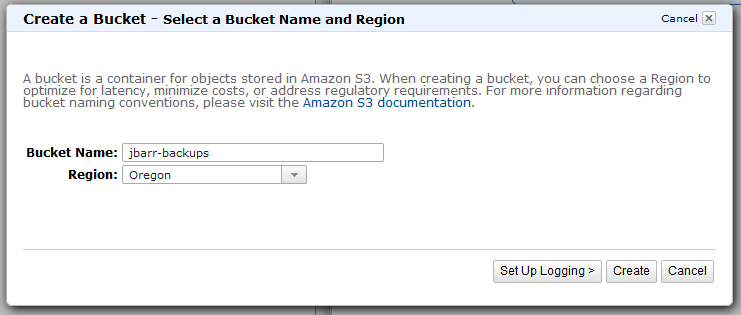

Let’s set up a simple Lifecycle rule using the AWS Management Console. I will create a fresh bucket to store some backups:

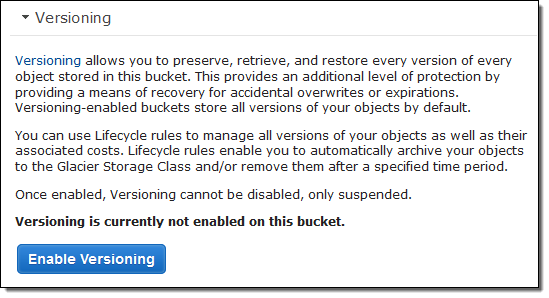

In this example, my backup app is very simple-minded and writes its output to the same file every time. I’ll enable versioning for the bucket. This will allow me to upload fresh backups without having to move or rename any files, while gaining all of the advantages of versioning, including protection against overwrites and deletions. It will also allow me to archive the previous versions of the file in Glacier. Here’s how I enable versioning:

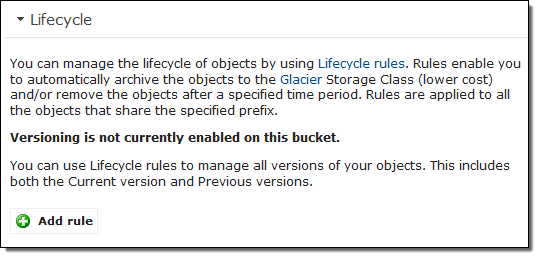
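Outside the console, the same setting can be applied with an AWS SDK. The sketch below assumes the AWS SDK for Python (boto3), whose S3 client provides `put_bucket_versioning` and `get_bucket_versioning`. The helper name `enable_versioning` and the bucket name are hypothetical, and the client is passed in so that the function can be exercised with a stub instead of live credentials.

```python
from typing import Any


def enable_versioning(s3_client: Any, bucket: str) -> str:
    """Enable versioning on `bucket` and return the status S3 then reports."""
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    # The response omits "Status" for a bucket that has never had versioning enabled.
    response = s3_client.get_bucket_versioning(Bucket=bucket)
    return response.get("Status", "Unversioned")


# Usage (assumes boto3 is installed and credentials are configured):
#   import boto3
#   enable_versioning(boto3.client("s3"), "my-backup-bucket")
```

Reading the status back after setting it is a cheap way to confirm the change took effect before relying on it.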

Now I need to set up the appropriate Transition and Expiration rules:

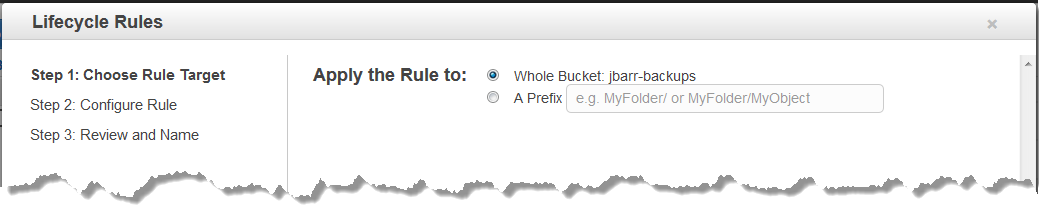

The console now includes a wizard to simplify this process! In the first step, I can choose to create a rule that addresses all of the objects in the bucket, or a subset of objects that share a common name prefix within the bucket.

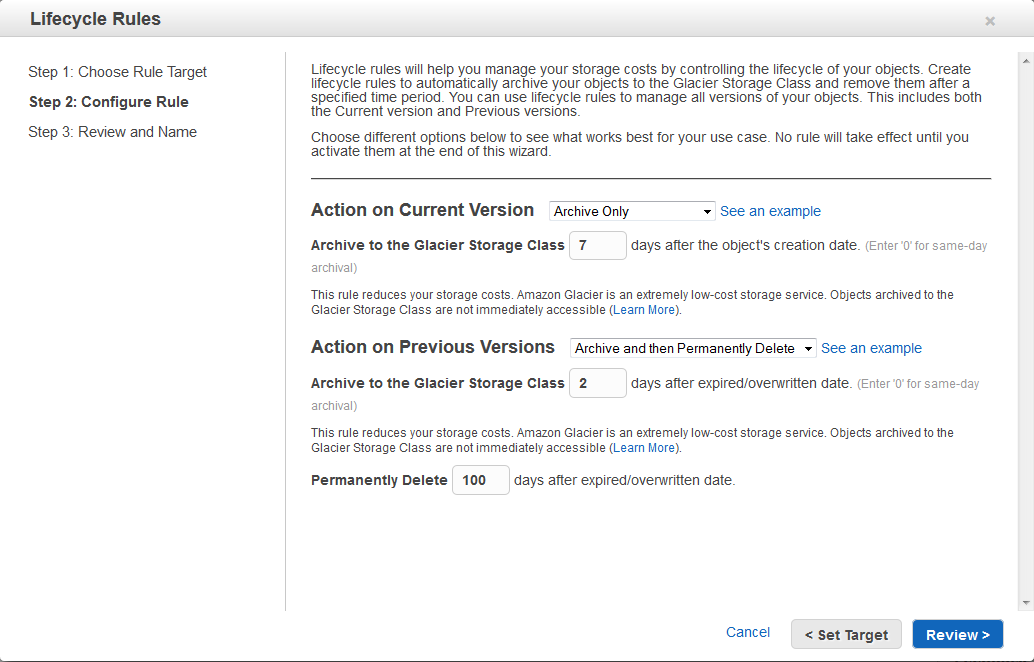

After choosing the objects that are addressed by the rule, I now specify the transitions and expirations for the current and previous versions of the object. Let’s say that I want to transition the current version of each backup to Glacier after a week, and the previous versions two days after they have been overwritten. Further, I would like to permanently delete the previous versions 100 days after they are no longer current. Here’s how I would set that up (you can also click on See an Example to get an even better understanding of the Lifecycle rules):

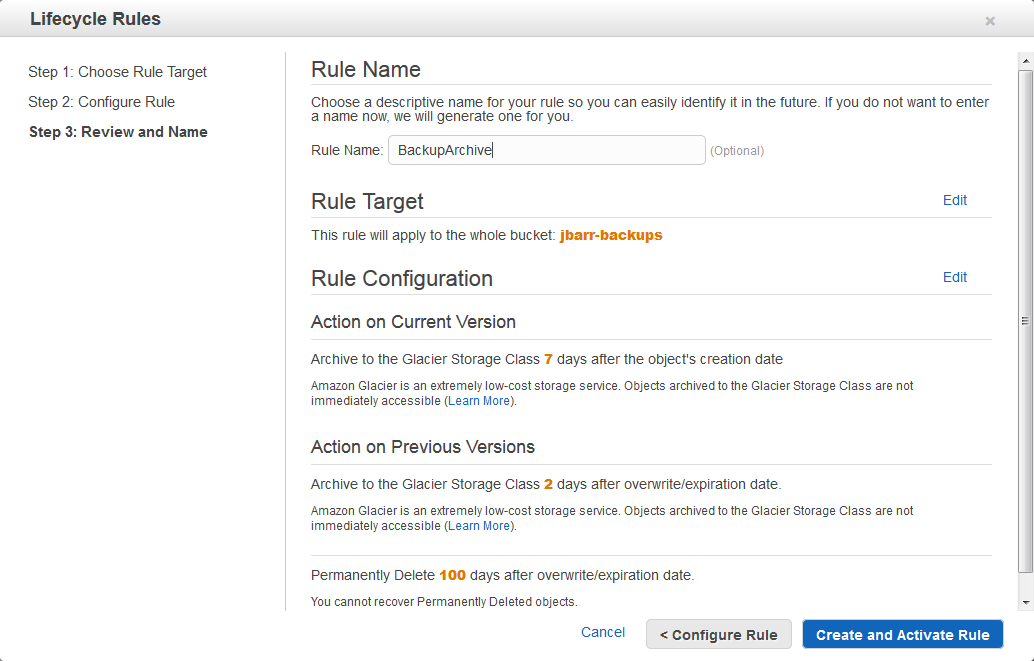

The console confirms my intent and then creates and activates the rule:

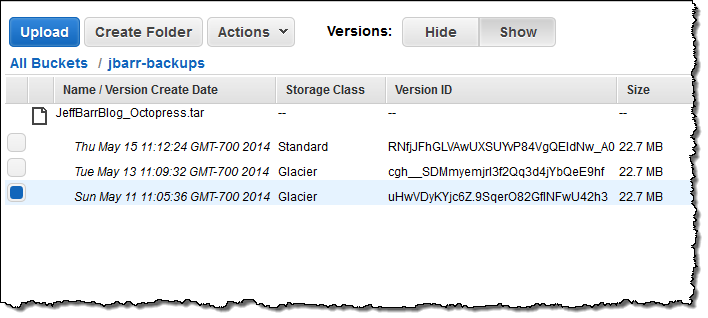
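The rule configured in the wizard can also be expressed as a lifecycle configuration and submitted through the API. This sketch assumes boto3’s `put_bucket_lifecycle_configuration` and the rule fields `Transitions`, `NoncurrentVersionTransitions`, and `NoncurrentVersionExpiration` from the S3 lifecycle configuration format. The rule ID, the empty prefix filter, and the helper names `backup_rule` and `apply_rules` are hypothetical choices.

```python
from typing import Any, Dict


def backup_rule(prefix: str = "") -> Dict[str, Any]:
    """Lifecycle rule mirroring the console example (7 days, 2 days, 100 days)."""
    return {
        "ID": "archive-backups",       # hypothetical rule name
        "Filter": {"Prefix": prefix},  # empty prefix: every object in the bucket
        "Status": "Enabled",
        # Current version: move to Glacier one week after it is created.
        "Transitions": [{"Days": 7, "StorageClass": "GLACIER"}],
        # Previous versions: move to Glacier two days after being overwritten.
        "NoncurrentVersionTransitions": [{"NoncurrentDays": 2, "StorageClass": "GLACIER"}],
        # Previous versions: delete permanently 100 days after they stop being current.
        "NoncurrentVersionExpiration": {"NoncurrentDays": 100},
    }


def apply_rules(s3_client: Any, bucket: str, *rules: Dict[str, Any]) -> None:
    """Install `rules` as the bucket's complete lifecycle configuration."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": list(rules)},
    )


# Usage (assumes boto3 and configured credentials):
#   import boto3
#   apply_rules(boto3.client("s3"), "my-backup-bucket", backup_rule())
```

Because this call replaces the bucket’s entire lifecycle configuration, any existing rules you want to keep must be included in the same request.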

Once the rules have been established, transitions and expirations will happen automatically. I can see the current state of each version of an object from the console:

Important Note: In order to see the versions of my backup file, I clicked the Show button.
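The same per-version view is available programmatically. This sketch assumes the response shape of boto3’s `list_object_versions`: a `Versions` list whose entries carry `Key`, `VersionId`, `IsLatest`, and `StorageClass`, plus a `DeleteMarkers` list. The helper `describe_versions` is hypothetical, and pagination is ignored for brevity.

```python
from typing import Any, Dict, List


def describe_versions(response: Dict[str, Any], key: str) -> List[str]:
    """One line per version (and delete marker) of `key` in a list_object_versions page."""
    lines = []
    for entry in response.get("Versions", []):
        if entry["Key"] == key:
            state = "current" if entry["IsLatest"] else "previous"
            lines.append(f"{entry['VersionId']}: {state}, {entry['StorageClass']}")
    for entry in response.get("DeleteMarkers", []):
        if entry["Key"] == key:
            state = "current" if entry["IsLatest"] else "previous"
            lines.append(f"{entry['VersionId']}: {state}, delete marker")
    return lines


# Usage (assumes boto3; only the first page of results is examined):
#   import boto3
#   page = boto3.client("s3").list_object_versions(Bucket="my-backup-bucket", Prefix="backup.tar")
#   print("\n".join(describe_versions(page, "backup.tar")))
```

Filtering on the exact key matters because a prefix such as `backup.tar` would also match other objects like `backup.tar.old`.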

Learning More

The example shown above is a good starting point, but things are somewhat complex behind the scenes and you should plan to spend some time learning more about this feature before you start using it. In fact, you may want to create a bucket just for testing and use it to try out your proposed rules.

Here are some things to think about when you design your strategy for versioning, transitions, and expirations:

- Versioning Status – This value is maintained on a per-bucket basis. Each bucket can be unversioned (the default) or versioned, and you also have the option to suspend versioning. With versioning suspended, you will stop accruing new versions of an object. Also, deleting an object when versioning is suspended creates a special Delete Marker with a null version ID, and makes this the current version.

- Actions – The current and previous versions of an object each have transition and expiration actions, each of which has behavior that depends on the versioning status of the associated bucket. Based on your use case, you can choose to just transition, just expire, or transition and then expire.

- Days and Dates – Rules can specify a date or a number of days since the creation of an object. Rules created in the console must be day-based; you must use the API to create a rule that includes a date. The lifecycle rules for previous versions take effect from the time that a current version is retained as a previous one. You can control the time that a superseded version remains in S3 before it is transitioned to Glacier or expired.

- Existing Rules – The rules that you created prior to the introduction of rules for versioning will still apply and will behave as expected. If they reference specific dates, you will need to use the API to edit them (you can still view, disable, and delete them in the console). You can set up rules for previous versions before you actually enable versioning for a bucket. The rules will become applicable only after you do so.
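Since date-based rules have to be created through the API, here is a minimal sketch of one, again assuming boto3’s lifecycle rule format, in which a `Transitions` entry takes a `Date` instead of `Days`. S3 expects such dates to fall at midnight UTC, so the hypothetical helper `date_transition_rule` checks that before building the rule; the rule ID and prefix used in the usage line are also hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def date_transition_rule(rule_id: str, prefix: str, when: datetime) -> Dict[str, Any]:
    """Build a rule that moves objects under `prefix` to Glacier on a fixed date."""
    if when.tzinfo is None:
        raise ValueError("use a timezone-aware datetime")
    when_utc = when.astimezone(timezone.utc)
    # Lifecycle dates are expected to fall exactly at midnight UTC.
    if (when_utc.hour, when_utc.minute, when_utc.second, when_utc.microsecond) != (0, 0, 0, 0):
        raise ValueError("lifecycle dates must fall at midnight UTC")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Date": when_utc, "StorageClass": "GLACIER"}],
    }


# Usage: the resulting dict goes into the "Rules" list passed to
# put_bucket_lifecycle_configuration, for example
#   date_transition_rule("archive-2015", "logs/", datetime(2015, 1, 1, tzinfo=timezone.utc))
```

Rejecting naive datetimes avoids silently interpreting a local time as UTC.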

Give it a Try

This new feature is available now and you can start using it today. Give it a spin and let me know what you think.

— Jeff;

Create a Virtual Tape Library Using the AWS Storage Gateway

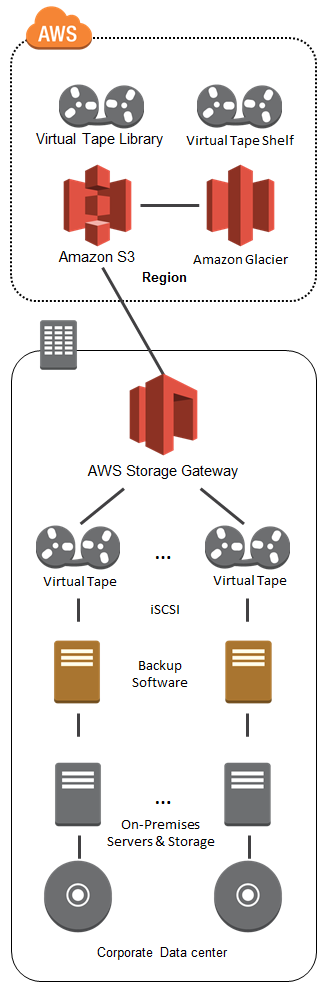

The AWS Storage Gateway connects an on-premises software appliance with cloud-based storage to integrate your on-premises IT environment with the AWS storage infrastructure.

Once installed and configured, each Gateway presents itself as one or more iSCSI storage volumes. Each volume can be configured to be Gateway-Cached (primary data stored in Amazon S3 and cached in the Gateway) or Gateway-Stored (primary data stored on the Gateway and backed up to Amazon S3 asynchronously).

Roll the Tape

Today we are making the Storage Gateway even more flexible. You can now configure a Storage Gateway as a Virtual Tape Library (VTL), with up to 10 virtual tape drives per Gateway. Each virtual tape drive responds to the SCSI command set, so your existing on-premises backup applications (either disk-to-tape or disk-to-disk-to-tape) will work without modification.

Virtual tapes in the Virtual Tape Library will be stored in Amazon S3, with 99.999999999% durability. Each Gateway can manage up to 1,500 virtual tapes or a total of 150 TB of storage in its Virtual Tape Library.
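The two per-Gateway limits apply together, so a library is full once it reaches either 1,500 tapes or 150 TB. A small Python check makes that explicit. It assumes decimal units (1 TB = 1,000 GB), and the helper `fits_in_vtl` is hypothetical.

```python
from typing import Iterable

MAX_TAPES = 1_500       # per-Gateway virtual tape limit quoted above
MAX_TOTAL_GB = 150_000  # 150 TB, assuming 1 TB = 1,000 GB


def fits_in_vtl(tape_sizes_gb: Iterable[float]) -> bool:
    """True if this set of virtual tapes stays within both Virtual Tape Library limits."""
    sizes = list(tape_sizes_gb)
    return len(sizes) <= MAX_TAPES and sum(sizes) <= MAX_TOTAL_GB


if __name__ == "__main__":
    print(fits_in_vtl([100] * 1_500))  # True: at both limits exactly
    print(fits_in_vtl([2_500] * 61))   # False: 152,500 GB exceeds 150 TB
```

With large tapes the capacity limit is reached first; with many small tapes the tape-count limit is.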

Virtual tapes in the Virtual Tape Library can be mounted to a tape drive and become accessible in a matter of seconds.

For long-term archival storage, Virtual Tape Libraries are integrated with a Virtual Tape Shelf (VTS). Virtual tapes on the Virtual Tape Shelf will be stored in Amazon Glacier, with the same durability, but at a lower price per gigabyte and with a longer retrieval time (about 24 hours). You can easily move your virtual tapes to your Virtual Tape Shelf by simply ejecting them from the Virtual Tape Library using your backup application.

The virtual tapes are stored in a secure and durable manner. Amazon S3 and Amazon Glacier both make use of multiple storage facilities, and were designed to maintain durability even if two separate storage facilities fail simultaneously. Data moving between your Gateway and the AWS cloud is encrypted using SSL; data stored in S3 and Glacier is encrypted using 256-bit AES.

Farewell to Tapes and Tape Drives

As you should be able to tell from my description above, the Storage Gateway, when configured as a Virtual Tape Library, is a complete, plug-in replacement for your existing physical tape infrastructure. You no longer have to worry about provisioning, maintaining, or upgrading tape drives or tape robots. You don’t have to initiate lengthy migration projects every couple of years, and you don’t need to mount and scan old tapes to verify the integrity of the data. You can also forget about all of the hassles of offsite storage and retrieval!

In short, all of the headaches inherent in dealing with cantankerous mechanical devices with scads of moving parts simply vanish when you switch to a virtual tape environment. What’s more, so does the capital expenditure. You pay for what you use, rather than what you own.

Looks Like Tape, Tastes Like Cloud

Here’s a diagram to help you understand the Gateway-VTL concept. Your backup applications believe that they are writing to actual magnetic tapes. In actuality, they are writing data to the Storage Gateway, where it is uploaded to the AWS cloud:

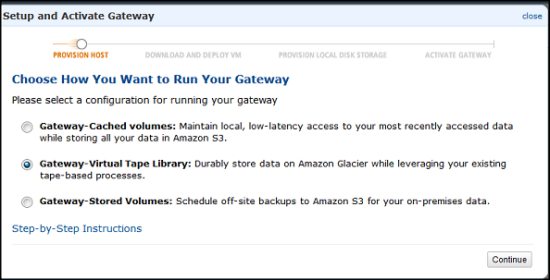

Getting Started

The Gateway takes the form of a virtual machine image that you run on-premises on a VMware or Hyper-V host. The Storage Gateway User Guide will walk you through the process of installing the image, configuring the local storage, and activating your Gateway using the AWS Management Console:

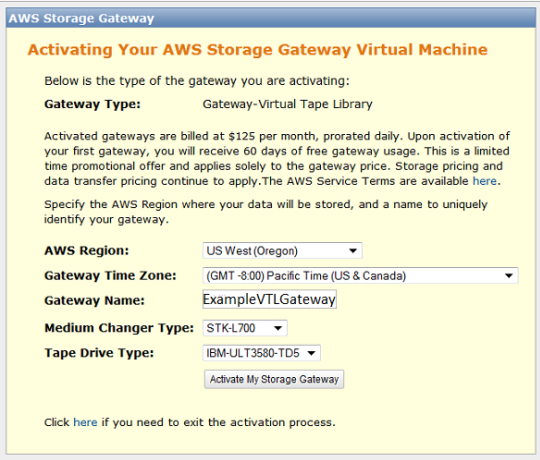

As part of the activation process, you will specify the type of medium changer and tape drive exposed by the Gateway:

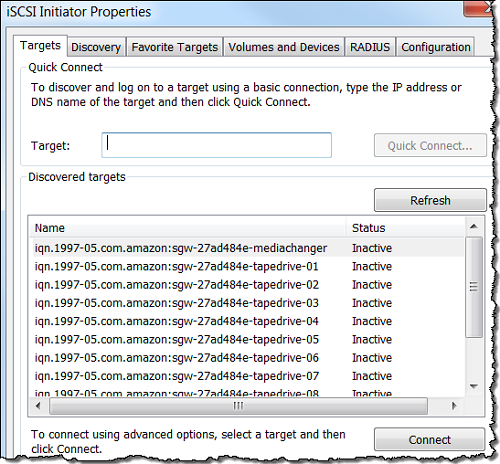

You will need to locate the Virtual Tape Drives in order to use them for backup. The details vary by operating system and backup tool. Here’s what the discovery process looks like from the Microsoft iSCSI Initiator running on the system that you use to create backups:

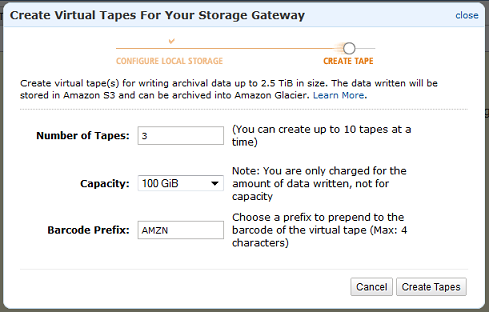

Then you create some virtual tapes:

Backing Up and Managing Tapes

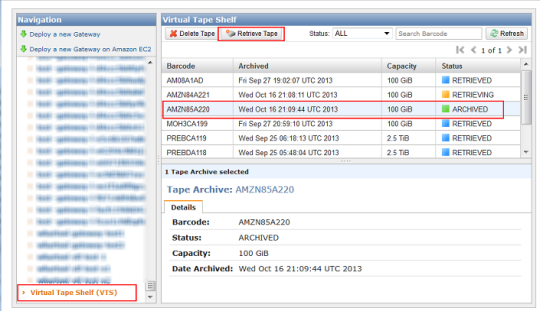

Once you locate the tape drives and tell your backup applications to use them, you can initiate your offsite backup process. You can find your Virtual Tapes in the AWS Management Console:

As you can see, the console provides you with a single, integrated view of all of your Virtual Tapes whether they are in the Virtual Tape Library and immediately accessible, or on the Virtual Tape Shelf, and accessible in about 24 hours.

Gateway in the Cloud

The Storage Gateway is also available as an Amazon EC2 AMI and you can launch it from the AWS Marketplace. There are several different use cases for this:

Perhaps you have migrated (or are about to migrate) some on-premises applications to the AWS cloud. By using a cloud-based Gateway, you can maintain your existing backup regimen and stick with tools that are familiar to you.

You can also use a cloud-based Gateway for Disaster Recovery. You can launch the Gateway and some EC2 instances, and bring your application back to life in the cloud. Take a look at our Disaster Recovery page to learn more about how to implement this scenario using AWS.

Speaking of Disaster Recovery, you can also use a cloud-based Gateway to make sure that you can successfully recover from an incident. You can make sure that your backups contain the desired data, and you can verify your approach to restoring the data and loading it into a test database.

Bottom Line

The AWS Storage Gateway is available in multiple AWS Regions and you can start using it today. Here’s what it will cost you:

- Each activated gateway costs $125 per month, with a 60-day free trial.

- There’s no charge for data transfer from your location up to AWS.

- Virtual Tapes stored in Amazon S3 cost $0.095 (less than a dime) per gigabyte per month of storage. You pay for the storage that you use, and not for any “blank tape” (so to speak).

- Virtual Tapes stored in Amazon Glacier cost $0.01 (a penny) per gigabyte per month of storage. Again, you pay for what you use.

- Retrieving data from a Virtual Tape Shelf costs $0.30 per gigabyte. If the tapes that you delete from the Virtual Tape Shelf are less than 90 days old, there is an additional, pro-rated charge of $0.03 per gigabyte.

These prices are valid in the US East (Northern Virginia) Region. Check the Storage Gateway Pricing page for costs in other Regions.
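To see how these prices combine, here is a rough monthly estimate in Python using the US East figures listed above. It is a simplified sketch: the function name `monthly_cost` is hypothetical, it ignores the free trial and any other charges, and it assumes the early-deletion charge is pro-rated linearly over the unused part of the 90-day period, which is an assumption about how "pro-rated" is applied.

```python
GATEWAY_PER_MONTH = 125.00      # $ per activated gateway per month
VTL_PER_GB_MONTH = 0.095        # $ per GB-month, Virtual Tape Library (S3)
VTS_PER_GB_MONTH = 0.01         # $ per GB-month, Virtual Tape Shelf (Glacier)
VTS_RETRIEVAL_PER_GB = 0.30     # $ per GB retrieved from the shelf
VTS_EARLY_DELETE_PER_GB = 0.03  # $ per GB for shelf tapes deleted before 90 days


def monthly_cost(gateways: int, vtl_gb: float, vts_gb: float,
                 retrieved_gb: float = 0.0,
                 deleted_gb: float = 0.0, deleted_age_days: int = 90) -> float:
    """Rough monthly bill in dollars, using the US East prices listed above."""
    cost = gateways * GATEWAY_PER_MONTH
    cost += vtl_gb * VTL_PER_GB_MONTH
    cost += vts_gb * VTS_PER_GB_MONTH
    cost += retrieved_gb * VTS_RETRIEVAL_PER_GB
    if deleted_age_days < 90:
        # Assumption: the $0.03/GB charge is scaled by the unused share of the 90 days.
        cost += deleted_gb * VTS_EARLY_DELETE_PER_GB * (90 - deleted_age_days) / 90
    return round(cost, 2)


if __name__ == "__main__":
    # One gateway, 1,000 GB in the library, 10,000 GB on the shelf: 125 + 95 + 100.
    print(monthly_cost(gateways=1, vtl_gb=1_000, vts_gb=10_000))  # 320.0
```

In this example, keeping ten times as much data on the shelf costs only slightly more than the library tier, which is the trade-off behind moving older tapes to the Virtual Tape Shelf.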

— Jeff;