Category: AWS Database Migration Service

Catching Up On AWS Announcements from Early 2017

Even though we have published 123 posts so far this year, we simply don’t have the time to cover every significant AWS launch. Also, the newer services are often richer and take more space to describe, adding to our workload. This post (and others to follow each quarter) will outline some of the launches that we did not have time to address earlier.

So, here we go:

- Migration Support for NoSQL Databases

- Comments, Tagging, and Metadata APIs for WorkDocs

- Email and SMS Integration for Pinpoint

- Usage Type Groups and Linked Account Access for AWS Budgets

- EC2 Systems Manager Support for Hierarchies, Tagging, and CloudWatch Events

These features have already launched and you may already be using them!

Migration Support for NoSQL Databases

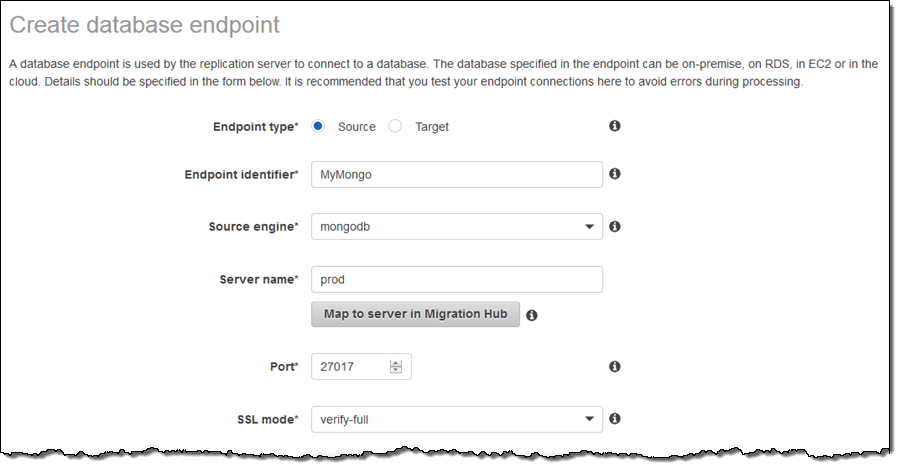

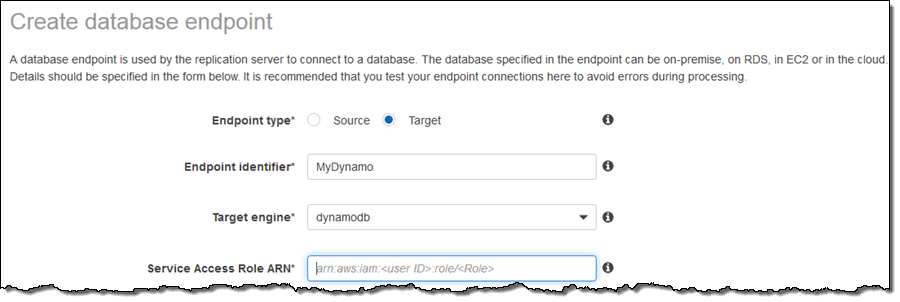

With this launch, AWS Database Migration Service can migrate relational databases, NoSQL databases, and data warehouses. The launch adds support for MongoDB databases as a migration source and Amazon DynamoDB tables as a migration target. To get started, create a replication instance and database endpoints for MongoDB and DynamoDB:

Read MongoDB as a Migration Source and DynamoDB as a Migration Target for more information.
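If you would rather script the setup than click through the console, the two endpoint definitions can be sketched as plain request parameters. This is a minimal sketch, assuming the parameter names accepted by the boto3 `create_endpoint` call for DMS; the host name, database name, credentials, endpoint identifiers, and role ARN are all placeholders made up for illustration.

```python
def mongodb_source_endpoint(server, database, username, password, port=27017):
    """Build keyword arguments describing a MongoDB source endpoint."""
    return {
        "EndpointIdentifier": "mongodb-source",  # hypothetical identifier
        "EndpointType": "source",
        "EngineName": "mongodb",
        "MongoDbSettings": {
            "ServerName": server,
            "Port": port,
            "DatabaseName": database,
            "Username": username,
            "Password": password,
        },
    }


def dynamodb_target_endpoint(role_arn):
    """Build keyword arguments describing a DynamoDB target endpoint."""
    return {
        "EndpointIdentifier": "dynamodb-target",  # hypothetical identifier
        "EndpointType": "target",
        "EngineName": "dynamodb",
        # DMS writes to DynamoDB through an IAM role that you supply.
        "DynamoDbSettings": {"ServiceAccessRoleArn": role_arn},
    }


# Placeholder values only; none of these come from a real environment.
source = mongodb_source_endpoint(
    "mongo.example.internal", "inventory", "dms_user", "not-a-real-password"
)
target = dynamodb_target_endpoint(
    "arn:aws:iam::123456789012:role/dms-dynamodb-access"
)
```

With boto3 installed and credentials configured, each dictionary could then be passed along as `boto3.client("dms").create_endpoint(**source)`.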

Comments, Tagging, and Metadata APIs for WorkDocs

This addition to the Amazon WorkDocs Administrative SDK provides APIs for creating and accessing metadata, tags, and comments:

Metadata – CreateCustomMetadata, DeleteCustomMetadata.

Tags – CreateLabels, DeleteLabels.

Comments – CreateComment, DeleteComment, DescribeComments.

The SDK is available for Java, Python, Go, JavaScript, .NET, PHP, and Ruby. It handles signing of API requests using Signature Version 4 (SigV4), and is integrated with IAM (roles and permissions), SNS (real-time notifications), and CloudTrail (monitoring).

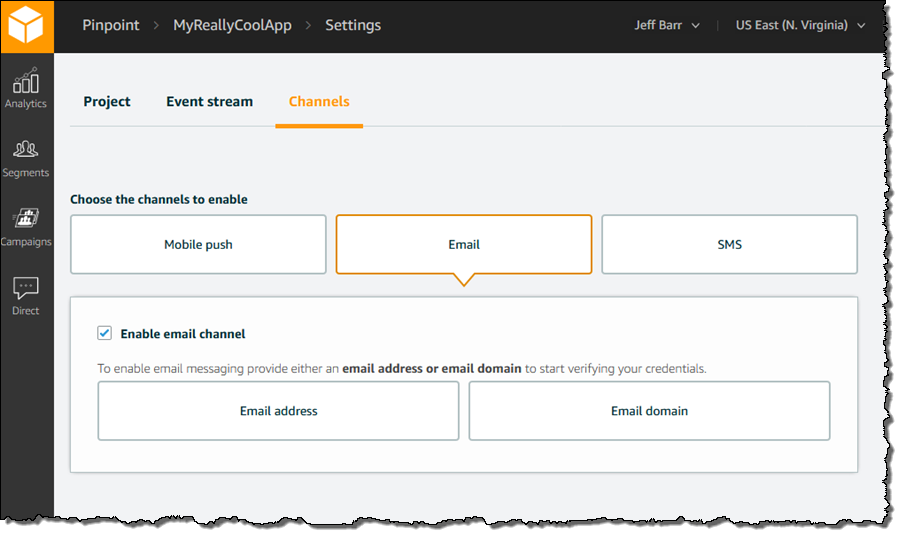

Email and SMS Integration for Pinpoint

In addition to the existing Mobile Push Notifications, Amazon Pinpoint can now drive user engagement through email and SMS notifications. In order to use this feature you must first enable the desired channel or channels:

To learn more, read about Amazon Pinpoint Channels.

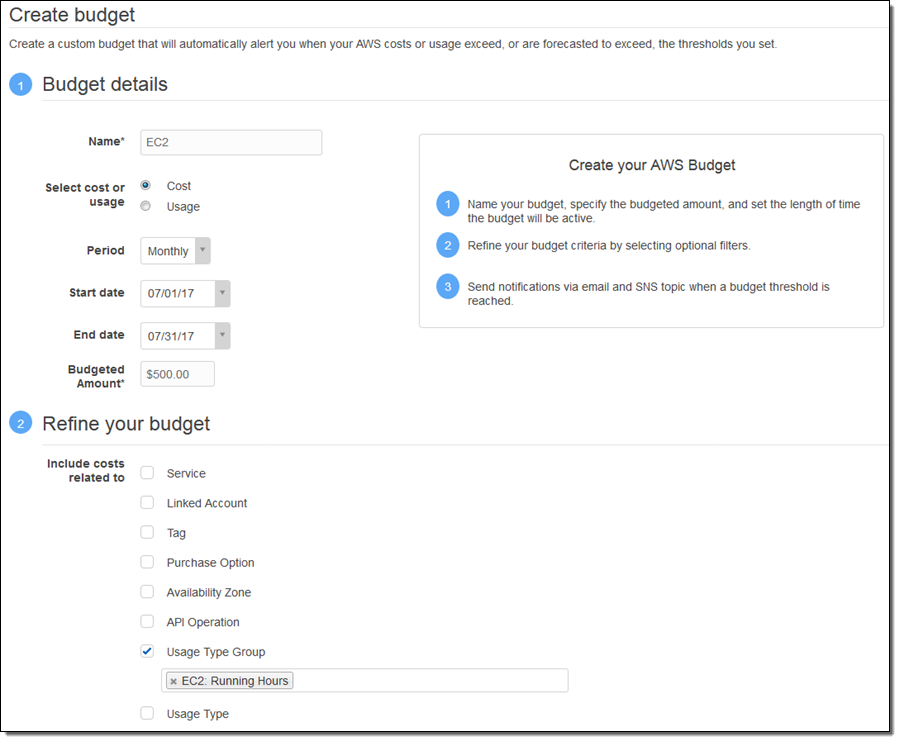

Usage Type Groups and Linked Account Access for AWS Budgets

AWS Budgets lets you set cost and usage budgets and receive notifications when they are exceeded (read Managing Your Costs with Budgets and AWS Budgets Update – Track Cloud Costs and Usage).

In order to make AWS Budgets even more useful, we added support for linked accounts and a new usage type filtering option. Organizations that make use of Consolidated Billing to consolidate payment for multiple AWS accounts will benefit from the support for linked accounts. The member accounts can now access their own budgets, while the payer account remains responsible for payment.

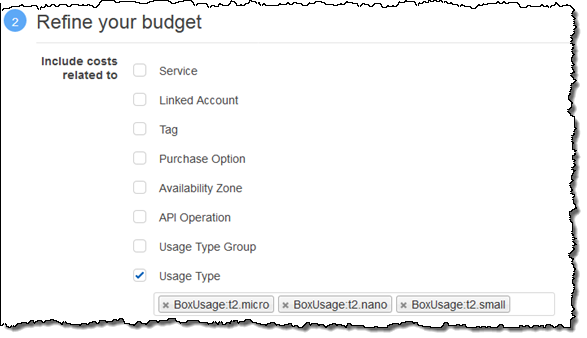

The usage type and usage type group filtering dimensions allow you to track your costs and usage from an aggregate level all the way down to the most basic unit of metering. For example, you can create a budget to track all EC2 usage (EC2-Running Hours):

Or a specific usage type, in this case three different sizes of T2 instances:

EC2 Systems Manager Support for Hierarchies, Tagging, and CloudWatch Events

This management service helps you to automatically collect software inventory, apply OS patches, create system images, and configure both Linux and Windows operating systems.

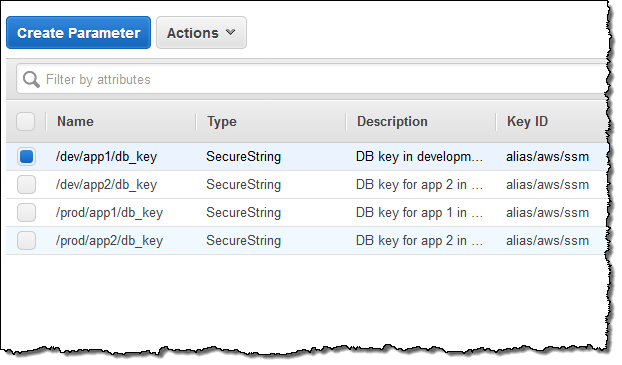

The Parameter Store (one of the service’s most popular features) stores configuration data such as database access strings and passwords in encrypted form. It is accessible from the CLI, APIs, and SDKs; this allows AWS Lambda functions and code running inside of Amazon ECS containers to access the same parameters.

We added support for storage of parameters in hierarchical form, giving you the ability to group them by organization, application, and so forth. You can also create parallel sets of parameters for use in development, testing, and production environments. To create a hierarchy of parameters, use names that include one or more “/” characters:
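A hierarchy like this can then be read back one branch at a time. The sketch below assumes the boto3 SSM client's `get_parameters_by_path` call and its `Path`, `Recursive`, `WithDecryption`, and `NextToken` arguments; the parameter names, values, and the `FakeSSM` stand-in are hypothetical and exist only so that the example runs without an AWS account.

```python
def load_parameters(ssm_client, path):
    """Return {name: value} for every parameter under `path`, following pagination."""
    params = {}
    kwargs = {"Path": path, "Recursive": True, "WithDecryption": True}
    while True:
        page = ssm_client.get_parameters_by_path(**kwargs)
        for param in page.get("Parameters", []):
            params[param["Name"]] = param["Value"]
        token = page.get("NextToken")
        if not token:
            return params
        kwargs["NextToken"] = token


class FakeSSM:
    """In-memory stand-in for the real client (illustration only)."""

    def __init__(self, store):
        self._store = store

    def get_parameters_by_path(self, Path, Recursive=False,
                               WithDecryption=False, NextToken=None):
        prefix = Path.rstrip("/") + "/"
        matches = []
        for name, value in sorted(self._store.items()):
            if not name.startswith(prefix):
                continue
            # Without Recursive, only direct children of the path are returned.
            if not Recursive and "/" in name[len(prefix):]:
                continue
            matches.append({"Name": name, "Value": value})
        return {"Parameters": matches}


# Parallel development and production branches under one application.
store = {
    "/myapp/dev/db_url": "dev-db.example.internal",
    "/myapp/prod/db_url": "prod-db.example.internal",
    "/myapp/prod/db_password": "not-a-real-secret",
}
prod = load_parameters(FakeSSM(store), "/myapp/prod")
```

Passing the client in as an argument keeps the function easy to test; in a real Lambda function or container you would hand it `boto3.client("ssm")` instead of the fake.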

We also added support for tagging. You can query parameters based on tags and you can add IAM permissions to parameters via tags.

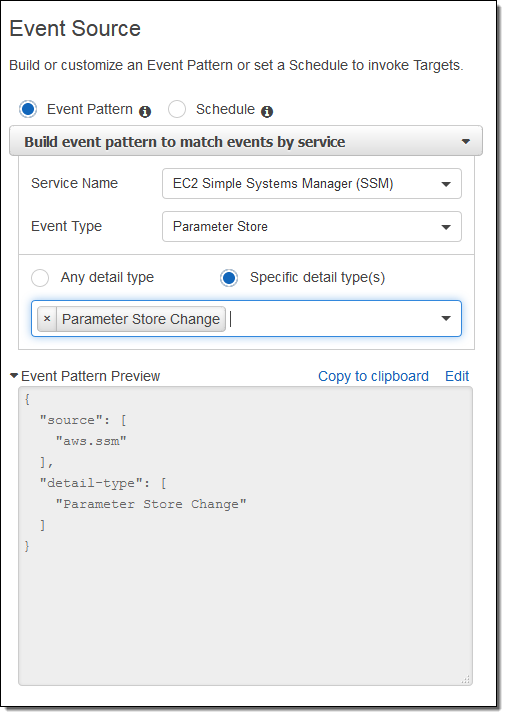

Finally, the Parameter Store is now a source of CloudWatch Events. You can now track changes to your parameters, perhaps making sure that they are not inadvertently changed in a way that could break an existing application:
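One way to act on these events is a small AWS Lambda function that flags changes to parameters you consider sensitive. The sketch below is illustrative only: it assumes that the event carries `source` set to `aws.ssm`, a `detail-type` of `Parameter Store Change`, and a `detail` object with `operation` and `name` fields, so check the event format in the documentation before relying on it. The `/myapp/prod/` prefix and the sample event are hypothetical.

```python
PROTECTED_PREFIX = "/myapp/prod/"  # hypothetical branch of the hierarchy


def is_protected_change(event, prefix=PROTECTED_PREFIX):
    """Return True if the event reports an update or delete under `prefix`."""
    if event.get("source") != "aws.ssm":
        return False
    if event.get("detail-type") != "Parameter Store Change":
        return False
    detail = event.get("detail", {})
    changed = detail.get("operation") in ("Update", "Delete")
    return changed and detail.get("name", "").startswith(prefix)


def handler(event, context=None):
    """Lambda-style entry point: report protected changes, ignore the rest."""
    if is_protected_change(event):
        detail = event["detail"]
        return f"ALERT: {detail['operation']} on {detail['name']}"
    return "ignored"


# Sample event, shaped according to the assumptions stated above.
sample_event = {
    "source": "aws.ssm",
    "detail-type": "Parameter Store Change",
    "detail": {"operation": "Update", "name": "/myapp/prod/db_url", "type": "String"},
}
result = handler(sample_event)
```

In practice the alert branch would publish to a notification topic or open a ticket rather than return a string.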

Keeping Up

In addition to reading this blog on a regular basis, you can also follow me and AWS Cloud on Twitter. You can also check out the AWS What’s New and subscribe to the RSS Feed.

— Jeff;

Roundup of AWS HIPAA Eligible Service Announcements

At AWS we have had a number of HIPAA eligible service announcements. Patrick Combes, the Healthcare and Life Sciences Global Technical Leader at AWS, and Aaron Friedman, a Healthcare and Life Sciences Partner Solutions Architect at AWS, have written this post to tell you all about it.

-Ana

We are pleased to announce that the following AWS services have been added to the BAA in recent weeks: Amazon API Gateway, AWS Direct Connect, AWS Database Migration Service, and Amazon SQS. All four of these services facilitate moving data into and through AWS, and we are excited to see how customers will be using these services to advance their solutions in healthcare. While we know the use cases for each of these services are vast, we wanted to highlight some ways that customers might use these services with Protected Health Information (PHI).

As with all HIPAA-eligible services covered under the AWS Business Associate Addendum (BAA), PHI must be encrypted while at rest or in transit. We encourage you to reference our HIPAA whitepaper, which details how you might configure each of AWS’ HIPAA-eligible services to store, process, and transmit PHI. And of course, for any portion of your application that does not touch PHI, you can use any of our 90+ services to deliver the best possible experience to your users. You can find some ideas on architecting for HIPAA on our website.

Amazon API Gateway

Amazon API Gateway is a web service that makes it easy for developers to create, publish, monitor, and secure APIs at any scale. With PHI now able to securely transit API Gateway, applications such as patient/provider directories, patient dashboards, medical device reports/telemetry, HL7 message processing and more can securely accept and deliver information to any number and type of applications running within AWS or client presentation layers.

We are particularly excited to see how our customers use Amazon API Gateway for the exchange of healthcare information. The Fast Healthcare Interoperability Resources (FHIR) specification will likely become the next-generation standard for how health information is shared between entities. With strong support for RESTful architectures, FHIR can be easily codified within an API on Amazon API Gateway. For more information on FHIR, our AWS Healthcare Competency partner, Datica, has an excellent primer.

AWS Direct Connect

Some of our healthcare and life sciences customers, such as Johnson & Johnson, leverage hybrid architectures and need to connect their on-premises infrastructure to the AWS Cloud. Using AWS Direct Connect, you can establish private connectivity between AWS and your datacenter, office, or colocation environment, which in many cases can reduce your network costs, increase bandwidth throughput, and provide a more consistent network experience than Internet-based connections.

In addition to a hybrid-architecture strategy, AWS Direct Connect can assist with the secure migration of data to AWS, which is the first step to using the wide array of our HIPAA-eligible services to store and process PHI, such as Amazon S3 and Amazon EMR. Additionally, you can connect to third-party/externally-hosted applications or partner-provided solutions as well as securely and reliably connect end users to those same healthcare applications, such as a cloud-based Electronic Medical Record system.

AWS Database Migration Service (DMS)

To date, customers have migrated over 20,000 databases to AWS through the AWS Database Migration Service. Customers often use DMS as part of their cloud migration strategy, and now it can be used to securely and easily migrate your core databases containing PHI to the AWS Cloud. As your source database remains fully operational during the migration with DMS, you minimize downtime for these business-critical applications as you migrate your databases to AWS. This service can now be utilized to securely transfer such items as patient directories, payment/transaction record databases, revenue management databases and more into AWS.

Amazon Simple Queue Service (SQS)

Amazon Simple Queue Service (SQS) is a message queueing service for reliably communicating among distributed software components and microservices at any scale. One way that we envision customers using SQS with PHI is to buffer requests between application components that pass HL7 or FHIR messages to other parts of their application. You can leverage features like SQS FIFO queues to ensure that your messages containing PHI are delivered in the order in which they were sent, and that each message remains available until a consumer processes and deletes it. This is important for applications that update patient records or process payment information in a hospital.
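To make the ordering idea concrete, here is a minimal sketch of how a message for a FIFO queue might be assembled. It assumes the `MessageGroupId` and `MessageDeduplicationId` parameters of the SQS `send_message` call; the queue URL, the record identifier, and the payload are placeholders, and deriving the deduplication ID from a hash of the body is just one possible choice.

```python
import hashlib
import json


def fifo_message(queue_url, record_id, payload):
    """Build send_message keyword arguments for a FIFO queue."""
    body = json.dumps(payload, sort_keys=True)
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        # Messages that share a group ID are delivered in the order sent.
        "MessageGroupId": record_id,
        # Identical bodies hash to the same ID, so an accidental resend
        # within the deduplication window is not delivered twice.
        "MessageDeduplicationId": hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }


# Placeholder queue URL and an opaque, hypothetical record identifier.
QUEUE_URL = "https://sqs.us-east-1.amazonaws.com/123456789012/record-updates.fifo"
first = fifo_message(QUEUE_URL, "record-0001", {"field": "status", "value": "admitted"})
retry = fifo_message(QUEUE_URL, "record-0001", {"value": "admitted", "field": "status"})
```

Using one group ID per record keeps updates to that record in order while still allowing updates to different records to be processed in parallel.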

Let’s get building!

We are beyond excited to see how our customers will use our newly HIPAA-eligible services as part of their healthcare applications. What are you most excited for? Leave a comment below.

AWS Database Migration Service – 20,000 Migrations and Counting

I first wrote about AWS Database Migration Service just about a year ago in my AWS Database Migration Service post. At that time I noted that over 1,000 AWS customers had already made use of the service as part of their move to AWS.

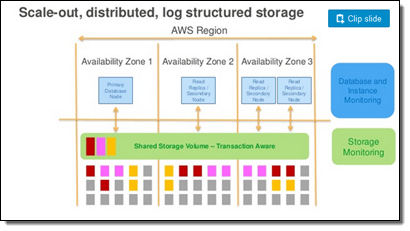

As a quick recap, AWS Database Migration Service and Schema Conversion Tool (SCT) help our customers migrate their relational data from expensive, proprietary databases and data warehouses (either on premises or in the cloud, but with restrictive licensing terms either way) to more cost-effective cloud-based databases and data warehouses such as Amazon Aurora, Amazon Redshift, MySQL, MariaDB, and PostgreSQL, with minimal downtime along the way. Our customers tell us that they love the flexibility and the cost-effective nature of these moves. For example, moving to Amazon Aurora gives them access to a database that is MySQL and PostgreSQL compatible, at 1/10th the cost of a commercial database. Take a peek at our AWS Database Migration Services Customer Testimonials to see how Expedia, Thomas Publishing, Pega, and Veoci have made use of the service.

20,000 Unique Migrations

I’m pleased to be able to announce that our customers have already used AWS Database Migration Service to migrate 20,000 unique databases to AWS and that the pace continues to accelerate (we reached 10,000 migrations in September of 2016).

We’ve added many new features to DMS and SCT over the past year. Here’s a summary:

- March 2016 – General availability in eight AWS regions.

- May 2016 – Addition of Redshift as a migration target.

- July 2016 – Highly available architecture for ongoing replication.

- July 2016 – SSL-enabled endpoints & support for Sybase as a migration source and target.

- August 2016 – Expansion to Asia Pacific (Mumbai), Asia Pacific (Seoul), and South America (São Paulo) Regions.

- October 2016 – Expansion to US East (Ohio) Region.

- December 2016 – Support for Oracle SSL connections.

- December 2016 – Expansion to Canada (Central) and EU (London) Regions.

- January 2017 – Support for events and event subscriptions.

- February 2017 – Migration from Oracle and Teradata data warehouses to Amazon Redshift.

Learn More

Here are some resources that will help you to learn more and to get your own migrations underway, starting with some recent webinars:

Migrating From Sharded to Scale-Up – Some of our customers implemented a scale-out strategy in order to deal with their relational workload, sharding their database across multiple independent pieces, each running on a separate host. As part of their migration, these customers often consolidate two or more shards onto a single Aurora instance, reducing complexity, increasing reliability, and saving money along the way. If you’d like to do this, check out the blog post, webinar recording, and presentation.

Migrating From Oracle or SQL Server to Aurora – Other customers migrate from commercial databases such as Oracle or SQL Server to Aurora. If you would like to do this, check out this presentation and the accompanying webinar recording.

We also have plenty of helpful blog posts on the AWS Database Blog:

- Reduce Resource Consumption by Consolidating Your Sharded System into Aurora – “You might, in fact, save bunches of money by consolidating your sharded system into a single Aurora instance or fewer shards running on Aurora. That is exactly what this blog post is all about.”

- How to Migrate Your Oracle Database to Amazon Aurora – “This blog post gives you a quick overview of how you can use the AWS Schema Conversion Tool (AWS SCT) and AWS Database Migration Service (AWS DMS) to facilitate and simplify migrating your commercial database to Amazon Aurora. In this case, we focus on migrating from Oracle to the MySQL-compatible Amazon Aurora.”

- Cross-Engine Database Replication Using AWS Schema Conversion Tool and AWS Database Migration Service – “AWS SCT makes heterogeneous database migrations easier by automatically converting source database schema. AWS SCT also converts the majority of custom code, including views and functions, to a format compatible with the target database.”

- Database Migration—What Do You Need to Know Before You Start? – “Congratulations! You have convinced your boss or the CIO to move your database to the cloud. Or you are the boss, CIO, or both, and you finally decided to jump on the bandwagon. What you’re trying to do is move your application to the new environment, platform, or technology (aka application modernization), because usually people don’t move databases for fun.”

- How to Script a Database Migration – “You can use the AWS DMS console or the AWS CLI or the AWS SDK to perform the database migration. In this blog post, I will focus on performing the migration with the AWS CLI.”

The documentation includes five helpful walkthroughs:

- Migrating Databases to Amazon Web Services.

- Migrating an On-Premises Oracle Database to Amazon Aurora Using AWS Database Migration Service.

- Migrating an Amazon RDS Oracle Database to Amazon Aurora Using AWS Database Migration Service.

- Migrating MySQL-Compatible Databases to AWS.

- Migrating MySQL-Compatible Databases to Amazon Aurora.

There’s also a hands-on lab (you will need to register in order to participate).

See You at a Summit

The DMS team is planning to attend and present at many of our upcoming AWS Summits and would welcome the opportunity to discuss your database migration requirements in person.

— Jeff;

AWS Database Migration Service

Do you currently store relational data in an on-premises Oracle, SQL Server, MySQL, MariaDB, or PostgreSQL database? Would you like to move it to the AWS cloud with virtually no downtime so that you can take advantage of the scale, operational efficiency, and the multitude of data storage options that are available to you?

If so, the new AWS Database Migration Service (DMS) is for you! It was first announced last fall at AWS re:Invent, and our customers have already used it to migrate over 1,000 on-premises databases to AWS. You can move live, terabyte-scale databases to the cloud, with options to stick with your existing database platform or to upgrade to a new one that better matches your requirements. If you are migrating to a new database platform as part of your move to the cloud, the AWS Schema Conversion Tool will convert your schemas and stored procedures for use on the new platform.

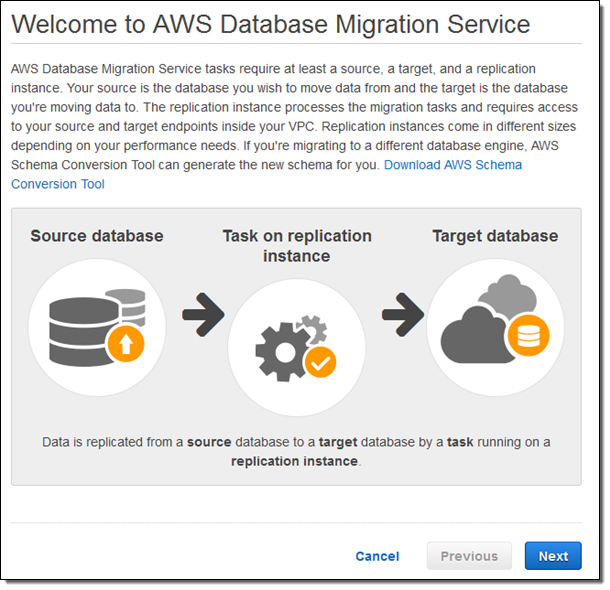

The AWS Database Migration Service works by setting up and then managing a replication instance on AWS. This instance unloads data from the source database and loads it into the destination database, and can be used for a one-time migration followed by on-going replication to support a migration that entails minimal downtime. Along the way DMS handles many of the complex details associated with migration, including data type transformation and conversion from one database platform to another (Oracle to Aurora, for example). The service also monitors the replication and the health of the instance, notifies you if something goes wrong, and automatically provisions a replacement instance if necessary.

The service supports many different migration scenarios and networking options. One of the endpoints must always be in AWS; the other can be on-premises, running on an EC2 instance, or running on an RDS database instance. The source and destination can reside within the same Virtual Private Cloud (VPC) or in two separate VPCs (if you are migrating from one cloud database to another). You can connect to an on-premises database via the public Internet or via AWS Direct Connect.

Migrating a Database

You can set up your first migration with a couple of clicks! You simply create the target database, migrate the database schema, set up the data replication process, and initiate the migration. After the target database has caught up with the source, you simply switch to using it in your production environment.

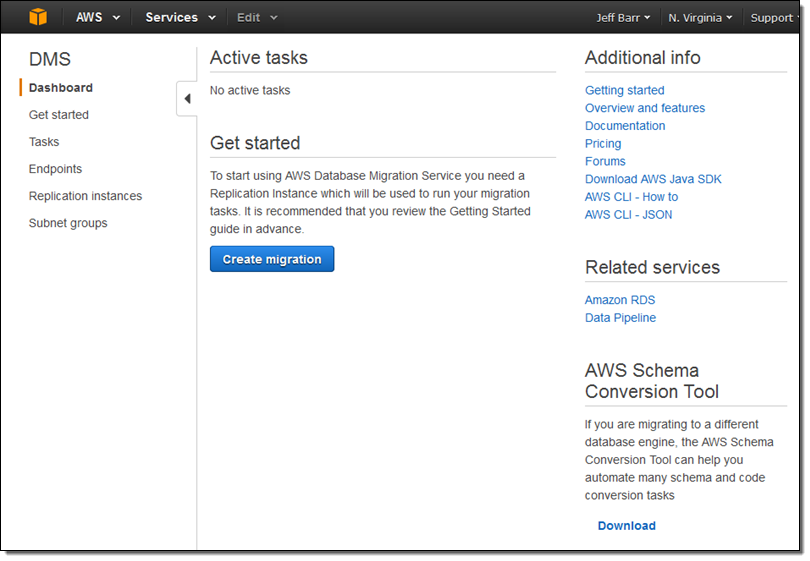

I start by opening up the AWS Database Migration Service Console (in the Database section of the AWS Management Console as DMS) and clicking on Create migration.

The Console provides me with an overview of the migration process:

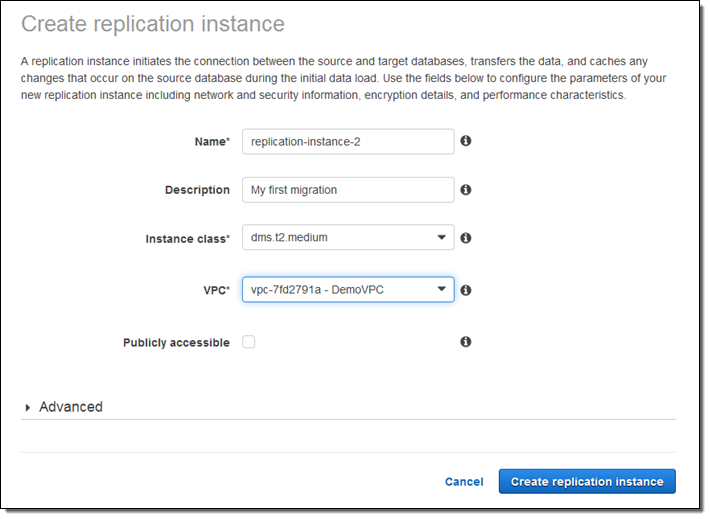

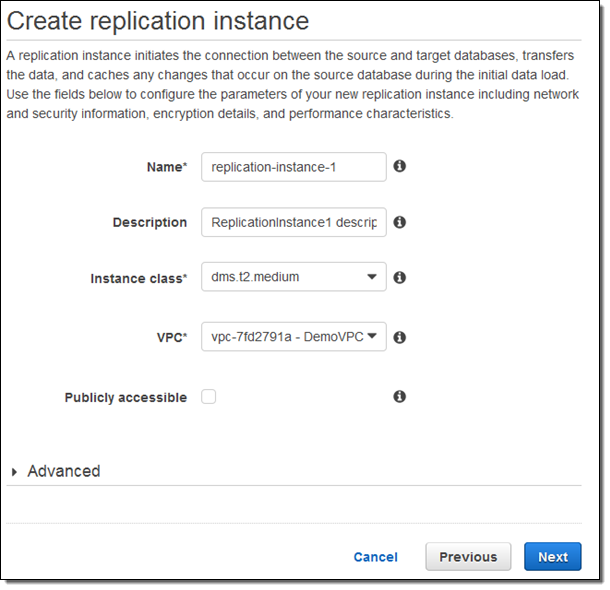

I click on Next and provide the parameters that are needed to create my replication instance:

For this blog post, I selected one of my existing VPCs and unchecked Publicly accessible. My colleagues had already set me up with an EC2 instance to represent my “on-premises” database.

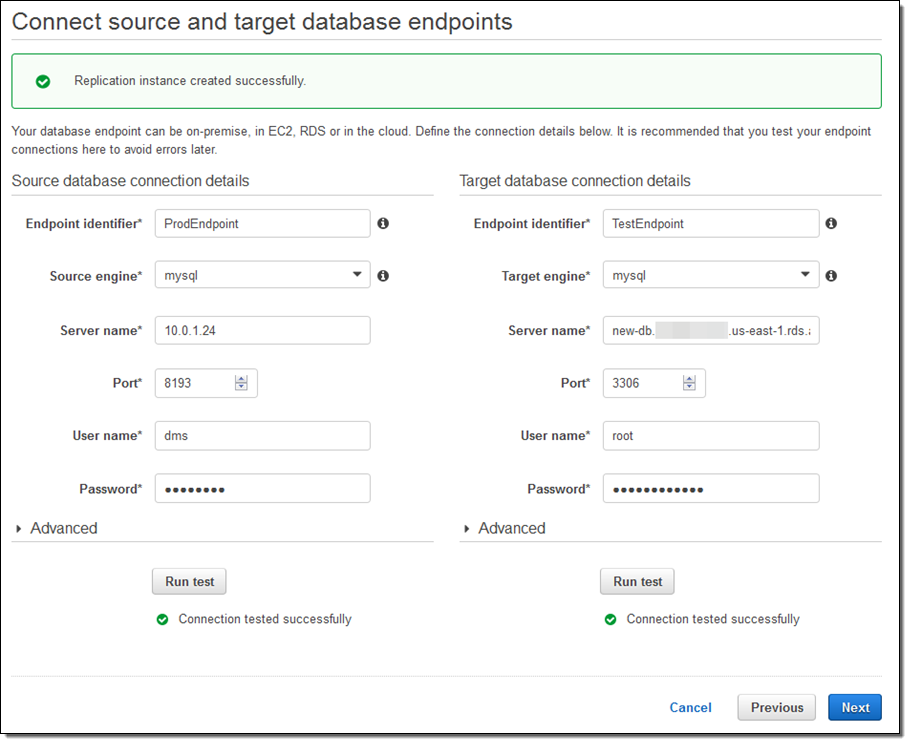

After the replication instance has been created, I specify my source and target database endpoints and then click on Run test to make sure that the endpoints are accessible (truth be told, I spent some time adjusting my security groups in order to make the tests pass):

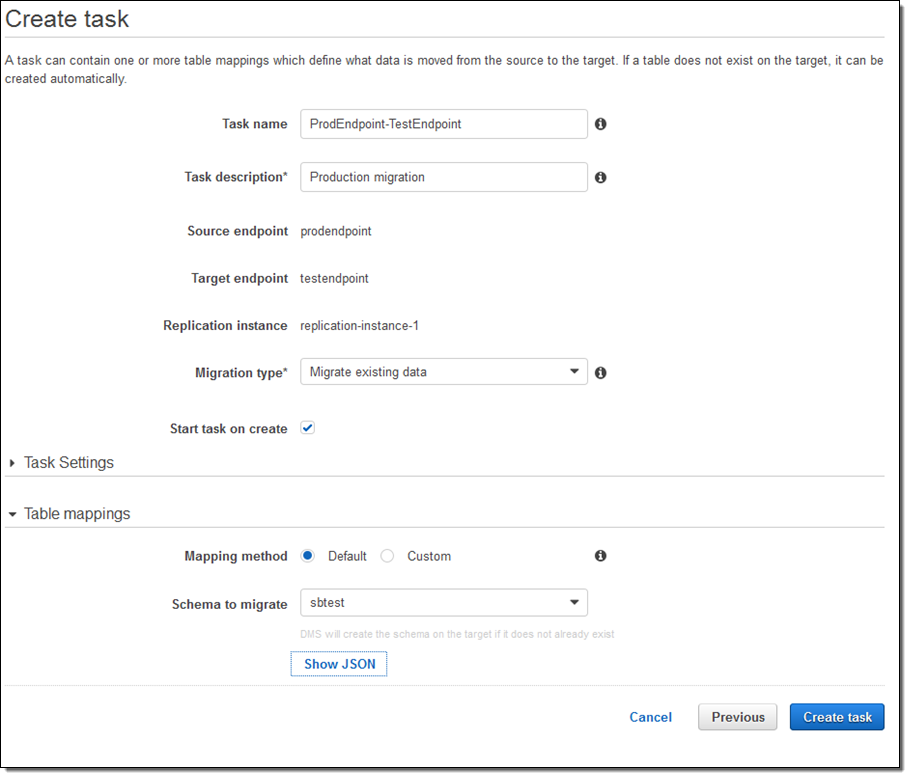

Now I create the actual migration task. I can (per the Migration type) migrate existing data, migrate and then replicate, or replicate going forward:

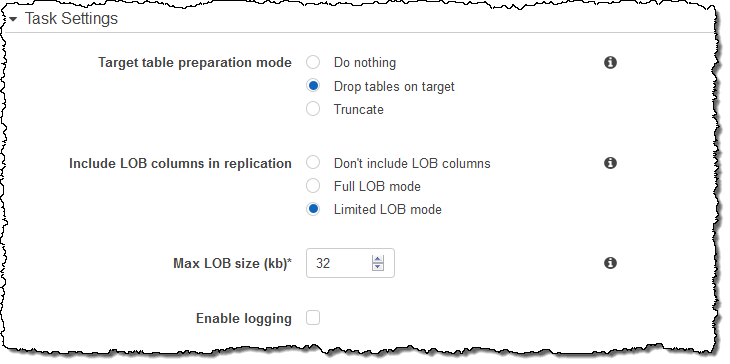
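The three choices correspond to the migration types that the DMS API accepts. Here is a minimal sketch of the same task expressed as request parameters, assuming the boto3 `create_replication_task` parameter names and the `full-load`, `full-load-and-cdc`, and `cdc` values; the task name and ARNs are placeholders, and the dictionary keys simply reuse my wording from above rather than the exact console labels.

```python
import json

# My descriptions of the three options, mapped to the API's MigrationType values.
MIGRATION_TYPES = {
    "migrate existing data": "full-load",
    "migrate and then replicate": "full-load-and-cdc",
    "replicate going forward": "cdc",
}

# Default table mapping: include every table in every schema.
INCLUDE_ALL = {
    "rules": [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-all",
            "object-locator": {"schema-name": "%", "table-name": "%"},
            "rule-action": "include",
        }
    ]
}


def replication_task_params(name, source_arn, target_arn, instance_arn, choice):
    """Build keyword arguments for a replication task of the chosen type."""
    return {
        "ReplicationTaskIdentifier": name,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": MIGRATION_TYPES[choice],
        "TableMappings": json.dumps(INCLUDE_ALL),  # the API expects a JSON string
    }


# Placeholder ARNs; real ones come back when the endpoints and instance are created.
task = replication_task_params(
    "my-first-task",
    "arn:aws:dms:us-east-1:123456789012:endpoint:SOURCE",
    "arn:aws:dms:us-east-1:123456789012:endpoint:TARGET",
    "arn:aws:dms:us-east-1:123456789012:rep:INSTANCE",
    "migrate and then replicate",
)
```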

I could have clicked on Task Settings to set some other options (LOBs are Large Objects):

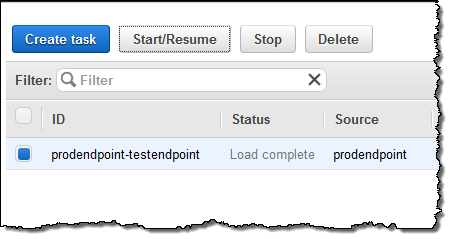

The migration task is ready, and will begin as soon as I select it and click on Start/Resume:

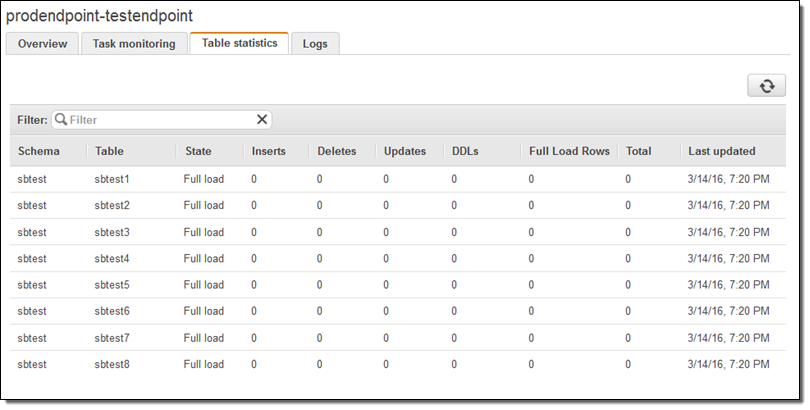

I can watch for progress, and then inspect the Table statistics to see what happened (these were test tables and the results are not very exciting):

At this point I would do some sanity checks and then point my application to the new endpoint. I could also have chosen to perform an ongoing replication.

The AWS Database Migration Service offers many options and I have barely scratched the surface. You can, for example, choose to migrate only certain tables. You can also create several different types of replication tasks and activate them at different times. I highly recommend you read the DMS documentation as it does a great job of guiding you through your first migration.
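As an illustration of migrating only certain tables, the sketch below generates a table-mapping document with one selection rule per table. It assumes the JSON rule format that DMS uses for table mappings (`rule-type`, `rule-id`, `rule-name`, `object-locator`, `rule-action`); the schema and table names are made up.

```python
import json


def selection_rules(schema, tables):
    """Return a table-mapping JSON string that includes only the named tables."""
    rules = []
    for rule_id, table in enumerate(tables, start=1):
        rules.append({
            "rule-type": "selection",
            "rule-id": str(rule_id),  # each rule needs a unique ID
            "rule-name": f"include-{table}",
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": "include",
        })
    return json.dumps({"rules": rules}, indent=2)


# Hypothetical schema and tables.
mappings = selection_rules("sales", ["customers", "orders"])
print(mappings)
```

The resulting string is the kind of value you would supply as the table mappings when creating a task from the CLI or an SDK.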

If you need to migrate a collection of databases, you can automate your work using the AWS Command Line Interface (CLI) or the Database Migration Service API.

Price and Availability

The AWS Database Migration Service is available in the US East (Northern Virginia), US West (Oregon), US West (Northern California), EU (Ireland), EU (Frankfurt), Asia Pacific (Tokyo), Asia Pacific (Singapore), and Asia Pacific (Sydney) Regions and you can start using it today (we plan to add support for other Regions in the coming months).

Pricing is based on the compute resources used during the migration process, with a charge for longer-term storage of logs. See the Database Migration Service Pricing page for more information.

— Jeff;