Category: AWS OpsWorks

New – AWS OpsWorks for Chef Automate

AWS OpsWorks helps you to configure and run applications using Chef. You use a Domain Specific Language (DSL) to write cookbooks that define your application’s architecture and the configuration of each component. The Chef server is an essential part of the configuration process. It stores all of the cookbooks and tracks state information for each of the instances (nodes in Chef terminology).

Because the Chef server is in the critical path when newly launched instances are configured, it must be reliable. Many OpsWorks and Chef users install and maintain this important architectural component themselves. In production-scale environments, this leaves them to handle backups, restores, version upgrades, and so forth.

New AWS OpsWorks for Chef Automate

Early this month we launched AWS OpsWorks for Chef Automate from the AWS re:Invent stage. You can launch the Chef Automate server with just 3 clicks and start using it within minutes. You can use community cookbooks from Chef Supermarket and community tools such as Test Kitchen and Knife.

You can use Chef Automate to manage your infrastructure throughout your application’s life cycle. For example, newly launched EC2 instances can automatically connect to the Chef server and run a specified recipe by using an unattended association script (read Adding Nodes Automatically in AWS OpsWorks for Chef Automate to learn more). The same script can register EC2 instances created dynamically through an Auto Scaling group, as well as on-premises servers.

Take a Look

Let’s launch a Chef Automate server from the OpsWorks Console. Click on Go to OpsWorks for Chef Automate to get started.

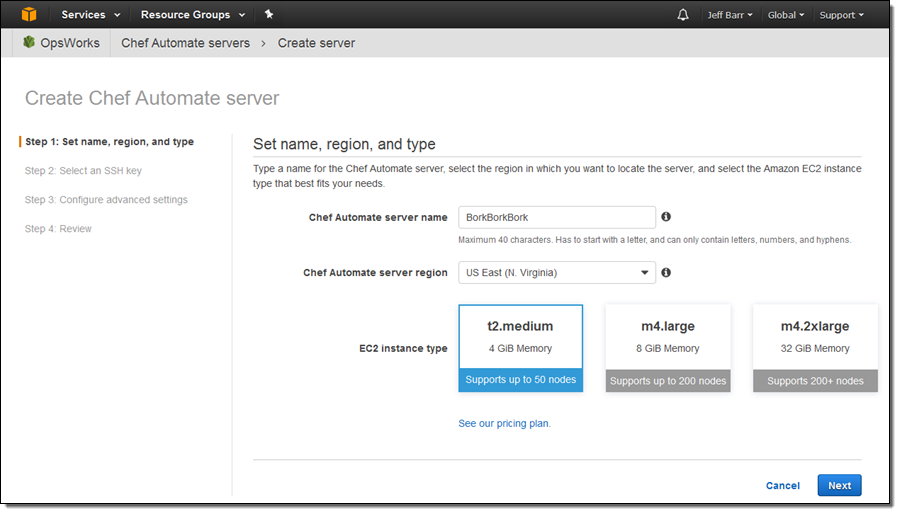

Click on Create Chef Automate server, give your server a name, choose a region, and select a suitable EC2 instance type:

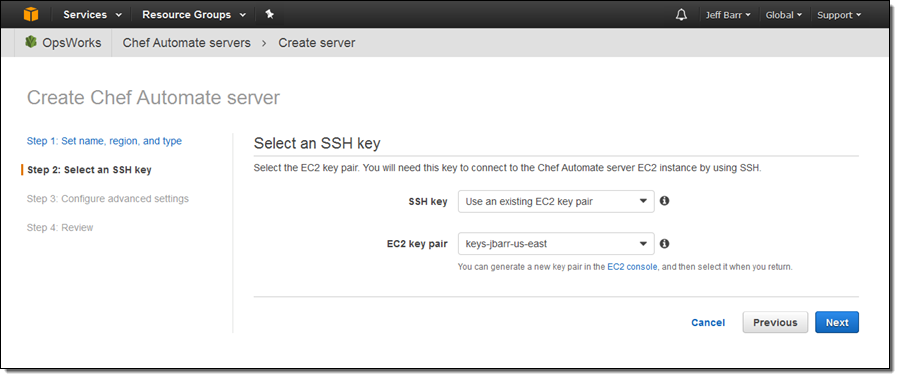

Choose one of your SSH key pairs, or opt out of SSH:

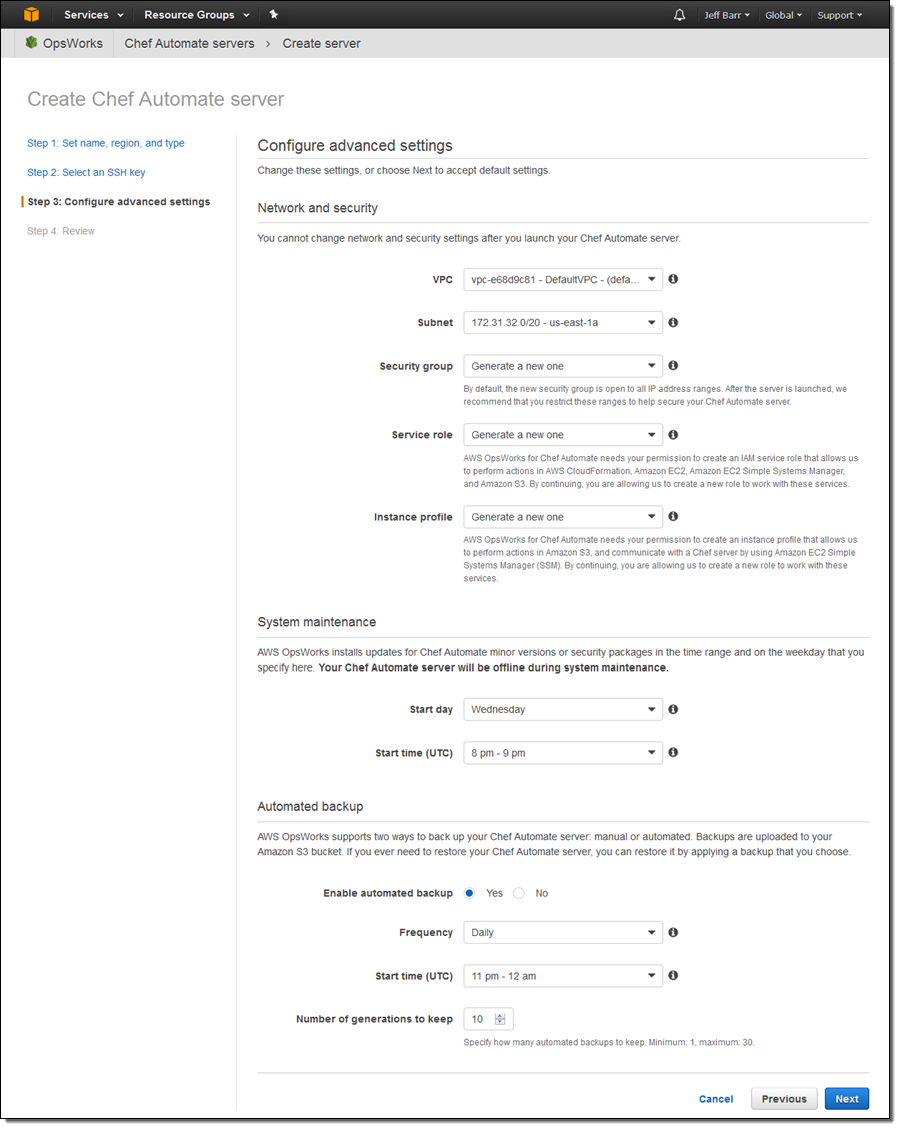

Finally, configure your network (VPC), IAM, maintenance window, and backup settings:

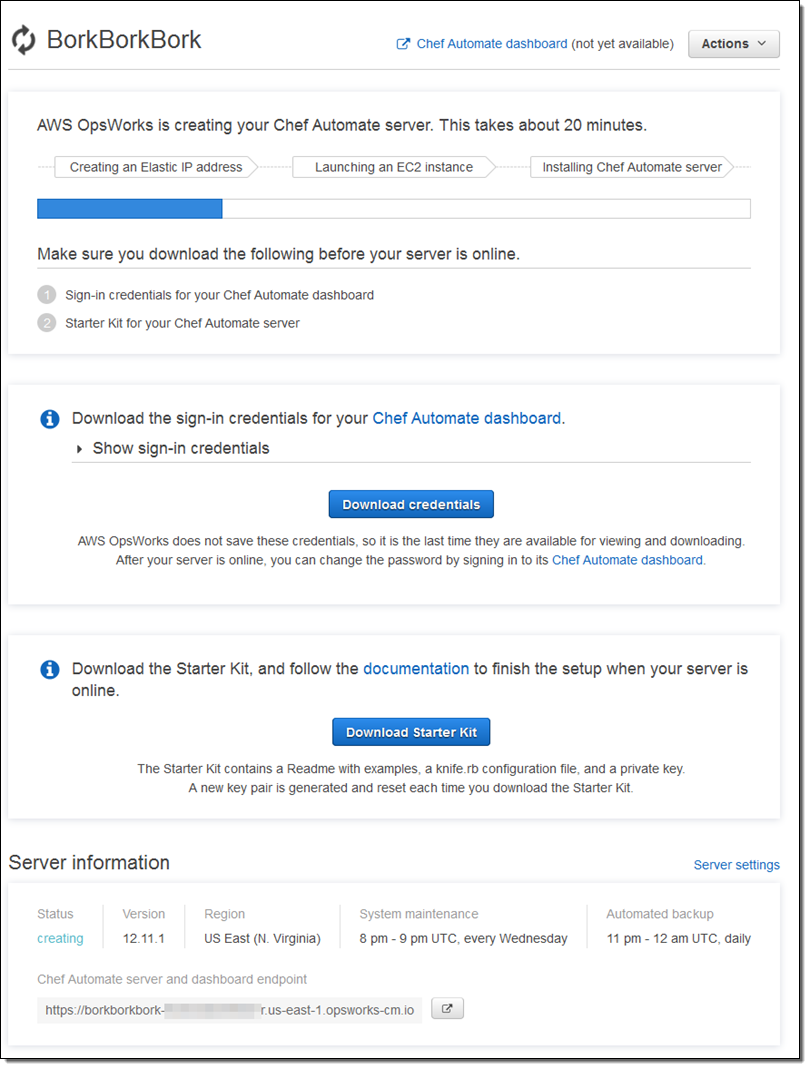

Click on Next, review your settings, and then click on Launch! The launch process takes less than 20 minutes. During that time you can download the sign-in credentials for your Chef Automate dashboard along with a Starter Kit:

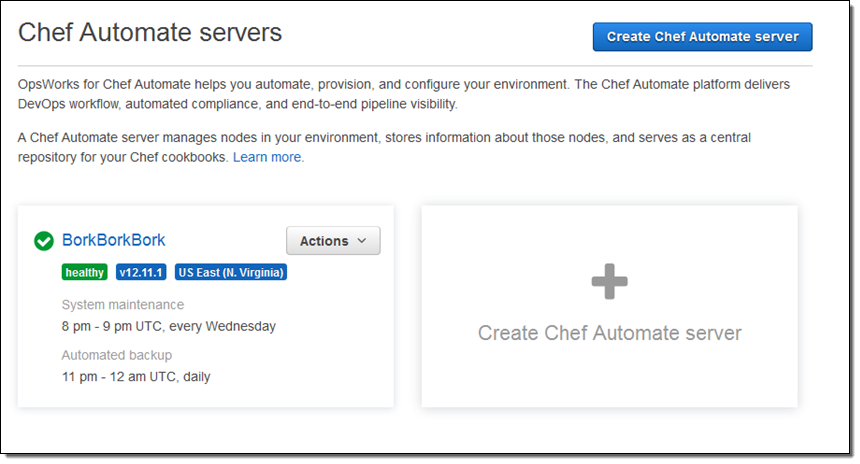

You can see all of your Chef Automate servers at a glance:

Click on the server name (BorkBorkBork here), and then on Open Chef Automate dashboard, then enter your credentials to log in:

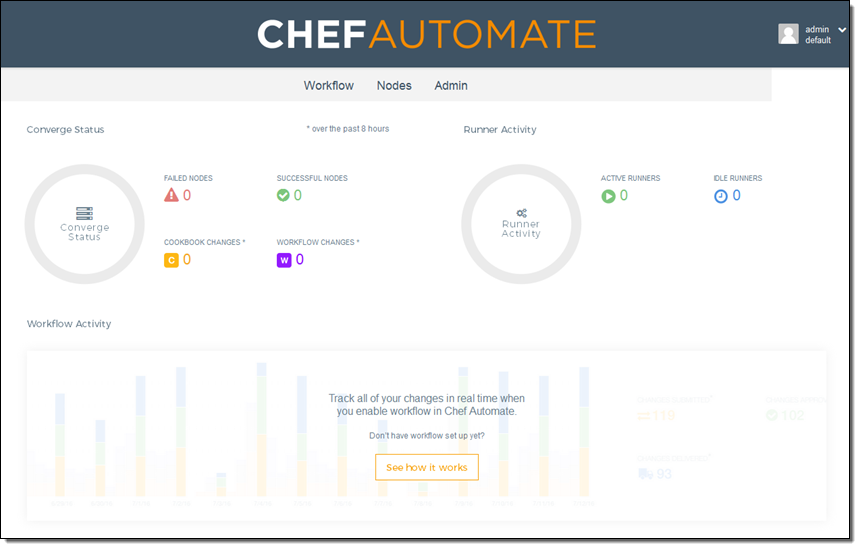

And here’s the dashboard:

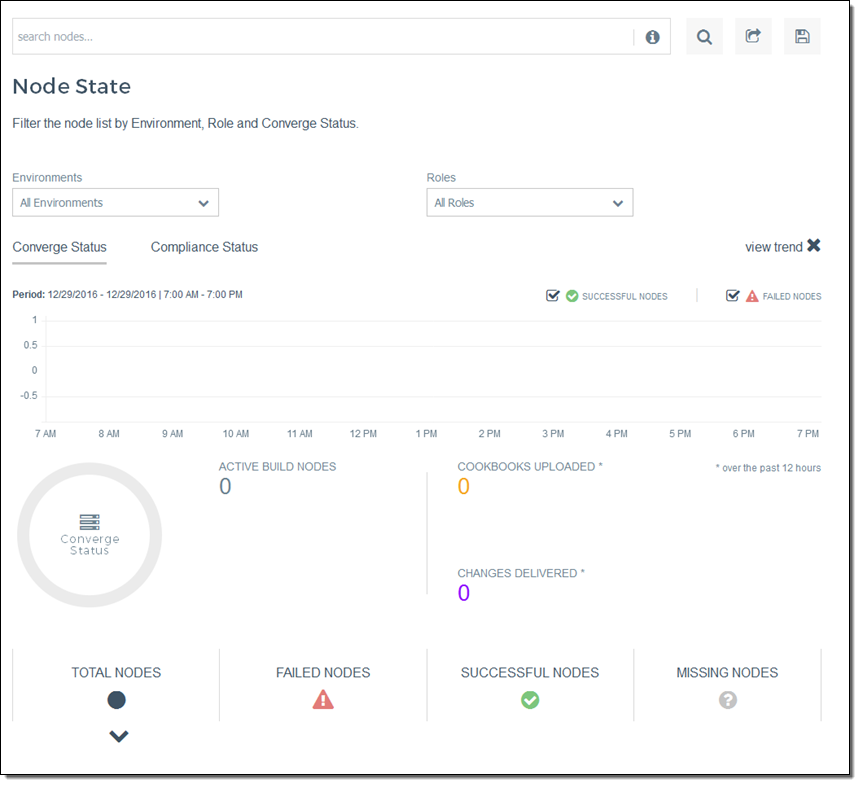

You can see and manage your nodes:

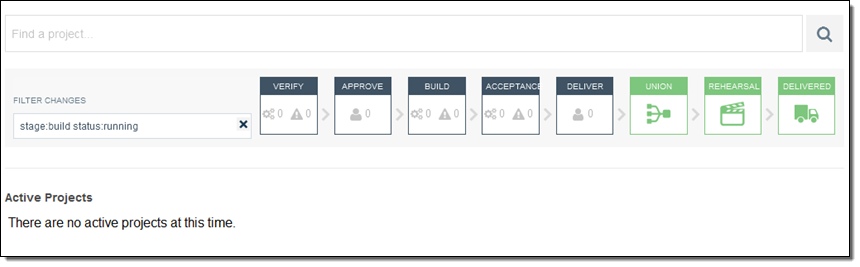

Manage your workflows:

And much more!

Behind the scenes, the launch process invokes an AWS CloudFormation template. The template creates an EC2 instance, an Elastic IP Address, and a Security Group.
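To make the shape of such a template concrete, here is a minimal Python sketch that assembles a CloudFormation-style document containing the three resource types named above. The logical IDs (`ChefServer`, `ChefServerAddress`, `ChefServerSecurityGroup`), the instance type, and the single HTTPS ingress rule are illustrative assumptions; this is not the template that OpsWorks actually uses.

```python
import json


def build_template(instance_type="m4.large"):
    """Return a CloudFormation-style template as a plain dict.

    All logical IDs and property values are hypothetical placeholders.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # The AMI is supplied as a parameter rather than hard-coded.
            "ImageId": {"Type": "AWS::EC2::Image::Id"},
        },
        "Resources": {
            "ChefServerSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Allow HTTPS to the Chef Automate server",
                    "SecurityGroupIngress": [
                        {"IpProtocol": "tcp", "FromPort": 443,
                         "ToPort": 443, "CidrIp": "0.0.0.0/0"},
                    ],
                },
            },
            "ChefServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": {"Ref": "ImageId"},
                    "InstanceType": instance_type,
                    "SecurityGroupIds": [
                        {"Fn::GetAtt": ["ChefServerSecurityGroup", "GroupId"]},
                    ],
                },
            },
            "ChefServerAddress": {
                "Type": "AWS::EC2::EIP",
                # Attach the Elastic IP to the instance defined above.
                "Properties": {"InstanceId": {"Ref": "ChefServer"}},
            },
        },
    }


template = build_template()
resource_types = sorted(r["Type"] for r in template["Resources"].values())
print(json.dumps(template, indent=2))
```

Building the template as a dictionary keeps the sketch easy to inspect; a real template would carry many more properties (subnet, IAM instance profile, user data, and so on).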

Available Now

You can launch AWS OpsWorks for Chef Automate today in the US East (Northern Virginia), US West (Oregon), and EU (Ireland) Regions. Pricing is based on the number of nodes and the number of hours that they are connected to the server; see the Chef Automate Pricing page for more info. As part of the AWS Free Tier, you can use up to 10 nodes at no charge for 12 months.

— Jeff;

Human Longevity, Inc. – Changing Medicine Through Genomics Research

Human Longevity, Inc. (HLI) is at the forefront of genomics research and wants to build the world’s largest database of human genomes along with related phenotype and clinical data, all in support of preventive healthcare. In today’s guest post, Yaron Turpaz, Bryan Coon, and Ashley Van Zeeland talk about how they are using AWS to store the massive amount of data that is being generated as part of this effort to revolutionize medicine.

— Jeff;

When Human Longevity, Inc. launched in 2013, our founders recognized the challenges ahead. A genome contains all the information needed to build and maintain an organism; in humans, a copy of the entire genome, which contains more than three billion DNA base pairs, is contained in all cells that have a nucleus. Our goal is to sequence one million genomes and deliver that information—along with integrated health records and disease-risk models—to researchers and physicians. They, in turn, can interpret the data to provide targeted, personalized health plans and identify the optimal treatment for cancer and other serious health risks far earlier than has been possible in the past. The intent is to transform medicine by fostering preventive healthcare and risk prevention in place of the traditional “sick care” model, when people wind up seeing their doctors only after symptoms manifest.

Our work in developing and applying large-scale computing and machine learning to genomics research entails the collection, analysis, and storage of immense amounts of data from DNA-sequencing technology provided by companies like Illumina. Raw data from a single genome consumes about 100 gigabytes; that number increases as we align the genomic information with annotation and phenotype sources and analyze it for health insights.
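As a rough back-of-the-envelope check on that scale, the short Python sketch below multiplies the figures quoted in this post (about 100 gigabytes of raw data per genome, and a goal of one million genomes). It assumes decimal units and counts raw data only, so it is a lower bound rather than a sizing estimate.

```python
GB_PER_GENOME = 100          # raw data per genome, from the text
TARGET_GENOMES = 1_000_000   # sequencing goal, from the text
GB_PER_PB = 1_000_000        # decimal units: 1 PB = 10**6 GB


def raw_storage_pb(genomes, gb_per_genome=GB_PER_GENOME):
    """Raw sequencing data volume in petabytes (decimal units)."""
    return genomes * gb_per_genome / GB_PER_PB


print(raw_storage_pb(TARGET_GENOMES))  # 100.0
```

In other words, the raw data alone for one million genomes would be on the order of 100 petabytes, before any annotation or analysis output is added.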

From the beginning, we knew our choice of compute and storage technology would have a direct impact on the success of the company. Using the cloud was clearly the best option. We’re experts in genomics, and don’t want to spend resources building and maintaining an IT infrastructure. We chose to go all in on AWS for the breadth of the platform, the critical scalability we need, and the expertise AWS has developed in big data. We also saw that the pace of innovation at AWS—and its deliberate strategy of keeping costs as low as possible for customers—would be critical in enabling our vision.

Leveraging the Range of AWS Services

Spectral karyotype analysis / Image courtesy of Human Longevity, Inc.

Today, we’re using a broad range of AWS services for all kinds of compute and storage tasks. For example, the HLI Knowledgebase leverages a distributed system infrastructure comprised of Amazon S3 storage and a large number of Amazon EC2 nodes. This helps us achieve resource isolation, scalability, speed of provisioning, and near real-time response time for our petabyte-scale database queries and dynamic cohort builder. The flexibility of AWS services makes it possible for our customized Amazon Machine Images and pre-built, BTRFS-partitioned Amazon EBS volumes to achieve turn-up time in seconds instead of minutes. We use Amazon EMR for executing Spark queries against our data lake at the scale we need. AWS Lambda is a fantastic tool for hooking into Amazon S3 events and communicating with apps, allowing us to simply drop in code with the business logic already taken care of. We use Auto Scaling based on demand, and AWS OpsWorks for managing a Docker pipeline.

We also leverage the cost controls provided by Amazon EC2 Spot and Reserved Instance types. When we first started, we used on-demand instances, but the costs started to grow significantly. With Spot and Reserved Instances, we can allocate compute resources based on specific needs and workflows. The flexibility of AWS services enables us to make extensive use of dockerized containers through the resource-management services provided by Apache Mesos. Hundreds of dynamic Amazon EC2 nodes in both our persistent and spot abstraction layers are dynamically adjusted to scale up or down based on usage demand and the latest AWS pricing information. We achieve substantial savings by sharing this dynamically scaled compute cluster with our Knowledgebase service and the internal genomic and oncology computation pipelines. This flexibility gives us the compute power we need while keeping costs down. We estimate these choices have helped us reduce our compute costs by up to 50 percent from the on-demand model.

We’ve also worked with AWS Professional Services to address a particularly hard data-storage challenge. We have genomics data in hundreds of Amazon S3 buckets, many of them in the petabyte range and containing billions of objects. Within these collections are millions of objects that are unused, or used once or twice and never to be used again. It can be overwhelming to sift through these billions of objects in search of one in particular. It presents an additional challenge when trying to identify what files or file types are candidates for the Amazon S3-Infrequent Access storage class. Professional Services helped us with a solution for indexing Amazon S3 objects that saves us time and money.
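The idea of using an object index to find Infrequent Access candidates can be sketched in a few lines of Python. Everything here is hypothetical: the `IndexedObject` record, the 90-day idle threshold, and the sample data are assumptions for illustration, and this is not the solution that Professional Services built. The size floor reflects the fact that the Infrequent Access storage class bills small objects at a minimum of 128 KB, so very small objects are rarely worth moving.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class IndexedObject:
    """One row of a hypothetical S3 object index."""
    bucket: str
    key: str
    size_bytes: int
    last_accessed: datetime


def infrequent_access_candidates(index, now, idle_days=90,
                                 min_size_bytes=128 * 1024):
    """Return objects that have been idle for at least `idle_days`
    and are at least `min_size_bytes` in size."""
    cutoff = now - timedelta(days=idle_days)
    return [
        obj for obj in index
        if obj.last_accessed <= cutoff and obj.size_bytes >= min_size_bytes
    ]


now = datetime(2016, 12, 1)
index = [
    IndexedObject("genomes-a", "sample1.bam", 90 * 10**9, datetime(2016, 1, 15)),
    IndexedObject("genomes-a", "sample2.bam", 95 * 10**9, datetime(2016, 11, 20)),
    IndexedObject("genomes-b", "notes.txt", 2_000, datetime(2015, 6, 1)),
]
candidates = infrequent_access_candidates(index, now)
print([obj.key for obj in candidates])  # ['sample1.bam']
```

With an index like this, the question "which objects should change storage class?" becomes a query over metadata instead of a scan over billions of objects.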

Moving Faster at Lower Cost

Our decision to use AWS came at the right time, occurring at the inflection point of two significant technologies: gene sequencing and cloud computing. Not long ago, it took a full year and cost about $100 million to sequence a single genome. Today we can sequence a genome in about three days for a few thousand dollars. This dramatic improvement in speed and lower cost, along with rapidly advancing visualization and analytics tools, allows us to collect and analyze vast amounts of data in close to real time. Users can take that data and test a hypothesis on a disease in a matter of days or hours, compared to months or years. That ultimately benefits patients.

Our business includes HLI Health Nucleus, a genomics-powered clinical research program that uses whole-genome sequence analysis, advanced clinical imaging, machine learning, and curated personal health information to deliver the most complete picture of individual health. We believe this will dramatically enhance the practice of medicine as physicians identify, treat, and prevent diseases, allowing their patients to live longer, healthier lives.

— Yaron Turpaz (Chief Information Officer), Bryan Coon (Head of Enterprise Services), and Ashley Van Zeeland (Chief Technology Officer).

Learn More

Learn more about how AWS supports genomics in the cloud, and see how genomics innovator Illumina uses AWS for accelerated, cost-effective gene sequencing.

AWS OpsWorks Update – Provision & Manage ECS Container Instances; Run RHEL 7

AWS OpsWorks makes it easy for you to deploy applications of all shapes and sizes. It provides you with an integrated management experience that spans the entire application lifecycle including resource provisioning, EBS volume setup, configuration management, application deployment, monitoring, and access control (read my introductory post, AWS OpsWorks – Flexible Application Management in the Cloud Using Chef for more information).

Amazon EC2 Container Service is a highly scalable container management service that supports Docker containers and allows you to easily run applications on a managed cluster of Amazon Elastic Compute Cloud (EC2) instances (again, I have an introductory post if you’d like to learn more: Amazon EC2 Container Service (ECS) – Container Management for the AWS Cloud).

ECS and RHEL Support

Today, in the finest “peanut butter and chocolate” tradition, we are adding support for ECS Container Instances to OpsWorks. You can now provision and manage ECS Container Instances that are running Ubuntu 14.04 LTS or the Amazon Linux 2015.03 AMI.

We are also adding support for Red Hat Enterprise Linux (RHEL) 7.1.

Let’s take a closer look at both features!

Support for ECS Container Instances

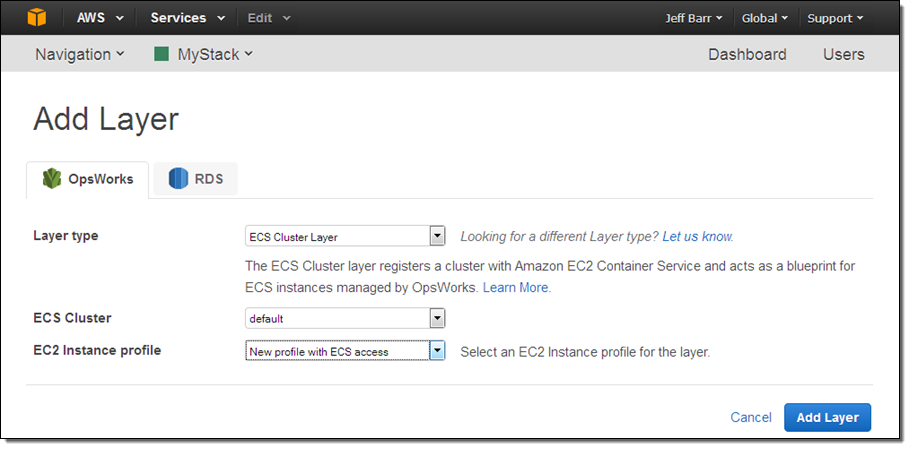

The new ECS Cluster layer type makes it easy for you to provision and configure ECS Container Instances. You simply create the layer, specify the name and instance type for the cluster (which must already exist), define and attach EBS volumes as desired, and you are good to go. The instances will be provisioned with Docker, the ECS agent, and the OpsWorks agent, and will be registered with the ECS cluster associated with the ECS Cluster layer.

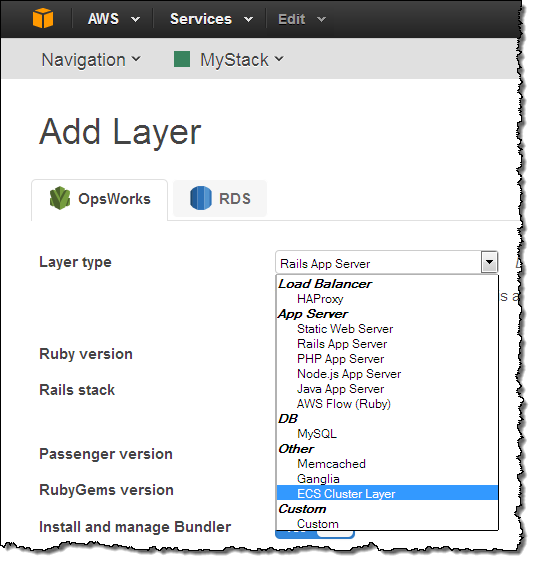

It is really easy to get started. Simply add a new layer and select the ECS Cluster Layer type:

Then choose a cluster and a profile:

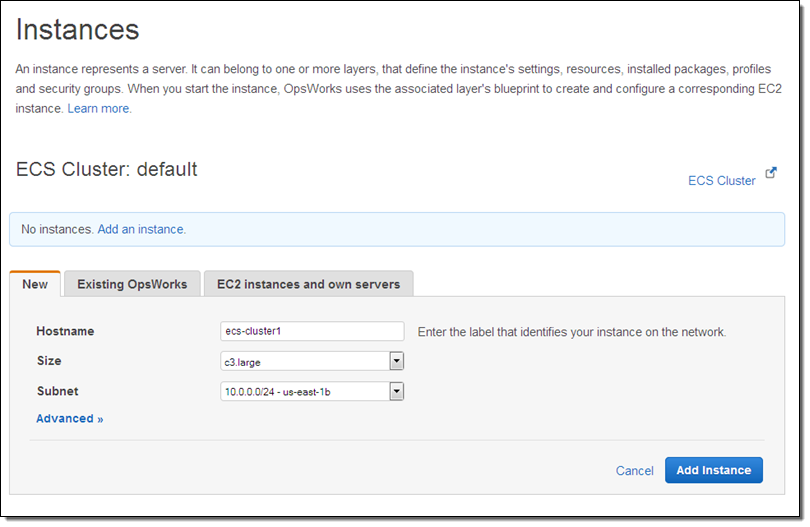

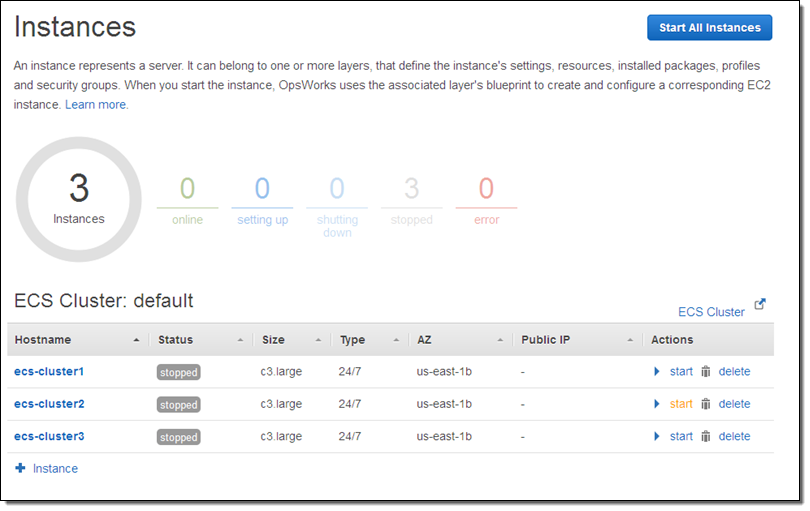

The next step is to add instances to the cluster. This takes just a couple of clicks per instance:

As is always the case with OpsWorks, the instances are initially in the Stopped state, and can be started with a click on Start All Instances (individual instances can also be started):

Once the instances are up and running you can run Chef recipes on them. You can also install operating system (Linux only) and package updates (read Run AWS OpsWorks Stack Commands to learn more) on the instances in the cluster. Finally, take a look at Using OpsWorks to Perform Operational Tasks to learn how to envelop shell commands in a simple JSON wrapper and run them.

For more information on this and other features, take a look at the OpsWorks User Guide. To learn more about how to run ECS tasks on Container Instances that have been provisioned by OpsWorks, read the ECS Getting Started Guide.

RHEL 7.1 Support

OpsWorks now supports version 7.1 of Red Hat Enterprise Linux (RHEL). Many AWS customers have asked us to support this OS and we are happy to oblige, as we did earlier this year when we announced OpsWorks support for Windows. You can launch and manage EC2 instances running RHEL 7. You can also manage existing, on-premises instances that are running RHEL 7.

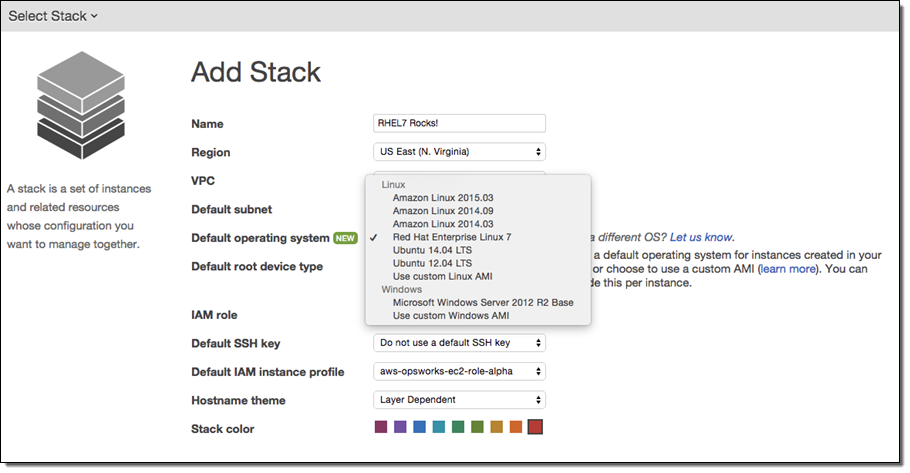

You have several launch options. You can choose RHEL 7 as the default when you launch a new stack, and you can set it as the default for an existing stack. You can also leave the default as-is and choose to run RHEL 7 when you launch new instances. Here’s how you select RHEL 7 as the default when you launch a new stack:

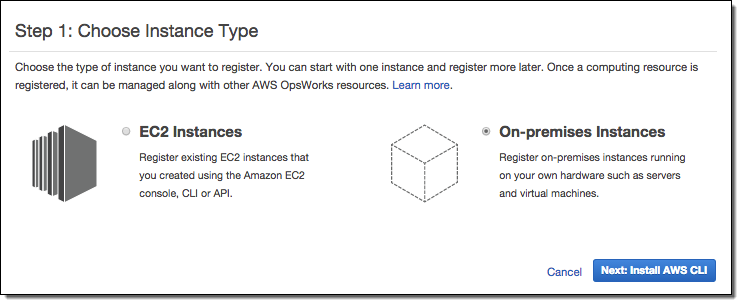

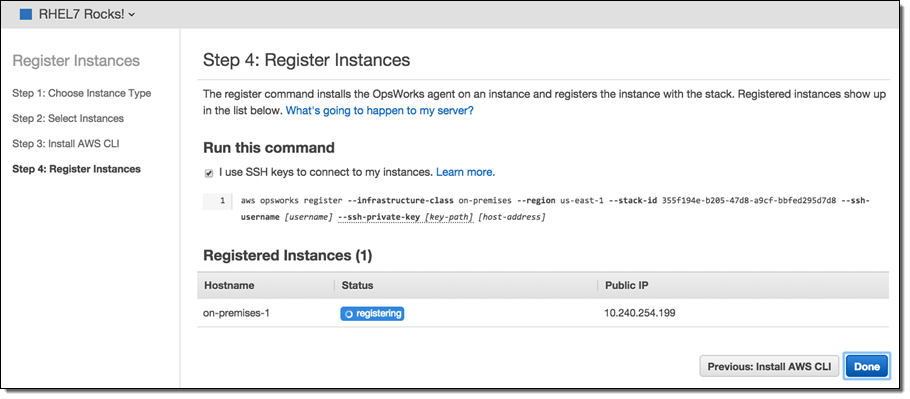

As you probably know already, you can manage instances that are not running on EC2 for a modest hourly fee. You can take advantage of the monitoring and management tools provided by OpsWorks while managing all of your instances using a single user interface. To do this, you add additional compute power to a layer by registering an existing instance instead of launching a new one:

Step through the wizard; the final step will show you how to install the OpsWorks agent on your instance and register with OpsWorks:

When you run the command it will download the agent, install any necessary packages, and start the agent. The agent will register itself with OpsWorks and the instance will become part of the stack specified on the command line. At that point the instance will be registered as part of the stack but not assigned to a layer or configured in any particular way. You can use the OpsWorks user-management feature to create users, manage permissions, and provide them with SSH access if necessary.

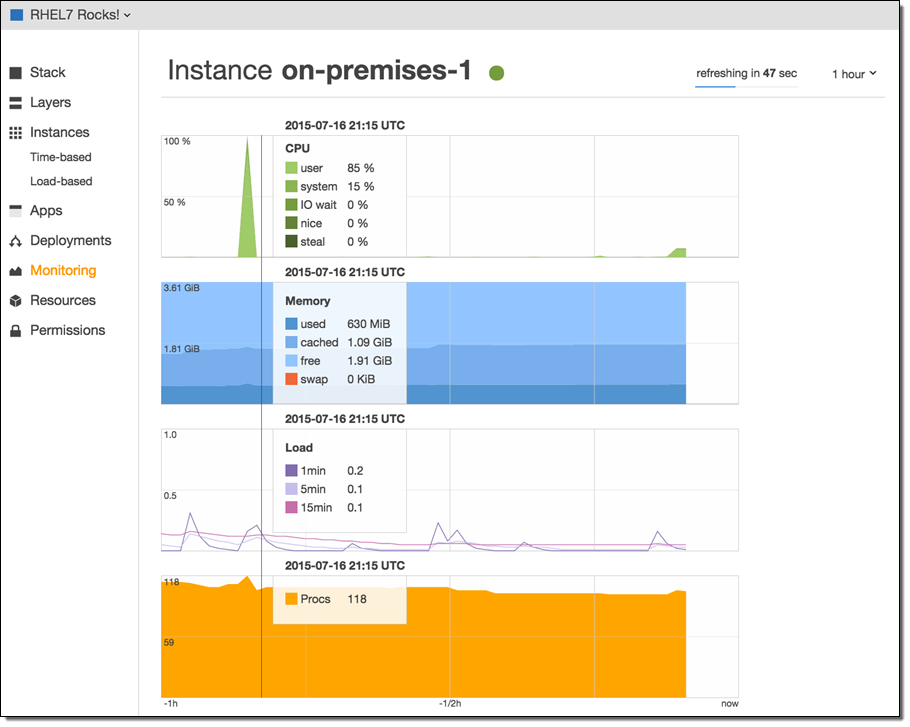

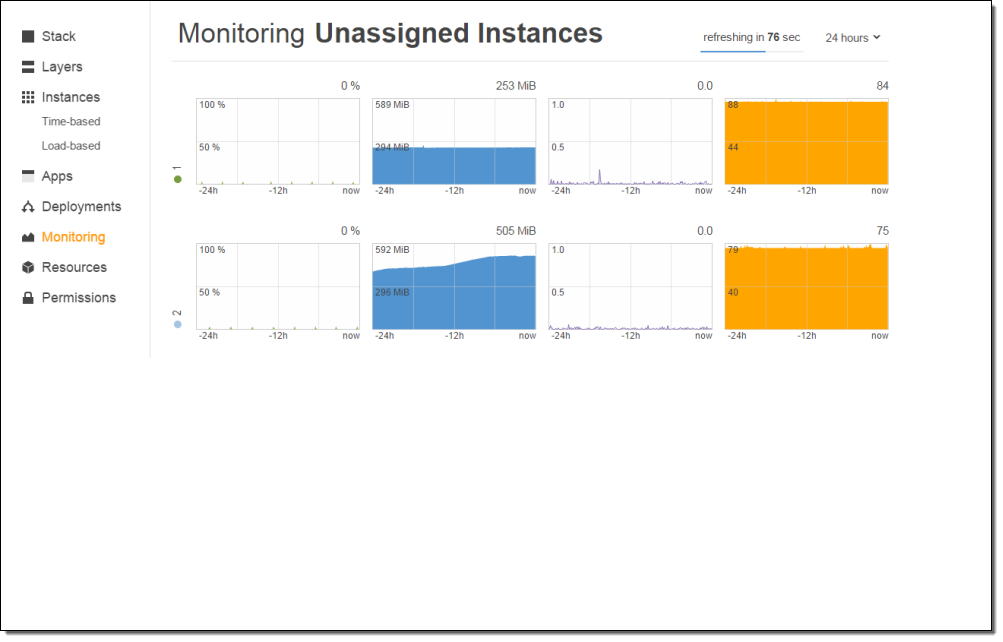

Installing the agent also sets up one-minute CloudWatch metrics:

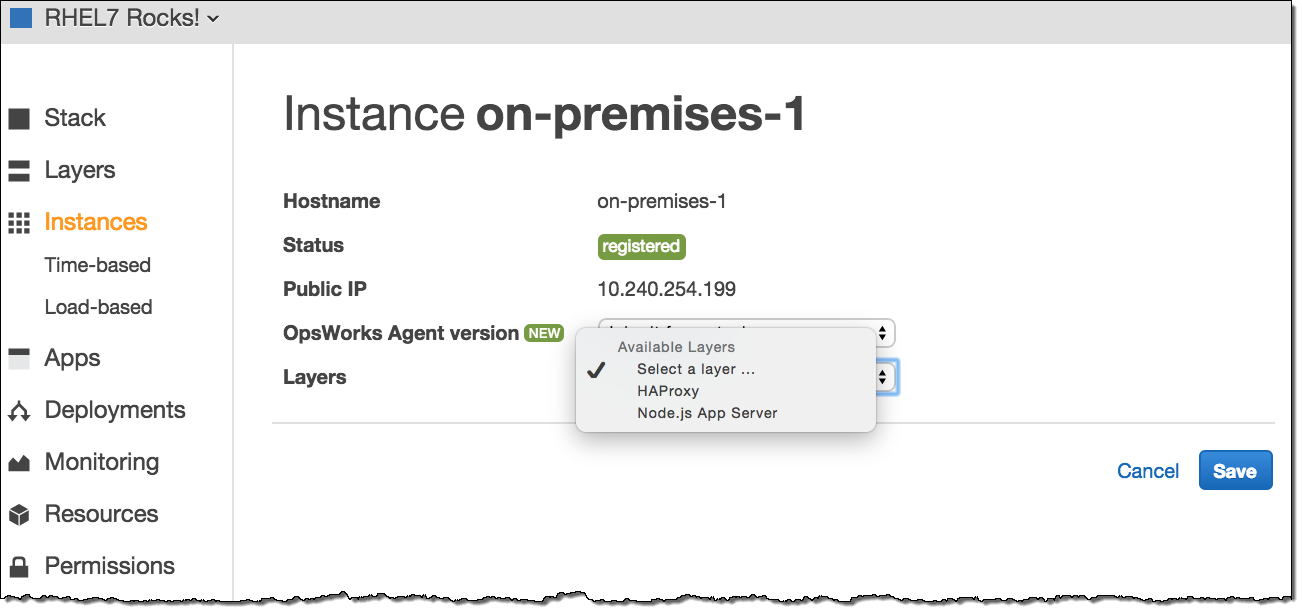

After you have configured the instances and verified that they are being monitored, you can assign them to a layer:

Available Now

These features are available now and you can start using them today.

— Jeff;

PS – Special thanks are due to my colleagues Mark Rambow and Cyrus Amiri for their help with this post.

AWS OpsWorks for Windows

AWS OpsWorks gives you an integrated management experience that spans the entire life cycle of your application including resource provisioning, configuration management, application deployment, monitoring, and access control. As I noted in my introductory post (AWS OpsWorks – Flexible Application Management in the Cloud Using Chef), it works with applications of any level of complexity and is independent of any particular architectural pattern.

We launched OpsWorks with support for EC2 instances running Linux. Late last year we added support for on-premises servers, also running Linux. In between, we also added support for Java, Amazon RDS, Amazon Simple Workflow, and more.

Let’s review some OpsWorks terminology first! An OpsWorks Stack hosts one or more Applications. A Stack contains a set of Amazon Elastic Compute Cloud (EC2) instances and a set of blueprints (which OpsWorks calls Layers) for setting up the instances in the Stack. Each Stack can also contain references to one or more Chef Cookbooks.
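If it helps to see these terms as a data structure, here is a small Python sketch of how they relate. The class and field names are my own shorthand for the concepts above and do not correspond to the OpsWorks API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    """A blueprint for setting up instances (hypothetical model)."""
    name: str
    recipes: List[str] = field(default_factory=list)


@dataclass
class Instance:
    """An EC2 instance that belongs to one or more layers."""
    hostname: str
    layers: List[str] = field(default_factory=list)


@dataclass
class Stack:
    """A stack hosts applications and contains layers and instances."""
    name: str
    apps: List[str] = field(default_factory=list)
    layers: List[Layer] = field(default_factory=list)
    instances: List[Instance] = field(default_factory=list)
    cookbooks: List[str] = field(default_factory=list)

    def instances_in(self, layer_name):
        """Hostnames of the instances assigned to the named layer."""
        return [i.hostname for i in self.instances if layer_name in i.layers]


stack = Stack(
    name="demo",
    apps=["storefront"],
    layers=[Layer("web", ["web::configure"]), Layer("worker")],
    instances=[
        Instance("web1", ["web"]),
        Instance("web2", ["web"]),
        Instance("job1", ["worker"]),
    ],
    cookbooks=["https://example.com/cookbooks.git"],
)
print(stack.instances_in("web"))  # ['web1', 'web2']
```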

Support for Windows

Today we are making OpsWorks even more useful by adding support for EC2 instances running Windows Server 2012 R2. These instances can be set up by using Custom layers. The Cookbooks associated with the layers can provision the instance, install packaged and custom software, and react to life cycle events. They can also run PowerShell scripts.

Getting Started with Windows

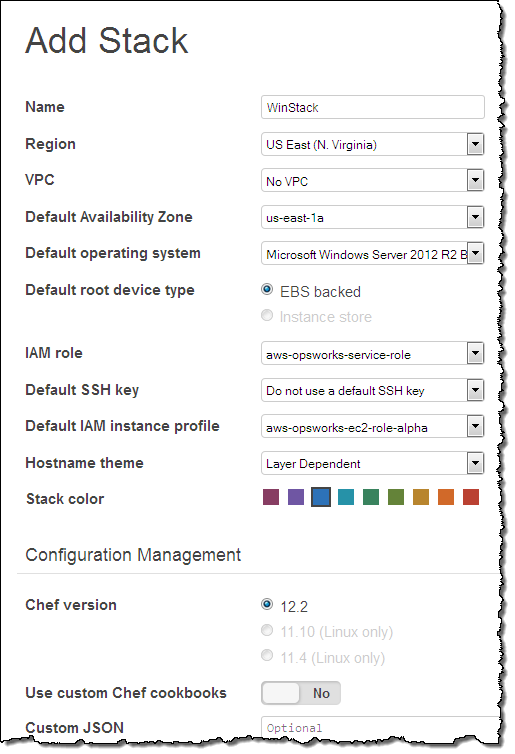

You can now specify Windows 2012 R2 as the default operating system when you create a new Stack. If you do this, you should also click on Advanced and choose version 12. of Chef, as follows:

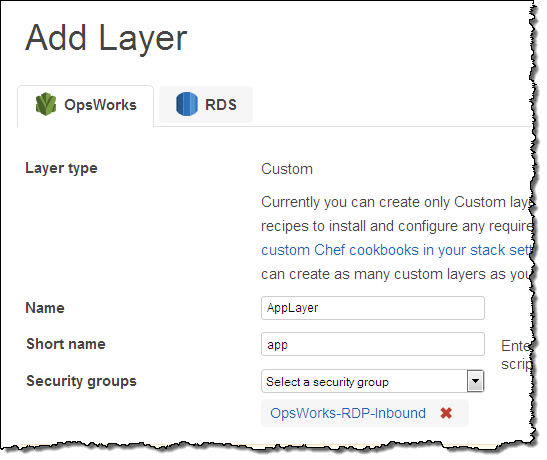

Now add a Custom Layer. If you select a security group that allows for inbound RDP access, you will be able to use a new OpsWorks feature that allows you to create temporary access credentials for the instances in the Layer:

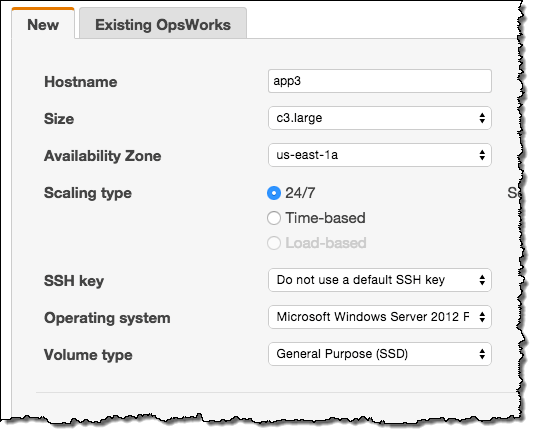

With the Stack and the Layer all set up, add an Instance to the Layer, and then start it:

Connecting to a Windows Instance

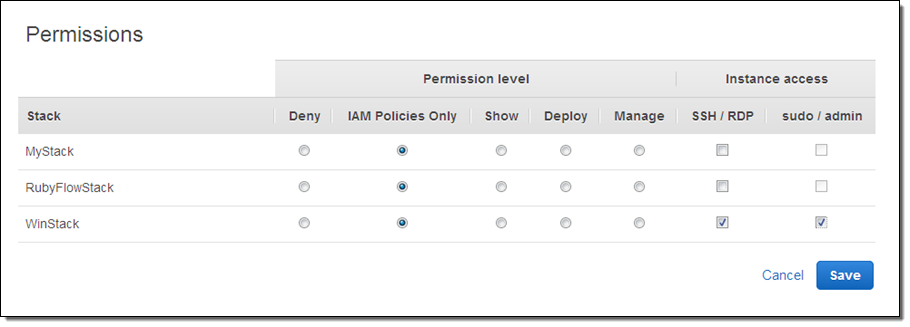

OpsWorks allows you to create IAM users, import them to OpsWorks, give them appropriate permissions, and log in to the instances with the credentials for the user (via RDP or SSH, as appropriate)! For example, you can create a user called winuser and allow it to be used for RDP access:

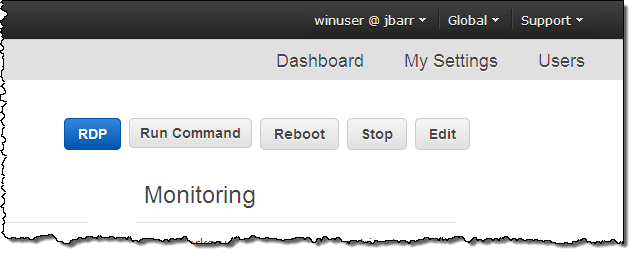

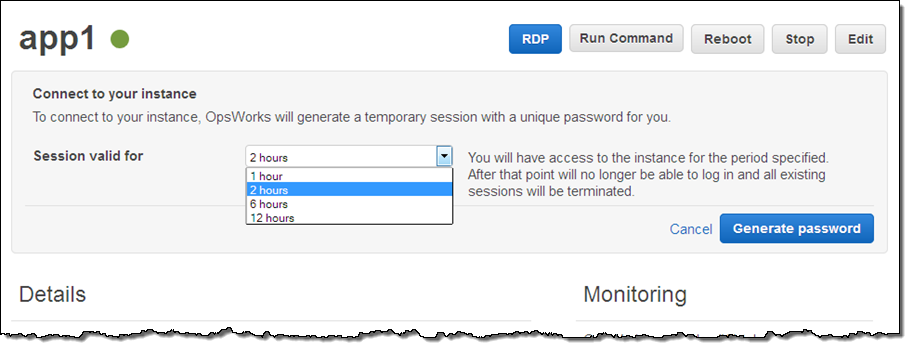

In order to connect to the instance as winuser, you’ll need to first log in to the console with the appropriate user (as opposed to account) credentials. After you do this, you can request temporary access to the instance. If you have the appropriate permissions (Show and SSH/RDP), you can connect via RDP:

OpsWorks will generate a temporary session for you:

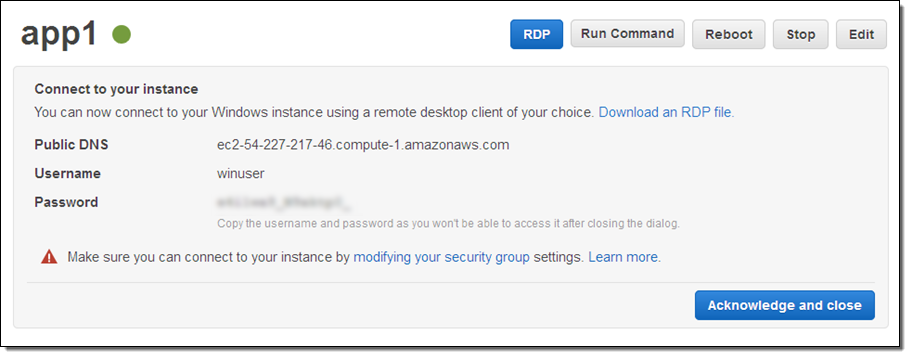

Then it will show you the credentials, and give you the option to download an RDP file:

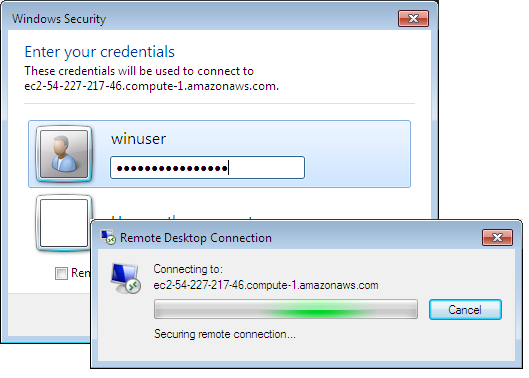

Use this file to connect, enter your password, and wait a couple of seconds to log in:

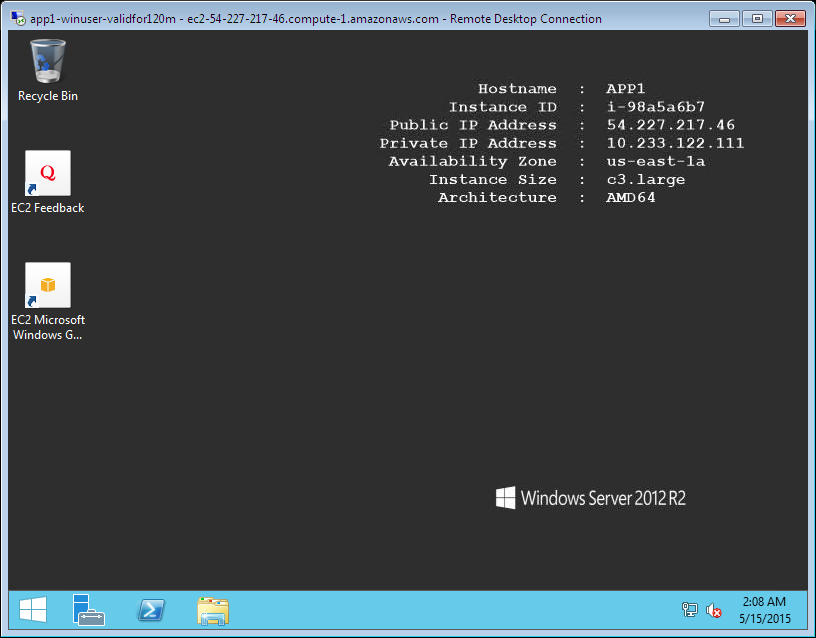

And there’s your Windows server desktop:

Available Now

This new functionality is available now and you can start using it today! To learn more, read Getting Started with Windows Stacks in the OpsWorks User Guide.

— Jeff;

AWS OpsWorks Update – Support for Existing EC2 Instances and On-Premises Servers

My colleague Chris Barclay sent a guest post to introduce two powerful new features for AWS OpsWorks.

— Jeff;

New Modes for OpsWorks

I have some good news for users that operate compute resources outside of AWS: you can now use AWS OpsWorks to deploy and operate applications on any server with an Internet connection including virtual machines running in your own data centers. Previously, you could only deploy and operate applications on Amazon EC2 instances created by OpsWorks. Now, OpsWorks can also manage existing EC2 instances created outside of OpsWorks. You may know that OpsWorks is a service that helps you automate tasks like code deployment, software configuration, operating system updates, database setup, and server scaling using Chef. OpsWorks gives you the flexibility to define your application architecture and resource configuration and handles the provisioning and management of resources for you. Click here to learn more about the benefits of OpsWorks.

Customers with on-premises servers no longer need to operate separate application management tools or pay up-front licensing costs but can instead use OpsWorks to manage applications that run on-premises, on AWS, or that span environments. OpsWorks can configure any software that is scriptable and includes integration with AWS services such as Amazon CloudWatch.

Use Cases & Benefits

OpsWorks can enhance the management processes for your existing EC2 instances or on-premises servers. For example:

- With a single command, OpsWorks can update operating systems and software packages to the latest version across your entire fleet, making it easy to keep up with security updates.

- Instead of manually running commands on each instance/server in your fleet, let OpsWorks run scripts or Chef recipes for you. You control who can run scripts and are able to view a history of each script that has been run.

- Instead of using one user login per instance/server, you can manage operating system users and ssh/sudo access. This makes it easier to add and remove an individual user’s access to your instances.

- Create alarms or scale instances/servers based on custom Amazon CloudWatch metrics for CPU, memory and load from one instance/server or aggregated across a collection of instances/servers.

Getting Started

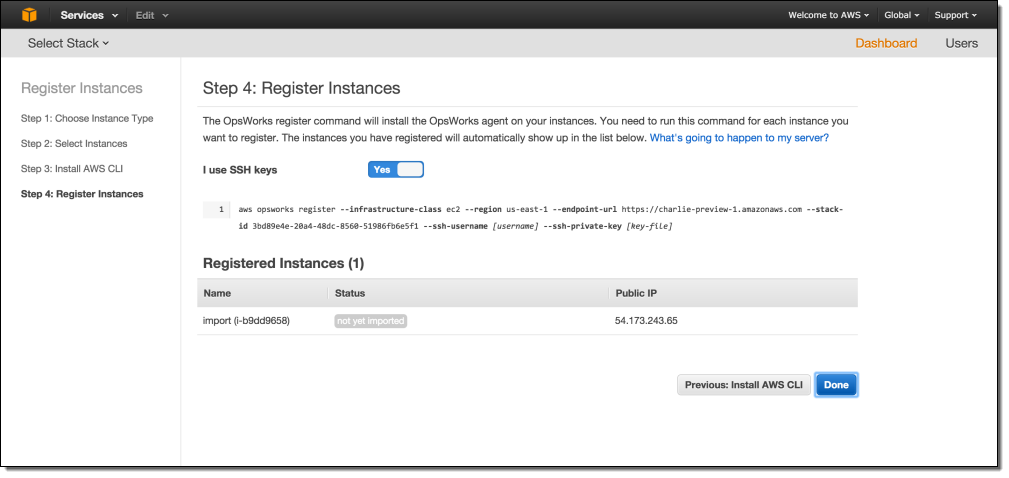

Let’s walk through the process of registering existing on-premises servers or EC2 instances. Go to the OpsWorks Management Console and click Register Instances:

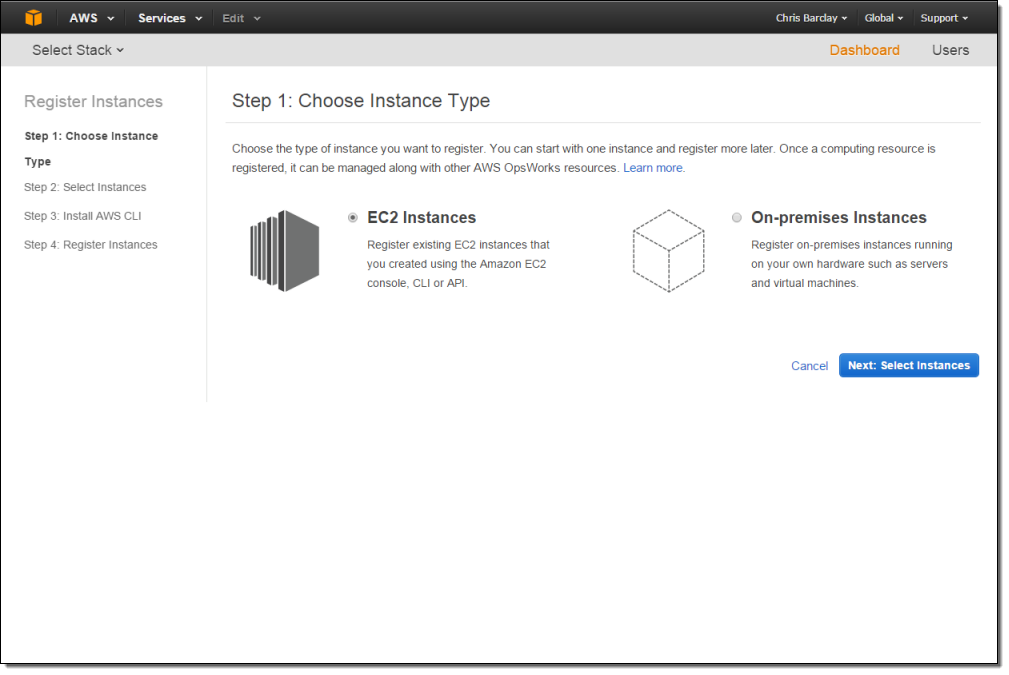

Select whether you want to register EC2 instances or on-premises servers. You can use both types, but the wizard operates with one class at a time.

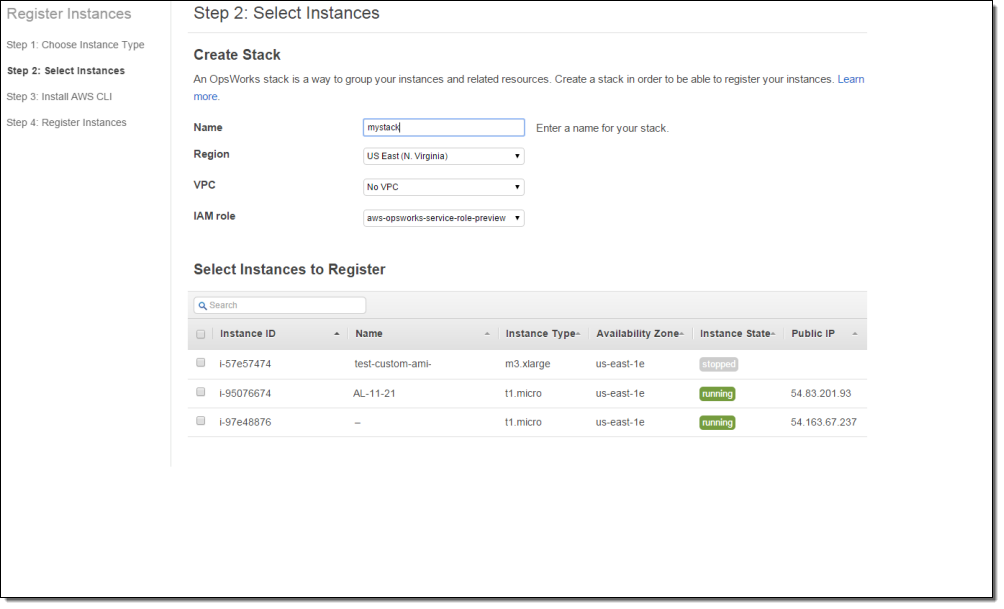

Give your collection of instances a Name, select a Region, and optionally choose a VPC and IAM role. If you are registering EC2 instances, select from the table before proceeding to the next step.

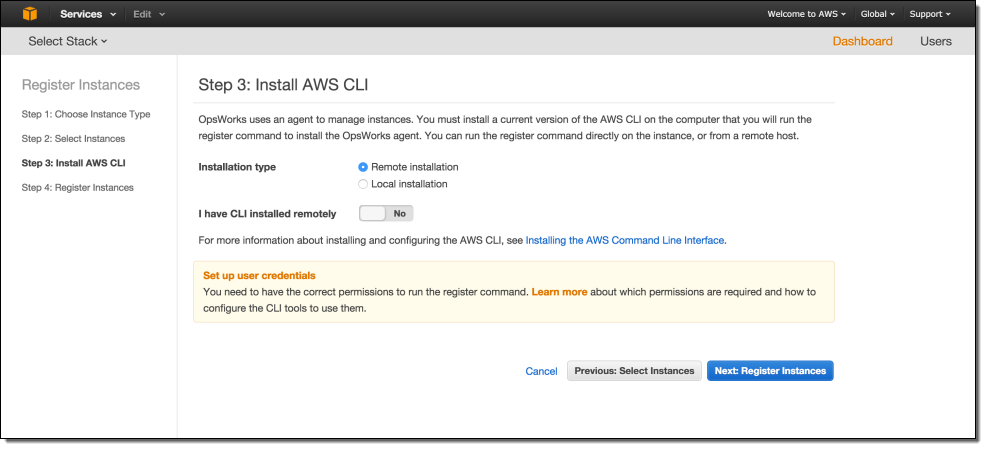

Install the AWS CLI on your desktop (if you have already installed an older version of the CLI, you may need to update it in order to use this feature).

Run the command displayed in the Run Register Command section using the CLI installed in the previous step. This uses the CLI installed on your desktop to install the OpsWorks agent onto the selected instances. You will need the instance’s ssh user name and private key in order to perform the installation. See the documentation if you want to run the CLI on the server you are registering. Once the registration process is complete, the instances will appear in the list as “registered.”
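For orientation, the following Python sketch assembles a registration command of the general shape that the wizard displays. The `aws opsworks register` subcommand and the options shown are part of the AWS CLI, but the stack ID, address, user name, and key path are placeholders, and the exact command for your stack should always be copied from the console rather than built by hand.

```python
import shlex


def build_register_command(stack_id, region, target, ssh_username,
                           ssh_private_key,
                           infrastructure_class="on-premises"):
    """Build the argument list for `aws opsworks register`.

    All argument values passed in are placeholders supplied by the caller.
    """
    return [
        "aws", "opsworks", "register",
        "--infrastructure-class", infrastructure_class,
        "--region", region,
        "--stack-id", stack_id,
        "--ssh-username", ssh_username,
        "--ssh-private-key", ssh_private_key,
        target,
    ]


command = build_register_command(
    stack_id="00000000-0000-0000-0000-000000000000",  # placeholder
    region="us-east-1",
    target="203.0.113.10",                            # documentation address
    ssh_username="ubuntu",
    ssh_private_key="~/.ssh/example-key.pem",         # placeholder path
)
print(" ".join(shlex.quote(part) for part in command))
```

Keeping the command as a list of arguments (rather than one string) makes it safe to pass to `subprocess.run` without shell quoting problems.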

Click Done. You can now use OpsWorks to manage your instances! You can view and perform actions on your instances in the Instances view. Navigate to the Monitoring view to see the 13 included custom CloudWatch metrics for the instances you have registered.

You can learn more about using OpsWorks to manage on-premises and EC2 instances by taking a look at the examples in the Application Management Blog or the documentation.

Pricing and Availability

OpsWorks costs $0.02 per hour for each on-premises server on which you install the agent, and is available at no additional charge for EC2 instances. See the OpsWorks Pricing page to learn more about our free tier and other pricing information.
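To put the hourly rate in monthly terms, here is a small Python calculation. It uses only the figures quoted above, assumes a 30-day month by default, and ignores the free tier.

```python
ON_PREMISES_RATE_USD_PER_HOUR = 0.02  # from the text
EC2_RATE_USD_PER_HOUR = 0.0           # no additional OpsWorks charge, from the text


def monthly_opsworks_cost(on_premises_servers, ec2_instances=0, hours=24 * 30):
    """Estimated OpsWorks charge in USD for one month (free tier ignored)."""
    hourly = (on_premises_servers * ON_PREMISES_RATE_USD_PER_HOUR
              + ec2_instances * EC2_RATE_USD_PER_HOUR)
    return round(hourly * hours, 2)


print(monthly_opsworks_cost(1))                      # 14.4
print(monthly_opsworks_cost(25, ec2_instances=100))  # 360.0
```

A single on-premises server therefore costs about $14.40 per 30-day month, and adding EC2 instances to the same stack does not change the OpsWorks portion of the bill.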

— Chris Barclay, Principal Product Manager

Use AWS OpsWorks & Ruby to Build and Scale Simple Workflow Applications

From time to time, one of my blog posts will describe a way to make use of two AWS products or services together. Today I am going to go one better and show you how to bring the following trio of items into play simultaneously:

- Amazon Simple Workflow (SWF) can help you to build, run, and scale background jobs that have parallel or sequential steps. SWF is a fully-managed state tracker and task coordinator that runs in the AWS cloud. You can read my blog post, Amazon Simple Workflow – Cloud-Based Workflow Management to learn more about SWF.

- AWS OpsWorks helps you deploy and operate applications of all shapes and sizes. You can define your application’s architecture using any desired combination of predefined OpsWorks templates and custom templates that you build yourself. OpsWorks also assists with scaling (based on time or system load) and dynamic configuration as part of scaling operations. To learn more, read AWS OpsWorks – Flexible Application Management in the Cloud Using Chef.

- The AWS Flow Framework for Ruby (also known as Ruby Flow) simplifies and streamlines the process of building applications for Simple Workflow. Ruby Flow removes the need for glue code and state machines and allows you to focus on your business logic. I first talked about this Ruby Gem in AWS Flow Framework for Ruby for the Simple Workflow Service.

All Together Now

With today’s launch, it is now even easier for you to build, host, and scale SWF applications in Ruby. A new, dedicated layer in OpsWorks simplifies the deployment of workflows and activities written in the AWS Flow Framework for Ruby. By combining AWS OpsWorks and SWF, you can easily set up a worker fleet that runs in the cloud, scales automatically, and makes use of advanced Amazon Elastic Compute Cloud (EC2) features.

This new layer is accessible from the AWS Management Console. As part of this launch, we are also releasing a new command-line utility called the runner. You can use this utility to test your workflow locally before pushing it to the cloud. The runner uses information provided in a new, JSON-based configuration file to register workflow and activity types, and start the workers.

Console Support

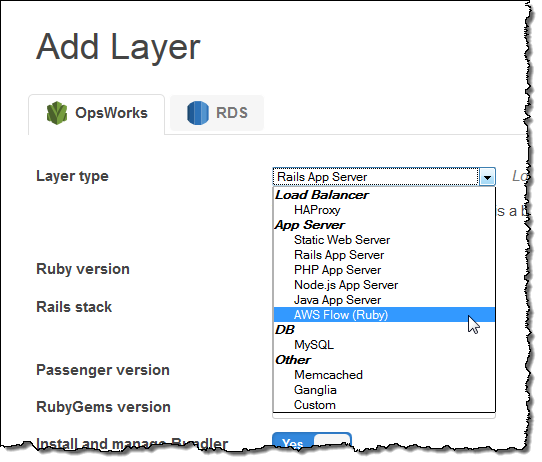

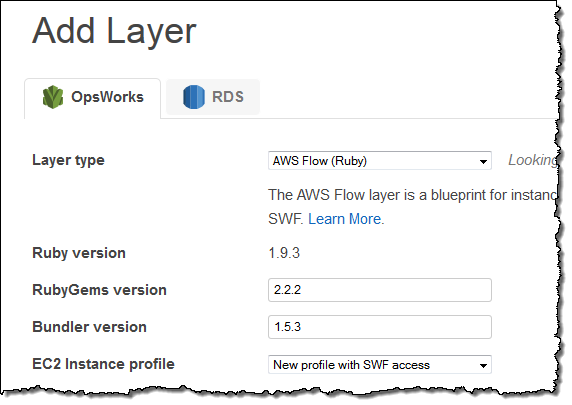

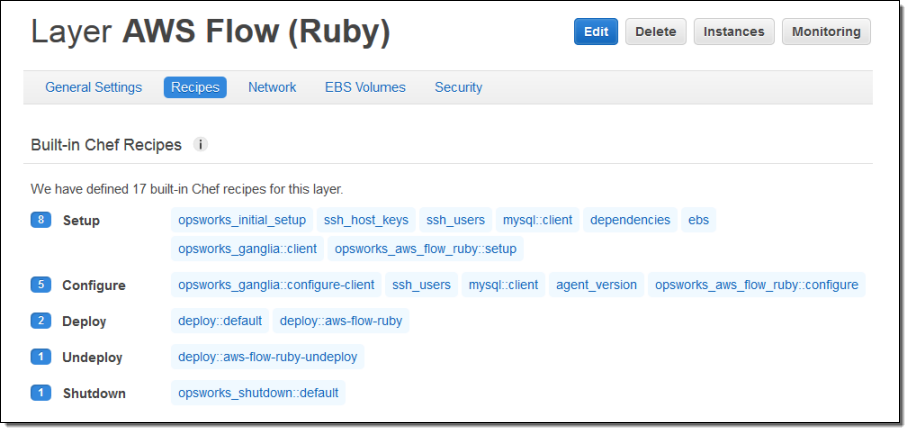

A Ruby Flow layer can be added to any OpsWorks stack that is running version 11.10 (or newer) of Chef. Simply add a new layer by choosing AWS Flow (Ruby) from the menu:

You can customize the layer if necessary (the defaults will work fine for most applications):

The layer will be created immediately and will include four Chef recipes that are specific to Ruby Flow (the recipes are available on GitHub):

The Runner

As part of today’s release we are including a new command-line utility, aws-flow-ruby, also known as the runner. This utility is used by AWS OpsWorks to run your workflow code. You can also use it to test your SWF applications locally before you push them to the cloud.

The runner is configured using a JSON file that looks like this:

    {
      "domains": [{
        "name": "BookingSample"
      }],
      "workflow_workers": [{
        "task_list": "workflow_tasklist"
      }],
      "activity_workers": [{
        "task_list": "activity_tasklist"
      }]
    }
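Before handing a configuration file to the runner, it can be useful to check that it parses and names the task lists you expect. The Python sketch below does that for a file with the three sections shown above. It is a standalone sanity check written for this post, not part of the runner, and it only looks at the `name` and `task_list` keys that appear in the example.

```python
import json

RUNNER_CONFIG = """
{
  "domains": [{"name": "BookingSample"}],
  "workflow_workers": [{"task_list": "workflow_tasklist"}],
  "activity_workers": [{"task_list": "activity_tasklist"}]
}
"""


def summarize_runner_config(text):
    """Parse a runner-style config and list its domains and task lists."""
    config = json.loads(text)  # raises ValueError on malformed JSON
    return {
        "domains": [d["name"] for d in config.get("domains", [])],
        "workflow_task_lists": [
            w["task_list"] for w in config.get("workflow_workers", [])
        ],
        "activity_task_lists": [
            a["task_list"] for a in config.get("activity_workers", [])
        ],
    }


summary = summarize_runner_config(RUNNER_CONFIG)
print(summary)
```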

Go With the Flow

The new Ruby Flow layer type is available now and you can start using it today. To learn more about it, take a look at the new OpsWorks section of the AWS Flow Framework for Ruby User Guide.

— Jeff;

CloudTrail Expands Again – More Regions, More Services, Cool Partners

AWS CloudTrail records the API calls made in your AWS account and publishes the resulting log files to an Amazon S3 bucket in JSON format, with optional notification to an Amazon SNS topic each time a file is published.
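Because the log files are plain JSON (delivered gzip-compressed), they are easy to process with a few lines of code. Here is a minimal Python sketch that counts the API calls in one log file by service. It relies on the top-level `Records` array and the `eventSource` field of each record; the sample records are made up for illustration, and fetching the file from S3 is left out.

```python
import gzip
import json
from collections import Counter


def count_events_by_service(log_bytes):
    """Count records per eventSource in one gzip-compressed log file."""
    document = json.loads(gzip.decompress(log_bytes).decode("utf-8"))
    return Counter(record["eventSource"] for record in document["Records"])


# Made-up sample standing in for a log file downloaded from the bucket.
sample = {
    "Records": [
        {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances"},
        {"eventSource": "ec2.amazonaws.com", "eventName": "StopInstances"},
        {"eventSource": "iam.amazonaws.com", "eventName": "CreateUser"},
    ]
}
log_bytes = gzip.compress(json.dumps(sample).encode("utf-8"))

counts = count_events_by_service(log_bytes)
print(counts.most_common())
```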

Today I’m writing to provide you with more information on new releases from CloudTrail and to share some really cool tools and use cases that have been implemented by some of the CloudTrail Partners.

Regional Expansion

Effective immediately, CloudTrail is now available in three more AWS Regions. Here is the complete list:

- US East (Northern Virginia)

- US West (Northern California)

- US West (Oregon)

- Asia Pacific (Sydney)

- EU (Ireland)

- Asia Pacific (Tokyo) – New!

- Asia Pacific (Singapore) – New!

- South America (São Paulo) – New!

The Big Picture, Once More

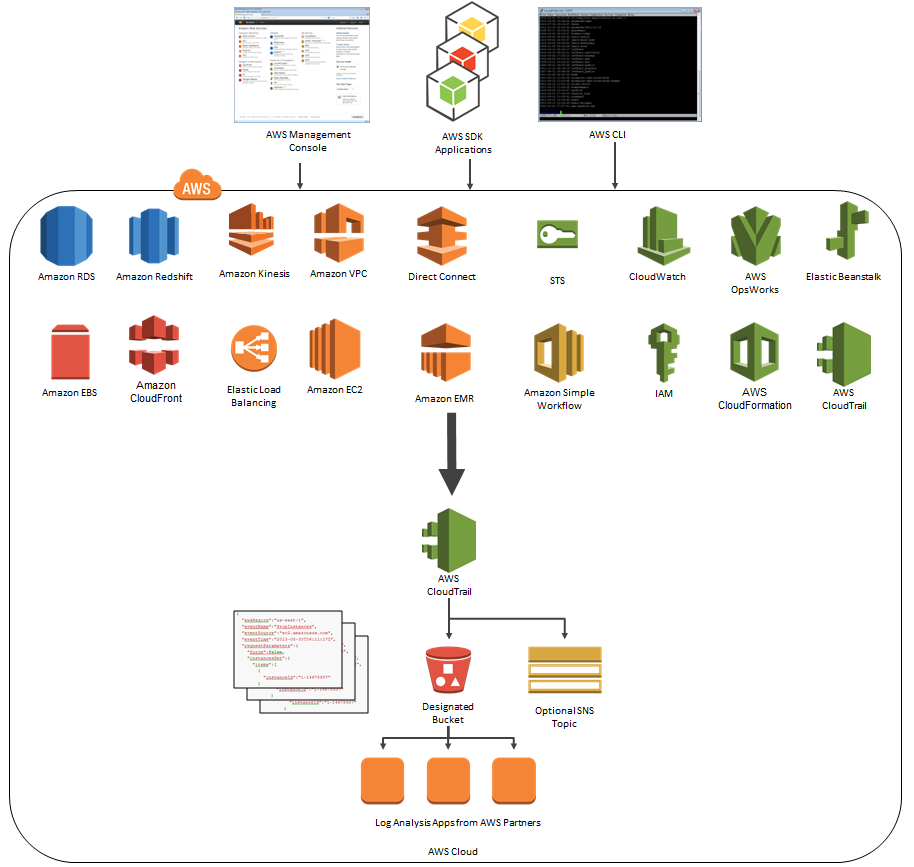

Here’s the latest and greatest version of the diagram that I first presented when we launched CloudTrail:

As you can see, CloudTrail can now record API calls made by eighteen AWS services! Earlier this month, we quietly added support for Amazon CloudFront and AWS CloudTrail.

Logentries and CloudTrail

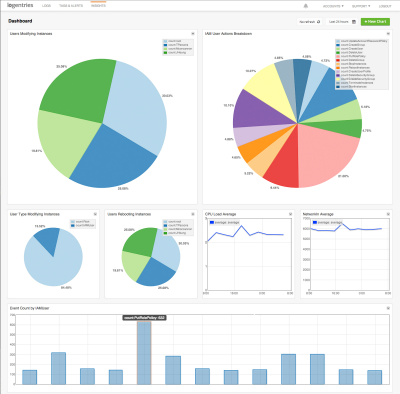

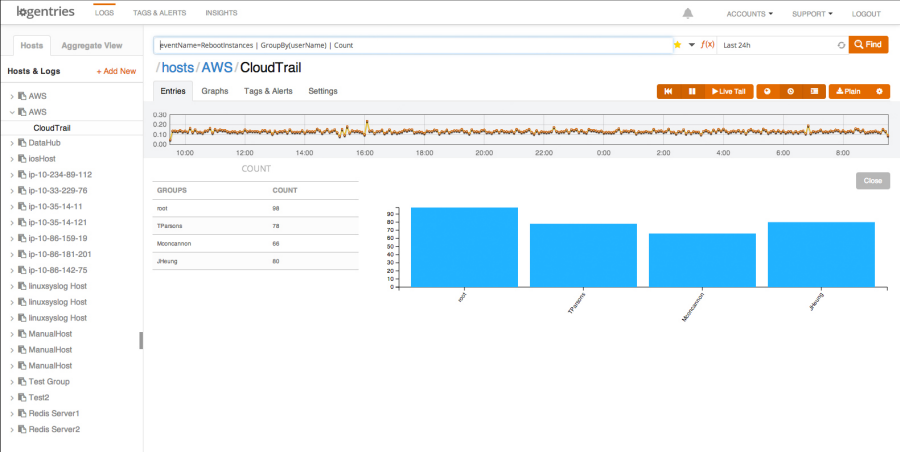

Logentries is designed to make business insights from machine-generated log data easily accessible to development, IT, and business operations teams of all sizes. The Logentries architecture is designed to manage and provide insights into huge amounts of data across their diverse, global user community. You can sign up for a free Logentries trial and be up and running within minutes.

The Logentries team shared a cool, security-oriented use case that is made possible by their integration with AWS CloudTrail (read the Logentries CloudTrail Integration Documentation to learn more). Logentries provides pre-defined queries for important events so that you do not have to write complex queries. Additionally, Logentries provides out-of-the-box tagging and alerting to highlight important security events and notify you when they take place. For example, you can get notified via email or iPhone alert, or you can have a message sent to a third-party service or API such as PagerDuty, HipChat, or Campfire when any of the following occur:

- EC2 Security Group created, deleted, or edited

- New IAM user is created

- User’s IAM permissions are changed
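To show the kind of matching that sits behind alerts like these, here is a minimal Python sketch that scans CloudTrail records for the three categories of events listed above. The event names are real EC2 and IAM API actions, but the mapping, the alert wording, and the sample records are assumptions for illustration; this is not how Logentries implements its queries.

```python
# Map of API action names to the alert category they fall under.
WATCHED_EVENTS = {
    "CreateSecurityGroup": "EC2 security group created",
    "DeleteSecurityGroup": "EC2 security group deleted",
    "AuthorizeSecurityGroupIngress": "EC2 security group edited",
    "RevokeSecurityGroupIngress": "EC2 security group edited",
    "CreateUser": "New IAM user created",
    "PutUserPolicy": "IAM user permissions changed",
    "DeleteUserPolicy": "IAM user permissions changed",
}


def security_alerts(records):
    """Return one alert line for each record that matches a watched event."""
    alerts = []
    for record in records:
        label = WATCHED_EVENTS.get(record.get("eventName"))
        if label is not None:
            user = record.get("userIdentity", {}).get("userName", "unknown")
            alerts.append(f"{label}: {record['eventName']} by {user}")
    return alerts


records = [
    {"eventName": "DescribeInstances", "userIdentity": {"userName": "alice"}},
    {"eventName": "CreateUser", "userIdentity": {"userName": "bob"}},
    {"eventName": "AuthorizeSecurityGroupIngress",
     "userIdentity": {"userName": "alice"}},
]
alerts = security_alerts(records)
for line in alerts:
    print(line)
```

Read-only calls such as `DescribeInstances` fall through without producing an alert, which keeps the output focused on changes.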

Here is a screenshot of the alerts that Logentries provides out of the box:

And here’s a short video of Logentries in action:

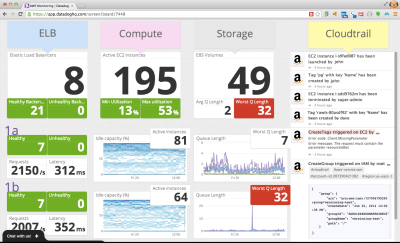

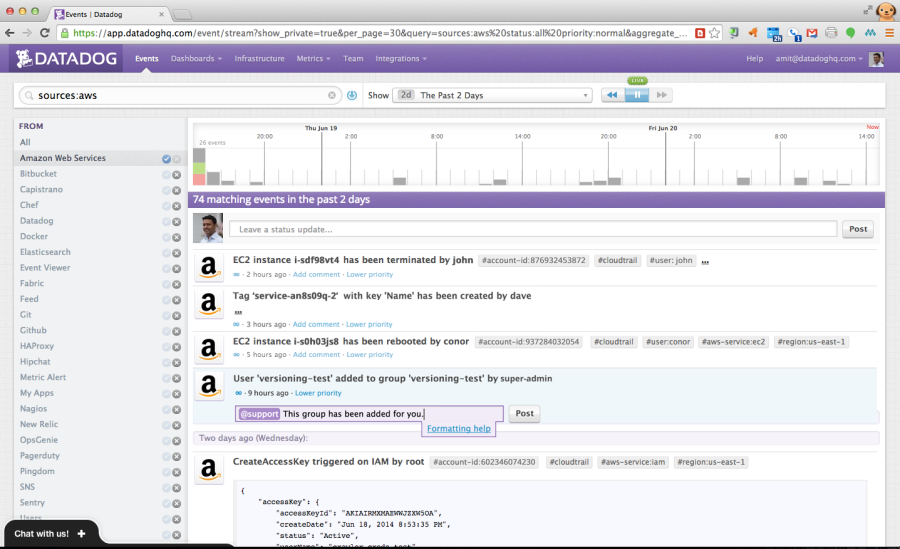

Datadog and CloudTrail

Datadog is a cloud monitoring service for IT, operations, and development teams who run applications at scale. Datadog allows users to quickly troubleshoot availability and performance issues by automatically correlating change events and performance metrics from AWS CloudTrail, Amazon CloudWatch, and many other sources.

Datadog can overlay CloudTrail logs with metrics collected from other systems to show how the metrics respond to AWS events. This allows you to investigate and understand cause-and-effect relationships.

Datadog can quickly find specific CloudTrail events and put them in context for you. You can collaborate with teammates using threaded discussions that are linked to CloudTrail logs:

— Jeff;

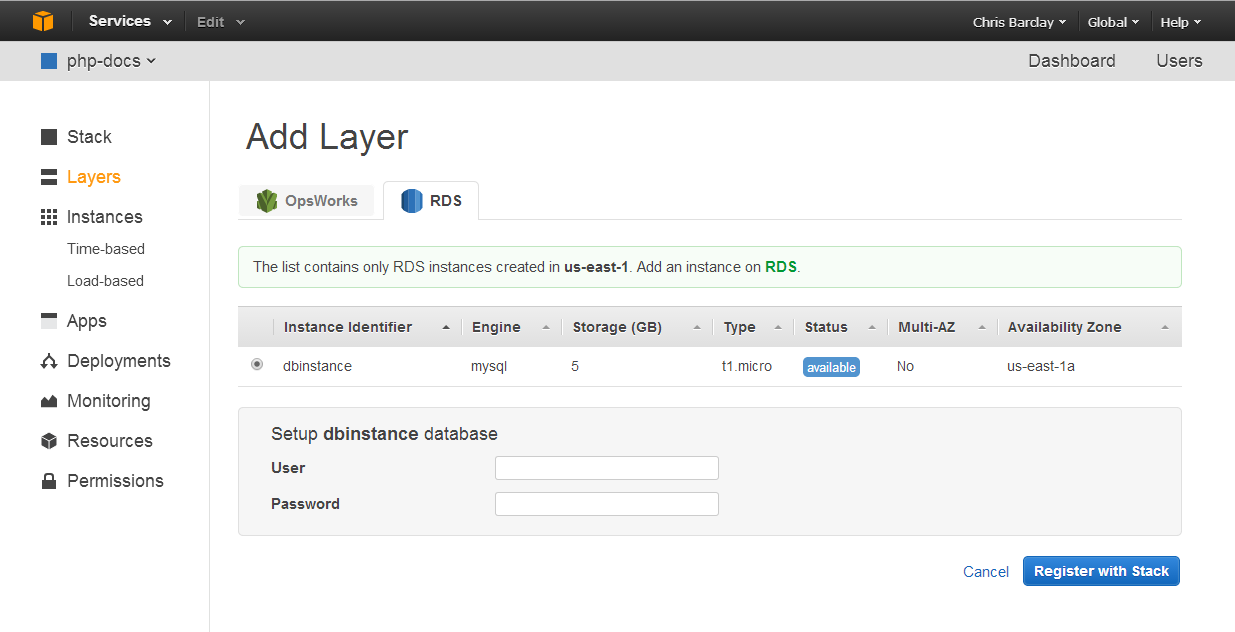

AWS OpsWorks With Amazon RDS

AWS OpsWorks is an application management service. You define your application as a set of layers within a stack. Each stack provides information about the packages to be installed and configured, and can also provision any necessary AWS resources, as defined within a particular OpsWorks Layer. OpsWorks also scales your application as needed, driven by workload or on a predefined schedule.

The Amazon Relational Database Service (RDS) takes care of all of the tedious, low-level system and database management work that you would end up doing yourself if you were hoping to use MySQL, Oracle Database, SQL Server, or PostgreSQL. You can let RDS handle the hardware provisioning, the operating system and database installation, setup, and patching, scaling, backups, fault detection and failover, and much more.

Today we are marrying OpsWorks and RDS, giving you the ability to define and use an RDS Service Layer to refer to an RDS database instance that you have already created within the AWS Region that serves as home to the OpsWorks stack for your application. This is in addition to the existing OpsWorks support for MySQL layers.

You can define an RDS Service Layer from within the AWS Management Console like this:

You will need to know the user name and password for the database instance in order to create the RDS Service Layer (this information is passed along to the application). You can always edit the layer later if you don’t have this information handy or if you change the user name and/or password in the future.

Note: Because all OpsWorks stacks access AWS resources and services through an IAM (Identity and Access Management) role, you may need to update the role accordingly. OpsWorks will detect this situation and offer to address it.

After you add the RDS Service Layer to the stack, OpsWorks will assign it an ID and add information about the database instance to the stack configuration and to the deployment JSON, where it can be accessed through the [:database] attribute. OpsWorks also offers helper functions that give access to the connection details when used in conjunction with the Ruby, PHP, and Java application server layers.
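As a rough sketch of how code might consume these attributes, the plain-Ruby snippet below builds a connection URL from a hash shaped like the [:database] entry. The key names (`:host`, `:port`, `:username`, `:password`, `:database`), the sample values, and the `database_url` helper are all assumptions made for illustration; inspect the deployment JSON of your own stack for the exact layout.

```ruby
require 'uri'

# Hypothetical stand-in for the [:database] attribute that OpsWorks adds to
# the deployment JSON. Key names and values are assumed for illustration.
deploy = {
  database: {
    host: 'mydb.example.us-east-1.rds.amazonaws.com',
    port: 3306,
    username: 'appuser',
    password: 'p@ss/word',
    database: 'appdb'
  }
}

# Build a MySQL-style connection URL from the attribute hash.
# User name and password are percent-encoded so that reserved characters
# such as '@' and '/' do not break the URL.
def database_url(db)
  user = URI.encode_www_form_component(db[:username])
  pass = URI.encode_www_form_component(db[:password])
  "mysql://#{user}:#{pass}@#{db[:host]}:#{db[:port]}/#{db[:database]}"
end

puts database_url(deploy[:database])
```

In a real recipe the hash would come from the node attributes rather than a literal, and the built-in helper functions may be a better fit than a hand-rolled URL.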

As usual, this new feature is available now and you can start using it today. Consult the Database Layers section of the OpsWorks User Guide to learn more.

— Jeff;

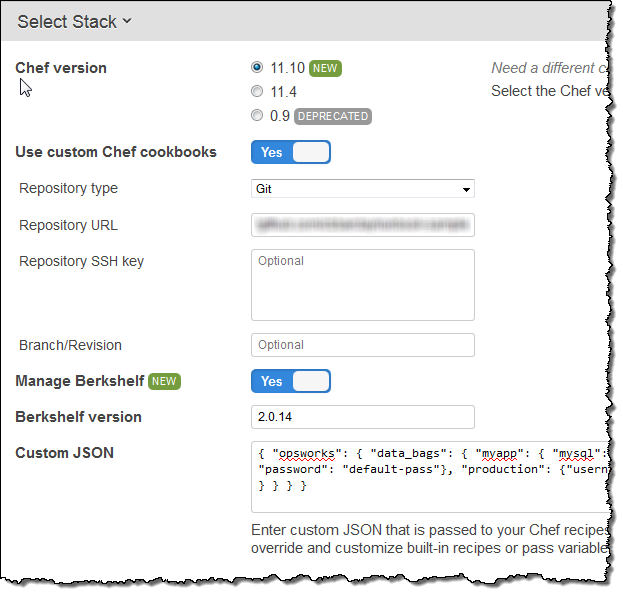

AWS OpsWorks Now Supports Chef 11.10

AWS OpsWorks gives you the power to model and manage your entire application, from load balancers to databases. You can customize the Amazon EC2 instances in the OpsWorks layers which comprise your application stack using any desired combination of the built-in OpsWorks templates and custom Chef recipes. The recipes can install software packages and can perform any task that you can script.

Today we are extending OpsWorks by adding support for version 11.10 of Chef.

This version of Chef improves compatibility with cookbooks written for Chef Server, including support for search and data bags, making it easier for you to use community cookbooks such as those written for MongoDB (see our new blog post, Deploying MongoDB With OpsWorks, to learn more about this use case).

OpsWorks now supports Berkshelf. You can easily reference multiple cookbook repositories and you can include a combination of community cookbooks and your own custom cookbooks in the same stack.
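To make this concrete, here is a minimal sketch of a Berksfile that mixes a community cookbook with a custom one. The cookbook name `myapp` and its Git URL are hypothetical, and the `source` line follows Berkshelf 3 syntax; the Berkshelf version available to your stack may expect a different form.

```ruby
# Berksfile at the root of the custom cookbook repository (a sketch).
# Berkshelf 3 syntax; Berkshelf 2 used `site :opscode` instead of `source`.
source 'https://supermarket.chef.io'

# A community cookbook resolved from the source above.
cookbook 'apt'

# A hypothetical in-house cookbook pulled from a Git repository.
cookbook 'myapp', git: 'https://example.com/acme/myapp-cookbook.git'
```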

The version of Ruby included with Chef has been updated, and we’ve also included a very handy customization feature for the built-in cookbooks. Simply place a customize.rb file in a cookbook’s attributes directory to change the configuration of the software that OpsWorks installs.
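As an illustration, a customize.rb for the built-in Apache cookbook might look like the fragment below. The attribute names (`keepalivetimeout`, `logrotate` and `schedule`) and the values are assumptions chosen for this sketch; check the attributes files of the cookbook you are customizing for the names it actually defines.

```ruby
# apache2/attributes/customize.rb
# Assumed attribute names; verify them against the built-in cookbook.
normal[:apache][:keepalivetimeout] = 5
normal[:apache][:logrotate][:schedule] = 'weekly'
```

Because the file only sets attributes, the built-in recipes themselves stay untouched and pick up the new values when they next run.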

Getting Started With Chef 11.10

As part of the process of creating a new OpsWorks stack, you can choose the desired Chef version within the Advanced settings:

As you can see, you can also enable Berkshelf support and you can pass any desired JSON data to your Chef recipes in this section.

Learn More

You can read all about Using AWS OpsWorks With Chef 11, or you can visit the AWS OpsWorks Console and get started now.

— Jeff;

AWS Console for iOS and Android Now Supports AWS OpsWorks

The AWS Console for iOS and Android now includes support for AWS OpsWorks.

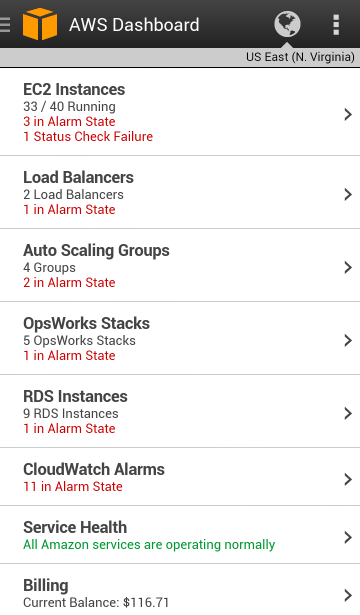

With the newest version of the app, you can see your OpsWorks resources: stacks, layers, instances, apps, and deployments. The app also supports EC2, Elastic Load Balancing, the Relational Database Service, Auto Scaling, CloudWatch, and the Service Health Dashboard.

The Android version of the console app also gets a new native interface.

OpsWorks Support

With this new release, iOS and Android users have access to a wide variety of OpsWorks resources. Here’s what you can do:

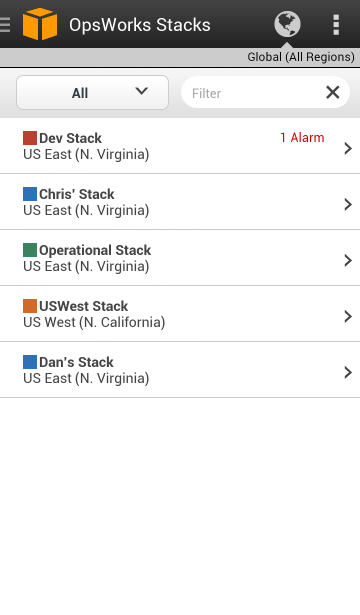

- View and navigate your OpsWorks stacks, layers, instances, apps, and deployments.

- View the configuration details for each of these resources.

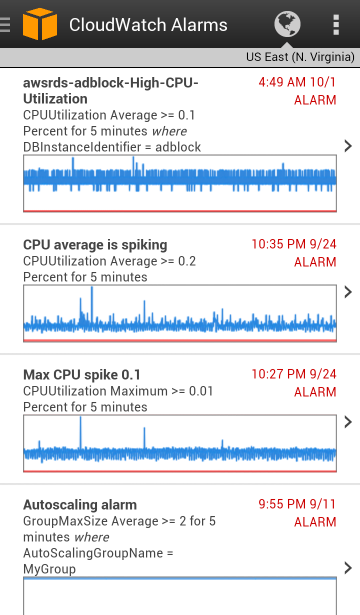

- View your CloudWatch metrics and alarms.

- View deployment details such as command, status, creation time, completion time, duration, and affected instances.

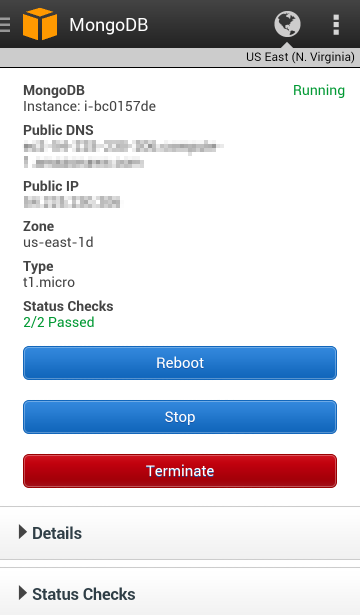

- Manage the OpsWorks instance lifecycle (e.g. reboot, stop, start), view logs, and create snapshots of attached volumes.

Take a Look

Here are some screenshots of the Android console app in action. The dashboard displays resource counts and overall status:

The status of each EC2 instance is visible. Instances can be rebooted, stopped, or terminated:

CloudWatch alarms and the associated metrics are visible:

Each OpsWorks stack is shown, along with any alarms:

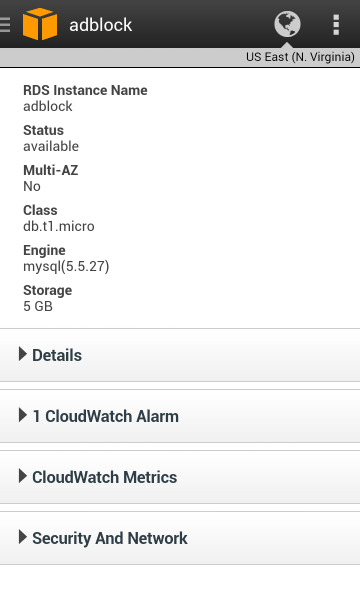

Full information is displayed for each database instance:

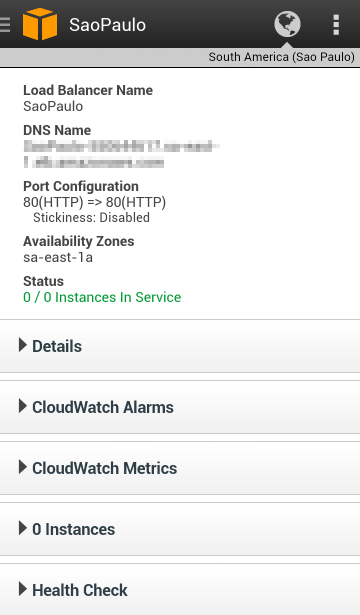

And for each Elastic Load Balancer:

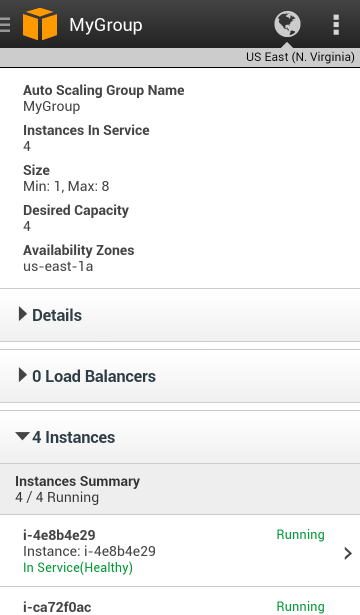

There’s also access to Auto Scaling resources:

Download Today

You can download the new version of the console app from the Amazon Appstore, Google Play, or iTunes.

— Jeff;