Category: Security

In-Country Storage of Personal Data

My colleague Denis Batalov works out of the AWS Office in Luxembourg. As a Solutions Architect, he is often asked about the in-country storage requirement that some countries impose on certain types of data. Although this requirement applies to a relatively small number of workloads, I am still happy that he took the time to write the guest post below to share some of his knowledge.

— Jeff;

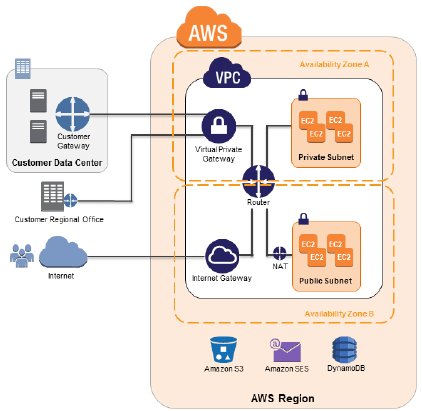

AWS customers sometimes offer their services in countries where local requirements necessitate storage and processing of certain sensitive data to take place within the applicable country, that is, in a datacenter physically located in the respective country. Examples of such sensitive data include financial transactions and personal data (also referred to in some countries as Personally Identifiable Information, or PII). Depending on the specific storage and processing requirements, one answer might be to utilize hybrid architectures where the component of the system that is responsible for collecting, storing and processing the sensitive data is placed in-country, while the remaining system resides in AWS. More information about hybrid architectures in general can be found on the Hybrid Architectures with AWS page.

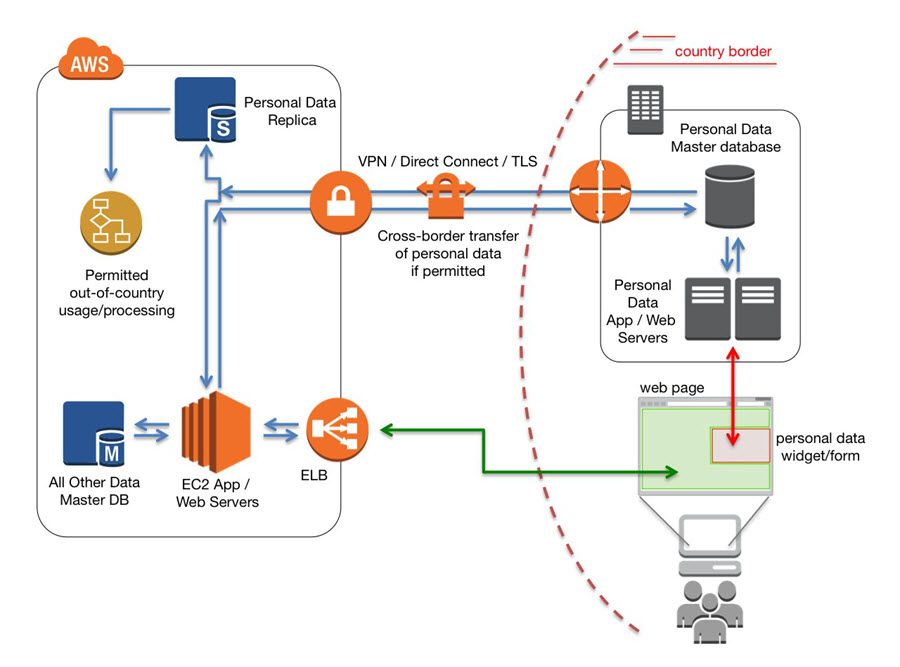

The reference architecture diagram above shows an example of a hypothetical web application hosted on AWS that collects personal data as part of its operation. Since the collection of personal data may be required to occur in-country, the widget or form that is used to collect or display personal data (shown in red) is generated by a web server located in-country, while the rest of the web site (shown in green) is generated by the usual web server located in AWS. This way the authoritative copy of the personal data resides in-country and all updates to the data are also recorded in-country. Note that the data that is not required to be stored in-country can continue to be stored in the main database (or databases) residing in AWS.

This architecture still provides customers with the most important benefits of the cloud: it is flexible, scalable, and cost-effective.

There may be situations where a copy of personal data needs to be transferred across a national border, e.g. in order to fulfill contractual obligations, such as transferring the name, billing address and payment method when a cross-border purchase is transacted. Where permitted by local legislation, a replica of the data (either complete or partial) can be transferred across the border via a secure channel. Data can be securely transferred over the public internet with the use of TLS, or using a VPN connection established between the Virtual Private Gateway of the VPC and the Customer Gateway residing in-country. Additionally, customers may establish private connectivity between AWS and an in-country datacenter by using AWS Direct Connect, which in many cases can reduce network costs, increase bandwidth throughput, and provide a more consistent network experience compared to Internet-based connections.
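As a rough illustration of the TLS option, here is a minimal Python sketch of a client-side TLS configuration that an in-country system might use when pushing a replica across the border. The function name and the TLS 1.2 minimum are assumptions made for the example, not requirements taken from this post.

```python
import ssl


def make_strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server certificate."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2 (an assumed policy, adjust as needed).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_strict_tls_context()
```

The resulting context can be handed to standard library clients, for example `http.client.HTTPSConnection(host, context=context)`.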

Alternatively, it may be possible to achieve certain processing outcomes in the AWS cloud while employing data anonymization. This is a type of information sanitization whose intent is privacy protection, commonly associated with highly sensitive personal information. It is the process of either encrypting, tokenizing, or removing personally identifiable information from data sets, so that the people whom the data describe remain anonymous in a particular context. Upon return of the processed dataset from the AWS cloud, it could be integrated into in-country databases to give it personal context again.
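The tokenization variant can be sketched in a few lines of Python. This is only one possible approach, under the assumption that PII values are strings and that the token-to-value mapping is kept in-country; the `Tokenizer` class and the `anonymize` and `reidentify` helpers are hypothetical names made up for the example.

```python
import hashlib
import hmac


class Tokenizer:
    """Replaces PII values with opaque tokens; the mapping stays in-country."""

    def __init__(self, secret_key: bytes) -> None:
        self._secret_key = secret_key
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # A keyed hash yields the same token for the same value, so joins
        # and aggregations still work on the anonymized data set.
        digest = hmac.new(self._secret_key, value.encode("utf-8"), hashlib.sha256)
        token = digest.hexdigest()[:32]
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


def anonymize(records, pii_fields, tokenizer):
    """Return copies of the records with the PII fields replaced by tokens."""
    return [
        {k: tokenizer.tokenize(v) if k in pii_fields else v for k, v in r.items()}
        for r in records
    ]


def reidentify(records, pii_fields, tokenizer):
    """Restore the original PII values after processing comes back."""
    return [
        {k: tokenizer.detokenize(v) if k in pii_fields else v for k, v in r.items()}
        for r in records
    ]
```

Only the output of `anonymize` would leave the country in this sketch; the `Tokenizer` instance, its secret key, and its mapping stay with the in-country database.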

— Denis

PS – Customers should, of course, seek advice from professionals who are familiar with details of the country-specific legislation to ensure compliance with any applicable local laws, as this example architecture is shown here for illustrative purposes only!

Alert Logic Cloud Insight – Product Tour

I love to see all of the cool products and services that the Members of the AWS Partner Network (APN) build and bring to market. In the guest post below, my colleague Shawn Anderson takes you on a tour of Alert Logic’s new Cloud Insight product.

— Jeff;

In August, Alert Logic introduced Alert Logic Cloud Insight, which identifies vulnerabilities in operating systems and applications running on EC2 instances and configuration issues with AWS accounts and services. This product discovers and evaluates an AWS environment using data provided by EC2, Virtual Private Cloud, Auto-Scaling, Elastic Load Balancing, IAM, and RDS APIs. Currently, Alert Logic is offering a 30-day free trial of Cloud Insight.

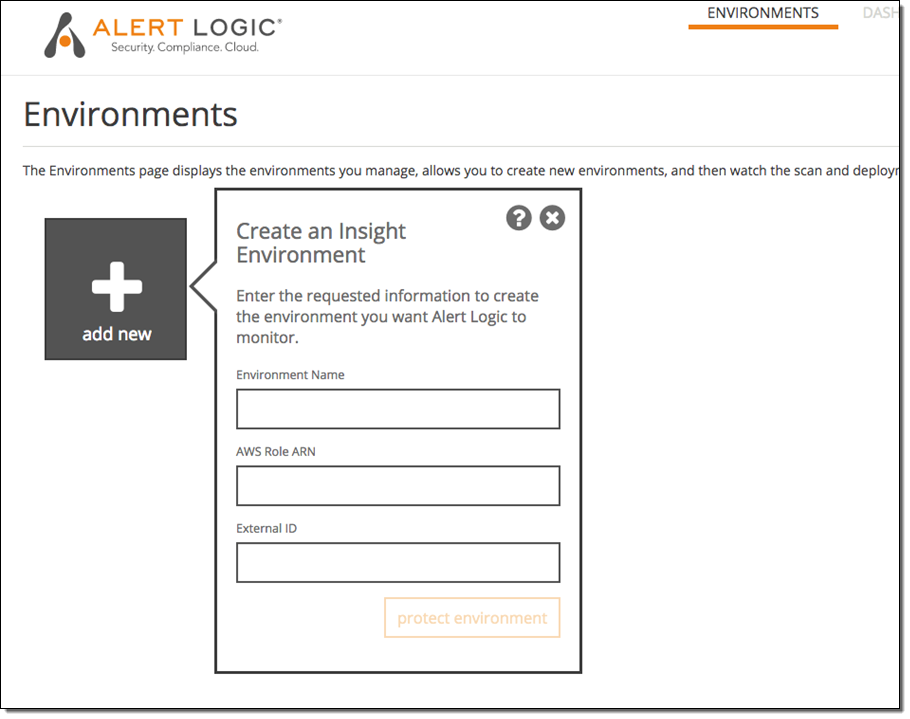

To begin using Cloud Insight, you first log in to the Cloud Insight web portal and give Cloud Insight access to your AWS environment via an IAM role. There are step-by-step instructions provided in the product describing how you set up this access:

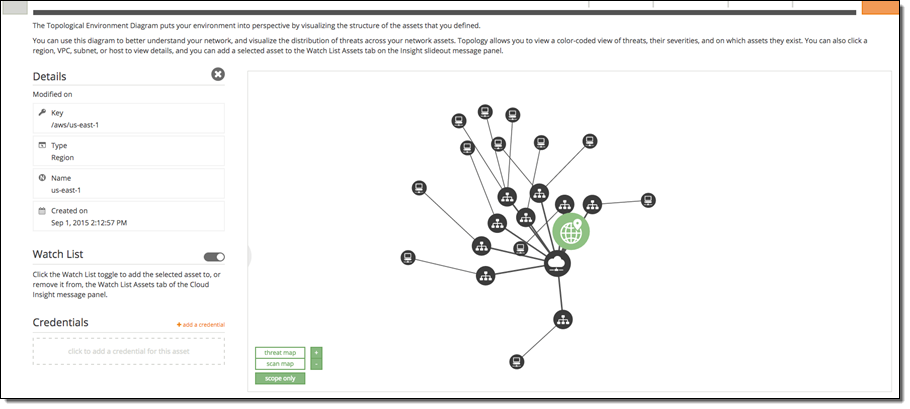

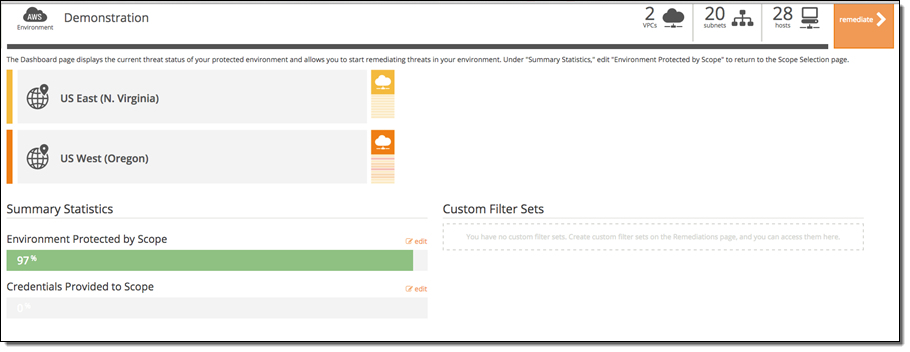

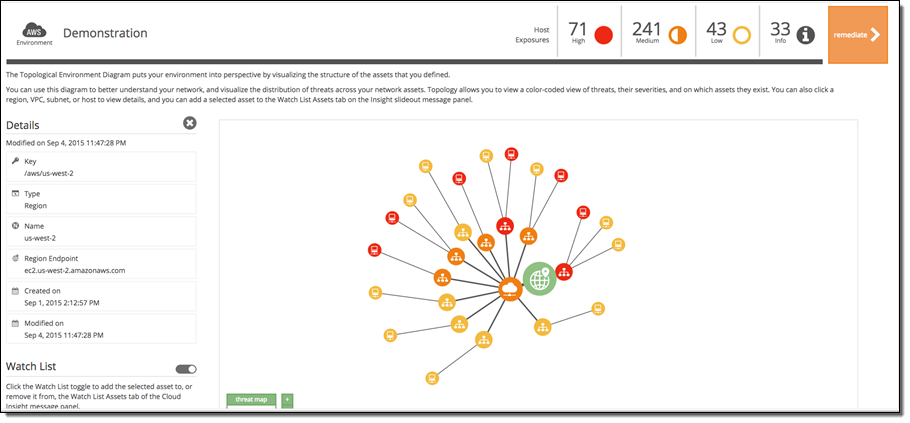
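For readers who have not set up cross-account access before, the following Python sketch builds the kind of IAM trust policy that typically sits behind such a role. The account ID and external ID are placeholders, and the function name is hypothetical; the product's own instructions are the authority on the exact values and permissions to use.

```python
import json


def build_trust_policy(trusted_account_id: str, external_id: str) -> str:
    """Return an IAM trust policy document allowing another account to assume a role."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The account that will assume the role (placeholder value).
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
                # An external ID guards against the role being assumed on
                # behalf of a different customer of the same third party.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(build_trust_policy("111122223333", "example-external-id"))
```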

Cloud Insight will automatically discover all of the hosts and services associated with your AWS environment. Cloud Insight then automatically creates a dedicated security subnet in your VPC and launches a virtual Alert Logic appliance in the subnet. Within a few minutes you will see the results of the discovery process in the topology view:

The topology view shows the relationship between your AWS assets. The relationships (lines between assets) are updated dynamically as your AWS environment changes. To complete your setup, you select the assets you want to be part of Cloud Insight’s continuous assessments. You can choose to protect an entire region, VPC, or subnet. You can make adjustments to this scope at any time.

Once you finish this step, Cloud Insight is up and running. It will continuously scan your assets and audit your environment configuration, and identify vulnerabilities and configuration issues it encounters. In the topology view you can see where the issues were discovered, color-coded for severity:

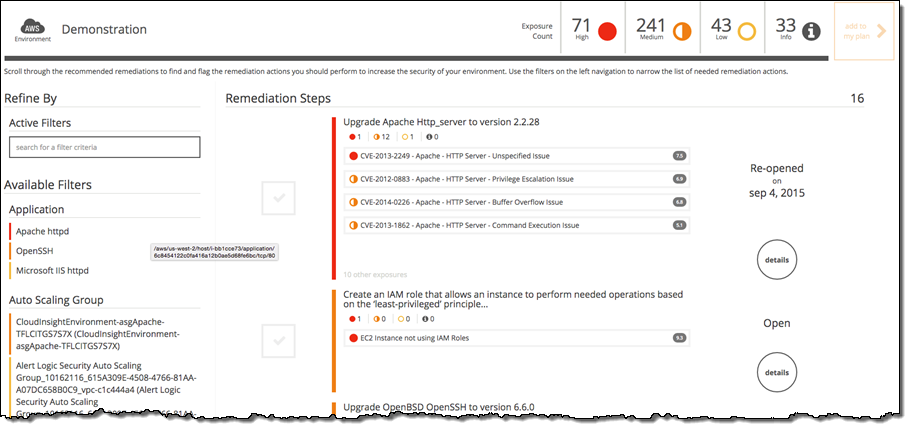

By accessing the Remediation page, you can see a list of prioritized remediation actions that will address the identified vulnerabilities and configuration issues. The prioritization of actions is based on contextual analysis using a proprietary methodology:

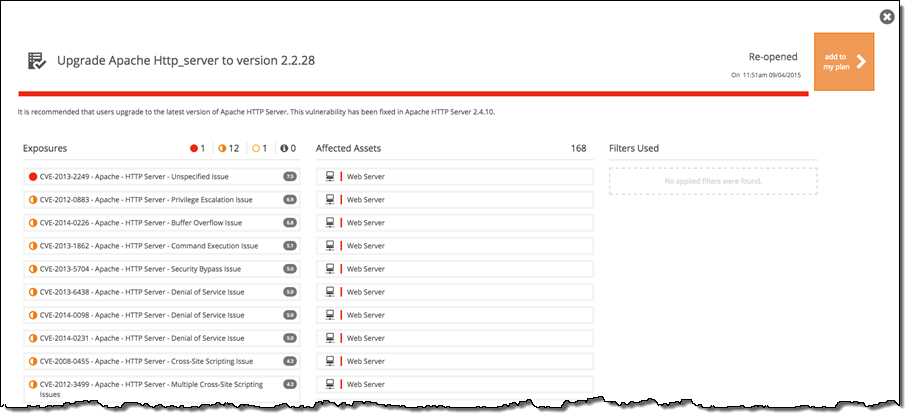

By taking these steps you can see that, for example, an upgrade to one Apache HTTP_server image addresses several vulnerabilities discovered in the environment:

When a remediation action is completed, mark it complete and Cloud Insight will rescan the impacted hosts to verify that the vulnerability has been eliminated.

Cloud Insight is well suited for a security analyst who wants to identify critical exposures in their environment. Additionally, Cloud Insight is accessible via APIs, meaning that you could incorporate it into a continuous deployment program. For more information on Cloud Insight, you can visit Alert Logic’s website, where you can access a few short videos and product documentation, and request your free trial.

— Shawn Anderson, Global Ecosystem Alliance Lead, AWS Partner Network

PCI Compliance for Amazon CloudFront

The scale of AWS makes it possible for us to take on projects that could be too large, too complex, too expensive, or too time-consuming for our customers. This is often the case for issues in security, particularly in the world of compliance. Establishing and documenting the proper controls, preparing the necessary documentation, and then seeking the original certification and periodic re-certifications take money and time, and require people with expertise and experience in some very specific areas.

From the beginning, we have worked to demonstrate that AWS is compliant with a very wide variety of national and international standards including HIPAA, PCI DSS Level 1, ISO 9001, ISO 27001, SOC (1, 2, and 3), FedRAMP, DoD CSM (to name a few).

In most cases we demonstrate compliance for individual services. As we expand our service repertoire, we likewise expand the work needed to attain and maintain compliance.

PCI Compliance for CloudFront

Today I am happy to announce that we have attained PCI DSS Level 1 compliance for Amazon CloudFront. As you may already know, PCI DSS is a requirement for any business that stores, processes, or transmits credit card data.

Our customers that use AWS to implement and host retail, e-commerce, travel booking, and ticket sales applications, can take advantage of this, as can those that provide apps with in-app purchasing features. If you need to distribute static or dynamic content to your customers while maintaining compliance with PCI DSS as part of such an application, you can now use CloudFront as part of your architecture.

Other Security Features

In addition to PCI DSS Level 1 compliance, a number of other CloudFront features should be of value to you as part of your security model. Here are some of the most recent features:

HTTP to HTTPS Redirect – You can use this feature to enforce an HTTPS-only discipline for access to your content. You can restrict individual CloudFront distributions to serve only HTTPS content, or you can configure them to return a 301 redirect when a request is made for HTTP content.

Signed URLs and Cookies – You can create a specially formatted “signed” URL that includes a policy statement. The policy statement contains restrictions on the signed URL, such as a time interval which specifies a date and time range when the URL is valid, and/or a list of IP addresses that are allowed to access the content.
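To make the policy statement more concrete, here is a Python sketch that assembles a custom policy with a validity window and an optional IP range. The JSON layout follows the CloudFront custom policy format as I understand it; the function name and the example URL are made up, and the signing step (which requires your CloudFront private key) is deliberately left out.

```python
import json
from datetime import datetime, timezone


def build_custom_policy(resource_url, valid_from, valid_until, source_ip_cidr=None):
    """Return a compact JSON policy restricting when and from where a URL is valid."""
    condition = {
        "DateLessThan": {"AWS:EpochTime": int(valid_until.timestamp())},
        "DateGreaterThan": {"AWS:EpochTime": int(valid_from.timestamp())},
    }
    if source_ip_cidr is not None:
        condition["IpAddress"] = {"AWS:SourceIp": source_ip_cidr}
    policy = {"Statement": [{"Resource": resource_url, "Condition": condition}]}
    # Serialize without extra whitespace, since the policy text is what gets signed.
    return json.dumps(policy, separators=(",", ":"))


policy_json = build_custom_policy(
    "https://d111111abcdef8.cloudfront.net/image.jpg",  # placeholder URL
    datetime(2015, 1, 1, tzinfo=timezone.utc),
    datetime(2015, 1, 2, tzinfo=timezone.utc),
    source_ip_cidr="192.0.2.0/24",
)
```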

Advanced SSL Ciphers – CloudFront supports the latest SSL ciphers and allows you to specify the minimum acceptable protocol version.

OCSP Stapling – This feature speeds up access to CloudFront content by allowing CloudFront to validate the associated SSL certificate in a more efficient way. The effect is most pronounced when CloudFront receives many requests for HTTPS objects that are in the same domain.

Perfect Forward Secrecy – This feature creates a new private key for each SSL session. In the event that a key was discovered, it could not be used to decode past or future sessions.

Other Newly Compliant Services

Along with CloudFront, AWS CloudFormation, AWS Elastic Beanstalk, and AWS Key Management Service (KMS) have also attained PCI DSS Level 1 compliance. This brings the total number of PCI compliant AWS services to 23.

Until now, you needed to manage your own encryption and key management in order to be compliant with sections 3.5 and 3.6 of PCI DSS. Now that AWS Key Management Service (KMS) is included in our PCI reports, you can comply with those sections of the DSS using simple console or template-based configurations that take advantage of keys managed by KMS. This will save you from doing a lot of heavy lifting and will make it even easier for you to build applications that manage customer card data in AWS.

Use it Now

There is no additional charge to use CloudFront as part of a PCI compliant application. You can try CloudFront at no charge as part of the AWS Free Tier; large-scale, long-term applications (10 TB or more of data from a single AWS region) can often benefit from CloudFront’s Reserved Capacity pricing.

— Jeff;

Data Encryption Made Easier – New Encryption Options for Amazon RDS

Encryption of stored data (often referred to as “data at rest”) is an important part of any data protection plan. Today we are making it easier for you to encrypt data at rest in Amazon Relational Database Service (RDS) database instances running MySQL, PostgreSQL, and Oracle Database.

Before today’s release you had the following options for encryption of data at rest:

- RDS for Oracle Database – AWS-managed keys for Oracle Enterprise Edition (EE).

- RDS for SQL Server – AWS-managed keys for SQL Server Enterprise Edition (EE).

In addition to these options, we are adding the following options to your repertoire:

- RDS for MySQL – Customer-managed keys using AWS Key Management Service (KMS).

- RDS for PostgreSQL – Customer-managed keys using AWS Key Management Service (KMS).

- RDS for Oracle Database – Customer-managed keys for Oracle Enterprise Edition using AWS CloudHSM.

For all of the database engines and key management options listed above, encryption (AES-256) and decryption are applied automatically and transparently to RDS storage and to database snapshots. You don’t need to make any changes to your code or to your operating model in order to benefit from this important data protection feature.

Let’s take a closer look at all three of these options!

Customer-Managed Keys for MySQL and PostgreSQL

We launched the AWS Key Management Service last year at AWS re:Invent. As I noted at the time, KMS provides you with seamless, centralized control over your encryption keys. It was designed to help you to implement key management at enterprise scale, with the ability to create and rotate keys, establish usage policies, and perform audits on key usage (visit the AWS Key Management Service (KMS) home page for more information).

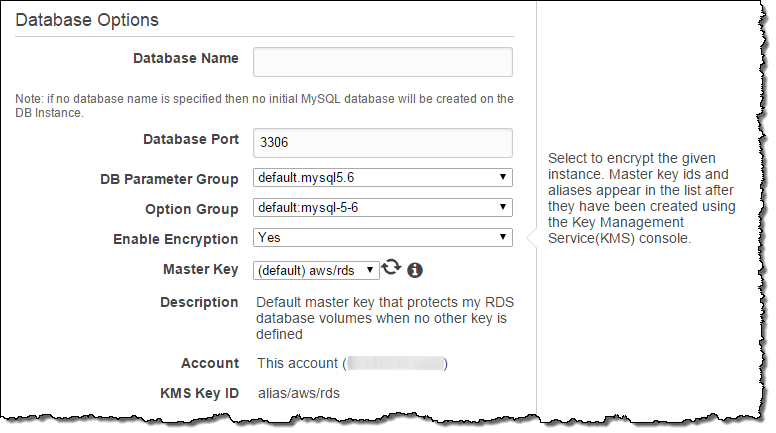

You can enable this feature and start to use customer-managed keys for your RDS database instances running MySQL or PostgreSQL with a couple of clicks when you create a new database instance. Turn on Enable Encryption and choose the default (AWS-managed) key or create your own using KMS and select it from the dropdown menu:

That’s all it takes to start using customer-managed encryption for your MySQL or PostgreSQL database instances. To learn more, read the documentation on Encrypting RDS Resources.
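If you prefer to script instance creation rather than click through the console, the same choice can be expressed as API parameters. The Python sketch below only builds the parameter dictionary; the helper name, instance class, storage size, and credentials are placeholders, and the use of boto3 is my assumption rather than something covered in this post.

```python
def encrypted_instance_params(identifier, engine, kms_key_id=None):
    """Build keyword arguments for creating an encrypted RDS instance."""
    if engine not in {"mysql", "postgres"}:
        raise ValueError("this sketch only covers MySQL and PostgreSQL")
    params = {
        "DBInstanceIdentifier": identifier,
        "Engine": engine,
        "DBInstanceClass": "db.m3.medium",   # placeholder instance class
        "AllocatedStorage": 20,              # placeholder size in GB
        "MasterUsername": "admin_user",      # placeholder
        "MasterUserPassword": "change-me",   # placeholder, never hardcode real secrets
        "StorageEncrypted": True,            # corresponds to Enable Encryption
    }
    if kms_key_id is not None:
        # Omitting KmsKeyId falls back to the default (AWS-managed) key.
        params["KmsKeyId"] = kms_key_id
    return params


params = encrypted_instance_params("orders-db", "mysql", "alias/my-rds-key")
```

With boto3 installed and credentials configured, these parameters could be passed along as `boto3.client("rds").create_db_instance(**params)`.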

Customer-Managed Keys for Oracle Database

AWS CloudHSM is a service that helps you to meet stringent compliance requirements for cryptographic operations and storage of encryption keys by using single tenant Hardware Security Module (HSM) appliances within the AWS cloud.

CloudHSM is now integrated with Amazon RDS for Oracle Database. This allows you to maintain sole and exclusive control of the encryption keys in CloudHSM instances when encrypting RDS database instances using Oracle Transparent Data Encryption (TDE).

You can use the new CloudHSM CLI tools to configure groups of HSM appliances in order to ensure that RDS and other applications that use CloudHSM keep running as long as one HSM in the group is available. For example, the CLI tools allow you to clone keys from one HSM to another.

To learn how to use Oracle TDE in conjunction with a CloudHSM, please read our new guide to Using AWS CloudHSM with Amazon RDS.

Available Now

These features are available now and you can start using them today!

— Jeff;

AWS Certification Update – ISO 9001 and More

Today I would like to give you a quick update on the latest AWS certification and to bring you up to date on some of the existing ones.

Back in the early days of AWS and cloud computing, questions about security and compliance were fairly commonplace. These days, generally accepted wisdom seems to hold that the cloud can be even more secure than typical on-premises infrastructure. Security begins at design time and proceeds through implementation, operation, and certification & accreditation. Economies of scale and experience come into play at each step and give the cloud provider an advantage over the lone enterprise.

ISO 9001 Certification

I am happy to announce that AWS has achieved ISO 9001 certification!

This certification allows AWS customers to run their quality-controlled IT workloads in the AWS cloud. It signifies that AWS has undergone a systematic, independent examination of our quality system. This quality system was found to have been implemented effectively and has been awarded an ISO 9001 certification as a result.

We believe that this certification will be of special interest and value to AWS customers in the life sciences and health care industries. Companies of this type (including drug manufacturers, laboratories, and those conducting clinical trials) have an FDA-mandated obligation to operate a Quality Management Program.

ISO 9001:2008 is a globally-recognized standard for managing the quality of products and services. The 9001 standard outlines a quality management system based on eight principles defined by the ISO Technical Committee for quality management and quality assurance. They include:

- Customer focus

- Leadership

- Involvement of people

- Process approach

- Systematic approach to management

- Continual Improvement

- Factual approach to decision-making

- Mutually beneficial supplier relationships

The key to the ongoing certification under this standard is establishing, maintaining and improving the organizational structure, responsibilities, procedures, processes, and resources for ensuring that the characteristics of AWS products and services consistently satisfy ISO 9001 quality requirements.

The certification also allows AWS customers to demonstrate to their customers and auditors that they are using a cloud service provider that has a robust, independently accredited quality management system. The certification covers the following nine AWS Regions: US East (Northern Virginia), US West (Oregon), US West (Northern California), AWS GovCloud (US), EU (Ireland), South America (São Paulo), Asia Pacific (Singapore), Asia Pacific (Sydney), and Asia Pacific (Tokyo). The following services are in scope for these Regions:

- AWS CloudFormation

- AWS CloudHSM

- AWS CloudTrail

- AWS Direct Connect

- Amazon DynamoDB

- AWS Elastic Beanstalk

- Amazon Elastic Block Store (EBS)

- Amazon Elastic Compute Cloud (EC2)

- Elastic Load Balancing

- Amazon EMR

- Amazon ElastiCache

- Amazon Glacier

- AWS Identity and Access Management (IAM)

- Amazon Redshift

- Amazon Relational Database Service (RDS)

- Amazon Route 53

- Amazon Simple Storage Service (S3)

- Amazon SimpleDB

- Amazon Simple Workflow Service (SWF)

- AWS Storage Gateway

- Amazon Virtual Private Cloud

- VM Import / VM Export

AWS was certified by EY CertifyPoint, an ISO certifying agent. There is no increase in service costs for any Region as a result of this certification. You can download a copy of the AWS certification and use it to jump-start your own certification efforts (you are not automatically certified by association; however, using an ISO 9001 certified provider like AWS can make your certification process easier). You may also want to read the AWS ISO 9001 FAQ.

Other Compliance News & Resources

We are also pleased to announce three additional achievements in the cloud compliance world:

- AWS is now listed as an Official Tier 3 Cloud Service Provider by the Multi-Tiered Cloud Security Standard Certification for Singapore.

- With Version 2.0 of PCI DSS set to expire at the end of 2014, AWS customers and potential customers should know that we achieved and were fully audited against the PCI DSS 3.0 standard a full 13 months early! This validation helps AWS customers obtain their own PCI certification in a timely manner.

- AWS has completed an independent assessment that has determined all applicable Australian Government Information Security Management controls are in place relating to the processing, storage and transmission of Unclassified (DLM) for the Asia Pacific (Sydney) Region.

As is always the case, the AWS Compliance Center contains the most current information about our certifications and accreditations. If you are interested in following along, subscribe to the AWS Security Blog.

— Jeff;

Multi-Factor Authentication for Amazon WorkSpaces

Amazon WorkSpaces is a fully managed desktop computing service in the cloud. You can easily provision and manage cloud-based desktops that can be accessed from laptops, iPads, Kindle Fire, and Android tablets.

Today we are enhancing WorkSpaces with support for multi-factor authentication using an on-premises RADIUS server. In plain English, your WorkSpaces users will now be able to authenticate themselves using the same mechanism that they already use for other forms of remote access to your organization’s resources.

Once this new feature has been enabled and configured, WorkSpaces users will log in by entering their Active Directory user name and password followed by an OTP (One-Time Passcode) supplied by a hardware or a software token.
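The post does not say which algorithm a given token uses, so treat the following as an illustration only: a small Python sketch of the widely used HOTP (RFC 4226) and TOTP (RFC 6238) schemes, which is how many software tokens derive a passcode from a shared secret and the current time.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time passcode (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time passcode (RFC 6238): HOTP over a time-step counter."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)
```

The token and the authentication server each compute the same value independently, so the passcode itself never needs to be stored; in the WorkSpaces setup that check is performed by your RADIUS server, not by WorkSpaces.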

Important Details

This feature should work with any security provider that supports RADIUS authentication (we have verified our implementation against the Symantec VIP and Microsoft Radius Server products). We currently support the PAP, CHAP, MS-CHAP1, and MS-CHAP2 protocols, along with RADIUS proxies.

As a WorkSpaces administrator, you can configure this feature for your users by entering the connection information (IP addresses, shared secret, protocol, timeout, and retry count) for your RADIUS server fleet in the Directories section of the WorkSpaces console. You can provision multiple RADIUS servers to increase availability if you’d like. In this case you can enter the IP addresses of all of the servers or you can enter the same information for a load balancer in front of the fleet.

On the Roadmap

As is the case with every part of AWS, we plan to enhance this feature over time. Although I’ll stick to our usual policy of not spilling any beans before their time, I can say that we expect to add support for additional authentication options such as smart cards and certificates. We are always interested in your feature requests; please feel free to post a note to the Amazon WorkSpaces Forum to make sure that we see them. You can also consult the Amazon WorkSpaces documentation for more information about Amazon WorkSpaces and this new feature.

Price & Availability

This feature is available now at no extra charge to Amazon WorkSpaces and you can start using it today.

— Jeff;

PS – Last month we made a couple of enhancements to WorkSpaces that will improve integration with your on-premises Active Directory. You can now search for and select the desired Organizational Unit (OU) from your Active Directory. You can now use separate domains for your users and your resources; this improves both security and manageability. You can also add a security group that is effective within the VPC associated with your WorkSpaces desktops; this allows you to control network access from WorkSpaces to other resources in your VPC and on-premises network. To learn more, read this forum post.

Use Your own Encryption Keys with S3’s Server-Side Encryption

Amazon S3 stores trillions of objects and processes more than a million requests per second for them.

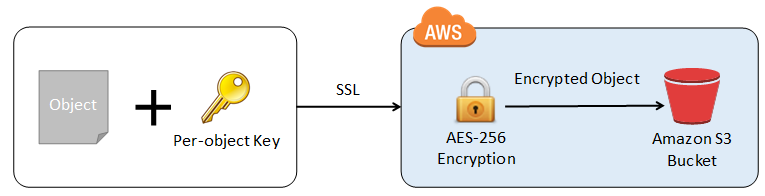

As the number of use cases for S3 has grown, so have the requests for additional ways to protect data in motion (as it travels to and from S3) and at rest (while it is stored). The first requirement is met by the use of SSL, which has been supported by S3 from the very beginning. There are several options for the protection of data at rest. First, users of the AWS SDKs for Ruby and Java can also use client-side encryption to encrypt data before it leaves the client environment. Second, any S3 user can opt to use server-side encryption.

Today we are enhancing S3’s support for server-side encryption by giving you the option to provide your own keys. You now have a choice — you can use the existing server-side encryption model and let AWS manage your keys, or you can manage your own keys and benefit from all of the other advantages offered by server-side encryption.

You now have the option to store data in S3 using keys that you manage, without having to build, maintain, and scale your own client-side encryption fleet, as many of our customers have done in the past.

Use Your Keys

This new feature is accessible via the S3 APIs and is very easy to use. You simply supply your encryption key as part of a PUT and S3 will take care of the rest. It will use your key to apply AES-256 encryption to your data, compute a one-way hash (checksum) of the key, and then expeditiously remove the key from memory. It will return the checksum as part of the response, and will also store the checksum with the object. Here’s the flow:

Later, when you need the object, you simply supply the same key as part of a GET. S3 will decrypt the object (after verifying that the stored checksum matches that of the supplied key) and return the decrypted object, once again taking care to expeditiously remove the key from memory.
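At the REST level, the key travels with each request as a set of headers. Here is a Python sketch that prepares them; the header names reflect the S3 documentation as I understand it, the helper name is hypothetical, and you should confirm the details against the current API reference before relying on them.

```python
import base64
import hashlib
import secrets


def sse_c_headers(key: bytes) -> dict:
    """Build the request headers that carry a customer-provided key to S3."""
    if len(key) != 32:
        raise ValueError("AES-256 requires a 32-byte key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        # The key itself, base64-encoded; send it only over HTTPS.
        "x-amz-server-side-encryption-customer-key":
            base64.b64encode(key).decode("ascii"),
        # A digest of the key so that transmission errors can be detected.
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode("ascii"),
    }


key = secrets.token_bytes(32)   # keep this safe; S3 does not store it
headers = sse_c_headers(key)    # same headers on the PUT and on the later GET
```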

Key Management

In between, it is up to you to manage your encryption keys and to make sure that you know which keys were used to encrypt each object. You can store your keys on-premises or you can use AWS CloudHSM, which uses dedicated hardware to help you to meet corporate, contractual and regulatory compliance requirements for data security.

If you enable S3’s versioning feature and store multiple versions of an object, you are responsible for tracking the relationship between objects, object versions, and keys so that you can supply the proper key when the time comes to decrypt a particular version of an object. Similarly, if you use S3’s Lifecycle rules to arrange for an eventual transition to Glacier, you must first restore the object to S3 and then retrieve the object using the key that was used to encrypt it.

If you need to change the key associated with an object, you can invoke S3’s COPY operation, passing in the old and the new keys as parameters. You’ll want to mirror this change within your key management system, of course!
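The bookkeeping described above can be as simple as a lookup table. The Python sketch below uses a hypothetical in-memory `KeyRegistry` to show the idea; a real system would persist this mapping in your key management system and store key identifiers rather than key material.

```python
class KeyRegistry:
    """Tracks which key ID encrypted each (bucket, object key, version) tuple."""

    def __init__(self) -> None:
        self._entries = {}

    def record(self, bucket, object_key, version_id, key_id) -> None:
        self._entries[(bucket, object_key, version_id)] = key_id

    def lookup(self, bucket, object_key, version_id):
        # Raises KeyError if the version was never recorded, which is a
        # signal that the object could not be decrypted.
        return self._entries[(bucket, object_key, version_id)]

    def rotate(self, bucket, object_key, version_id, new_key_id):
        """Mirror a COPY with a new key; returns the key ID that was replaced."""
        old_key_id = self.lookup(bucket, object_key, version_id)
        self.record(bucket, object_key, version_id, new_key_id)
        return old_key_id


registry = KeyRegistry()
registry.record("my-bucket", "report.pdf", "v1", "key-2014-06")
registry.record("my-bucket", "report.pdf", "v2", "key-2014-07")
```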

Ready to Encrypt

This feature is available now and you can start using it today. There is no extra charge for encryption, and there’s no observable effect on PUT or GET performance. To learn more, read the documentation on Server Side Encryption With Customer Keys.

— Jeff;

AWS May Webinars – Focus on Security

We have received a lot of great feedback on the partner webinars that we held in April. In conjunction with our partners, we will be holding two more webinars this month. The webinars are free, but space is limited and preregistration is advisable.

In May we are turning our focus to the all-important topic of security, and what it means in the cloud. When I first started talking about cloud computing, audiences would listen intently, and then ask “But what about security?” This question told me two things. First, it told me that the questioner saw some real potential in the cloud and might be able to use it on some mission-critical applications. Second, that it was very important that we share as much as possible about the security principles and practices within and around AWS. We built and maintain AWS Security Center and have published multiple editions of the Overview of Security Processes.

In our never-ending quest to keep you as fully informed as possible about this important topic, we have worked with two APN Technology Partners to bring you some new and exciting information.

May 20 – Log Collection and Analysis (Splunk and CloudTrail)

At 10:00 AM PT on May 20, AWS and Splunk will present the Stronger Security and Compliance on AWS with Log Collection and Analysis webinar. In the webinar you will learn how CloudTrail collects and stores your AWS log files so that software from Splunk can be used as a Big Data Security Information and Event Management (SIEM) system. You will hear how AWS log files are made available for many security use cases, including incident investigations, security and compliance reporting, and threat detection/alerting. FINRA (a joint Splunk/AWS customer) will explain how they leverage Splunk on AWS to support their cloud efforts.
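To give a feel for the kind of analysis involved, here is a tiny Python sketch that counts failed API calls per source IP address in a CloudTrail log file. It is a stand-in for what a SIEM does at far greater scale and is not taken from the webinar; the sample record is invented, and I am relying on CloudTrail's JSON layout of a top-level `Records` array whose entries carry an `errorCode` field when a call fails.

```python
import json
from collections import Counter


def failed_calls_by_source(log_text: str) -> Counter:
    """Count CloudTrail records that carry an errorCode, grouped by source IP."""
    records = json.loads(log_text).get("Records", [])
    counts = Counter()
    for record in records:
        if "errorCode" in record:
            counts[record.get("sourceIPAddress", "unknown")] += 1
    return counts


# Invented sample data for illustration only.
sample_log = json.dumps({
    "Records": [
        {"eventName": "ConsoleLogin", "sourceIPAddress": "198.51.100.7",
         "errorCode": "AccessDenied"},
        {"eventName": "DescribeInstances", "sourceIPAddress": "203.0.113.9"},
        {"eventName": "DeleteBucket", "sourceIPAddress": "198.51.100.7",
         "errorCode": "AccessDenied"},
    ]
})
print(failed_calls_by_source(sample_log).most_common(1))
```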

May 28 – Federated Single Sign-On (Ping Identity and IAM)

At 10:00 AM PT on May 28, AWS and Ping Identity will present the Get Closer to the Cloud with Federated Single Sign-On webinar.

In the webinar you will learn how the Ping Identity platform offers federated single sign-on (SSO) to quickly and securely manage authentication of partners and customers through seamless integration with the AWS Identity and Access Management service. You will also hear from Ping Identity partner and Amazon Web Services Customer, Geezeo. They will share best practices based on their experience.

Again, these webinars are free but I strongly suggest that you register ahead of time for best results!

— Jeff;

AWS FedRAMP ATO: Difficult to Achieve, Easily Misunderstood, Valuable to All AWS Customers

Compliance with FedRAMP is a complex process with a high bar for a provider's security practices. Because few providers have secured an Authority To Operate (ATO) under FedRAMP, and FedRAMP in general is very new, the topic often leaves many confused. So, we wanted to build upon our press release, security blog post, and AWS blog post to briefly clarify a few points.

FedRAMP is a U.S. government-wide program that provides a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services. With the award of this ATO, AWS has demonstrated it can meet the extensive FedRAMP security requirements and as a result, an even wider range of federal, state and local government customers can leverage AWS's secure environment to store, process, and protect a diverse array of sensitive government data. Leveraging the HHS authorization, all U.S. government agencies now have the information they need to evaluate AWS for their applications and workloads, provide their own authorizations to use AWS, and transition workloads into the AWS environment.

On May 21, 2013, AWS announced that AWS GovCloud (US) and all U.S. AWS Regions received an Agency Authority to Operate (ATO) from the U.S. Department of Health and Human Services (HHS) under the Federal Risk and Authorization Management Program (FedRAMP) requirements at the Moderate impact level. Two separate FedRAMP Agency ATOs have been issued; one encompassing the AWS GovCloud (US) Region, and the other covering the AWS US East/West Regions. These ATOs cover Amazon EC2, Amazon S3, Amazon VPC, and Amazon EBS. Beyond the services covered in the ATO, customers can evaluate their workloads for suitability with other AWS services. AWS plans to onboard other AWS services in the future. Interested customers can contact AWS Sales and Business Development for a detailed discussion of security controls and risk acceptance considerations.

The FedRAMP audit was one of the most in-depth and rigorous security audits in the history of AWS, and that includes the many previous rigorous audits that are outlined on the AWS Compliance page. The FedRAMP audit was a comprehensive, six-month assessment of 298 controls including:

- The architecture and operating processes of all services in scope.

- The security of human processes and administrative access to systems.

- The security and physical environmental controls of our AWS GovCloud (US), AWS US East (Northern Virginia), AWS US West (Northern California), and AWS US West (Oregon) Regions

- The underlying IAM and other security services.

- The security of networking infrastructure.

- The security posture of the hypervisor, kernel and base operating systems.

- Third-Party penetration testing.

- Extensive onsite auditor interviews with service teams.

- Nearly 1,500 individual evidence files.

The award of this FedRAMP Agency ATO enables agencies and federal contractors to immediately request access to the AWS Agency ATO packages by submitting a FedRAMP Package Access Request Form and begin to move through the authorization process to achieve an ATO using AWS. Additional information on FedRAMP, including the FedRAMP Concept of Operations (CONOPS) and Guide to Understanding FedRAMP, can be found at http://www.fedramp.gov.

It is important to note that while FedRAMP applies formally only to U.S. government agencies, the rigorous audit process and the resulting detailed documentation benefit all AWS customers. Many of our commercial and enterprise customers, as well as public sector customers outside the U.S., have expressed their excitement about this important new certification. All AWS customers will benefit from the FedRAMP process without any change to AWS prices or the way that they receive and utilize our services.

You can visit http://aws.amazon.com/compliance/ to learn more about AWS and FedRAMP or the multitude of other compliance evaluations of the AWS platform such as SOC 1, SOC 2, SOC 3, ISO 27001, FISMA, DIACAP, ITAR, FIPS 140-2, CSA, MPAA, PCI DSS Level 1, HIPAA and others.

— Jeff;

AWS achieves FedRAMP Compliance

AWS has achieved FedRAMP compliance, so federal agencies can now save significant time, costs and resources in their evaluation of AWS! After demonstrating adherence to hundreds of controls by providing thousands of artifacts as part of a security assessment, AWS has been certified by a FedRAMP-accredited third-party assessor (3PAO) and has achieved agency ATOs (Authority to Operate) demonstrating that AWS complies with the stringent FedRAMP requirements.

Numerous U.S. government agencies, systems integrators and other companies that provide products and services to the U.S. government are using AWS services today. Now all U.S. government agencies can save significant time, costs and resources by leveraging the AWS Department of Health and Human Services (HHS) ATO packages in the FedRAMP repository to evaluate AWS for their applications and workloads, provide their own authorizations to use AWS, and transition workloads into the AWS environment. Agencies and federal contractors can immediately request access to the AWS FedRAMP package by submitting a FedRAMP Package Access Request Form and begin moving through the authorization process to achieve an ATO with AWS.

What is FedRAMP? Check out the answer to this and other frequently asked questions on the AWS FedRAMP FAQ site.

— Jeff;